
Force Control Algorithms for Sclera Eye Surgery

Last updated: May 9, 2017 12:56:01 pm

Summary

The aim of the project is to develop control algorithms that outperform the existing control algorithms of the Johns Hopkins Eye Robot 2.1 (ER2.1) in smoothness of motion, force sensing, intraocular dexterity, natural motion guidance, remote center of motion (RCM) tool guidance, and tool coordination. Currently, the robot uses hands-on cooperative control. Admittance control, in both constant and variable forms, has been implemented and tested on the robot. The transition between the lower and upper bounds is currently modeled as a straight line. Nonlinear transition functions still need to be studied, because the transition is not expected to be linear under human operating conditions.
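
The constant and variable admittance forms can be illustrated with a short Python sketch. It assumes the classic steady-hand admittance law, in which the commanded tool velocity is the handle force scaled by a gain, and a variable form in which that gain drops along a straight line between a lower and an upper sclera-force bound. All names, gains, thresholds, and units are hypothetical placeholders, not values from the ER2.1 software.

```python
# Hypothetical sketch: constant vs. variable admittance for hands-on
# cooperative control. Gains, thresholds, and units are placeholders,
# not values taken from the ER2.1 code base.
import math

ALPHA_MAX = 1.0   # admittance gain (mm/s per N) while sclera force is low
ALPHA_MIN = 0.2   # admittance gain once sclera force reaches the upper bound
F_LOW = 50.0      # lower sclera-force bound (mN) where the gain starts to drop
F_HIGH = 120.0    # upper sclera-force bound (mN) where the gain saturates


def norm(v):
    """Euclidean length of a 3-vector."""
    return math.sqrt(sum(c * c for c in v))


def constant_admittance(f_handle, alpha=ALPHA_MAX):
    """Commanded velocity is a fixed multiple of the handle force."""
    return [alpha * f for f in f_handle]


def variable_gain(f_sclera_mag):
    """Straight-line transition of the gain between F_LOW and F_HIGH."""
    if f_sclera_mag <= F_LOW:
        return ALPHA_MAX
    if f_sclera_mag >= F_HIGH:
        return ALPHA_MIN
    t = (f_sclera_mag - F_LOW) / (F_HIGH - F_LOW)  # position in the band, 0..1
    return ALPHA_MAX + t * (ALPHA_MIN - ALPHA_MAX)


def variable_admittance(f_handle, f_sclera):
    """Same law, but the gain shrinks as the sclera force magnitude grows."""
    alpha = variable_gain(norm(f_sclera))
    return [alpha * f for f in f_handle]
```

For example, a sclera force of 85 mN sits halfway through the placeholder band, so `variable_admittance([1.0, 0.0, 0.0], [0.0, 85.0, 0.0])` uses a gain halfway between ALPHA_MAX and ALPHA_MIN (0.6 here).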

- Students: Ankur Gupta, Saurabh Singh

- Mentor(s): Dr. Marin Kobilarov, Dr. Iulian Iordachita and Dr. Russell H. Taylor

Background, Specific Aims, and Significance

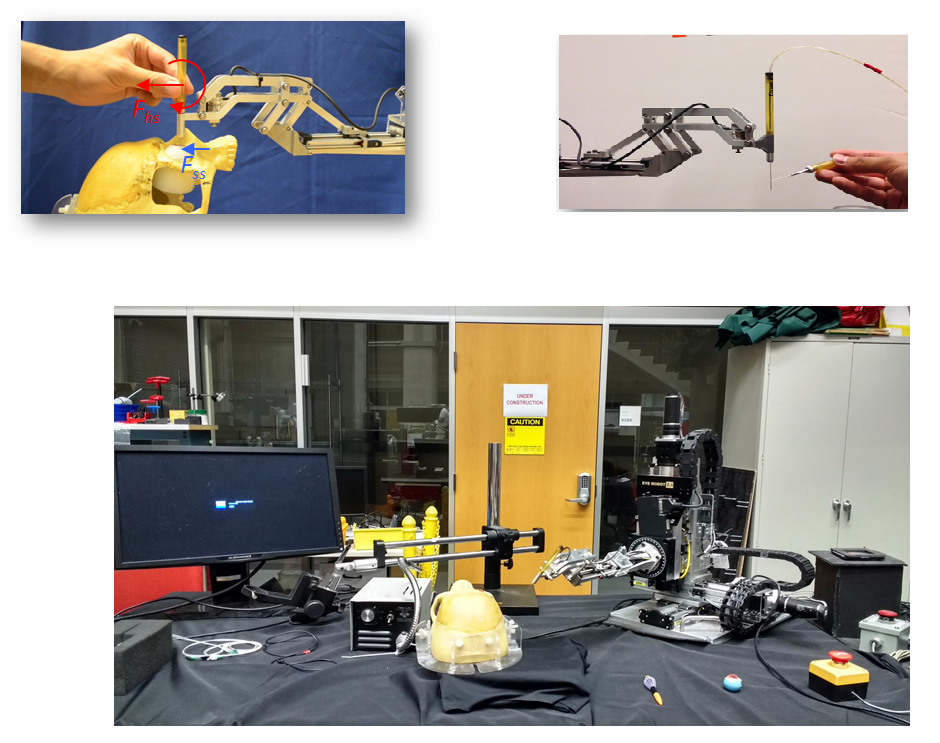

Microsurgery on the retina and sclera poses many challenges. They can be grouped in terms of motion (the surgeon's hand tremor), force (tool-tissue forces too small for the surgeon to feel), feedback (no haptic feedback is available), and surgical skill (these procedures are hard to perform and require intense practice and dexterity). Robots provide precise and accurate motion, which helps when operating on delicate eye tissue. To address these issues, Johns Hopkins University has been building and improving an eye robot for the last 15 years.

Designing a control algorithm for the eye robot poses several problems:

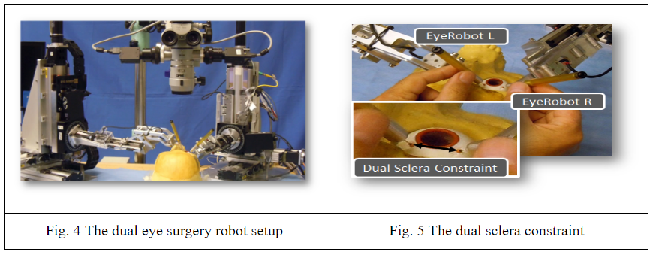

1) The RCM is not fixed in vitreoretinal surgery and can move by up to 12 mm.

2) In various situations the eye robot blocks the surgeon's view, making it difficult to see the retina/sclera through the microscope.

3) To keep the eyeball fixed, surgeons use two sclerotomies. This requires a dual-robot setup in which the distance between the two incisions (sclerotomies) must be kept fixed. The eyeball-motion problem becomes worse when the surgeon cannot feel the forces exerted at the two sclerotomies.

In such scenarios, a surgical robot that assists the surgeon in interacting with patient tissue is very useful, for example by quantifying in real time the interaction forces during tissue manipulation at the tool tip and the contact between the tool shaft and the sclerotomy. In the current control methodology, these objectives are achieved with variable admittance control. Although the robot's effectiveness has been tested on rabbits, the interaction parameters, the force scaling parameters, and the switch from force scaling to variable admittance control follow a linear intermediate path. This path is expected to be nonlinear under human operating conditions.
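
To compare the linear intermediate path with nonlinear alternatives, the hypothetical sketch below computes a blending weight across a sclera-force band and uses it to interpolate between two controller outputs. The smoothstep and rescaled logistic curves are swapped-in examples of nonlinear profiles chosen only for illustration; which nonlinear function matches human operating conditions is exactly the open question, and the bounds are placeholders.

```python
# Hypothetical sketch: straight-line vs. nonlinear transition profiles.
# The weight w in [0, 1] moves from one control behavior (w = 0, e.g. force
# scaling) to the other (w = 1, e.g. variable admittance) as the sclera force
# crosses the band [F_LOW, F_HIGH]. Bounds are placeholder values.
import math

F_LOW, F_HIGH = 50.0, 120.0  # hypothetical sclera-force bounds (mN)


def _normalized(f):
    """Map a force to t in [0, 1] across the band, clamped at both ends."""
    t = (f - F_LOW) / (F_HIGH - F_LOW)
    return min(1.0, max(0.0, t))


def linear_weight(f):
    """Current assumption: a straight line between the bounds."""
    return _normalized(f)


def smoothstep_weight(f):
    """Cubic 3t^2 - 2t^3: zero slope at both bounds, so no kink."""
    t = _normalized(f)
    return t * t * (3.0 - 2.0 * t)


def logistic_weight(f, steepness=10.0):
    """Logistic curve rescaled so it passes exactly through 0 and 1."""

    def s(x):
        return 1.0 / (1.0 + math.exp(-steepness * (x - 0.5)))

    t = _normalized(f)
    return (s(t) - s(0.0)) / (s(1.0) - s(0.0))


def blend(w, output_a, output_b):
    """Interpolate between two controller outputs with weight w."""
    return (1.0 - w) * output_a + w * output_b
```

Unlike the straight line, both nonlinear profiles change slowly near the bounds, so the blended output does not change abruptly when the force enters or leaves the band. Whether that matches surgeon behavior is what the planned data collection would have to show.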

Deliverables

- Minimum: (Expected by April 30)

- Software and Algorithm bridge source code

- Ported backbone code to C++, if required

- Procured and Calibrated Fiber-Optic Force sensing tool

- Replaced the fiber-optic tool with a low-cost workable tool, if the FO tool could not be procured

- Identified and documented source code that pertains to Sclera force sensing, drivers, APIs, and backbone algorithm

- Restored the full setup to a working state, with all tools and sensors attached

- Eye socket phantom

- Eye Phantom

- Camera Mount

- Video Streaming pipeline codebase

- Expected: (Expected by May 5)

- Analysis of force feedback curve with multiple and unique coefficients

- Introduction of non-linear behavior to force feedback

- Proof of concept with ‘untrained’ hands on an eye phantom mounted in the eye socket, with video capture, showing that the forces follow the desired profile throughout the process.

- Maximum: (Expected by May 15)

- Data collection from ‘experts’ or doctors to validate our model against ground-truth force feedback.

- A written research paper describing the modified approach.

Technical Approach

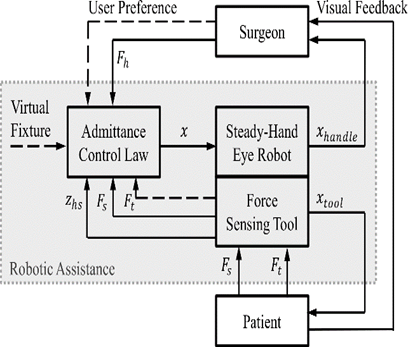

Image 3: The control loop of ER2.1. [1]

Set up the system to determine the position of the sclera, and verify that this position is accurate to a chosen precision. This is important because the tool's sense of depth depends heavily on this measurement. If it is not accurate, the tool may need to undergo a pseudo-pivot calibration to align the tool frame with the desired orientation.
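
The pseudo-pivot calibration is not spelled out here, so as an assumption the sketch below uses standard least-squares pivot calibration. With the tool tip resting on a fixed point, every pose (R_i, p_i) reported by the robot should satisfy R_i t + p_i = b, where t is the unknown tip offset in the end-effector frame and b is the unknown pivot point in the robot frame. The RMS residual gives an L2-norm check of how well the frames agree. The function names are hypothetical, and the solver is written in plain Python only to keep the sketch dependency-free.

```python
# Hypothetical sketch of least-squares pivot calibration (plain Python).
# Each pose satisfies R_i t + p_i = b, i.e. [R_i | -I] [t; b] = -p_i.
import math


def solve_linear(a, y):
    """Solve the square system a x = y by Gaussian elimination with pivoting."""
    n = len(a)
    m = [row[:] + [y[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("poses do not constrain the solution")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def pivot_calibration(rotations, positions):
    """Return (tip_offset, pivot_point, rms_residual) from robot poses."""
    rows, rhs = [], []
    for rot, pos in zip(rotations, positions):
        for k in range(3):
            neg_identity_row = [-1.0 if j == k else 0.0 for j in range(3)]
            rows.append(list(rot[k]) + neg_identity_row)
            rhs.append(-pos[k])
    # Normal equations (A^T A) x = A^T b for the 6 unknowns [t; b].
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(6)] for i in range(6)]
    atb = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(6)]
    x = solve_linear(ata, atb)
    residuals = [sum(r[j] * x[j] for j in range(6)) - v for r, v in zip(rows, rhs)]
    rms = math.sqrt(sum(e * e for e in residuals) / len(residuals))
    return x[:3], x[3:], rms
```

The poses must include rotations about at least two different axes; if they all share one rotation axis, the system is rank deficient and the sketch raises an error.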

Next, we collect data from the handle ATI force/torque sensor, the FBG readings embedded in the tool, and the sclera depth, along with the end-effector tip position. The data is collected first from non-experts such as lab members and then from experts such as surgeons, so that we can fit a natural curve to these readings and make the Eye Surgery Robot more usable and intuitive for surgeons.
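
As a hedged illustration of fitting a natural curve to the readings, the sketch below fits two candidate models of sclera force versus depth, a straight line and a power law, and reports each model's RMS error on the same samples. The choice of models, the requirement that depth and force be positive, and all function names are assumptions for illustration; no collected data is reproduced here.

```python
# Hypothetical sketch: compare candidate force-vs-depth curves on logged
# samples. Assumes time-aligned, strictly positive depth and force values.
import math


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def fit_power_law(depths, forces):
    """Fit f = a * d**n with a straight-line fit in log-log space.

    Note: this minimizes error in log space (relative error), not force space.
    """
    n, log_a = fit_line([math.log(d) for d in depths],
                        [math.log(f) for f in forces])
    return math.exp(log_a), n


def rms_error(model, depths, forces):
    """Root-mean-square difference between model and measured forces."""
    sq = sum((model(d) - f) ** 2 for d, f in zip(depths, forces))
    return math.sqrt(sq / len(depths))


def compare_models(depths, forces):
    """Return the RMS error of each candidate model."""
    k, c = fit_line(depths, forces)
    a, n = fit_power_law(depths, forces)
    return {
        "linear": rms_error(lambda d: k * d + c, depths, forces),
        "power_law": rms_error(lambda d: a * d ** n, depths, forces),
    }
```

Comparing both novice and expert data with the same set of candidate models keeps the comparison between the two groups consistent.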

Dependencies

- A formal understanding of the project and a meeting time with the mentors to discuss it.

- Time with Xingchi He (graduated) to discuss the existing code and to get guidance on the procedure.

- The surgery tool from Dr. Iordachita (needs to be located).

- Microscope.

- Time on the FBG machine (currently three projects are using it).

- Development/sharing of the phantom, and sharing of the tissues/membranes for eye surgery.

- Time with doctors to collect data and validate the results obtained for the nonlinear function.

- Unavailability of a license to use the eye robot for tests on living animals.

Milestones and Status

- Milestone name: System Setup

- Planned Date: April 8, 2017

- Expected Date: April 6, 2017

- Status: Done

- Completed Date: March 20, 2017

- Milestone name: Collect Data Force Sensing

- Planned Date: May 5, 2017

- Expected Date: April 20, 2017

- Status: Started

- Milestone name: Go Through and Understand Code

- Planned Date: March 14, 2017

- Expected Date: March 22, 2017

- Status: Completed

- Minimum Deliverables:

- Planned Date: April 30, 2017

- Expected Date: April 30, 2017

- Status: Completed on April 23, 2017

- Expected Deliverables:

- Planned Date: May 5, 2017

- Status: Completed on May 9, 2017

Plan and Status

- Find a tool that behaves consistently for force and sclera measurement : Resolved (Dual Bone)

- Calibrate the tool axis to the robot axis : Partially Resolved (L2-norm)

- Calibrate the force output to get a transformation matrix between the actual applied force and the output of the Eye Robot software : Resolved

- Code a data collection pipeline : Done

- Make a new eye-socket phantom to reduce friction on the eye phantom : Done

- Find a camera/microscope for fixing the optical axis : Done (camera simulating a video microscope)

- Program a multi-threaded application for the video grabbing pipeline : Done

- Add an optical axis marker in the image acquisition pipeline : Done on some indices

- Prepare the camera mount : Done

- Set up a light source around the phantom so that the camera/microscope can see markings inside the eye clearly : Done (camera has an LED array)

- Collect force data at the sclera with varying depth : Done

- Analyze the behavior of the force vs. depth data : Done

- Set up an experiment that makes the process agnostic to the user : Done

- Collect the data from novices : Done

- Learn this behavior (possibly not statistically complex) : Done
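
The force calibration item (a transformation matrix between the actual applied force and the Eye Robot software output) can be sketched as an ordinary least-squares problem. The sketch assumes paired samples of software-reported and reference force vectors and a purely linear 3x3 model without an offset term; the function names are hypothetical.

```python
# Hypothetical sketch: fit M so that actual ≈ M @ reported, row by row.
# For row k of M, least squares gives G m_k = sum_i a_ik r_i, G = sum_i r_i r_i^T.


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(g, h):
    """Solve the 3x3 system g x = h with Cramer's rule."""
    d = det3(g)
    if abs(d) < 1e-12:
        raise ValueError("samples do not excite all three force axes")
    x = []
    for col in range(3):
        m = [row[:] for row in g]
        for r in range(3):
            m[r][col] = h[r]  # replace one column with the right-hand side
        x.append(det3(m) / d)
    return x


def fit_calibration_matrix(reported, actual):
    """Return M (3 rows) minimizing sum_i ||M r_i - a_i||^2."""
    # G is shared by all three rows of M.
    g = [[sum(r[i] * r[j] for r in reported) for j in range(3)] for i in range(3)]
    rows = []
    for k in range(3):
        h = [sum(r[i] * a[k] for r, a in zip(reported, actual)) for i in range(3)]
        rows.append(solve3(g, h))
    return rows


def apply_calibration(m, force):
    """Map a software-reported force vector through the fitted matrix."""
    return [sum(m[k][j] * force[j] for j in range(3)) for k in range(3)]
```

At least three linearly independent load directions are needed; otherwise G is singular and the sketch raises an error. An offset term could be added by appending a constant 1 to each reported vector and fitting a 3x4 matrix instead.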

Reports and presentations

- Project Plan

- Project Background Reading

- See Bibliography below for links.

- Project Checkpoint

- Paper Seminar Presentations

- Project Final Presentation

- Project Final Report


Project Bibliography

[1] X. He, “Force Sensing Augmented Robotic Assistance for Retinal Microsurgery,” Ph.D. dissertation, Johns Hopkins University, Baltimore, Jul. 2015.

[2] P. Gupta, P. Jensen, and E. de Juan, “Surgical forces and tactile perception during retinal microsurgery,” in International Conference on Medical Image Computing and Computer Assisted Intervention, vol. 1679, 1999, pp. 1218–1225.

[3] S. Charles, “Techniques and tools for dissection of epiretinal membranes,” Graefe’s Archive for Clinical and Experimental Ophthalmology, vol. 241, no. 5, pp. 347–352, May 2003.

[4] R. Taylor, P. Jensen, L. Whitcomb, A. Barnes, R. Kumar, D. Stoianovici, P. Gupta, Z. Wang, E. DeJuan, and L. Kavoussi, “A Steady-Hand Robotic System for Microsurgical Augmentation,” The International Journal of Robotics Research, vol. 18, no. 12, pp. 1201–1210, 1999.

[5] B. Mitchell, J. Koo, I. Iordachita, P. Kazanzides, A. Kapoor, J. Handa, G. Hager, and R. Taylor, “Development and application of a new steady-hand manipulator for retinal surgery,” in IEEE International Conference on Robotics and Automation, 2007, pp. 623–629.

[6] R. H. Taylor, J. Funda, D. D. Grossman, J. P. Karidis, and D. A. LaRose, “Remote center-of-motion robot for surgery,” U.S. Patent 5,397,323, 1995.

[7] A. Menciassi, A. Eisinberg, G. Scalari, C. Anticoli, M. Carrozza, and P. Dario, “Force feedback-based microinstrument for measuring tissue properties and pulse in microsurgery,” in IEEE International Conference on Robotics and Automation, 2001, pp. 626–631.