Ultrasound-based Visual Servoing

Last updated: 2015-05-11 11:59am

Summary

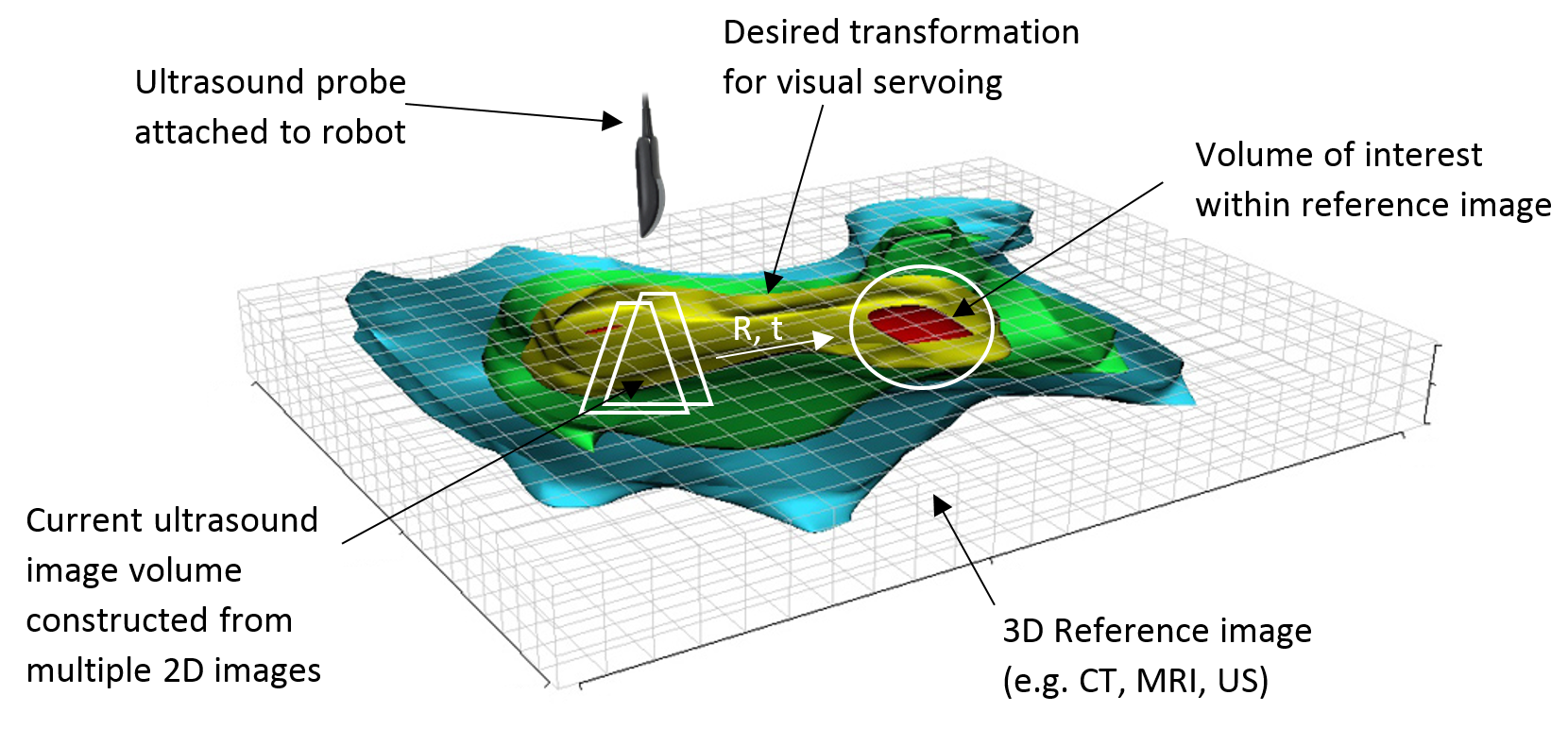

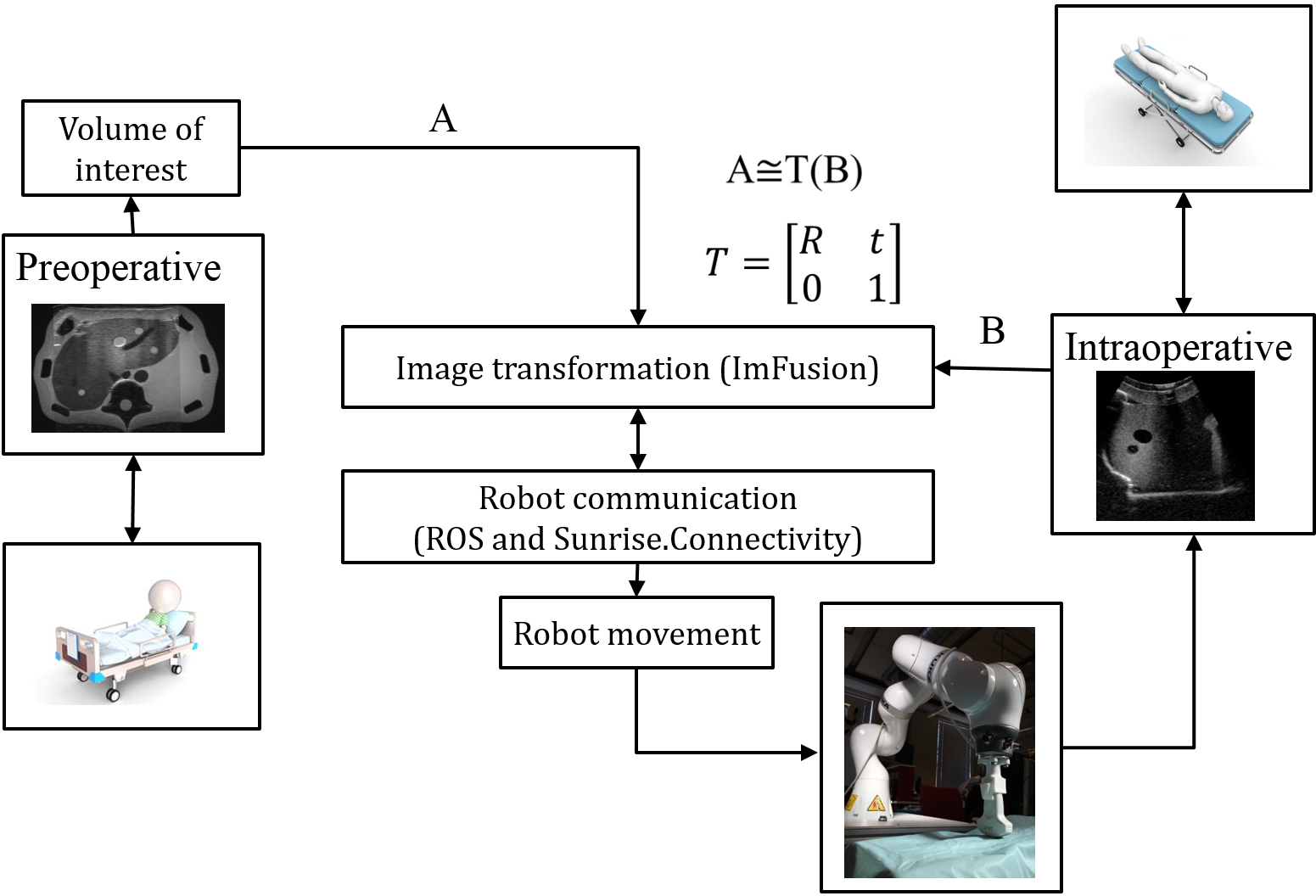

The goal of this project is to develop visual servoing for intraoperative robotic ultrasound. Using a workstation with a GUI, the doctor would select a predefined volume from a reference image, such as a CT or MRI scan, that was obtained before the procedure. The robot would then image the patient with an ultrasound probe at that volume of interest. This technique would allow the doctor to obtain accurate anatomy in real time, even when deformations and organ movement cause the reference image to differ from reality. An ImFusion plugin will be developed that constructs a 3D ultrasound volume from individual B-mode images acquired by the ultrasound probe. The plugin then calculates the 3D transformation between the current ultrasound volume and the volume of interest, and uses it to move a KUKA iiwa lightweight robot to the location corresponding to the volume of interest.

- Students: Michael Scarlett

- Mentor(s): Bernhard Fuerst, Prof. Navab

Background, Specific Aims, and Significance

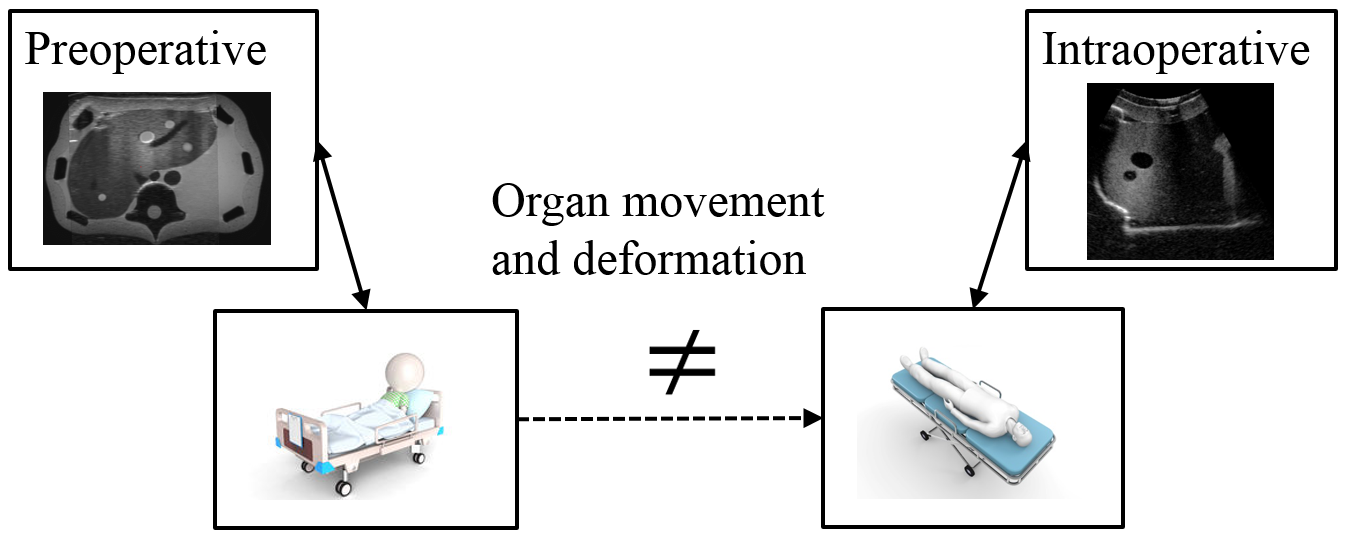

Certain medical procedures require a high level of anatomical precision, such as cancer tumor removal or neurosurgery. Although preoperative imaging modalities such as CT and MRI allow for a high level of contrast and spatial resolution, this data differs from the current anatomy of the patient during surgery due to factors such as breathing. As a result, intraoperative imaging is necessary to obtain the real-time anatomy of a patient’s organs.

Among the different medical imaging modalities, ultrasound has several advantages: it is cheap, portable, provides images in real time, and does not use ionizing radiation. In comparison, CT and MRI provide much higher contrast than ultrasound, which gives them considerable clinical utility for preoperative imaging. Unlike ultrasound, however, they provide static images that are difficult to update during surgery. As a result, ultrasound is commonly used for intraoperative imaging to obtain real-time anatomical data. Doctors may also combine preoperative ultrasound with intraoperative ultrasound for certain procedures. However, performing a handheld 2D ultrasound scan is user-dependent because acquiring high-quality images requires specialized skills and training.

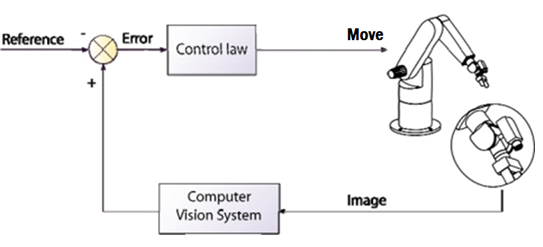

Robotic ultrasound is a thriving branch of computer-assisted medical interventional research. Due to the greater precision and dexterity of a robot for positioning of an ultrasound probe, robotic ultrasound can improve patient outcomes and shorten procedure times. By acquiring 2D slices of B-mode scans and tracking the probe location, it is possible to obtain a 3D ultrasound volume. Prior work has focused on the use of an ultrasound probe controlled by a human operator via a telemanipulation system, but this project focuses on the automatic guidance of the ultrasound probe by visual servoing. Visual servoing performs registration of the current input image and a volume of interest to obtain the transformation that will move the robot to the location of the volume of interest. The robot obtains a new image once it has moved and repeats the process until the current image corresponds to the volume of interest.

To combine the advantages of both intraoperative ultrasound and preoperative imaging, a hybrid solution is proposed which allows the doctor to select a particular volume in the reference image and have the robot acquire the ultrasound image at its corresponding location. The robot will perform visual servoing by using three-dimensional registration of ultrasound with a reference image to obtain the transformation between the current probe location and the desired probe location. This transformation will be used to update the position of the robot accordingly to acquire a new ultrasound image until the current probe location is sufficiently close to the desired probe location.
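The pose update in this loop can be illustrated with homogeneous transforms. The sketch below is not taken from the project code. It assumes that poses are 4x4 matrices in the robot base frame and that registration returns the correction expressed in the current probe frame, so the next commanded pose is the current pose multiplied on the right by the correction. All function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul4(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 homogeneous transformation matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rot_z_translate(angle_deg: float, tx: float, ty: float, tz: float) -> Matrix:
    """Build a transform: rotation about z by angle_deg, then translation (mm)."""
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def next_probe_pose(base_T_probe: Matrix, probe_T_target: Matrix) -> Matrix:
    """Compose the current probe pose with the registration result.

    Assumption: probe_T_target is expressed in the current probe frame,
    so the target pose in the base frame is base_T_probe * probe_T_target.
    """
    return matmul4(base_T_probe, probe_T_target)
```

For example, if the probe is rotated 90 degrees about the base z axis and registration reports a 10 mm offset along the probe's own x axis, the commanded position moves 10 mm along the base y axis. If the registration result were instead expressed in the base frame, the correction would be multiplied on the left.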

The ImFusion software has extensive medical image processing functionality, with existing modules for calculating the transformation between 3D volumes. As a result, the main task is to integrate ImFusion modules with the KUKA robot to create a visual servo controller that moves the ultrasound probe.

The specific aims of the project are:

- To understand visual servoing and robotics communication

- To create an ImFusion plugin that enables communication with a robot

- To implement the technique of visual servoing such that given a volume of interest selected from a preoperative image, the robot can automatically position the ultrasound probe at the corresponding location and acquire an updated image

Deliverables

* Minimum: (Completed by 4/17)

- Enable control of KUKA robot from ImFusion

- ImFusion plugin for automated ultrasound-MRI and ultrasound-ultrasound registration that returns the transformation between the three-dimensional images

* Expected: (Completed by 5/7)

- Given an initial location of the ultrasound probe adjacent to the patient and the reference image, find the transformation, then have the robot automatically move to the location corresponding to the reference image

- Perform validation of path planning on different ultrasound phantoms

- Evaluate performance of different registration techniques

- Provide documentation of completed portions

* Maximum: (Not completed)

- Integrate ImFusion plugin for visual servoing with Kinect sensor

- Given only the reference image and volume of interest, find the corresponding ultrasound image and move the robot to the location corresponding to the reference image regardless of initial position

Technical Approach

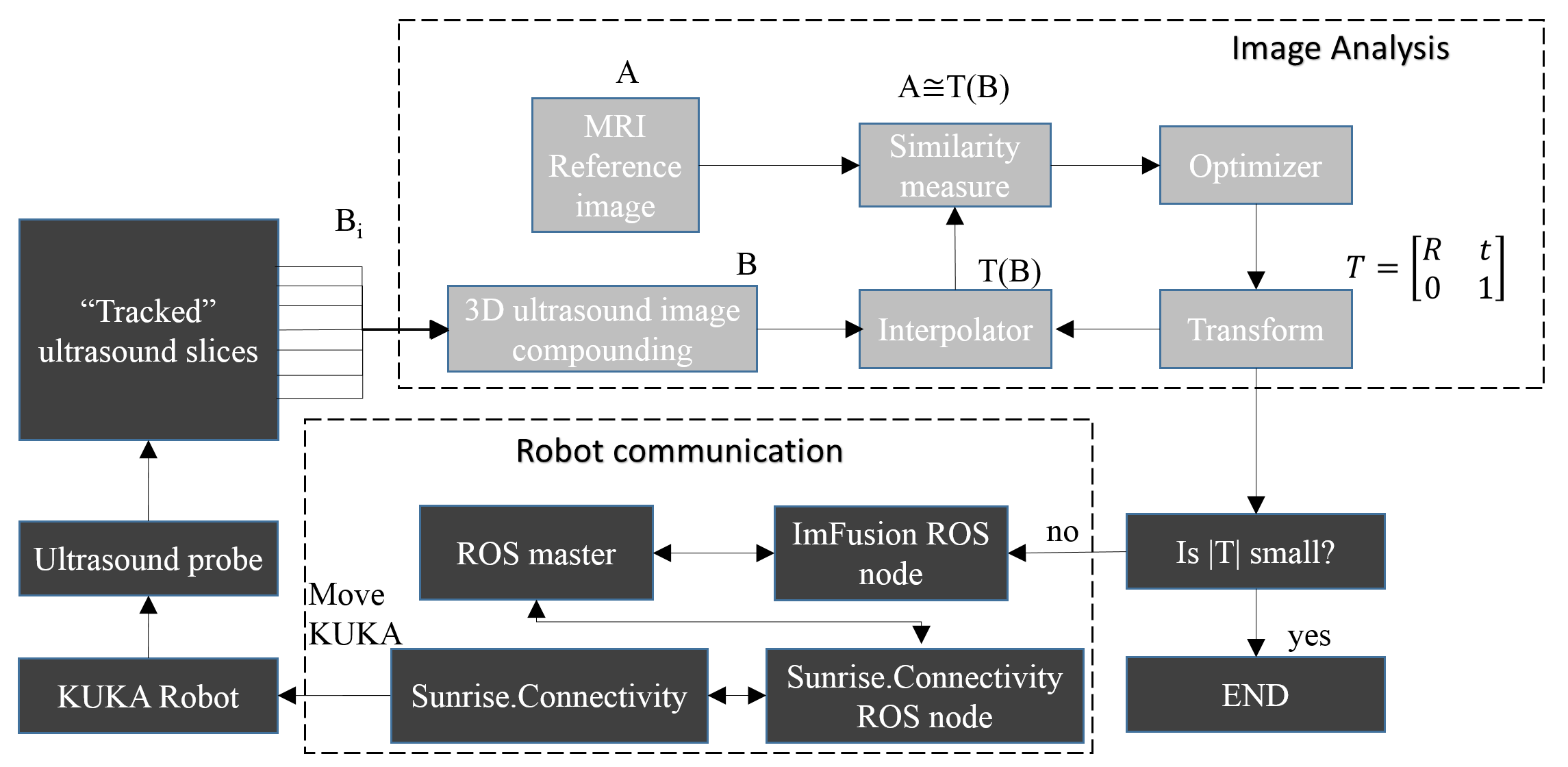

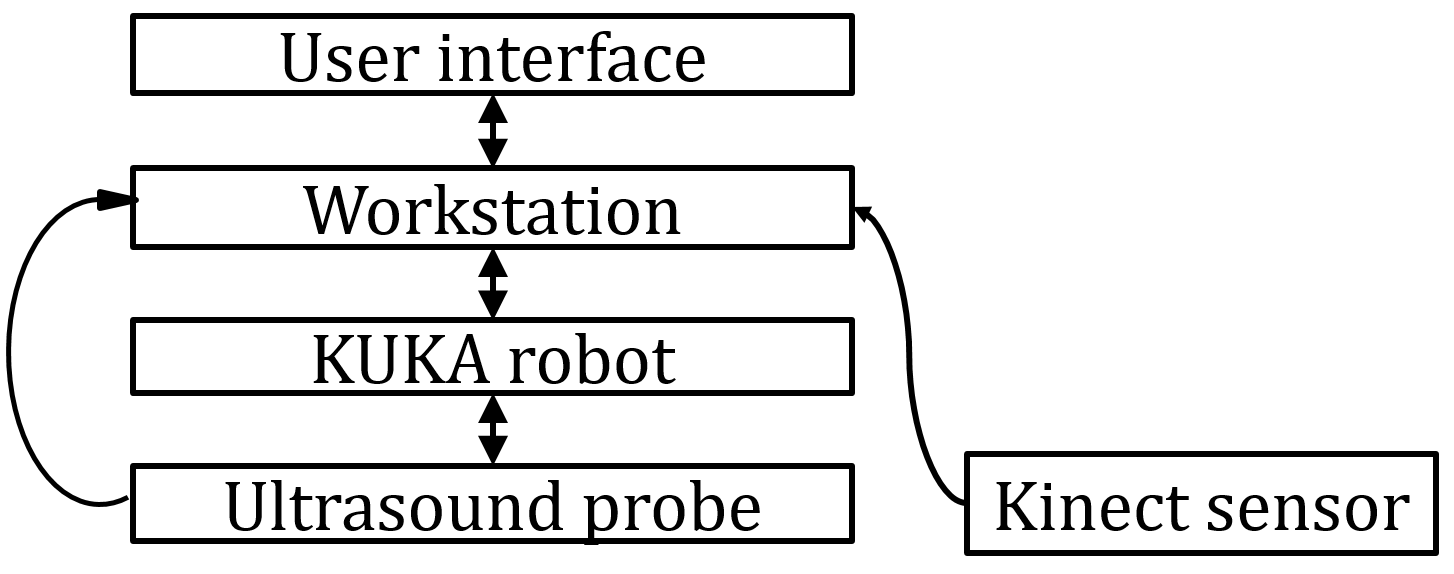

The project consists of two main software components: (i) an image analysis module, which compounds a 3D volume from the ultrasound probe images and calculates the transformation between the 3D ultrasound volume and the reference image, and (ii) a robot communication module, which transfers information between ImFusion and KUKA regarding the current robot state and the commanded movement.

Image Analysis

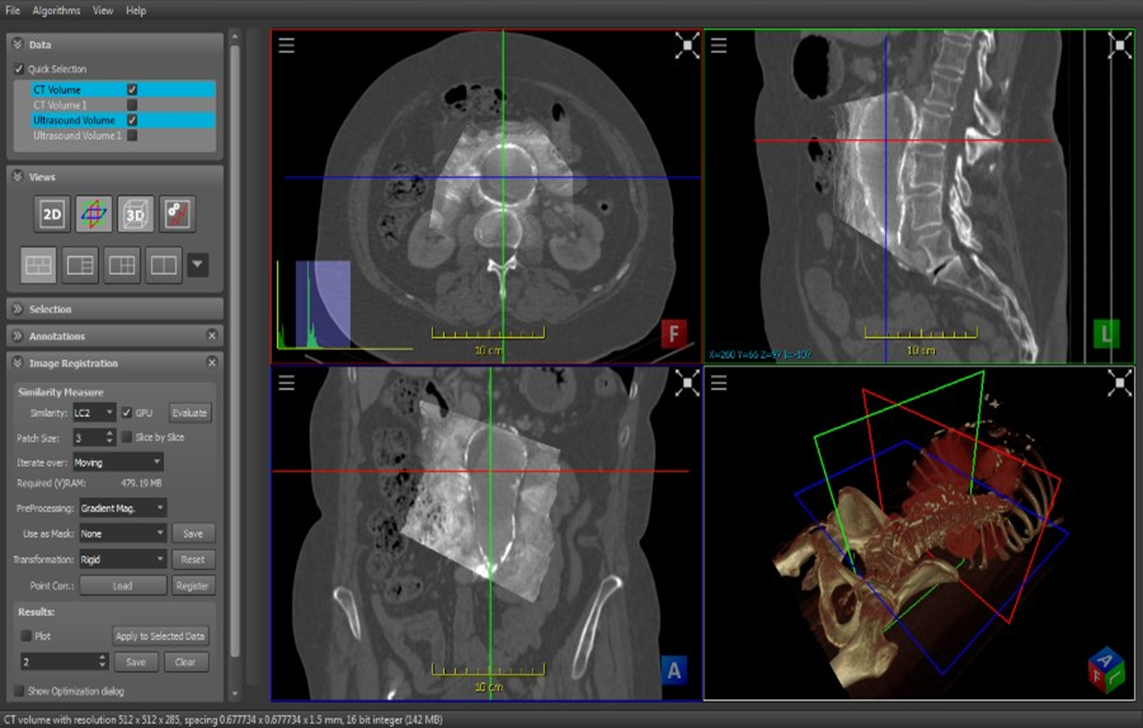

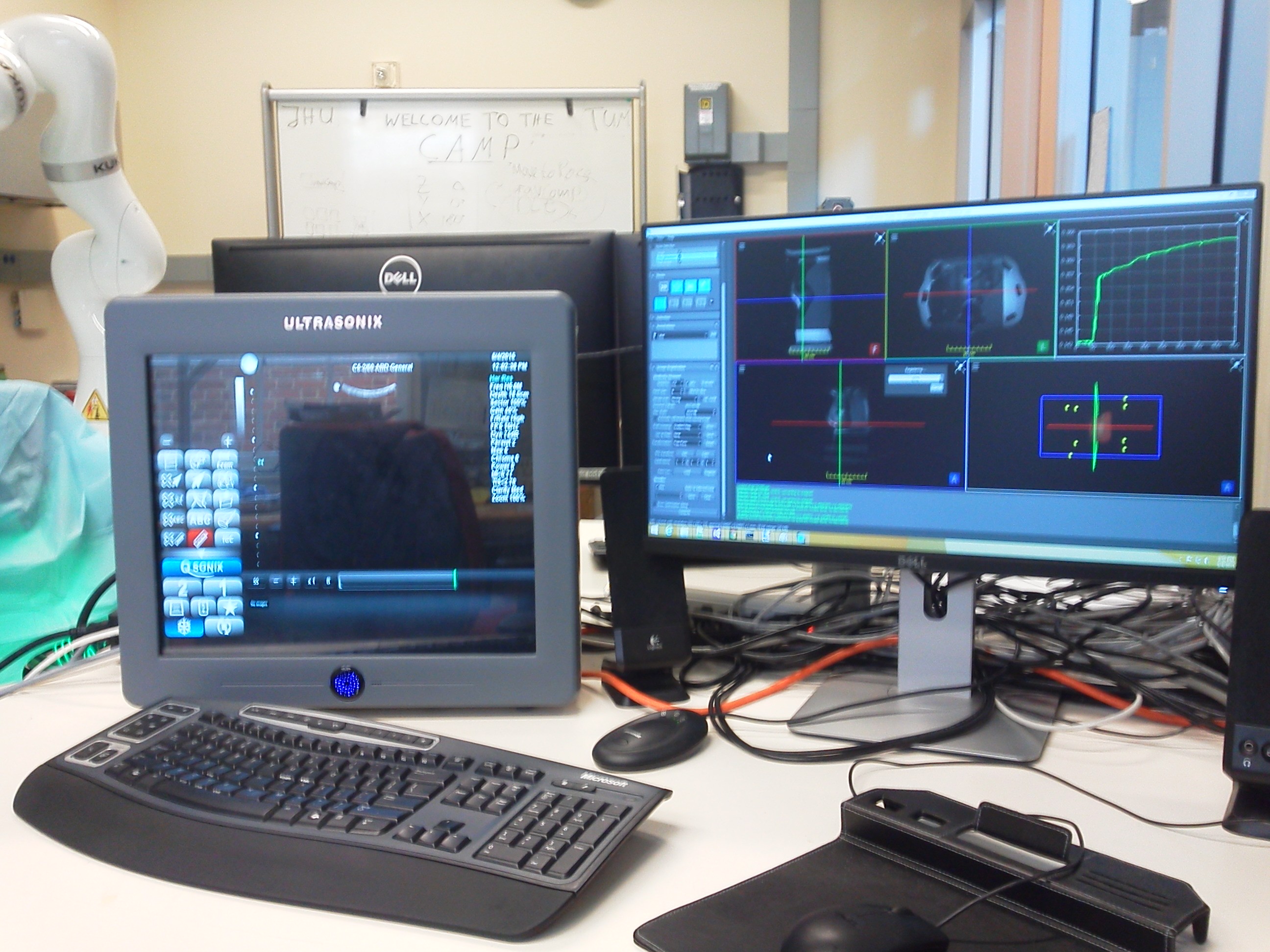

A workstation is used to run ImFusion, a medical imaging software suite created by research fellows at CAMP. It provides existing C++ modules for three-dimensional ultrasound-MRI and ultrasound-ultrasound registration, as well as functions that obtain the transformation between images and reconstruct a 3D ultrasound volume from planar ultrasound scans with known poses. The software also provides an SDK that allows the addition of new plugins and the development of a graphical user interface.

The ImFusion SDK is used to create a new plugin that communicates with the KUKA robot controller. The plugin takes as input the 2D B-mode images from the ultrasound probe, along with the probe pose at which each image was acquired. It stores a 3D reference image, the volume of interest, and a 3D ultrasound volume at the probe’s current location, obtained by aligning the 2D B-mode images based on their poses. Built-in modules are used to obtain the three-dimensional rotation and translation between the ultrasound volume at the probe’s current location and the volume of interest within the reference image. The plugin then communicates instructions to move the ultrasound probe according to the computed transformation.
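The compounding step can be sketched as follows. This is an illustrative nearest-neighbor scheme, not ImFusion's implementation. It assumes each B-mode frame is a 2D grid of intensities with a known pixel spacing, that the tracked pose is a 4x4 matrix mapping image-plane coordinates (in mm) to world coordinates, and that overlapping samples are averaged. The names `pixel_to_world` and `compound_volume` are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]
Voxel = Tuple[int, int, int]
Frame = Tuple[Sequence[Sequence[float]], Matrix]  # (image rows, world_T_image)


def pixel_to_world(u: int, v: int, spacing_mm: Tuple[float, float],
                   world_T_image: Matrix) -> Tuple[float, float, float]:
    """Map pixel (column u, row v) to world coordinates in mm.

    The image plane is assumed to be the z = 0 plane of the image frame.
    """
    x = u * spacing_mm[0]
    y = v * spacing_mm[1]
    return tuple(row[0] * x + row[1] * y + row[3] for row in world_T_image[:3])


def compound_volume(frames: List[Frame], spacing_mm: Tuple[float, float],
                    voxel_mm: float) -> Dict[Voxel, float]:
    """Insert every pixel into its nearest voxel and average overlapping samples."""
    sums: Dict[Voxel, float] = {}
    counts: Dict[Voxel, int] = {}
    for image, world_T_image in frames:
        for v, row in enumerate(image):
            for u, value in enumerate(row):
                point = pixel_to_world(u, v, spacing_mm, world_T_image)
                key = tuple(int(round(c / voxel_mm)) for c in point)
                sums[key] = sums.get(key, 0.0) + value
                counts[key] = counts.get(key, 0) + 1
    return {key: sums[key] / counts[key] for key in sums}
```

The sparse dictionary keeps the sketch short. A real implementation would normally write into a dense voxel grid and fill the gaps between slices by interpolation.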

Robot Communication

The KUKA iiwa robot, which has 7 degrees of freedom, manipulates an ultrasound probe mounted on its tool tip, following motion plans from the workstation running ImFusion. Since movement and image data must be sent via LAN between the KUKA robot and the workstation, a network protocol is needed to transfer information between them. Robot control is provided by the KUKA Sunrise.Connectivity framework, and ROS is used to pass messages between Sunrise and ImFusion. ROS uses a peer-to-peer topology in which processes run as independent nodes; a master server registers the nodes and lets them look each other up, after which they exchange messages directly. ROS provides packages for commonly used robot functionality, and ROS nodes can be written in Java, C++, and other programming languages.

Since ImFusion only runs on Windows, a possible pitfall was that the core ROS system might have compatibility issues with Windows, because according to its documentation it is designed only for Unix-based platforms. One alternative was to run a ROS server within MATLAB and use OpenIGTLink to communicate between ImFusion and the ROS server, then use a ROS node for Sunrise. This would preserve the ability to use ROS functionality, and ImFusion already contains a module for OpenIGTLink. If that approach did not work, a third option was to avoid ROS completely and create an OpenIGTLink interface for Sunrise that communicates with the OpenIGTLink interface for ImFusion. This approach has the benefit of avoiding Windows compatibility issues, but a disadvantage is that ROS provides greater existing functionality than OpenIGTLink.

The final approach was to use an experimental version of ROS called WinRos that allows native development on Windows. Since it compiles with Microsoft Visual Studio, this package is compatible with ImFusion.

Dependencies

* Permission to use ImFusion

- Reason: ImFusion is proprietary software which is not freely available to the general public.

- Resolution: A research agreement with CAMP allows free usage of ImFusion for academic purposes, so Prof. Navab can provide access to this software.

- Backup plan: This project cannot be done without ImFusion because the goal is to develop an ImFusion plugin.

- Planned resolution date: 2/20

- Status: resolved

* J-Card access to the Robotorium and Mock OR

- Reason: To gain access to the KUKA robot and a workstation for ImFusion. The KUKA robot in the Robotorium will be used for testing and debugging, and the KUKA robot in the Mock OR will be used for experimental validation of the visual servoing algorithm. These robots are already connected to workstations with ImFusion set up.

- Resolution: Get Hackerman Hall J-Card access form signed by a faculty member then give to Alison Morrow.

- Backup plan: This project cannot be done without a KUKA robot. If this dependency is not met, it is unclear how a KUKA robot could be accessed. J-Card access to either the Mock OR or the Robotorium is sufficient to gain access to a KUKA robot, but both are desired for convenience.

- Planned resolution date: 2/27

- Status: resolved

* Computer with GPU powerful enough for ImFusion

- Reason: ImFusion uses GPGPU computing for fast image processing and needs the ability to operate in real time for visual servoing.

- Resolution: Work with mentors to obtain an account on CAMP workstation in Robotorium which has a Tesla GPU and already has ImFusion installed.

- Backup plan: Obtain permission to run ImFusion on personal laptop by acquiring a license from mentors. This would increase the runtime of the visual servoing and would require extra time to configure the software, but it would have negligible impact on milestones.

- Planned resolution date: 2/27

- Status: resolved

* Ultrasound probe and phantoms

- Reason: To validate the path planning of the KUKA robot for experimental testing.

- Resolution: These should be located in the Mock OR already.

- Backup plan: Work with mentors to locate an ultrasound probe and phantom and buy these if necessary.

- Planned resolution date: 2/27

- Status: resolved

* Kinect sensor

- Reason: The maximum deliverable requires being able to start with the ultrasound probe away from the patient, which requires a sensor that can find the patient’s location across a gap of air. Ultrasound cannot be used for this because air forms a barrier that reflects ultrasonic waves. Depth information is also needed to locate the patient in three dimensions, so an RGB-D camera is desired.

- Resolution: This should be located in the Mock OR already.

- Backup plan: Work with mentors to locate a Kinect sensor and buy one if necessary.

- Planned resolution date: 2/27

- Status: resolved

* Reliance on other students to make ROS node for KUKA Sunrise

- Reason: I met with Oliver Zettinig and Risto Kojcev of the CAMP group who agreed to make a ROS node for KUKA Sunrise. Since they are working on projects which also involve a KUKA ROS node, it makes sense for us to collaborate regarding this.

- Resolution: Check to see that sufficient progress is being made on this task

- Backup plan: Contribute to the development effort or make the ROS node myself

- Planned resolution date: 4/17

- Status: resolved

Milestones and Status

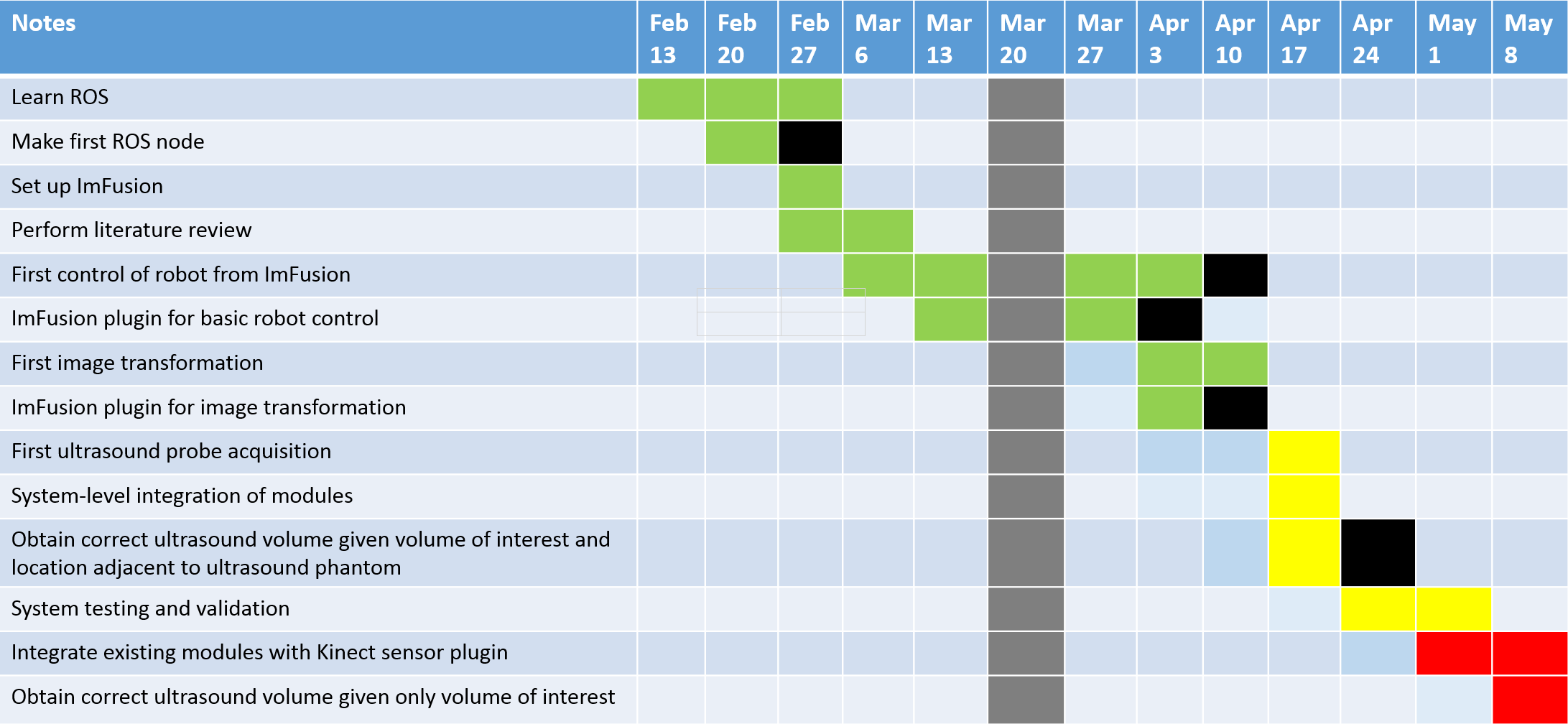

* Create ROS node for first time

- Planned Date: 2/27

- Expected Date: 2/27

- Status: Completed

* Make ROS node compatible with ImFusion

- Planned Date: 3/13

- Expected Date: 3/13

- Status: Completed

* First control of robot from ImFusion

- Planned Date: 3/24

- Expected Date: 4/19

- Status: Completed

* ImFusion plugin that performs basic robot control from ImFusion

- Planned Date: 3/27

- Expected Date: 4/3

- Status: Completed

* ImFusion plugin that performs image registration and outputs transformation

- Planned Date: 4/3

- Expected Date: 4/10

- Status: Completed (uses an existing ImFusion plugin from Oliver Zettinig that calculates the transformation between images)

* Obtain correct ultrasound volume given initial and reference images

- Planned Date: 4/17

- Expected Date: 5/7

- Status: Completed

* Obtain correct ultrasound volume given only reference image

- Planned Date: 5/8

- Expected Date: Not expected

- Status: Not started

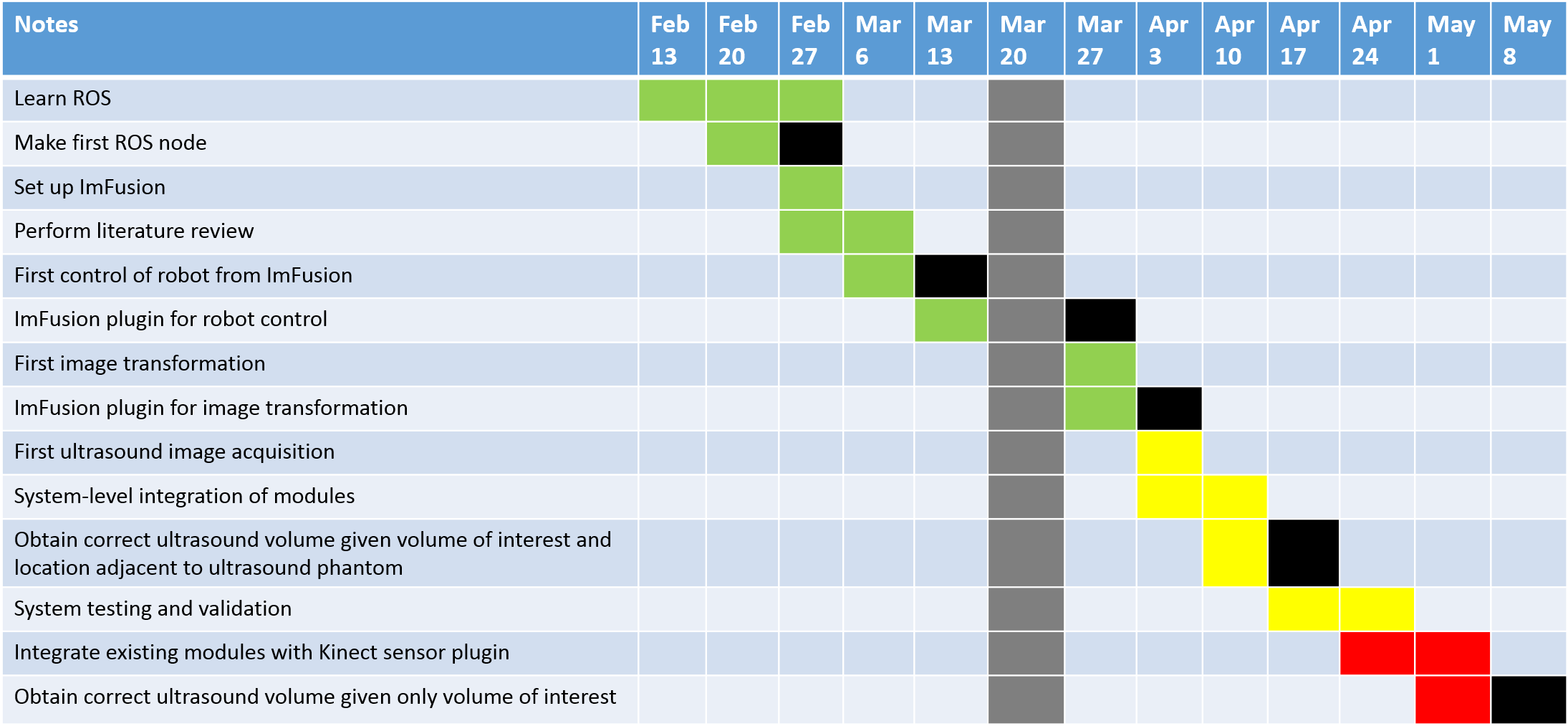

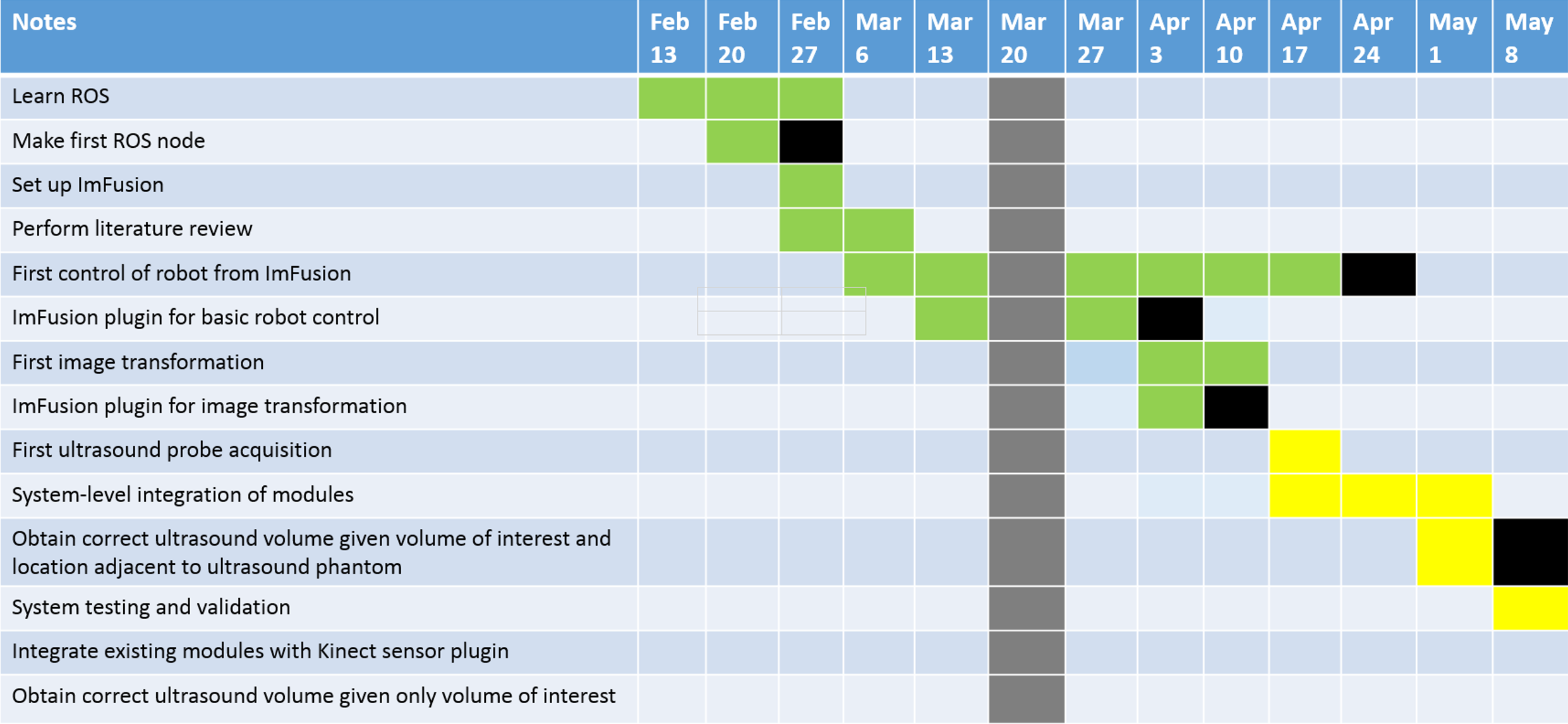

Updated timeline after checkpoint presentation:

Green - Min deliverables

Yellow - Expected deliverables

Red - Max deliverables

Black - Milestones

Results

Communication between ImFusion and the KUKA robot has been successfully achieved on Windows using ROS. The WinRos MSVC SDK allows ROS binaries to be built from source in Microsoft Visual C++ 2012, which is required for compatibility with the ImFusion SDK. A ROS plugin has been developed for ImFusion that sends a pose message to the KUKA robot containing the position and orientation the robot should move to, and accepts a pose message from the robot with its updated position. This plugin is also useful for other projects within the CAMP research group, because reusing existing ROS modules reduces development effort and code duplication. An existing CAMP Sunrise.Connectivity module that performs visual servo control of the KUKA robot was used.
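As an illustration of what such a pose message carries, the sketch below converts a 4x4 homogeneous transform into a position and a unit quaternion. The field layout (position x, y, z and orientation x, y, z, w) is modeled on the standard ROS `geometry_msgs/Pose` message. That the plugin uses this exact message type is an assumption, and the `Pose` class and `pose_from_matrix` function are hypothetical plain-Python stand-ins that do not depend on ROS.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class Pose:
    """Plain-Python stand-in for a pose message (not a real ROS type)."""
    position: Tuple[float, float, float]             # x, y, z
    orientation: Tuple[float, float, float, float]   # quaternion x, y, z, w


def pose_from_matrix(m: Sequence[Sequence[float]]) -> Pose:
    """Convert a 4x4 homogeneous transform into position plus unit quaternion."""
    r = [row[:3] for row in m[:3]]
    trace = r[0][0] + r[1][1] + r[2][2]
    # Pick the numerically largest quaternion component to divide by.
    if trace > 0.0:
        s = 2.0 * math.sqrt(trace + 1.0)
        qw = 0.25 * s
        qx = (r[2][1] - r[1][2]) / s
        qy = (r[0][2] - r[2][0]) / s
        qz = (r[1][0] - r[0][1]) / s
    elif r[0][0] > r[1][1] and r[0][0] > r[2][2]:
        s = 2.0 * math.sqrt(1.0 + r[0][0] - r[1][1] - r[2][2])
        qw = (r[2][1] - r[1][2]) / s
        qx = 0.25 * s
        qy = (r[0][1] + r[1][0]) / s
        qz = (r[0][2] + r[2][0]) / s
    elif r[1][1] > r[2][2]:
        s = 2.0 * math.sqrt(1.0 + r[1][1] - r[0][0] - r[2][2])
        qw = (r[0][2] - r[2][0]) / s
        qx = (r[0][1] + r[1][0]) / s
        qy = 0.25 * s
        qz = (r[1][2] + r[2][1]) / s
    else:
        s = 2.0 * math.sqrt(1.0 + r[2][2] - r[0][0] - r[1][1])
        qw = (r[1][0] - r[0][1]) / s
        qx = (r[0][2] + r[2][0]) / s
        qy = (r[1][2] + r[2][1]) / s
        qz = 0.25 * s
    return Pose((m[0][3], m[1][3], m[2][3]), (qx, qy, qz, qw))
```

A quaternion is used for the orientation because it is compact and avoids the ambiguities of Euler angles. The units of the position depend on the convention agreed between the two endpoints.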

ImFusion has also been used to create a 3D volume from 2D ultrasound probe images with known poses, using an existing ImFusion plugin. The ultrasound probe volume is registered with the volume of interest within the reference image, which is where the robot should move, and the resulting transformation is sent to the robot to obtain a new position of the ultrasound probe. Image registration is then repeated until the transformation between the ultrasound probe location and the volume of interest is 1 mm or less.
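The stopping rule can be sketched as a simple loop. The code below is a simulation under simplifying assumptions, not the project's controller: poses are reduced to 3D positions in mm, the 1 mm criterion is applied to the norm of the estimated translation, and `register` is a hypothetical callback standing in for image registration. The simulated registration deliberately recovers only a fraction (`gain`) of the true offset, to mimic an imperfect estimate that improves over iterations.

```python
import math
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


def servo_to_target(start: Vec3, register: Callable[[Vec3], Vec3],
                    tolerance_mm: float = 1.0,
                    max_iterations: int = 50) -> List[Vec3]:
    """Repeat register -> move until the estimated offset is within tolerance.

    Returns the list of visited probe positions, starting with `start`.
    """
    path = [start]
    current = start
    for _ in range(max_iterations):
        offset = register(current)  # estimated translation to the target
        if math.sqrt(sum(c * c for c in offset)) <= tolerance_mm:
            break
        current = tuple(p + d for p, d in zip(current, offset))
        path.append(current)
    return path


def make_simulated_registration(target: Vec3,
                                gain: float = 0.7) -> Callable[[Vec3], Vec3]:
    """Hypothetical registration that recovers `gain` times the true offset."""
    def register(current: Vec3) -> Vec3:
        return tuple(gain * (t - c) for t, c in zip(target, current))
    return register
```

For instance, `servo_to_target((0.0, 0.0, 0.0), make_simulated_registration((40.0, -25.0, 10.0)))` returns the sequence of probe positions visited before the estimated offset drops to 1 mm or less. The `max_iterations` cap guards against a registration that never converges.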

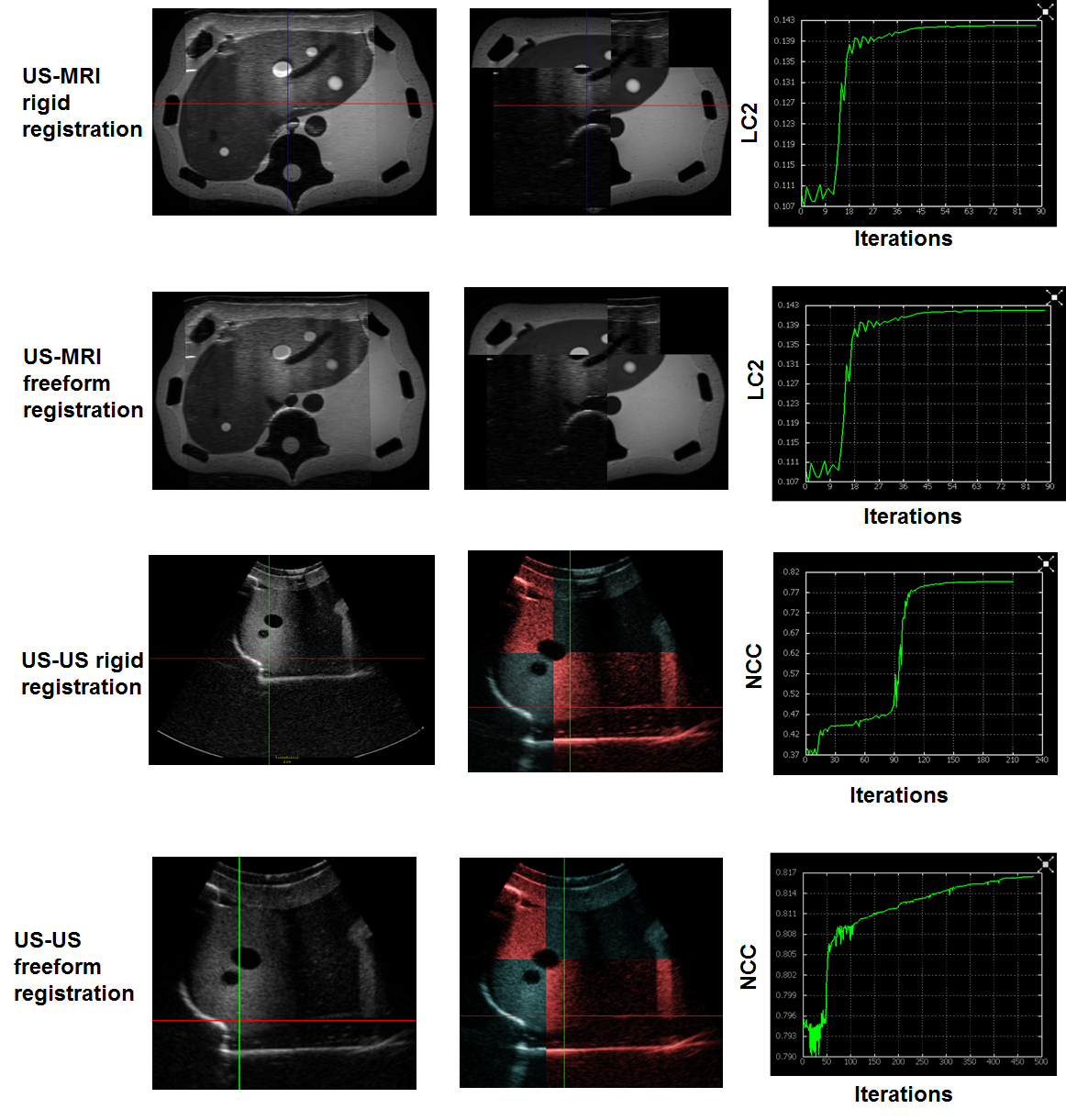

Visual servoing was performed on an ultrasound phantom. The experiment was conducted using both ultrasound-MRI and ultrasound-ultrasound registration from a given initial ultrasound position, and both rigid and freeform registration were used. The visual servoing algorithm was applied until the transformation between the current ultrasound image and the volume of interest was sufficiently small. The accuracy of the final registration between the ultrasound probe image and the reference image was then measured as a function of the number of iterations.

In all cases, the robot accurately obtains the ultrasound scan corresponding to the volume in the preoperative image, as observed from the results of registration of the ultrasound volume with the volume of interest from the reference image. These images are shown in Figure 3. The ultrasound-ultrasound registration gives an image with less misalignment than the ultrasound-MRI registration.

Accuracy was quantitatively defined using normalized cross-correlation (NCC) for ultrasound-ultrasound registration and linear correlation of linear combination (LC2) for ultrasound-MRI registration. These metrics measure the intensity-based similarity between two images, with the intuition that the images become more similar as their alignment improves. It was observed that registration accuracy converges to a stable value after a large number of iterations.
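For reference, NCC between two equally sized sets of intensity samples can be computed as in the minimal sketch below. This is the textbook zero-mean definition written in plain Python, not ImFusion's implementation, and the function name `ncc` is chosen only for illustration. LC2 is more involved and is not shown.

```python
import math
from typing import Sequence


def ncc(a: Sequence[float], b: Sequence[float]) -> float:
    """Zero-mean normalized cross-correlation of two intensity sequences.

    Returns a value in [-1, 1]; 1 means the intensities are related by a
    positive linear mapping. Returns 0.0 if either input is constant.
    """
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    numerator = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    denominator = math.sqrt(var_a * var_b)
    if denominator == 0.0:
        return 0.0
    return numerator / denominator
```

Because the mean is subtracted and the result is normalized, NCC is insensitive to global brightness and contrast changes. This suits ultrasound-ultrasound comparison but not ultrasound-MRI, where the intensities of the two modalities are not linearly related.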

Rigid and freeform registration give comparable accuracy, which may be because the stiff design of the ultrasound phantom prevents deformation.

Conclusion

The results provide a proof-of-concept that ultrasound-based visual servoing is feasible for obtaining the updated anatomy of a patient during surgery. Although prior work has focused on visual servoing using registration between two ultrasound images, the results present evidence that visual servoing between ultrasound and MRI might be feasible.

Since testing was only done using a single ultrasound phantom, future work should involve testing with additional phantoms to see if results are reproducible. Additional testing also needs to be done with more preoperative images.

Future work will involve integrating the existing ImFusion plugin with a Kinect sensor. This would provide visual feedback of the patient when the ultrasound probe is in a location away from the body of the patient. This is necessary because no ultrasound feedback is available when the probe is separated from the patient by air. Another future task is to integrate this project with a current project that also uses visual servoing with ImFusion, but communicates with the KUKA robot using OpenIGTLink. Both of these would require similar image registration functionality and should be used to create a general-purpose module for visual servoing.

Reports and presentations

- Project Plan

- Project Background Reading

- See Bibliography below for links.

- Project Checkpoint

- Paper Seminar Presentations

- Project Final Presentation

- Project Final Report

Project Bibliography

- Abolmaesumi P, Salcudean SE, Zhu WH. Visual servoing for robot-assisted diagnostic ultrasound. Proc 22nd Annu Int Conf IEEE Eng Med Biol Soc (Cat No00CH37143). 2000;4:2532-2535. doi:10.1109/IEMBS.2000.901348.

- Abolmaesumi P, Salcudean S, Sirouspour MR, DiMaio SP. Image-guided control of a robot for medical ultrasound. IEEE Trans Robot Autom. 2002;18(1):11-23. doi:10.1109/70.988970.

- Bischoff R, Kurth J, Schreiber G, et al. The KUKA-DLR Lightweight Robot arm – a new reference platform for robotics research and manufacturing. In: Joint 41st International Symposium on Robotics and 6th German Conference on Robotics. 2010:741-748.

- Chaumette F, Hutchinson S. Visual Servo Control. IEEE Robot Autom Mag. 2007;1(March):109-118.

- Chatelain P, Krupa A, Navab N. Optimization of ultrasound image quality via visual servoing. 2015.

- Choset H. Principles of Robot Motion: Theory, Algorithms, and Implementation. (Choset HM, ed.). MIT Press; 2005:603. Available at: http://books.google.com/books?id=S3biKR21i-QC&pgis=1. Accessed October 27, 2014.

- Conti F, Park J, Khatib O. Interface Design and Control Strategies for a Robot Assisted Ultrasonic Examination System. Khatib O, Kumar V, Sukhatme G, eds. Exp Robot. 2014;79:97-113. doi:10.1007/978-3-642-28572-1.

- Fuerst B, Hennersperger C, Navab N. DRAFT: Towards MRI-Based Autonomous Robotic US Acquisition: A First Feasibility Study.

- Gardiazabal J, Esposito M, Matthies P, et al. Towards Personalized Interventional SPECT-CT Imaging. In: Golland P, Hata N, Barillot C, Hornegger J, Howe R, eds. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2014. Vol 8673. Lecture Notes in Computer Science. Cham: Springer International Publishing; 2014:504-511. doi:10.1007/978-3-319-10404-1.

- Janvier M-A, Durand L-G, Cardinal M-HR, et al. Performance evaluation of a medical robotic 3D-ultrasound imaging system. Med Image Anal. 2008;12(3):275-90. doi:10.1016/j.media.2007.10.006.

- Krupa A. Automatic calibration of a robotized 3D ultrasound imaging system by visual servoing. In: IEEE International Conference on Robotics and Automation, 2006. Vol 2006. 2006:4136-4141. doi:10.1109/ROBOT.2006.1642338.

- Nakadate R, Matsunaga Y, Solis J. Development of a robot assisted carotid blood flow measurement system. Mech Mach Theory. 2011;46(8):1066-1083. Available at: http://www.sciencedirect.com/science/article/pii/S0094114X11000607. Accessed August 25, 2014.

- Schreiber G, Stemmer A, Bischoff R. The Fast Research Interface for the KUKA Lightweight Robot. In: IEEE Workshop on Innovative Robot Control Architectures for Demanding. ICRA; 2010:15-21.

- Shepherd S, Buchstab A. KUKA Robots On-Site. McGee W, Ponce de Leon M, eds. Robot Fabr Archit Art Des 2014. 2014:373-380. doi:10.1007/978-3-319-04663-1.

- Tauscher S, Tokuda J, Schreiber G, Neff T, Hata N, Ortmaier T. OpenIGTLink interface for state control and visualisation of a robot for image-guided therapy systems. Int J Comput Assist Radiol Surg. 2014. doi:10.1007/s11548-014-1081-1.