Photoacoustic registration is a promising new technology that has the potential to replace existing registration methods such as EM and optical tracking. Photoacoustic imaging involves shining rapid laser pulses on seeds placed in the surgical site. The seeds absorb the light energy and convert it into sound waves, which can then be detected by ultrasound. In this project, however, we propose that matter excited with high-energy laser pulses will also produce a photoacoustic signal even without the seeds. The main goal of this project is to perform a point-to-point registration between the ultrasound and stereocamera coordinate systems: if a stereocamera image and an ultrasound image are both obtained of the same object hit by a laser, the laser spot seen in both images gives a point-to-point mapping between the two coordinate systems.

The photoacoustic effect was discovered by Alexander Graham Bell in 1880. It is the principle by which electromagnetic (light) waves are converted to acoustic waves through absorption and thermal excitation. The effect led to the invention of photoacoustic spectroscopy and is currently used in biomedical applications such as structural, functional, and molecular imaging.

When matter is exposed to high-frequency pulses of light, most of the light’s energy is absorbed by the molecules in the incident matter. As the energy from the light is converted to heat, the molecules become thermally excited. The heat then radiates away from the matter, and the resulting pressure variations in the surrounding medium propagate as sound waves. These sound waves can then be detected by acoustic devices such as ultrasound transducers.
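
For reference, a relation commonly quoted in the photoacoustics literature (it is not part of the original write-up) estimates the initial pressure rise produced by a laser pulse, assuming the pulse is short enough that heat and stress do not dissipate while it lasts:

$$
p_0 = \Gamma \, \mu_a \, F
$$

Here $p_0$ is the initial pressure rise, $\Gamma$ is the dimensionless Grüneisen parameter of the medium, $\mu_a$ is its optical absorption coefficient, and $F$ is the local optical fluence. Under these assumptions, a stronger absorber or a higher fluence at the laser spot gives a stronger acoustic signal.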

Current surgical tracking systems, which include electromagnetic (EM) and optical tracking modalities, are limited in their capabilities and do not integrate easily into a surgical setting. Their primary shortcomings are their intrusive presence in the OR, sterility concerns, and cost. EM and optical tracking systems usually consist of a third-party reference base station, implantable fiducial markers, and associated surgical instruments, each of which has a sensor attached to it.

To track the position of each tool with respect to the patient, fiducial markers first need to be implanted in the patient so that a patient-to-base-station “registration” (i.e. a mapping between the current patient position and the base station position) can be computed. Only after completing this initial registration can each surgical instrument be registered to the base station and tracked with respect to the patient.

Base stations are intrusive due to their large form factor and complicate the layout of an OR. Additionally, base stations can limit the physical capabilities of a surgeon, because the patient can only be placed on the base in a specific way and the surgeon must work around that placement. In optical tracking systems, a clear line of sight between each optical sensor and the reference tracker must be constantly maintained in order to track each tool. All personnel in the OR, including the surgeon, must be careful in their movements to avoid blocking this line of sight. In EM tracking, any metal object in the OR can cause interference between the EM transmitter base and each surgical tool. Furthermore, mutual interference can occur if tools are brought into close proximity with one another, which is required in most surgical procedures.

The attachment of sensors to every surgical instrument also presents sterility and cost issues. The EM and optical sensors attached to surgical instruments cost about $500 per unit, a high price for such a small piece of equipment. As a result, most ORs have a limited quantity of these trackers, and the surgical tools usually greatly outnumber the trackers. Sterility issues arise because every time the surgeon requires a new surgical instrument, a sensor must be sterilized and attached to the tool. This gets complicated when there are tools of many sizes: if a surgeon selects a tool of a specific size before surgery and realizes during surgery that a different size is needed, the surgeon must wait for a sensor to be sterilized before using the new tool, since all other available sensors are likely in use on other tools. This delay could be critical to the success of the surgery and could mean the difference between life and death.

Our specific aims are:

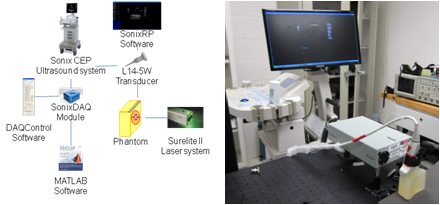

Ultrasound and photoacoustic signals were acquired simultaneously. In our imaging system, an Nd:YAG laser operating at a wavelength of 532 nm or 1064 nm was used to irradiate our phantom. A Sonix CEP ultrasound system and a Sonix DAQ module were used to detect and acquire the acoustic waves. The block diagram and the picture of our combined imaging system setup are shown below:

Several “one-point” experiments were performed. In these experiments, a single laser point was projected onto the phantom, and the photoacoustic waves were acquired. A single laser source with 1064 nm wavelength was projected onto the phantom with no brachytherapy seed, one seed, and three seeds. In addition, a laser source with 532 nm wavelength was projected onto the phantom without any seed.

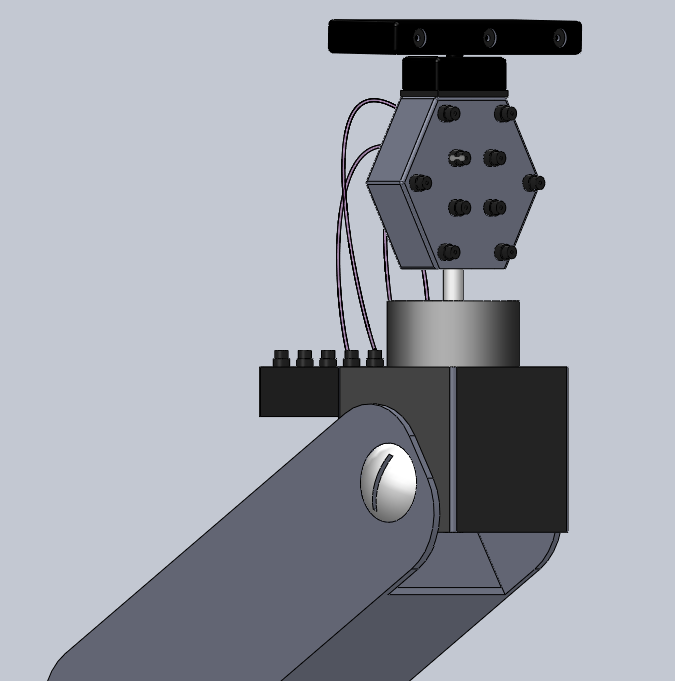

After the one-point experiments, a three-point laser pattern with 532 nm wavelength was fired at the phantom. In this experiment, a single laser point was projected onto the phantom at three different locations sequentially to create the three-point pattern. A stereocamera was integrated into the ultrasound-photoacoustic imaging system to perform the point-cloud registration between the stereocamera and ultrasound images. A picture of the ultrasound-photoacoustic imaging system with the stereocamera is shown in figure 3. To determine the accuracy of the rigid registration, a single laser point was projected onto the phantom at a different location, and its coordinates in the ultrasound domain were compared with those computed from the rigid registration.
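
The three-point registration and the single-point check described above can be sketched as follows. This is a minimal illustration in Python, not the project's MATLAB code: it builds an orthonormal frame from the three laser points in each coordinate system and derives a rotation and translation from the two frames, which is exact only when the three points are non-collinear and noise-free. All function names and coordinates here are hypothetical.

```python
import math

def sub(a, b):
    return [a[i] - b[i] for i in range(3)]

def dot(a, b):
    return sum(a[i] * b[i] for i in range(3))

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def unit(a):
    n = math.sqrt(dot(a, a))
    return [x / n for x in a]

def frame(p0, p1, p2):
    """Orthonormal axes (returned as rows) built from three non-collinear points."""
    x = unit(sub(p1, p0))
    z = unit(cross(x, sub(p2, p0)))
    y = cross(z, x)
    return [x, y, z]

def register(cam_pts, us_pts):
    """Return (R, t) such that us_point = R * cam_point + t for the three points."""
    A = frame(*cam_pts)   # axes expressed in camera coordinates
    B = frame(*us_pts)    # the same axes expressed in ultrasound coordinates
    # R = B^T A rotates camera-frame vectors into the ultrasound frame.
    R = [[sum(B[k][i] * A[k][j] for k in range(3)) for j in range(3)]
         for i in range(3)]
    t = sub(us_pts[0], [dot(R[i], cam_pts[0]) for i in range(3)])
    return R, t

def apply(R, t, p):
    return [dot(R[i], p) + t[i] for i in range(3)]

def distance(a, b):
    d = sub(a, b)
    return math.sqrt(dot(d, d))

# Synthetic example data (not measurements from the experiment).
cam_pts = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
us_pts = [[10.0, 20.0, 30.0], [10.0, 21.0, 30.0], [9.0, 20.0, 30.0]]
R, t = register(cam_pts, us_pts)

# Verification: map a fourth camera point and compare with its ultrasound position.
predicted = apply(R, t, [2.0, 3.0, 4.0])
measured = [7.0, 22.0, 34.0]
error = distance(predicted, measured)
print(predicted, error)
```

The distance between the predicted and measured positions of the fourth point plays the role of the accuracy check described above. With real, noisy points the frame construction favors the first point and the first edge, so a least-squares fit is usually preferable.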

Ultrasound signals were converted to pre-beamformed images, which were in turn transformed to beamformed images. To locate the point on the ultrasound image where the laser was projected, the beamformed image was thresholded and the brightest (highest-intensity) point was found using a centroid function. The stereocamera images were likewise thresholded to find the coordinates of the points where the laser was projected.
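
The threshold-then-centroid step can be illustrated with a short sketch. It assumes the image is a 2D list of intensities, that the threshold is a fraction of the peak intensity, and that the centroid is the unweighted mean position of the pixels above the threshold. The function name `laser_spot_centroid` and the `fraction` parameter are hypothetical, and the original MATLAB code may differ in how it chooses the threshold and computes the centroid.

```python
def laser_spot_centroid(image, fraction=0.5):
    """Return the (row, column) centroid of pixels at or above fraction * peak."""
    peak = max(max(row) for row in image)
    threshold = fraction * peak  # assumed rule; the original threshold is not stated
    row_sum = col_sum = count = 0
    for r, row in enumerate(image):
        for c, value in enumerate(row):
            if value >= threshold:
                row_sum += r
                col_sum += c
                count += 1
    return row_sum / count, col_sum / count

# Synthetic 5x5 image: dim background with a bright 2x2 spot.
image = [[1] * 5 for _ in range(5)]
for r in (1, 2):
    for c in (2, 3):
        image[r][c] = 10

print(laser_spot_centroid(image))
```

Because the centroid averages over all pixels above the threshold, it can return a sub-pixel position, which is one reason to prefer it over simply taking the single brightest pixel.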

We designed a surgical navigation technology named iPASS, which could eliminate the drawbacks of current surgical navigation systems, and submitted our design to the BMEStart Competition. Surgical navigation is not a new technology, and the photoacoustic effect is not a new phenomenon. The iPASS platform, however, is the first surgical system that combines the two into one powerful and, most importantly, easy-to-use next-generation technology. Perhaps one of the most desirable features of the iPASS technology is its easy integration, with a minimal learning curve, into a surgical setting. The entire registration process takes less than a second to perform, whereas calibration and registration of EM and optical navigation systems can take from several minutes to an hour in some cases. Additionally, the only training the medical staff would require is a one-hour online laser safety course.

The iPASS platform technology goes beyond basic surgical tracking. The iPASS system is also capable of providing real-time video overlay of anatomical features of interest onto the patient to help guide the surgeon through a specific procedure. One application of this feature is laparoscopic partial nephrectomy, in which the surgeon is interested in finding and excising a tumor. With the help of real-time video overlay, the surgeon can pinpoint the incision spot and guide the surgical instrument to scoop out the tumor while avoiding critical anatomy (arteries, etc.) and minimizing loss of healthy tissue.

iPASS is referred to as a “platform technology” not only because of its tracking ability or its video-overlay application, but because it contains several other features that are critical in many procedures. For example, the iPASS platform is capable of performing temperature imaging, which is common in tumor ablations, because it already contains an integrated laser source as well as an ultrasound system. The ultrasound system can record changes in temperature (the speed of sound changes with heat) while the surgeon moves the laser around to remove the tumor. Other potential applications include molecular imaging, thermal imaging, and elastography. The iPASS platform can therefore not only perform basic surgical navigation (with added precision and without the problems of current EM and optical systems), but also has the potential to replace numerous surgical devices with redundant functions and to drive down the costs associated with purchasing and maintaining them.

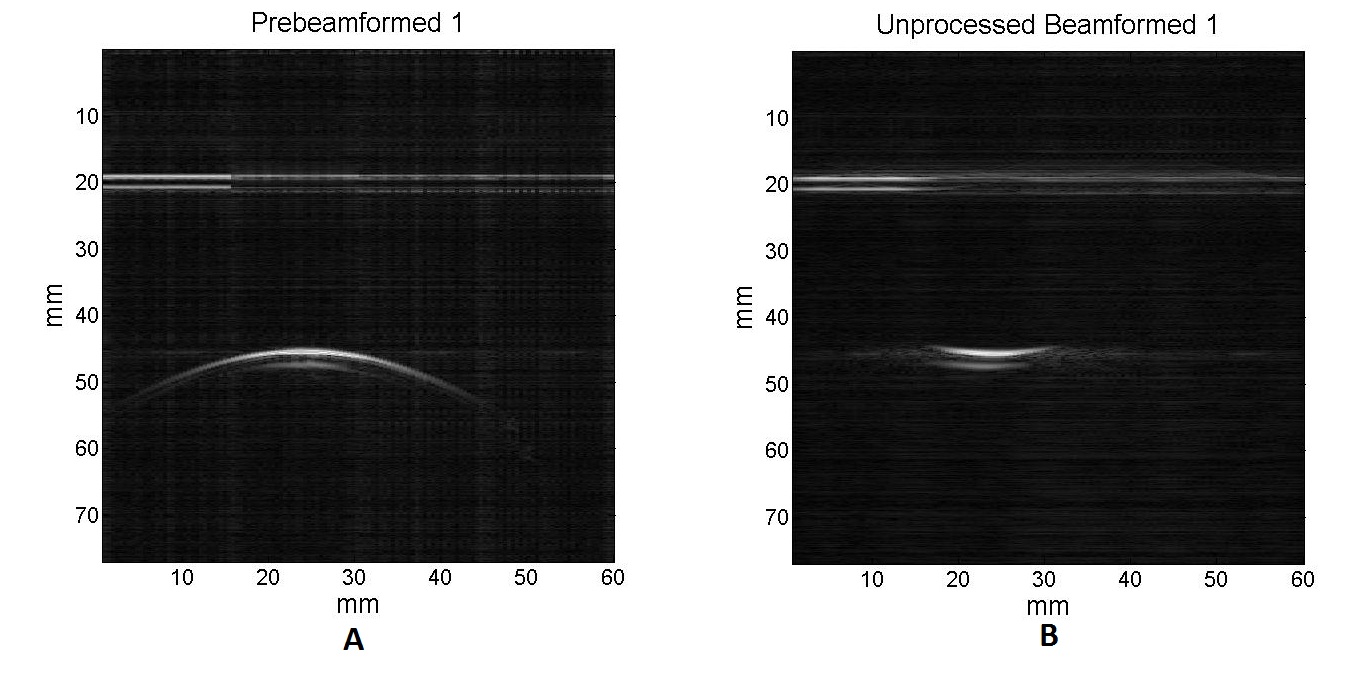

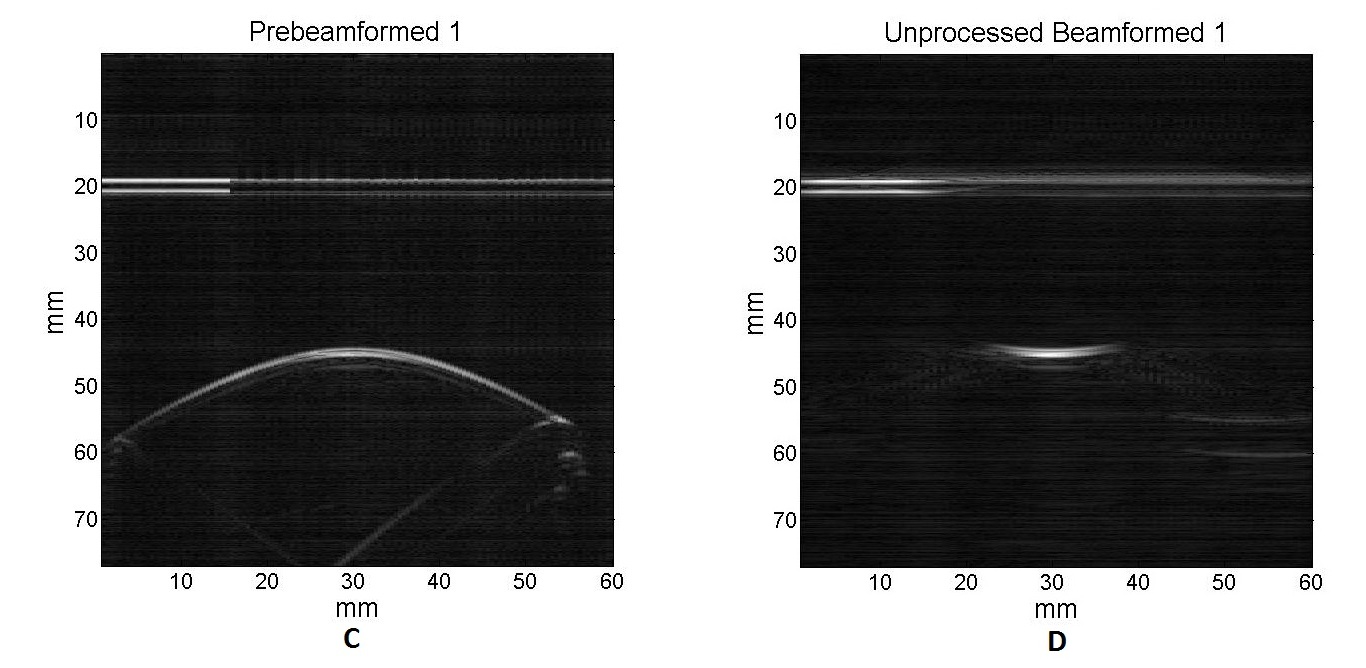

For the one-point experiments, the photoacoustic waves were detected using the phantom with either 532 nm or 1064 nm wavelength laser. The results are shown below:

A and B are the pre-beamformed and beamformed images, respectively, acquired with a 532 nm wavelength laser source. C and D are the pre-beamformed and beamformed images, respectively, acquired with a 1064 nm wavelength laser source.

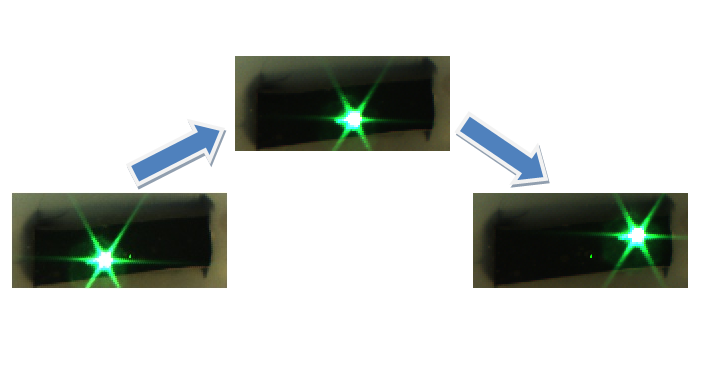

A single laser point was projected onto the phantom at three different locations sequentially and the stereocamera image at each location was acquired as shown below:

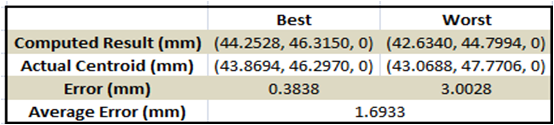

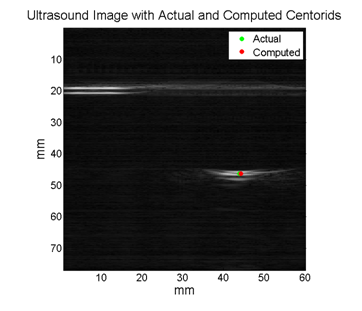

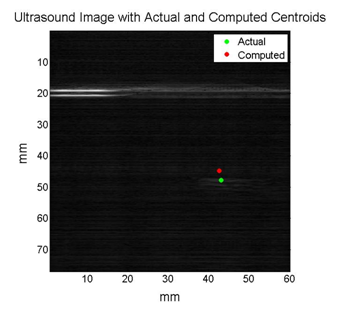

2D ultrasound images of the phantom and the laser point at each location were acquired simultaneously, and the rigid registration from the camera domain to the ultrasound domain was performed. To verify the registration, a single unknown laser point was projected onto the phantom, and the coordinates of the point in the ultrasound coordinate system were compared to the coordinates computed from the registration. Laser points projected onto the upper right corner and the lower left corner of the phantom were used for the verification process. The registration performed best when the upper right corner laser point was used for the verification and worst when the lower left corner laser point was used. The table summarizes the results of the registration, and the figures below show the computed and the actual coordinates of the verification points.

We were able to perform the point cloud transformation between stereocamera and ultrasound domains using the photoacoustic effect. Therefore, we have achieved the maximum deliverable which was to integrate a stereocamera and perform the point cloud transformation. However, we have encountered several technical problems.

For the three-point experiment, we planned to project three laser points onto the phantom at the same time instead of projecting them one by one sequentially. Unfortunately, we did not have the right equipment (mirror mounts, optical fibers, etc.) to split the laser into three beams. In addition, when the laser was projected through an optical fiber, its power was reduced.

Our registration was overall very accurate, but it can be improved further by calibrating the stereocamera and employing more points for the registration.
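
To illustrate how more points could be used, here is a minimal least-squares sketch. It is restricted to 2D point sets for brevity (the 3D analogue is commonly solved with an SVD-based method), and it is not the method used in this project. The function names `fit_rigid_2d` and `rms_residual` and all data are hypothetical. With more than the minimum number of correspondences, the fit averages out noise in individual point detections.

```python
import math

def fit_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src points onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    s_dot = s_cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        # Work with coordinates relative to each set's centroid.
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation carries the rotated source centroid onto the destination centroid.
    t = (dx - (c * sx - s * sy), dy - (s * sx + c * sy))
    return theta, t

def rms_residual(src, dst, theta, t):
    """Root-mean-square distance between transformed src points and dst points."""
    c, s = math.cos(theta), math.sin(theta)
    total = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        px = c * ax - s * ay + t[0]
        py = s * ax + c * ay + t[1]
        total += (px - bx) ** 2 + (py - by) ** 2
    return math.sqrt(total / len(src))

# Synthetic data: four points rotated by 0.3 rad and shifted by (5, -3).
src = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 1.0)]
c0, s0 = math.cos(0.3), math.sin(0.3)
dst = [(c0 * x - s0 * y + 5.0, s0 * x + c0 * y - 3.0) for x, y in src]

theta, t = fit_rigid_2d(src, dst)
print(theta, t, rms_residual(src, dst, theta, t))
```

The RMS residual over the fitting points only indicates how well the model fits those points. Accuracy at other locations is better judged with separate verification points, as in the experiment above.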

There appears to be a problem running these functions on the Mac OS X version of MATLAB; they were tested on a Windows platform. To ensure an error-free experience, please run these programs only on Microsoft Windows with MATLAB version R2009b or later.

Please download the “FINAL CODE” zip file below. Unzip the file, then open Readme.txt and follow the directions.