Last updated: April 4th, 2017


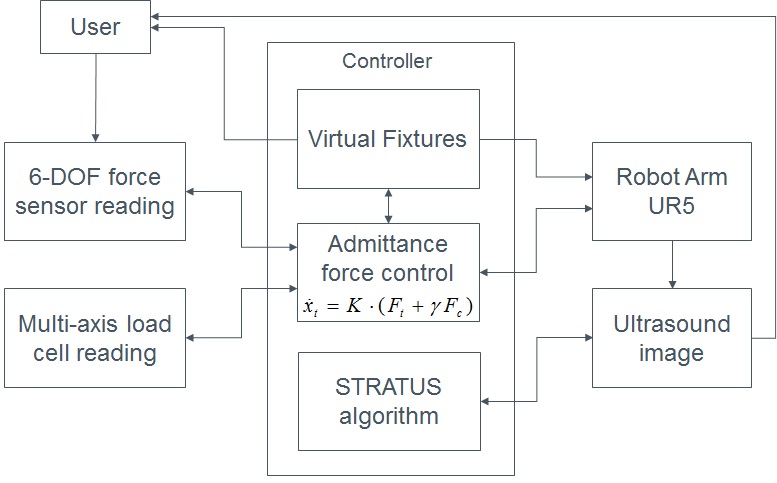

Sonographers commonly suffer from musculoskeletal pain and repetitive strain injuries because ultrasound scanning often requires applying a large force against the patient. With obese patients, pregnant women, or imaging of deep organs in particular, a large contact force is unavoidable for obtaining a clear, diagnosable ultrasound image. To overcome this challenge, this project uses a cooperatively controlled robotic arm, the UR5, to help the operator apply force while also improving image stability (Figure 1). Moreover, the robotic system enables the implementation of synthetic tracked aperture ultrasound (STRATUS) imaging, which improves the resolution of ultrasound images (Figure 2).


Background

In many clinical situations, sonographers have to apply a large force against the patient to acquire a clear, diagnosable ultrasound (US) image, especially when scanning obese patients or pregnant women, or when imaging deep organs. Because these demands are common, sonographers often suffer from musculoskeletal pain and repetitive strain injuries. To overcome this challenge, we use a robotic arm to assist the sonographer during the US scanning procedure. This project was started in 2015 by Rodolfo Finocchi, Dr. Russ Taylor and Dr. Emad Boctor; I, Ting-Yun Fang, joined the project in 2016 and began by improving the previous control algorithm and the mechanical design of the US device (Figure 3). The co-robotic US imaging system consists of a robotic arm (UR5), a US machine and probe, a 6 degree-of-freedom (DOF) force sensor and a 1-DOF load cell. The dual force sensors allow the robot to distinguish the force applied by the sonographer from the actual contact force between the US probe and the tissue. Our experiments show that the co-robotic system can reduce the force applied by the user while improving US image stability.

The co-robotic US system is also used for the synthetic tracked aperture ultrasound (STRATUS) imaging algorithm. STRATUS uses the robotic arm to track and actuate the US probe and then transforms the US images into a common coordinate frame. Summing the US signals in this common frame yields an image with better resolution, which is beneficial for diagnosis. The algorithm can be applied in both the lateral and elevational directions and has the potential to perform 3D object scanning.

Project Aims

The co-robotic US imaging system has two valuable features: 1) it reduces the force applied by the user and improves the stability of the US images; 2) it enables the STRATUS algorithm and increases the image resolution. This project aims to integrate the two applications and make the system fit better into practical clinical use. Combining cooperative control with virtual fixtures lets the operator move the robot and apply the STRATUS algorithm to specific regions of interest. The virtual fixtures constrain the motion of the robot and user so that the probe moves in a fixed direction. The ultimate goal is to implement real-time STRATUS that generates US images with better resolution during a scan. Moreover, to cover general clinical practice, the contact force between probe and tissue must be measured along three directions. For example, in echocardiography the US probe is first pushed against the middle of the chest and then reoriented to find a preferable view. Replacing the current 1-DOF load cell with a multi-axis force sensor extends force measurement to the other axes and increases the robot's dexterity.

Significance

With the co-robotic US imaging system, we want to make the fullest use of the robot arm. The system has the potential to assist US scanning procedures in multiple clinical applications.


1. Admittance robot control

2. STRATUS algorithm

3. Virtual fixtures

The general idea of a virtual fixture is to solve for Δq (the joint increment) in the linear least-squares system:

where Δq is the desired incremental motion of the joint variables, and Δxd and Δx are the desired and the computed incremental motions of the task variables in Cartesian space, respectively. J is the Jacobian matrix relating task space to joint space. W is a diagonal matrix of weights; the weights corresponding to different components must be scaled properly. Δt is the small time interval of the control loop, which is ignored in the algorithm.
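With the symbols defined above, the optimization is commonly written in the following constrained least-squares form. This is a sketch assembled from those definitions; the inequality constraint H Δx ≥ h is an assumption about how the matrices H and h used in the rest of this section enter the problem.

```latex
\Delta q_{\mathrm{des}}
  = \arg\min_{\Delta q}
    \left\| W \left( \frac{\Delta x}{\Delta t} - \frac{\Delta x_d}{\Delta t} \right) \right\|
\quad \text{subject to} \quad
H \, \Delta x \ge h, \qquad \Delta x = J \, \Delta q
```

Each virtual fixture then only has to supply its own H and h; the objective stays the same.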

There are multiple kinds of geometric constraints. The first one we applied is stay at a point, in which the robot maintains the tool position at a desired location.

where ϵ1 is a small positive number that defines the size of the region that can be considered as the target. It implies that the projection of δp + Δxp on every line of the pencil through Pt must be less than ϵ1. We approximate the sphere of radius ϵ1 by a polyhedron having n×m vertices, and rewrite the equation as a group of linear inequalities.

Then we can set H and h as:

Larger values of n and m reduce the size of the polyhedron and bring it closer to the inscribed sphere.
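The polyhedral approximation can be sketched in Python as follows. This is a minimal illustration, not the project's implementation: it assumes a 6-element task vector Δx ordered as [translation, rotation], a constraint of the form H Δx ≥ h, a position error δp (current tool position minus target), and sample directions at angles αi = 2πi/n and βj = 2πj/m. The function names are hypothetical.

```python
import math


def stay_at_point_constraints(delta_p, eps1, n=8, m=8):
    """Build H (n*m x 6) and h (n*m) so that H @ dx >= h approximates
    ||delta_p + dx_p|| <= eps1 by bounding n*m directional projections.

    delta_p : assumed position error (current minus target), length 3
    eps1    : radius of the target region
    """
    H, h = [], []
    for i in range(n):
        alpha = 2.0 * math.pi * i / n
        for j in range(m):
            beta = 2.0 * math.pi * j / m
            # Unit direction on the sphere for this (alpha, beta) pair.
            d = (math.cos(alpha) * math.cos(beta),
                 math.cos(alpha) * math.sin(beta),
                 math.sin(alpha))
            # d . (delta_p + dx_p) <= eps1  <=>  -d . dx_p >= -eps1 + d . delta_p
            H.append([-d[0], -d[1], -d[2], 0.0, 0.0, 0.0])
            h.append(-eps1 + sum(dk * pk for dk, pk in zip(d, delta_p)))
    return H, h


def satisfies(H, h, dx, tol=1e-12):
    """Return True if every row of H @ dx >= h holds (within tol)."""
    return all(sum(a * b for a, b in zip(row, dx)) >= hi - tol
               for row, hi in zip(H, h))
```

For example, with δp = (0.01, 0, 0) and ϵ1 = 0.005, a step that moves the tool back onto the target satisfies all rows, while a zero step does not.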

Most of the virtual fixtures, such as stay on line and stay in plane, have the robot maintain the tool direction, using the constraint:

where ϵ2 is a small positive number that defines the size of the range that can be considered as the desired direction. Similar to stay at point, we set H and h as
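By analogy with the stay-at-point case, one plausible form of the direction constraint and its matrices is sketched below. It assumes that δr denotes the orientation error, that Δxr is the rotational part of Δx, and that the same angles αi and βj are used to sample directions; none of these are confirmed by the text above.

```latex
\left\| \delta_r + \Delta x_r \right\| \le \epsilon_2

H = -\begin{bmatrix}
0 & 0 & 0 & \cos\alpha_1\cos\beta_1 & \cos\alpha_1\sin\beta_1 & \sin\alpha_1 \\
\vdots & \vdots & \vdots & \vdots & \vdots & \vdots \\
0 & 0 & 0 & \cos\alpha_n\cos\beta_m & \cos\alpha_n\sin\beta_m & \sin\alpha_n
\end{bmatrix},
\qquad
h = -\begin{bmatrix} \epsilon_2 \\ \vdots \\ \epsilon_2 \end{bmatrix} - H\,\delta
```

Here δ stacks the position and orientation errors, so that H Δx ≥ h is equivalent to bounding each sampled projection of δr + Δxr by ϵ2.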

During ultrasound imaging, a virtual fixture that allows the tool tip to move only along a line can improve the imaging quality of STRATUS. To guide the tool along a reference line, the user must specify that line. The default options include moving along the X tool axis and the Y tool axis. The linear system has to satisfy the inequality:

where up is the projection of the vector δp + Δxp onto the plane perpendicular to the line L, and ϵ3 is a small positive number that defines the distance error tolerance to the reference line. To determine up, a rotation matrix is generated from two unit vectors v1 and v2 that span the plane, and by defining an arbitrary vector l:

Using the information of the plane, we can rewrite the inequality as

And the H and h matrices will be:
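A minimal Python sketch of the stay-on-line construction is given here. It is an illustration under stated assumptions rather than the project's code: the line direction is given as a vector, v1 and v2 are built with cross products against a helper axis chosen by this sketch, the circle of radius ϵ3 in the perpendicular plane is approximated by m directions, and the constraint takes the form H Δx ≥ h on a 6-element Δx. All function names are hypothetical.

```python
import math


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v):
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)


def plane_basis(line_dir):
    """Two orthonormal vectors spanning the plane perpendicular to line_dir."""
    l = _normalize(line_dir)
    # Helper axis that is not aligned with the line (choice made by this sketch).
    helper = (1.0, 0.0, 0.0) if abs(l[0]) < 0.9 else (0.0, 1.0, 0.0)
    v1 = _normalize(_cross(l, helper))
    v2 = _cross(l, v1)  # unit length because l and v1 are orthonormal
    return v1, v2


def stay_on_line_constraints(delta_p, line_dir, eps3, m=16):
    """Build H, h so that H @ dx >= h approximates ||u_p|| <= eps3, where
    u_p is the projection of delta_p + dx_p on the plane normal to the line."""
    v1, v2 = plane_basis(line_dir)
    H, h = [], []
    for j in range(m):
        beta = 2.0 * math.pi * j / m
        # In-plane unit direction for this polygon edge.
        d = [math.cos(beta) * a + math.sin(beta) * b for a, b in zip(v1, v2)]
        H.append([-d[0], -d[1], -d[2], 0.0, 0.0, 0.0])
        h.append(-eps3 + sum(dk * pk for dk, pk in zip(d, delta_p)))
    return H, h


def satisfies(H, h, dx, tol=1e-12):
    """Return True if every row of H @ dx >= h holds (within tol)."""
    return all(sum(a * b for a, b in zip(row, dx)) >= hi - tol
               for row, hi in zip(H, h))
```

Motion along the line direction leaves every row unchanged, so only the component perpendicular to the line is limited by ϵ3.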

To confine the tool in a given plane, the system must satisfy the inequality:

where ϵ5 is a small positive number, which defines the range of error tolerance. Then H and h are set as
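One simple way to express a stay-in-plane fixture is sketched below in Python. It assumes the constraint is a two-sided bound |n · (δp + Δxp)| ≤ ϵ5 on the signed distance to the plane, with n the unit plane normal and δp the position error measured from a point on the plane. This form, and the function names, are assumptions of the sketch.

```python
import math


def stay_in_plane_constraints(delta_p, normal, eps5):
    """Build H (2 x 6) and h (2) so that H @ dx >= h enforces
    -eps5 <= n . (delta_p + dx_p) <= eps5 for the unit normal n."""
    norm = math.sqrt(sum(c * c for c in normal))
    n = [c / norm for c in normal]
    offset = sum(nk * pk for nk, pk in zip(n, delta_p))
    H = [
        [-n[0], -n[1], -n[2], 0.0, 0.0, 0.0],  # upper bound: n.(dp+dx) <= eps5
        [n[0], n[1], n[2], 0.0, 0.0, 0.0],     # lower bound: n.(dp+dx) >= -eps5
    ]
    h = [-eps5 + offset, -eps5 - offset]
    return H, h


def satisfies(H, h, dx, tol=1e-12):
    """Return True if every row of H @ dx >= h holds (within tol)."""
    return all(sum(a * b for a, b in zip(row, dx)) >= hi - tol
               for row, hi in zip(H, h))
```

In-plane motion is unrestricted by these two rows; only displacement along the normal is bounded.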

The values of ϵi,i=1,⋯,5 define the range of the error tolerance. They specify how much the robot can drift away from the reference constraints.
