
RGBD Camera Integration into Camera Augmented Mobile C-arm

Last updated: Apr 9 at 5:38PM

Summary

- Students: Han Xiao

- Mentor(s): Dr. Nassir Navab, Bernhard Fuerst, Javad Fotouhi

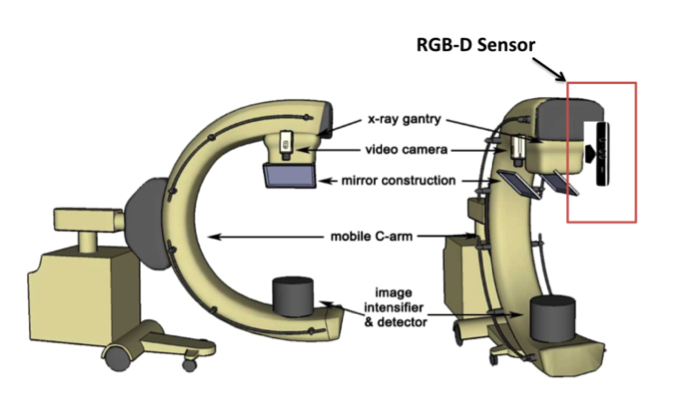

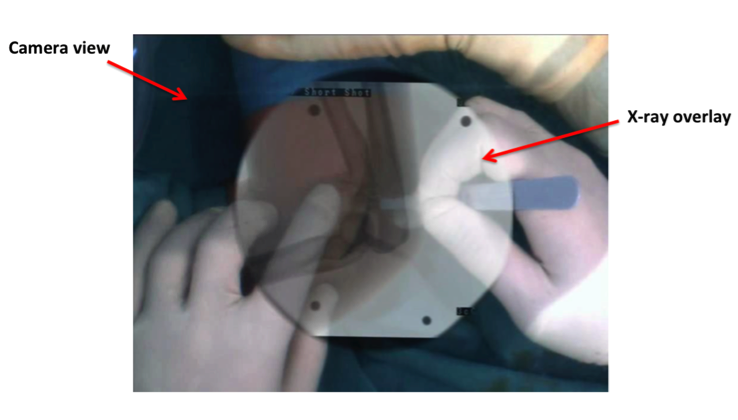

Camera Augmented Mobile C-arm (CamC) is a system that fuses X-ray images with real-time video. The X-ray image overlay provides an intuitive, highly accurate interface for surgical guidance and can be used in many trauma and orthopedic surgeries. Fig. 1 shows the view the current system provides. The overlay is based on 2D-3D vision calibration. Because the image lacks depth information, the current system cannot determine the spatial relationships between the surgeon's hands, the tools, and the targets. As a result, the surgeon's hands and tools are partially covered by the X-ray overlay.


Our goal is to integrate a depth sensor (Kinect sensor) into the CamC system to overcome this limitation. We can then segment hands and tools using the depth data and create an enhanced X-ray overlay that does not block them. This will give a more intuitive view of the spatial relationships between targets, hands, and tools.

Deliverables

- Minimum: (March 27)

- ImFusion plugin for X-ray image acquisition, and CCD camera video acquisition.

- Kinect sensor mounting and point cloud acquisition.

- X-ray image – video calibration, and video – point cloud registration.

- Expected: (April 17)

- Enhanced X-ray overlay rendering.

- Maximum: (May 1)

- Phantom validation and surgical procedure evaluation.

- Add more useful overlays according to depth information.

Technical Approach

The mechanical setup for the Kinect sensor is illustrated in Fig. 2.

We will mount the Kinect sensor on the side of the X-ray gantry using 3D-printed mounts. The Kinect should be about 1 meter from the image intensifier, which leaves sufficient space between the sensor and the objects being scanned. The Kinect will be positioned so that it covers most of the area around the image intensifier (see Fig. 3). After mounting the Kinect sensor, we will perform the following steps to complete the integration.
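As a rough check of what "most of the area" means at this distance, the footprint a sensor sees at distance d is 2·d·tan(FOV/2) along each axis. The sketch below assumes the nominal Kinect v1 depth field of view of about 57° horizontal by 43° vertical; these numbers are an assumption and should be checked against the sensor actually mounted.

```python
import math

def coverage(distance_m, hfov_deg, vfov_deg):
    """Width and height (m) of the rectangle a pinhole sensor sees at distance_m."""
    width = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    height = 2.0 * distance_m * math.tan(math.radians(vfov_deg) / 2.0)
    return width, height

# Assumed nominal Kinect v1 depth FOV (57 x 43 degrees) at the planned ~1 m stand-off.
w, h = coverage(1.0, 57.0, 43.0)
print(f"{w:.2f} m x {h:.2f} m")  # prints "1.09 m x 0.79 m"
```

Under these assumptions, a footprint of roughly 1.1 m by 0.8 m at 1 m is comfortably larger than the region around a typical image intensifier, which is consistent with the planned mounting distance.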

- Distortion correction: We will use a nonlinear radial distortion model and compute a lookup table for fast distortion correction for the video camera.

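- $X_{un} = X_{dist} + D$, where $X_{un}, X_{dist} \in \mathbb{R}^2$ are the undistorted and distorted points on the image.

- $D = X_{dist}\,(k_1 r^2 + k_2 r^4 + \cdots + k_n r^{2n})$, a polynomial function of the distortion coefficients $k_i$, where $r$ is the distance of $X_{dist}$ from the distortion center.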

- The coefficients are computed using calibration techniques with a calibration object.
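One way to build the lookup table from this model is to invert it once per output pixel. The sketch below assumes pixel coordinates are normalised by a chosen `scale` (for example a focal length in pixels) around a known distortion center, and inverts $X_{un} = X_{dist}(1 + k_1 r^2 + \cdots)$ by fixed-point iteration. The function names and the nearest-neighbour resampling are illustrative choices, not the project's actual implementation.

```python
import numpy as np

def radial_factor(x, y, k):
    """1 + k_1 r^2 + k_2 r^4 + ... + k_n r^(2n) at normalised coordinates (x, y)."""
    r2 = x * x + y * y
    factor = np.ones_like(r2)
    r_pow = np.ones_like(r2)
    for k_i in k:
        r_pow = r_pow * r2            # r^(2i) for the i-th coefficient
        factor = factor + k_i * r_pow
    return factor

def build_undistortion_lut(width, height, center, scale, k, iterations=20):
    """For each pixel of the corrected image, the (x, y) position to sample in the distorted image.

    Inverts X_un = X_dist * (1 + sum_i k_i r^(2i)), with r measured on X_dist, by
    fixed-point iteration; this typically converges for mild camera distortion."""
    cx, cy = center
    xs, ys = np.meshgrid(np.arange(width, dtype=np.float64),
                         np.arange(height, dtype=np.float64))
    xu, yu = (xs - cx) / scale, (ys - cy) / scale
    xd, yd = xu.copy(), yu.copy()     # initial guess: no distortion
    for _ in range(iterations):
        f = radial_factor(xd, yd, k)
        xd, yd = xu / f, yu / f
    return xd * scale + cx, yd * scale + cy

def undistort(image, map_x, map_y):
    """Resample the distorted image through the LUT (nearest neighbour; out-of-range pixels become 0)."""
    h, w = image.shape[:2]
    xi = np.rint(map_x).astype(int)
    yi = np.rint(map_y).astype(int)
    valid = (xi >= 0) & (xi < w) & (yi >= 0) & (yi < h)
    out = np.zeros_like(image)
    out[valid] = image[yi[valid], xi[valid]]
    return out
```

The table depends only on the calibration, so it can be computed once at start-up; each video frame then costs a single indexed lookup. Bilinear interpolation would give smoother results than nearest neighbour at a small extra cost.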

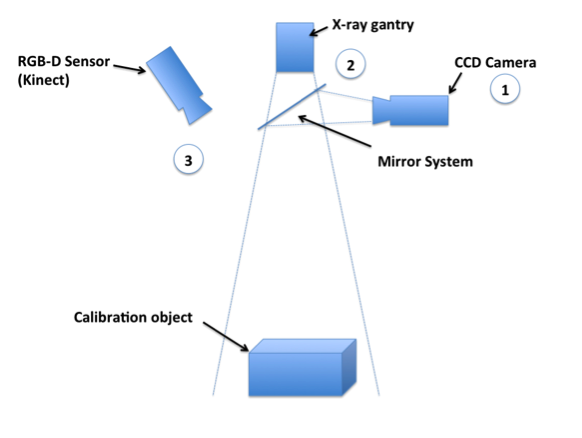

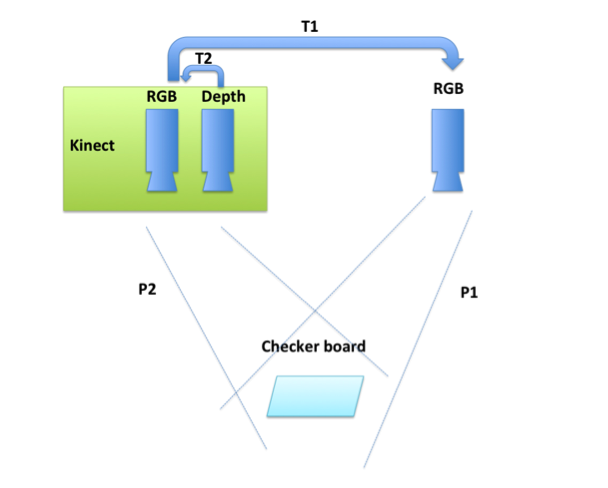

- Kinect and camera calibration: We will use the checkerboard calibration technique to calibrate the Kinect and the CCD camera in the following steps (see Fig. 4).

- Scan a checkerboard in multiple positions with the Kinect and the CCD camera from two different views, and extract interest points on the checkerboard. From this information, we compute the projection matrices P1 and P2 (Fig. 4) of the CCD camera and the Kinect sensor. Based on the projection matrices, we can compute the transformation T1 from the Kinect RGB camera to the CCD camera. The Kinect depth and RGB data are already co-registered by OpenNI (T2 in Fig. 4).

- Finally, we can transform the depth data from the Kinect frame to the CCD camera frame. We will also need to optimize our calibration algorithms to minimize occlusion and preserve depth information.
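Assuming P1 and P2 are both expressed in the same checkerboard (world) frame, T1 can be recovered by splitting each projection matrix into intrinsics and pose, P ≃ K[R | t], and chaining the two poses. The sketch below does this with an RQ decomposition built on NumPy's QR. It also assumes that T2 maps Kinect depth-frame points into the Kinect RGB frame. All function names are hypothetical, and in practice several checkerboard positions would be combined and the result refined rather than relying on a single view.

```python
import numpy as np

def rq(M):
    """RQ decomposition M = U @ O (U upper triangular, O orthogonal) via numpy's QR."""
    J = np.fliplr(np.eye(3))                 # exchange (row-reversal) matrix
    Q, R = np.linalg.qr((J @ M).T)
    return J @ R.T @ J, J @ Q.T

def decompose_projection(P):
    """Split a 3x4 projection matrix P ~ K [R | t] into intrinsics K, rotation R, translation t."""
    P = np.asarray(P, dtype=float)
    if np.linalg.det(P[:, :3]) < 0:          # P is defined up to scale; pick the sign giving det(R) = +1
        P = -P
    K, R = rq(P[:, :3])
    D = np.diag(np.sign(np.diag(K)))         # force a positive diagonal on K (D @ D = I)
    K, R = K @ D, D @ R
    t = np.linalg.solve(K, P[:, 3])
    return K / K[2, 2], R, t

def rigid(R, t):
    """4x4 homogeneous transform from rotation R and translation t."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def kinect_rgb_to_ccd(P_ccd, P_kinect_rgb):
    """T1 = T_ccd<-board @ inv(T_kinect<-board); assumes both P share one checkerboard frame."""
    _, R_c, t_c = decompose_projection(P_ccd)
    _, R_k, t_k = decompose_projection(P_kinect_rgb)
    return rigid(R_c, t_c) @ np.linalg.inv(rigid(R_k, t_k))

def depth_to_ccd_pixels(points_depth, T2, T1, K_ccd):
    """Map Nx3 depth-frame points into the CCD frame (T1 @ T2) and project them with K_ccd."""
    pts = np.hstack([points_depth, np.ones((len(points_depth), 1))])
    pts_ccd = (T1 @ T2 @ pts.T).T[:, :3]
    uvw = pts_ccd @ K_ccd.T
    return uvw[:, :2] / uvw[:, 2:3], pts_ccd[:, 2]   # pixel coordinates, depth in the CCD frame
```

`kinect_rgb_to_ccd` would run once per calibration, while `depth_to_ccd_pixels` runs for every depth frame; the returned z values give depth as seen from the CCD camera.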

- Enhanced X-ray overlay: After successful calibration, we will have depth data co-registered to the CCD camera view. We will develop an enhanced X-ray overlay in the following steps:

- Take an X-ray image and at the same time store the depth mesh of the phantom as a static reference.

- Track the depth video stream and detect depth changes.

- Compare the current depth to the reference, and render the overlay according to the spatial relationships.
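These steps can be sketched as a per-pixel depth comparison followed by masked blending. This minimal sketch assumes the reference and current depth maps are already registered to the CCD pixel grid, are in millimetres with 0 marking missing Kinect measurements, and that the X-ray image has been warped into the same view. The 20 mm threshold, the alpha value and the function names are hypothetical.

```python
import numpy as np

def foreground_mask(reference_depth, current_depth, threshold_mm=20.0):
    """True where something (e.g. a hand or tool) is now closer to the sensor than the stored reference."""
    valid = (reference_depth > 0) & (current_depth > 0)      # assumption: 0 = no depth measurement
    closer = (reference_depth - current_depth) > threshold_mm
    return valid & closer

def enhanced_overlay(video_rgb, xray_gray, mask, alpha=0.5):
    """Alpha-blend the X-ray into the video everywhere except at foreground (masked) pixels."""
    video = video_rgb.astype(np.float64)
    xray = np.repeat(xray_gray[..., None], 3, axis=2).astype(np.float64)
    blended = (1.0 - alpha) * video + alpha * xray
    out = np.where(mask[..., None], video, blended)          # foreground keeps the plain video
    return np.clip(np.rint(out), 0, 255).astype(video_rgb.dtype)  # assumes 8-bit images
```

Pixels where a hand or tool rises more than the threshold above the stored phantom surface show plain video, so the X-ray no longer covers them. Given the noise in Kinect depth, a morphological clean-up of the mask would likely be needed in practice.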

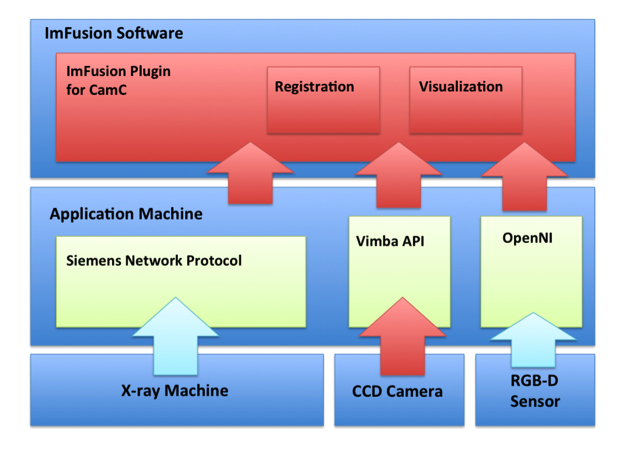

- Software architecture: We are developing software for CamC on top of ImFusion in this project. The block diagram is shown in Fig. 5.

- Application machine: A fast PC runs software communicating with multiple devices.

- Siemens network protocol: Software developed by Siemens that dumps new X-ray images as raw files to a directory on the application machine.

- Vimba API: API used to communicate with CCD Camera.

- OpenNI: Open-source software for natural interaction devices, used to communicate with the Kinect device.

- ImFusion: Software framework for medical image processing and computer vision.

- The red blocks are the components we need to develop; the other blocks are existing tools we rely on. Ideally, the result will be an ImFusion plugin for a new CamC system.
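Because the Siemens component only drops raw files into a directory, the acquisition side of the plugin has to notice new files and decode them. A minimal polling sketch follows. The class name, the headerless little-endian 16-bit layout and the fixed image size are assumptions for illustration, not the documented Siemens format.

```python
import os
import numpy as np

class RawXrayWatcher:
    """Poll a directory for newly written raw X-ray frames.

    Hypothetical file format: headerless, row-major pixels of a fixed
    width x height, little-endian uint16 by default."""

    def __init__(self, directory, width, height, dtype="<u2"):
        self.directory = directory
        self.shape = (height, width)
        self.dtype = np.dtype(dtype)
        self.expected_bytes = width * height * self.dtype.itemsize
        self.seen = set(os.listdir(directory))   # files present at start-up are ignored

    def poll(self):
        """Return (filename, image) pairs for complete files not returned before."""
        new_images = []
        for name in sorted(os.listdir(self.directory)):
            path = os.path.join(self.directory, name)
            if name in self.seen or not os.path.isfile(path):
                continue
            if os.path.getsize(path) < self.expected_bytes:
                continue                         # probably still being written; retry next poll
            self.seen.add(name)
            pixels = np.fromfile(path, dtype=self.dtype,
                                 count=self.shape[0] * self.shape[1])
            new_images.append((name, pixels.reshape(self.shape)))
        return new_images
```

Calling `poll()` from a timer (for example every 100 ms) and passing each new image to the overlay stage keeps the design simple; a file-system notification API could replace polling if latency matters.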

Dependencies

* PC and remote control of C-arm application machine

- I have already obtained a fast PC for this project in Malone Hall. I also need help from Bernhard to set up remote control of the C-arm application machine in the Mock OR from this PC.

- Expected resolve date: February 20

- Resolved

* Kinect sensor and its mounting supports

- I got a Kinect sensor from the CAMP lab. Bernhard Fuerst has already designed and ordered the Kinect mount, which should arrive on February 20. I will then work with Bernhard Fuerst and Javad Fotouhi to find the best mounting position for the Kinect.

- Expected resolve date: March 6

- Resolved

* ImFusion source code for point cloud data

- I need the ImFusion source code for point cloud acquisition. My mentor has already contacted the company and asked for a version for CAMP researchers.

- Expected resolve date: February 27

- Resolved

* Registration and calibration tools

- I need to get a calibration checkerboard and calibration objects from the CAMP group. I will first decide on the calibration method and then discuss it in the weekly meeting.

- Expected resolve date: March 4

- Resolved

* Animal tissue specimen and phantoms

- After developing the new system, I need to validate it with phantoms. I will get animal tissue specimens from the CAMP group.

- Expected resolve date: April 22

- To be resolved

Milestones and Status

- Milestone name: Finish developing ImFusion plugin for X-ray image and video acquisition.

- Expected Date: February 27

- Status: Achieved

- Milestone name: Kinect mounted on the C-arm and point cloud data acquired from ImFusion.

- Expected Date: March 6

- Status: Achieved

- Milestone name: Kinect point cloud and video are registered; X-ray image and video are registered

- Expected Date: March 27

- Status: Achieved April 4

- Milestone name: An enhanced overlay developed

- Expected Date: April 17

- Status: Achieved April 30

- Milestone name: Finish animal tissue specimen validation and evaluation

- Expected Date: May 1

- Status: Not started

- Milestone name: Final poster presentation

- Expected Date: May 6

- Status: Achieved

Reports and presentations

- Project Plan

- Project Background Reading

- Project Checkpoint

- Paper Seminar Presentations

- Project Final Presentation

- Project Poster Presentation

- Project Final Report


Project Bibliography


- Navab, Nassir, S-M. Heining, and Joerg Traub. “Camera augmented mobile C-arm (CAMC): calibration, accuracy study, and clinical applications.” Medical Imaging, IEEE Transactions on 29.7 (2010): 1412-1423.

- Navab, Nassir, A. Bani-Kashemi, and Matthias Mitschke. “Merging visible and invisible: Two camera-augmented mobile C-arm (CAMC) applications.” Augmented Reality, 1999 (IWAR '99) Proceedings. 2nd IEEE and ACM International Workshop on. IEEE, 1999.

- Traub, Joerg, et al. “Workflow based assessment of the camera augmented mobile c-arm system.” AMIARCS, New York, NY, USA. MICCAI Society (2008).

- Wang, Lejing, et al. “Long bone x-ray image stitching using camera augmented mobile c-arm.” Medical Image Computing and Computer-Assisted Intervention–MICCAI 2008. Springer Berlin Heidelberg, 2008. 578-586.

- Zhang, Zhengyou. “A flexible new technique for camera calibration.” Pattern Analysis and Machine Intelligence, IEEE Transactions on 22.11 (2000): 1330-1334.

Other Resources and Project Files
