
Project IX: Big Data Meets Medical Physics Dosimetry

Last updated: May 9, 2014 at 1:00pm

Summary

This project’s goal is to apply “Big Data” analytic techniques to create one or more toxicity risk models. Current oncological radiotherapy planning accounts for neither the internal structures within organs nor other available data, such as knowledge gained from treating other patients or the current patient’s health records.

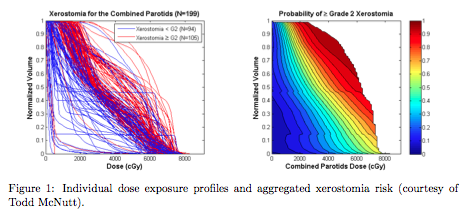

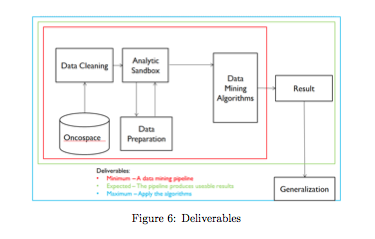

The project entails applying data analytics to Oncospace, a database developed by the Johns Hopkins Hospital’s Radiation Oncology Department. Oncospace contains a diverse set of clinical data (Figure 2); by applying “Big Data” machine learning techniques to Oncospace, we hope to develop a data-driven model for assessing toxicity risk.

- Students: Fumbeya Marungo, Hilary Paisley, John Rhee

- Mentor(s): Todd McNutt, Scott Robertson

Background, Specific Aims, and Significance

Medical physicists in oncology dosimetry design and assess treatment plans for radiation therapy. By planning the location and intensity of radiation doses, the dosimetrist seeks to destroy malignant cells while minimizing the risk of toxicities (side effects) caused by damage to healthy tissue.

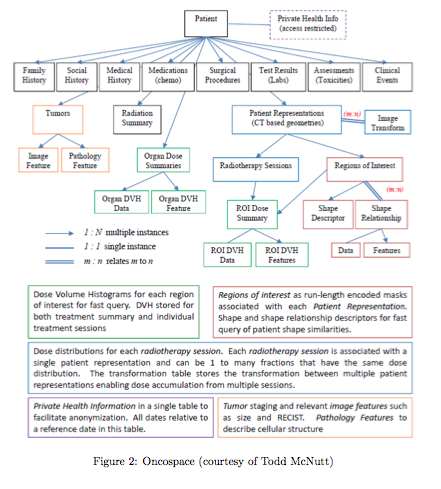

The standard approach to treatment planning uses a dose-volume histogram (DVH). The left chart in Figure 1 displays a collection of DVHs, each curve corresponding to a different plan. Each point on a curve gives the percentage of a given organ’s volume that received at least a given dose of ionizing radiation, so curves further to the right correspond to plans in which larger fractions of the organ receive higher doses. The right chart in Figure 1 presents the risk of a given toxicity derived from the DVHs.
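To make the DVH definition concrete, here is a minimal sketch that computes a cumulative DVH from a flat list of per-voxel doses. It assumes every voxel in the organ has the same volume; the function name `cumulative_dvh` and the dose values are illustrative and are not taken from Oncospace.

```python
from typing import List, Sequence


def cumulative_dvh(voxel_doses: Sequence[float], dose_levels: Sequence[float]) -> List[float]:
    """For each dose level, return the percentage of the organ volume
    receiving at least that dose (assumes equal-volume voxels)."""
    if not voxel_doses:
        raise ValueError("organ must contain at least one voxel")
    n = len(voxel_doses)
    return [100.0 * sum(1 for d in voxel_doses if d >= level) / n for level in dose_levels]


# Hypothetical voxel doses in Gy for one organ (toy data, not patient data).
doses = [5.0, 12.0, 20.0, 26.0, 30.0, 41.0, 48.0, 55.0]
levels = [0.0, 10.0, 20.0, 30.0, 40.0, 50.0]
print(cumulative_dvh(doses, levels))  # → [100.0, 87.5, 75.0, 50.0, 37.5, 12.5]
```

Plotting these percentages against the dose levels gives one curve of the kind shown in the left chart of Figure 1; a plan that delivers higher doses to more of the organ shifts the curve to the right.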

Kutcher et al. (1991) present estimates of toxicity derived from DVHs, based on survey data from Emami et al. (1991). The models underlying Kutcher et al. (1991) and Emami et al. (1991) make a number of simplifying assumptions, however. Each organ or volume of interest is considered uniform, so internal structures play no part in the risk assessment. In addition, no information that may be available in the patient’s health record, such as family history, alcohol use, or previous surgery, is included. While the limitations of the DVH approach are well recognized, they are difficult to address. Moreover, as more patients survive treatment, the need to address toxicity risks becomes more acute (Bentzen et al., 2010).
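Kutcher et al. (1991) reduce a DVH to a single effective value so that the Lyman (1985) complication-probability curve can be applied; this combination is commonly called the Lyman-Kutcher-Burman (LKB) model. The sketch below writes it in the generalized equivalent uniform dose (gEUD) form, which is mathematically equivalent to the effective-volume reduction. It assumes equal-volume voxels, and the TD50, m, and n values in the example are hypothetical placeholders, not fitted values for any organ.

```python
import math
from typing import Sequence


def geud(voxel_doses: Sequence[float], n: float) -> float:
    """Generalized equivalent uniform dose with equal fractional volumes:
    gEUD = (sum_i v_i * D_i**(1/n))**n, where v_i = 1/N."""
    if not voxel_doses or n <= 0:
        raise ValueError("need at least one voxel and n > 0")
    v = 1.0 / len(voxel_doses)
    return sum(v * d ** (1.0 / n) for d in voxel_doses) ** n


def lkb_ntcp(voxel_doses: Sequence[float], td50: float, m: float, n: float) -> float:
    """LKB normal tissue complication probability: Phi((gEUD - TD50) / (m * TD50))."""
    t = (geud(voxel_doses, n) - td50) / (m * td50)
    # Standard normal CDF written with the error function.
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


# Hypothetical parameters; with n = 1 the gEUD equals the mean dose (25 Gy here).
print(lkb_ntcp([10.0, 20.0, 30.0, 40.0], td50=25.0, m=0.4, n=1.0))  # → 0.5
```

With n close to 1 the gEUD approaches the mean dose (a "parallel" organ); small n weights the hottest voxels most heavily (a "serial" organ). Because the whole organ collapses into one number, voxels receiving the same dose contribute identically wherever they lie, which is exactly the uniform-organ simplification described above.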

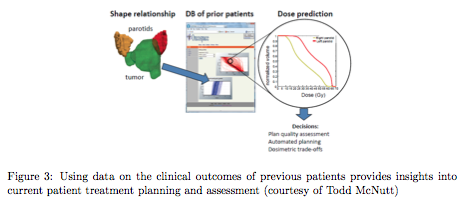

There are a number of toxicities associated with radiation therapy. By mining Oncospace, we aim to apply knowledge learned from previous patients’ outcomes to create more effective and safer treatment plans (see Figure 3).

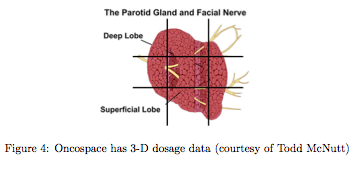

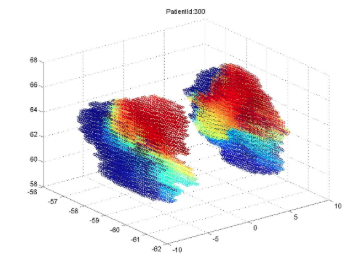

In the case of xerostomia, for example, irradiation of the parotid gland leads to severe dry mouth. Worse still, xerostomia tends not to resolve. Figure 4 illustrates that the parotid gland is highly complex; however, the gland is modeled as a single uniform volume. This leads to the simplified, two-dimensional risk assessment shown in Figure 1.

A successful risk model offers several benefits. It can provide guidance for dosimetrists assessing plans, serve as a component within an automated dosage tool, and provide greater insight into the sensitivity of different regions of healthy tissue to irradiation. Ultimately, the work can provide patients with safer, more effective plans.

We have successfully analyzed the database and represented the data graphically. We can now relate the dose received by each voxel to the corresponding patient’s toxicity outcome. The image below is a graphical representation of one patient’s parotid glands: red indicates higher radiation doses and blue indicates lower doses.

There is a corresponding image for each patient, each with a different dose grid. This spatial dose information will serve as the features for the data mining algorithm. We are now at the stage of implementing the data mining algorithm, specifically a Random Forest.
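Using each voxel’s dose as a feature requires every patient’s dose grid to be expressed on the same set of voxels. The sketch below assumes the grids have already been resampled onto a common, organ-aligned grid (an idealization, since deformable registration is listed as future work) and flattens each 3-D grid into one row of a feature matrix paired with a binary toxicity label. The names `PatientRecord` and `build_feature_matrix` are hypothetical and are not part of Oncospace.

```python
from dataclasses import dataclass
from typing import List, Tuple

Grid = List[List[List[float]]]  # dose in Gy, indexed [z][y][x]


@dataclass
class PatientRecord:
    patient_id: str
    dose_grid: Grid  # assumed already resampled to a common grid
    toxicity: int    # 1 if the toxicity (e.g. xerostomia) was recorded, else 0


def flatten(grid: Grid) -> List[float]:
    """Flatten a 3-D dose grid into a single feature vector."""
    return [dose for plane in grid for row in plane for dose in row]


def build_feature_matrix(records: List[PatientRecord]) -> Tuple[List[List[float]], List[int]]:
    """Return (X, y): one row of voxel doses per patient and the matching labels."""
    X = [flatten(r.dose_grid) for r in records]
    width = len(X[0]) if X else 0
    if any(len(row) != width for row in X):
        raise ValueError("dose grids must share a common shape")
    y = [r.toxicity for r in records]
    return X, y


# Two hypothetical patients on a tiny 1 x 2 x 2 grid.
records = [
    PatientRecord("A", [[[1.0, 2.0], [3.0, 4.0]]], 0),
    PatientRecord("B", [[[5.0, 6.0], [7.0, 8.0]]], 1),
]
X, y = build_feature_matrix(records)
print(X)  # → [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
```

The resulting X and y can then be passed to a classifier such as a Random Forest (Breiman, 2001). Neighbouring voxels tend to receive similar doses, so these features are strongly correlated, which is the setting examined by Tolosi et al. (2011).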

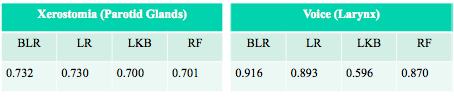

After running the Random Forest algorithm, we were not entirely satisfied with the results: the Lyman-Kutcher-Burman (LKB) model performed better. We therefore applied linear regression to the data and found that it performed better than both the Random Forest and the LKB models, with and without bagging. We compared the models by calculating the area under the ROC curve (ROC AUC) using leave-one-out cross-validation. We also had time to complete our maximum deliverable by testing our pipeline and data mining approach on the larynx. The results are as follows.
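The model comparison described above can be sketched as follows. This is a hedged illustration, not the project’s Matlab pipeline: for brevity it uses a single hypothetical summary feature per patient (for example, mean organ dose) instead of the full voxel vector, ordinary least-squares linear regression as the scorer, bagging as an average of regressions fit on bootstrap resamples, and leave-one-out validation that pools the held-out scores into one ROC AUC. All function names and the toy data are illustrative.

```python
import random
from typing import Callable, List, Sequence

Predictor = Callable[[float], float]


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC via the Mann-Whitney statistic: the fraction of (positive, negative)
    pairs in which the positive case scores higher (ties count as 1/2)."""
    pos = [s for s, lab in zip(scores, labels) if lab == 1]
    neg = [s for s, lab in zip(scores, labels) if lab == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def fit_linear(x: List[float], y: List[int]) -> Predictor:
    """Ordinary least squares for y ~ a + b*x; returns the fitted predictor."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx if sxx > 0 else 0.0  # flat fit if all x values are identical
    a = my - b * mx
    return lambda v: a + b * v


def fit_bagged_linear(x: List[float], y: List[int], n_models: int = 25, seed: int = 0) -> Predictor:
    """Bagging: average the predictions of linear fits on bootstrap resamples."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(len(x)) for _ in range(len(x))]  # sample with replacement
        models.append(fit_linear([x[i] for i in idx], [y[i] for i in idx]))
    return lambda v: sum(m(v) for m in models) / len(models)


def leave_one_out_auc(x: List[float], y: List[int],
                      fit: Callable[[List[float], List[int]], Predictor]) -> float:
    """Hold out each patient in turn, train on the rest, score the held-out
    patient, then compute one ROC AUC over all held-out scores."""
    scores = []
    for i in range(len(x)):
        model = fit(x[:i] + x[i + 1:], y[:i] + y[i + 1:])
        scores.append(model(x[i]))
    return auc(scores, y)


# Hypothetical toy data: one summary dose feature (Gy) and a toxicity label per patient.
mean_dose = [12.0, 18.0, 22.0, 25.0, 30.0, 34.0, 38.0, 45.0]
toxicity = [0, 0, 0, 1, 0, 1, 1, 1]
print("linear:", leave_one_out_auc(mean_dose, toxicity, fit_linear))
print("bagged linear:", leave_one_out_auc(mean_dose, toxicity, fit_bagged_linear))
```

A single held-out patient cannot produce an AUC on its own, which is why the held-out scores are pooled before computing it. Any model exposed as a `fit(x, y)` function returning a predictor, such as an LKB or Random Forest wrapper, could be compared through the same `leave_one_out_auc` call.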

Future work will include testing our pipeline on other organs; deformable registration of the organ region, which may improve results; further data collection to improve the performance of the data mining algorithms; and, ultimately, a clinical interpretation of the results for personalized patient radiation treatment.

Deliverables

- Minimum: (April 20, 2014)

  - One or more data mining algorithms, callable from Matlab, that accept a treatment plan and additional clinical data and output a risk measure for a specific toxicity. ✔

  - Software that cleans and transforms data from Oncospace into a format acceptable to the algorithm. ✔

  - Performance assessment of the algorithm. ✔

- Expected: (April 25, 2014)

  - Includes Minimum Deliverables ✔

  - Algorithm meets acceptable performance levels. ✔

- Maximum: (May 5, 2014)

  - Includes Expected Deliverables ✔

  - Generalize the process to create a risk measure for one or more additional toxicities. ✔

Technical Approach

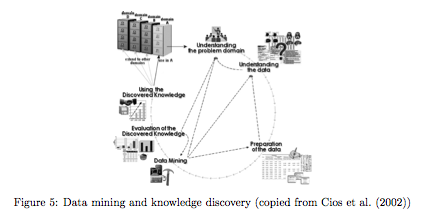

Fayyad et al. (1996) presents data mining and knowledge discovery as a nine-step process:

1. Understanding the application domain.

2. Creating a target data set.

3. Data cleansing and preprocessing.

4. Data reduction and transformation.

5. Choosing a data mining task (e.g. clustering, classification, or regression).

6. Choosing an algorithm.

7. Data mining using the algorithm.

8. Evaluating the results of the data mining step (e.g. visualization).

9. Consolidating the discovered knowledge.

We intend to apply this approach, with the toxicity risk model representing the final step of consolidating the results. While the process described by Fayyad et al. (1996) appears linear, it is best visualized as an iterative, interactive process: there is a central activity stream, but work frequently revisits previous steps (see Figure 5).

The project plan anticipates that the early and late stages will require more input from the domain experts (the team’s mentors in this project). Early work will tend to move among the first four steps. As familiarity with the domain increases, work normally begins to cycle between data preparation and mining as the models are refined.

Dependencies

There are a number of critical dependencies that are necessary for the project’s progress and completion. These dependencies include:

• Remote read access to the Oncospace SQL database. ✔

• Remote read and write access to a separate SQL Server database and remote file directory for the Analytic Sandbox (see Section 6.1). ✔

• Access to the project’s mentors on a weekly to biweekly basis in person, and routinely via email. ✔

In addition to the critical dependencies, approved funding of up to $750 per team member can increase productivity and mitigate risks. For example, if the team identifies a need for particular books or software licenses, members can purchase them quickly rather than waiting for approval. Student software licenses are often inexpensive, and these low costs can yield faster results and/or further progress.

Change in Dependencies: We no longer need to purchase productivity-enhancing software licenses. Our project has been set up with open-source software and is progressing at the expected pace.

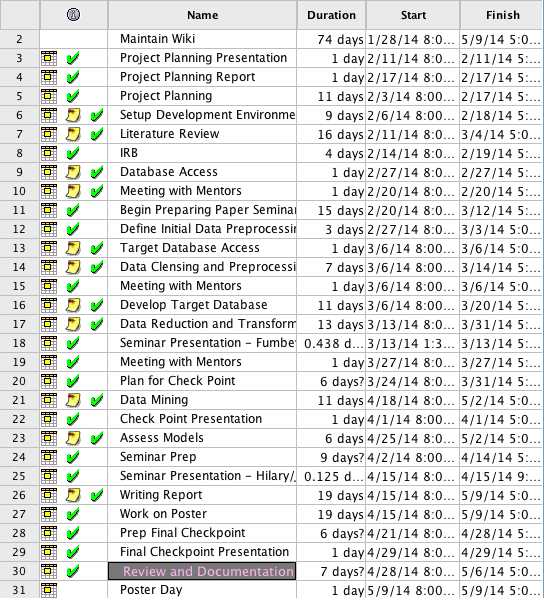

To avoid failure due to critical dependencies, the team maintains a project plan using the open-source ProjectLibre software. During meetings, the project’s Gantt charts provide visual guidance on the project’s current condition.

Milestones and Status

- Milestone name: Project Proposal Presentation

  - Planned Date: February 11, 2014

  - Expected Date: February 11, 2014

  - Status: Completed

- Milestone name: Project Proposal Plan

  - Planned Date: February 20, 2014

  - Expected Date: February 20, 2014

  - Status: Completed

- Milestone name: Paper Presentation - Fumbeya

  - Planned Date: March 6, 2014

  - Expected Date: March 13, 2014

  - Status: Completed

- Milestone name: Checkpoint Presentation

  - Planned Date: March 18, 2014

  - Expected Date: April 1, 2014

  - Status: Completed

- Milestone name: Paper Presentation - Hilary/John

  - Planned Date: March 6, 2014

  - Expected Date: April 15, 2014

  - Status: Completed

- Milestone name: Mini Checkpoint Presentation

  - Planned Date: April 29, 2014

  - Expected Date: April 29, 2014

  - Status: Completed

- Milestone name: Poster Session

  - Planned Date: May 9, 2014

  - Expected Date: May 9, 2014

  - Status: Completed

Reports and presentations

- Project Plan

- Project Background Reading

  - See Bibliography below for links.

- Project Checkpoint

- Paper Seminar Presentations

  - Fumbeya Marungo: fumbeyareport.pdf, fumbeyaslides.pdf

  - Hilary Paisley: seminar_paper.pdf, seminar_presentation.pdf

  - John Rhee

    - Paper presentation: https://jshare.johnshopkins.edu/jrhee17/public_html/jrhee17_papersummary.pptx

- Project Mini Checkpoint

- Project Final Presentation

- Project Final Report

Project Bibliography

Bentzen, S. M., Constine, L. S., Deasy, J. O., Eisbruch, A., Jackson, A., Marks, L. B., Ten Haken, R. K., & Yorke, E. D. (2010). Quantitative analysis of normal tissue effects in the clinic (QUANTEC): An introduction to the scientific issues. International Journal of Radiation Oncology, Biology, Physics, 76(3), S3–S9.

Breiman, L. (2001). Random forests. Machine Learning, 45(1), 5–32.

Brooks Jr, F. P. (1995). The Mythical Man-Month, Anniversary Edition: Essays on Software Engineering. Pearson Education.

Burman, C., Kutcher, G., Emami, B., & Goitein, M. (1991). Fitting of normal tissue tolerance data to an analytic function. International Journal of Radiation Oncology, Biology, Physics, 21(1), 123–135.

Cios, K. J., Kagadis, G. C., & Moore, G. W. (2002). Uniqueness of medical data mining. Artificial Intelligence in Medicine, 26, 1–24.

Emami, B., Lyman, J., Brown, A., Cola, L., Goitein, M., Munzenrider, J., Shank, B., Solin, L., & Wesson, M. (1991). Tolerance of normal tissue to therapeutic irradiation. International Journal of Radiation Oncology, Biology, Physics, 21(1), 109–122.

Fayyad, U., Piatetsky-Shapiro, G., & Smyth, P. (1996). From data mining to knowledge discovery in databases. AI Magazine, 17(3), 37.

Hall, M., Frank, E., Holmes, G., Pfahringer, B., Reutemann, P., & Witten, I. H. (2009). The WEKA data mining software: An update. ACM SIGKDD Explorations Newsletter, 11(1), 10–18.

Kazhdan, M., Simari, P., McNutt, T., Wu, B., Jacques, R., Chuang, M., & Taylor, R. (2009). A shape relationship descriptor for radiation therapy planning. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2009 (pp. 100–108). Springer.

Kutcher, G., Burman, C., Brewster, L., Goitein, M., & Mohan, R. (1991). Histogram reduction method for calculating complication probabilities for three-dimensional treatment planning evaluations. International Journal of Radiation Oncology, Biology, Physics, 21(1), 137–146.

Lyman, J. T. (1985). Complication probability as assessed from dose-volume histograms.

Schmarzo, B. (2013). Big Data: Understanding how Data Powers Big Business. John Wiley & Sons.

Tolosi, L., & Lengauer, T. (2011). Classification with correlated features: Unreliability of feature ranking and solutions. Bioinformatics, 27(14), 1986–1994.

Other Resources and Project Files
