Microsurgical Assistant System

Overview of Research

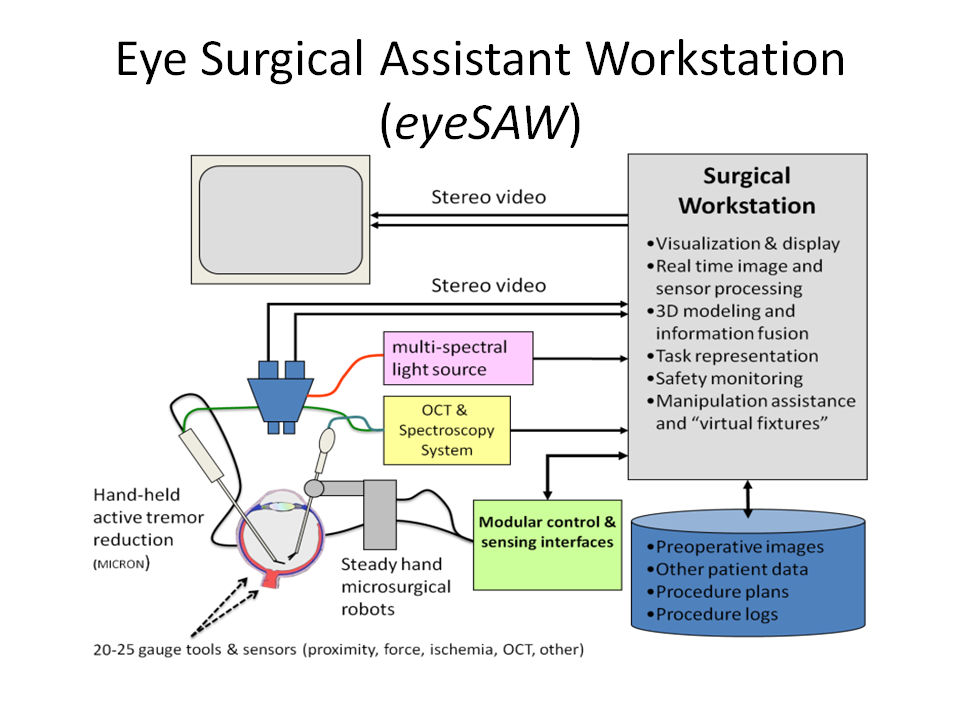

Our research aims to develop techniques to enhance the surgeon's ability to perform surgeries safely and effectively. We mainly focus on combining the various functions of microsensors, high precision robots, software design, and novel surgical techniques with the ordinary tools and equipment used by surgeons to perform new and innovative surgeries that will help to revolutionize medicine.

Target Applications

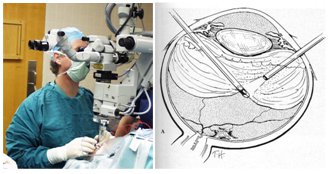

We chose vitreoretinal eye surgery as our target research area. Vitreoretinal surgery addresses prevalent sight-threatening conditions such as retinal detachment, macular pucker, macular holes, and conditions that require removal of epiretinal scar tissue. In current practice, retinal surgery is performed under an operating microscope with free-hand 20-25 gauge (0.5-0.9 mm) instrumentation. The patient is often awake and locally anesthetized. In most cases, three incisions in the sclera (sclerotomies) are required: one for infusion to control the intraocular pressure, and two for working instruments. Generally, there are two illumination sources: one is coupled into the microscope optical path, and the other is delivered through an intraocular fiber-optic “light pipe”. Surgeons often operate bimanually, with the light pipe in one hand and a forceps, laser, vitreous-cutting probe, fragmenter, aspiration tool, or another instrument in the other hand. These independent devices are often integrated into a surgical system and controlled through a common user interface.

Often, in order to access the retina, the vitreous, a gel-like substance in the center of the eye, is slowly removed with a cutting-suction instrument in a procedure called vitrectomy. This is a difficult task because the vitreous may be attached to the delicate retina. Once the vitreous is removed, the surgeon proceeds to treat any of a variety of possible retinal dysfunctions. A laser coagulation device may be used to “tack” down a detached retina or to isolate a retinal tear, preventing the tear from developing further. The surgeon may also need to peel scar tissue from the surface of the retina to remove the tension responsible for macular pucker (retinal “wrinkles”). A more difficult maneuver is peeling the finer internal limiting membrane, which is 2-3 µm thick and nearly invisible.

Another task is retinal vein cannulation, where the surgeon delivers medication directly into a blood vessel inside the eye. This task has the potential to address sight-threatening blood clots and to enable localized treatments in the future. However, this maneuver is currently extremely difficult and too risky.

System Architecture

Robotic Assistants

Eye Robots

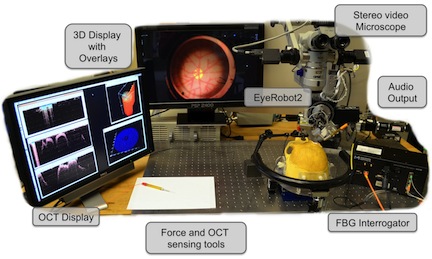

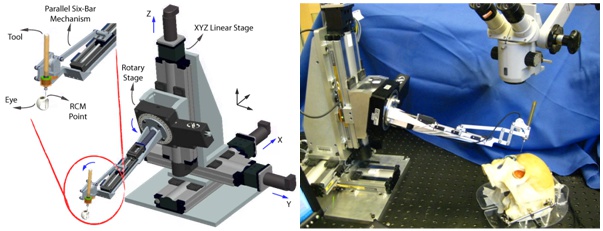

The Steady-Hand Eye Robot is a cooperatively controlled robotic assistant. In cooperative control, the surgeon and the robot both hold the surgical tool; the robot senses the forces exerted by the surgeon on the tool handle and moves to comply with very high precision, inherently filtering out physiological hand tremor. In retinal microsurgery, the tools typically pivot at the sclera insertion point, unless the surgeon wants to move the eyeball. This pivot point may be enforced either mechanically, by a constrained remote center-of-motion (RCM) mechanism as in EyeRobot2, or in software, as in EyeRobot1.
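
Cooperative control of this kind is often realized as an admittance law, in which the commanded tool velocity is proportional to the force sensed at the handle. The following Python sketch illustrates the idea only; the function name, gain, deadband, and speed limit are illustrative assumptions and not the Eye Robot's actual controller or parameters.

```python
def admittance_velocity(handle_force, gain=0.5, deadband=0.1, v_max=5.0):
    """Map a handle force vector (N) to a commanded tool velocity (mm/s).

    gain, deadband, and v_max are hypothetical values chosen for illustration.
    """
    velocity = []
    for f in handle_force:
        # Forces inside the deadband are ignored so sensor noise does not move the tool.
        if abs(f) <= deadband:
            velocity.append(0.0)
            continue
        # Velocity is proportional to the force in excess of the deadband.
        sign = 1.0 if f > 0 else -1.0
        v = sign * gain * (abs(f) - deadband)
        # Clamp each axis to a conservative speed limit.
        velocity.append(max(-v_max, min(v_max, v)))
    return velocity
```

Because the robot only moves at a bounded speed in response to deliberate, sustained force, a low gain makes the tool feel stiff and steady in the surgeon's hand.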

MICRON

The MICRON system, developed at Carnegie Mellon University, is designed to actively remove involuntary hand tremor, but it can also be used as a precise, high-speed, hand-held robotic manipulator. The manipulator has a ~1 cm diameter tubular handle that is easily grasped by a human operator. The distal end has a transversely mounted triangular plane that holds three piezoelectric stacks actuating the end-effector platform. The actuation of each stack is mechanically amplified to increase both the range of motion and the end-effector velocity. The end-effector probe is 30 mm long, mounted to the platform, and aligned coaxially with the handle. The positions of the handle and the end-effector platform are tracked by an optical position measuring system (ASAP) at 2 kHz with 4 µm accuracy. ASAP uses active LEDs, three on the end-effector platform and one on the handle, and provides full 6DOF tracking of the tool pose and handle location for feedback control. The configuration allows very fast three-degree-of-freedom (3DOF) positioning of the probe tip relative to the handle, with 1 N force capability and 100 Hz bandwidth in a work volume of approximately 1000 x 1000 x 400 µm. The location of the probe tip is established using a standard pivot calibration. The system operates at 2 kHz with insignificant latency. A CISST-based API has been developed for standardized system interoperability.
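
One simple way to picture active tremor removal is to low-pass filter the measured tool position to estimate the intended motion, then command the tip to offset by the difference, limited to the manipulator's work volume. The Python sketch below follows that idea under stated assumptions: the first-order filter, the 1.5 Hz cutoff, and the class name are hypothetical and are not taken from the MICRON controller; only the 2 kHz rate and the work volume come from the description above.

```python
import math

SAMPLE_RATE_HZ = 2000.0                  # ASAP update rate quoted above
HALF_RANGE_UM = (500.0, 500.0, 200.0)    # half of the ~1000 x 1000 x 400 µm work volume


class TremorCompensator:
    """Estimate intended motion with a first-order low-pass filter (an assumption)."""

    def __init__(self, cutoff_hz=1.5):
        # cutoff_hz is a hypothetical value separating voluntary motion from tremor.
        dt = 1.0 / SAMPLE_RATE_HZ
        rc = 1.0 / (2.0 * math.pi * cutoff_hz)
        self.alpha = dt / (rc + dt)
        self.intended = None

    def update(self, measured_um):
        """Return the tip offset (µm per axis) that cancels the estimated tremor."""
        if self.intended is None:
            self.intended = list(measured_um)
        offsets = []
        for i, p in enumerate(measured_um):
            # Exponential smoothing tracks the slow, voluntary component.
            self.intended[i] += self.alpha * (p - self.intended[i])
            # The offset moves the tip from the measured to the intended position.
            error = self.intended[i] - p
            # Saturate at the edge of the actuator's range of motion.
            limit = HALF_RANGE_UM[i]
            offsets.append(max(-limit, min(limit, error)))
        return offsets
```

The saturation step matters in practice: a hand-held device can only cancel motion that fits inside its small work volume, so large deliberate movements pass through to the tip.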

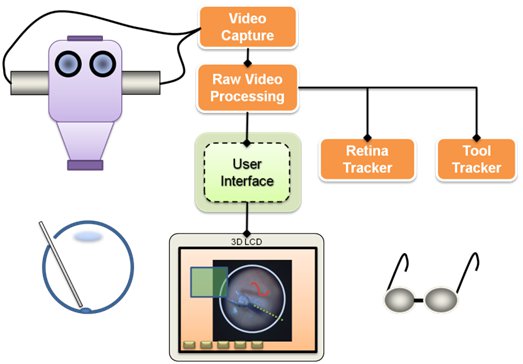

Visualization

Visualization is one of the main components of the system. It relies on video microscopy, 3D display technology, and our own high-performance stereo video software library, the CISST Stereovision library. We are able to process and display full-frame-rate video with minimal latency. The research in this area includes tool tracking, background tracking, information fusion via overlays, and integration with other devices in the eye surgery system.

Smart Instruments

We are developing multi-functional surgical instruments that are able to sense their environment in real time. This information can be used for diagnostic purposes, communicated to the surgeon visually or aurally, and/or integrated into the control algorithms of our robotic assistants.

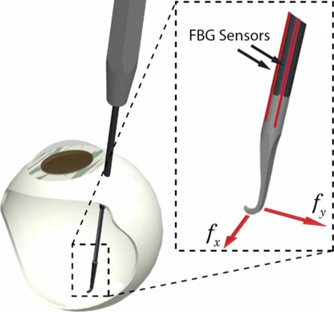

Micro-Force Sensing

One of the great engineering challenges of vitreoretinal surgery is the 20-25 gauge access ports and relatively distant location of the retina from those ports (~23mm). To deliver micro-force sensing inside of the eye, Fiber Bragg grating (FBG) sensors were chosen to achieve high resolution force measurements at the tip of a long thin tube. Bragg sensors consist of a grating formed inside of a photosensitive optical fiber by exposure to an intense optical interference pattern, which effectively creates a wavelength specific dielectric mirror inside of the fiber core. This characteristic Bragg wavelength shifts due to modal index or grating pitch change from physical deformation caused by strain or temperature change. FBGs can be incorporated into deformable structures to sense changes in force, pressure, and acceleration, with extremely high sensitivity. The fibers themselves have very small diameters (<160 μm), are immune to electrical or radio frequency noise, can be sterilized in various ways, and have excellent biocompatibility characteristics.
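
For reference, the sensing principle can be written with the standard textbook FBG relations. The symbols below are not defined in the original text and are introduced here as assumptions: $n_{\mathrm{eff}}$ is the effective (modal) refractive index, $\Lambda$ the grating pitch, $p_e$ the effective photo-elastic coefficient, $\varepsilon$ the axial strain, $\alpha_\Lambda$ and $\alpha_n$ the thermal expansion and thermo-optic coefficients, and $\Delta T$ the temperature change.

$$
\lambda_B = 2\, n_{\mathrm{eff}}\, \Lambda
$$

$$
\frac{\Delta\lambda_B}{\lambda_B} = (1 - p_e)\,\varepsilon + (\alpha_\Lambda + \alpha_n)\,\Delta T
$$

The first term is the strain response used for force sensing; the second is the temperature response that a force instrument must compensate for.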

Our team, led by Dr. Iordachita, has built a 2-axis hooked instrument that incorporates three optical fibers in a 0.5 mm diameter wire with a hooked tip. Based on the axial strain due to tool bending, the instrument senses forces at the tip in the transverse plane, with a sensitivity of 0.25 mN over the range of 0 mN to 60 mN. The design is theoretically temperature insensitive, but fabrication imperfections may allow temperature effects to become a factor. In that case, they can be minimized by proper calibration and biasing. The instrument can be used freehand or rigidly attached to the handle of the robot (e.g. EyeRobot2). Samples are acquired from the FBG interrogator at 2 kHz over a local TCP/IP network and processed by a custom C++ software application based on the SAW framework.
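
To illustrate why three fibers can yield a temperature-insensitive 2-axis measurement, the Python sketch below assumes an idealized model: the fibers are spaced 120° apart around the shaft, each responds linearly to bending, and temperature shifts all three wavelengths equally. The fiber angles, the sensitivity constant, and the function name are hypothetical; the real instrument relies on calibration rather than this closed-form model.

```python
import math

# Assumed fiber placement around the tool shaft (120 degrees apart).
FIBER_ANGLES = (0.0, 2.0 * math.pi / 3.0, 4.0 * math.pi / 3.0)
# Hypothetical bending sensitivity: wavelength shift (nm) per mN of transverse force.
SENSITIVITY_NM_PER_MN = 0.01


def transverse_force(shifts_nm):
    """Estimate (Fx, Fy) in mN from the three Bragg wavelength shifts (nm)."""
    # Subtracting the mean removes the common-mode shift caused by temperature.
    mean_shift = sum(shifts_nm) / 3.0
    differential = [s - mean_shift for s in shifts_nm]
    # For 120-degree spacing the sum of cos^2 (and of sin^2) over the fibers is 3/2,
    # so projecting onto each axis and scaling by 2/3 inverts the bending model.
    scale = 2.0 / (3.0 * SENSITIVITY_NM_PER_MN)
    fx = scale * sum(d * math.cos(a) for d, a in zip(differential, FIBER_ANGLES))
    fy = scale * sum(d * math.sin(a) for d, a in zip(differential, FIBER_ANGLES))
    return fx, fy
```

In this model a uniform temperature change adds the same offset to every fiber and drops out entirely, which matches the "theoretically temperature insensitive" behavior described above; unequal fiber responses are what calibration and biasing must correct.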

We are further developing a line of microsurgical instruments capable of measuring 1D, 2D, and 3D forces with sub-millinewton sensitivity.

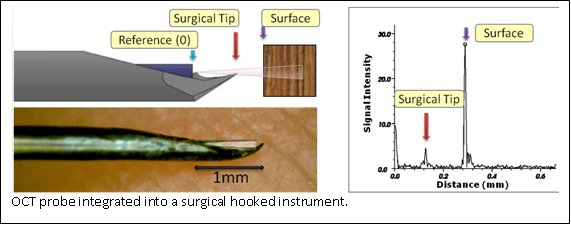

Optical Coherence Tomography

Optical Coherence Tomography (OCT) provides very high resolution (micron scale) images of anatomical structures within the tissue. Within Ophthalmology, OCT systems typically perform imaging through microscope optics to provide preoperative 2D cross-sectional images (“B-mode”) of the retina. These systems are predominantly used for diagnosis, treatment planning, and in a few cases, for optical biopsy and image guided laser surgery.

OCT imaging can be used as a range finder by extracting the distance from the probe’s reference to the first large peak in the A-scan, which generally represents the surface of the sample. The resulting real-time range information is used as a feedback parameter in a virtual fixture framework for cooperative robot control.
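
The range-finding step and its use as a safety constraint can be sketched as follows. This Python example is a simplified illustration, not the project's implementation: the function names, the fixed intensity threshold, the proportional velocity limit, and the choice to block advancing when no surface is detected are all assumptions.

```python
def surface_range_um(a_scan, axial_res_um, threshold):
    """Return the distance (µm) to the first local peak at or above threshold.

    a_scan is a list of intensities ordered by depth from the probe reference.
    Returns None when no qualifying peak is found.
    """
    for i in range(1, len(a_scan) - 1):
        is_peak = a_scan[i] >= a_scan[i - 1] and a_scan[i] > a_scan[i + 1]
        if is_peak and a_scan[i] >= threshold:
            return i * axial_res_um
    return None


def limit_approach_velocity(v_cmd, range_um, standoff_um, gain=1.0):
    """Virtual fixture: cap motion toward the surface so the tool stops at standoff_um.

    v_cmd is the commanded velocity along the tool axis (µm/s, positive = toward
    the surface). Retraction (negative velocity) is never restricted.
    """
    if range_um is None:
        # Assumption: with no surface detected, do not allow advancing.
        return min(v_cmd, 0.0)
    # Allowed approach speed shrinks linearly to zero at the standoff distance.
    v_allowed = max(0.0, gain * (range_um - standoff_um))
    return min(v_cmd, v_allowed)
```

For example, with a 150 µm standoff the tool may approach at up to 500 µm/s when the surface is 650 µm away, but it is held at zero approach velocity once the measured range is at or inside the standoff.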

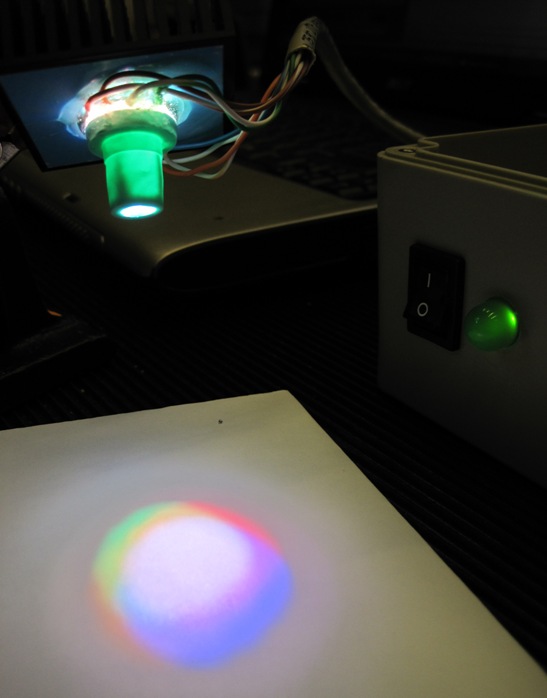

LED Light Source

It has been shown that retinal surgical procedures often incur phototoxicity trauma of the retina as a result of illuminators used in surgery. In answer to this problem, we have developed a computer-controlled multispectral LED light source that drastically reduces retinal exposure to toxic white light during surgery. The system achieves low phototoxic illumination by rapid illumination switching and by biasing illumination intensity towards low-phototoxic regions of the visible spectrum.

Phantoms

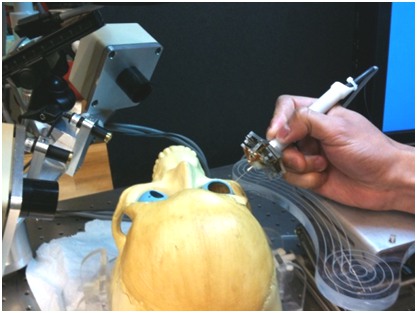

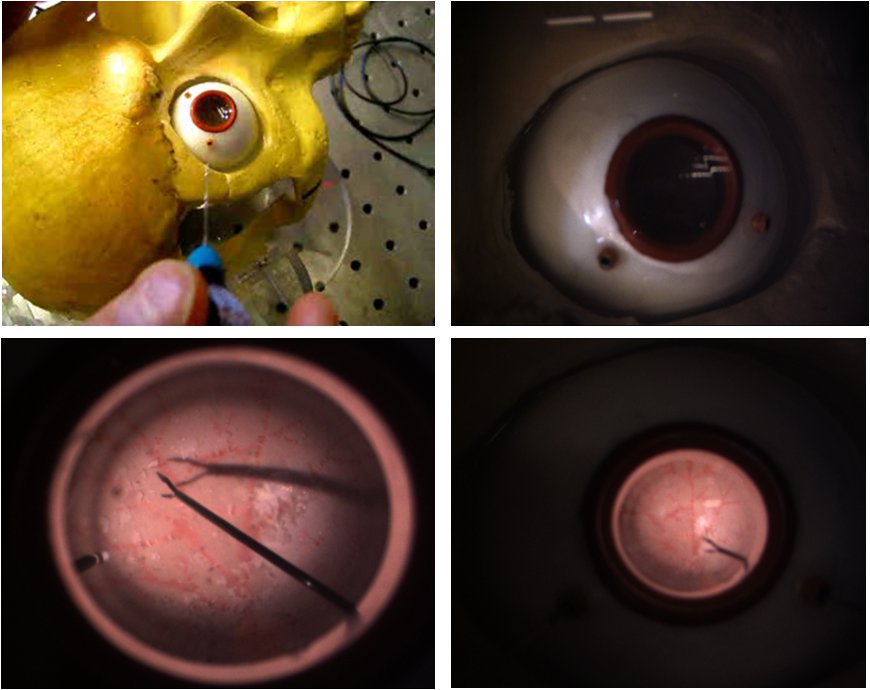

For controlled bench-top experimentation, a number of phantoms were developed to mimic the geometry and structure of the eye, as well as the behavior of epiretinal membranes (ERM).

Eye Ball Phantom

We have found that commercial eye models, although aesthetically pleasing, are not anatomically correct for the purpose of our experiments. We have developed a 24.5 mm diameter eye made of soft silicone rubber to mimic the sclera, with multiple inner layers bearing vascular patterns to resemble the retina. The inner layers are made of latex rubber and can accept a Liquid Bandage coating that resembles epiretinal membranes.

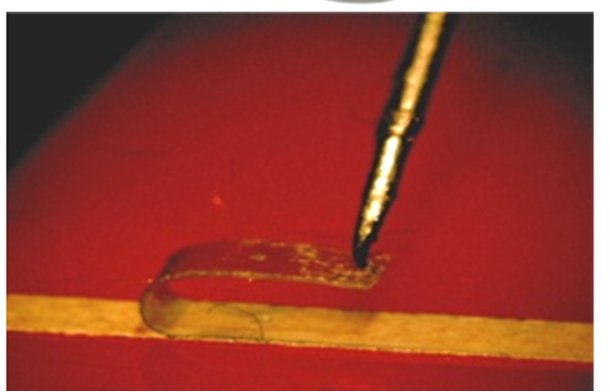

Peeling Phantom

To assess the performance of force-sensing instruments, we required a consistent and easily fabricated phantom model that would behave within the parameters of vitreoretinal surgery. The actual ERM peeling procedure involves grasping or hooking a tissue layer and slowly delaminating it, often in a circular pattern. To reduce the number of factors that need to be controlled, we simplified the target maneuver to a straight-line peel. After an extensive search and trial-and-error testing of many materials, we identified the sticky tabs from bandages as a suitable and repeatable phantom for delaminating. The bandage tab was sliced into 2 mm wide strips that can be peeled multiple times from their backing, with predictable behavior: peeling force increases with peeling velocity.

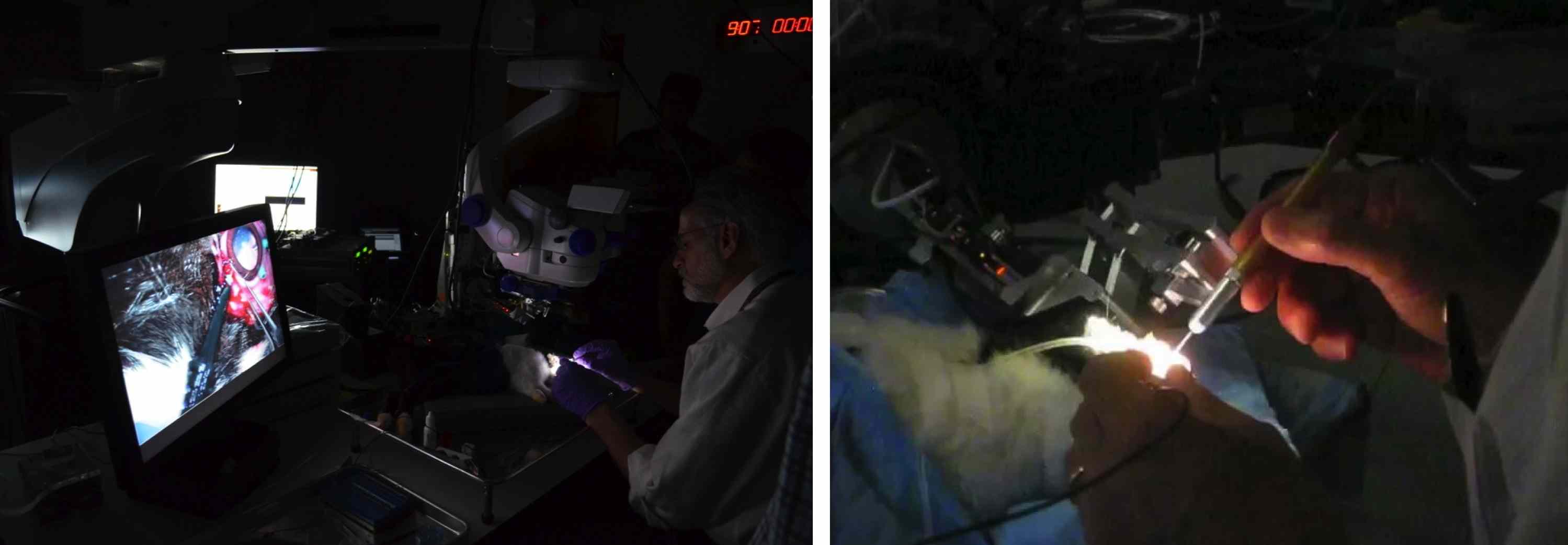

Status

We have a functional integrated system that includes visualization, robotic assistants, smart surgical instruments, and a centralized control application that configures and monitors the distributed system. We have tested individual components of the system on our new realistic eye phantom. Recently we were able to use our system in vivo.

Here are some examples of system behaviors that we have created: …

Future

- Ultimately we would like to use the whole system in a patient.

- Transfer the technologies to other microsurgical applications such as ear surgery.

Project Personnel

| JHU Whiting School | JHU Hospital, Wilmer Eye Institute | Carnegie Mellon University |
|---|---|---|
| Dr. Russell Taylor | Dr. James Handa | Dr. Cameron Riviere |
| Dr. Greg Hager | Dr. Peter Gehlbach | Brian Becker |
| Dr. Peter Kazanzides | Dr. Emily Gower | Robert MacLachlan |
| Dr. Jin Kang | | |
| Dr. Iulian Iordachita | | |
| Balazs Vagvolgyi | | |
| Anton Deguet | | |
| Marcin Balicki | | |
| Raphael Sznitman | | |
| Kevin Olds | | |
| Xuan Liu | | |
| Dr. Rogerio Richa | | |
| Min Yang Jung | | |
| Eric Meisner | | |
| Seth Billings | | |
| Xingchi He | | |
| Ali Uneri | | |
| Yi Yang | | |

Group Alumni

- Dr. Jae-Ho Han

- Dr. Laura Pinni

- Dan Mirota

- Dr. Sandrine Voros

- Haoxin Sun

- Zhenglong Sun (From Nanyang University, Singapore)

- Kang Zhang

- Daniel Roppenecker (From TUM, Germany)

- Dominik Gierlach (From TUM, Germany)

Funding

- NIH - 1 R01 EB 007969-01 A1

- NSF - EEC9731748

- JHU - Internal funds

Affiliated labs

Publications

- Xuan Liu, Marcin Balicki, Russell H. Taylor, and Jin U. Kang. “Automatic online spectral calibration of Fourier-domain OCT for robot-assisted vitreoretinal surgery”, in SPIE Advanced Biomedical and Clinical Diagnostic Systems IX, 25 January 2011.

- Xuan Liu, Marcin Balicki, Russell H. Taylor, and Jin U. Kang. “Towards Automatic Online Calibration of Fourier-Domain OCT for Robot-Assisted Vitreoretinal Surgery” Optics Express Journal 2010. Also appeared in Virtual Journal for Biomedical Optics V.6, I.1, 1/2011.

- Marcin Balicki, Ali Uneri, Iulian Iordachita, James Handa, Peter Gehlbach, Russell Taylor. “Micro-Force Sensing in Robot Assisted Membrane Peeling for Vitreoretinal Surgery”, Proceedings of the MICCAI Conference, 2010. Runner-up for Best Paper Award in Computer Assisted Intervention Systems and Medical Robotics.

- Ali Uneri, Marcin A. Balicki, James Handa, Peter Gehlbach, Russell H. Taylor, and Iulian Iordachita. “New Steady-Hand Eye Robot with Micro-Force Sensing for Vitreoretinal Surgery” Proceedings of the BIOROB Conference, 2010.

- Raphael Sznitman, Seth Billings, Diego Rother, Daniel Mirota, Yi Yang, James Handa, Peter Gehlbach, Jin U. Kang, Gregory D. Hager, Russell H. Taylor. “Active Multispectral Illumination and Image Fusion for Retinal Microsurgery”. IPCAI 2010, pp. 12-22.

- Marcin Balicki, Jae-Ho Han, Iulian Iordachita, Peter Gehlbach, James Handa, Jin Kang, Russell Taylor. “Single Fiber Optical Coherence Tomography Microsurgical Instruments for Computer and Robot-Assisted Retinal Surgery” Proceedings of the MICCAI Conference (Oral Presentation, 5% acceptance rate), March 2009. Best Paper Award in Computer Assisted Intervention Systems and Medical Robotics (September 2009)

- Iulian Iordachita, Zhenglong Sun, Marcin Balicki, Jin Kang, James Handa, Peter Gehlbach, Russell Taylor. “A Sub-Millimetric, 0.25 mN Resolution Fully Integrated Fiber-Optic Force Sensing Tool for Retinal Microsurgery” Journal of Computer Assisted Radiology and Surgery, May 2009

- Jae-Ho Han, Marcin Balicki, Kang Zhang, Xuan Liu, James Handa, Russell Taylor, and Jin U. Kang. “Common-path Fourier-domain Optical Coherence Tomography with a Fiber Optic Probe Integrated Into a Surgical Needle” Proceedings of CLEO Conference, May 2009

- Sun Z., Balicki M., Kang J., Handa J., Taylor R., Iordachita I. “Development and Preliminary Data of Novel Integrated Optical Micro-Force Sensing Tools for Retinal Microsurgery” 2009 IEEE International Conference on Robotics and Automation - ICRA 2009, Kobe, Japan, May 2009

- Fleming I., Balicki M., Koo J., Iordachita I., Mitchell B., Handa J., Hager G and Taylor R. “Cooperative Robot Assistant for Retinal Microsurgery” - Proceedings of the MICCAI Conference Poster, September 2008

- Mitchell B., Koo J., Iordachita I., Kazanzides P., Kapoor A., Handa J., Taylor R., Hager G., “Development and Application of a New Steady-Hand Manipulator for Retinal Surgery”, Proc. IEEE Intl. Conf. on Robotics and Automation, Rome, Italy: pp. 623-629, Apr 2007