
Augmented Reality Magnifying Loupe for Surgery

Last updated: 5/8/2019, 11:50PM

Summary

This project presents the basic design of a modified head-mounted display (HMD) fitted with a magnifying loupe, together with the method and results of calibrating the Magic Leap One displays to a real-world scene. We developed a calibration method that associates the field-of-magnified-vision, the HMD screen space, and the task workspace. The final calibration error was measured in both the magnified view and the regular view: the mean target augmentation error is 3.47 ± 1.03 mm in the magnified view and 2.59 ± 1.29 mm in the regular view.
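As an illustration of how a "mean ± standard deviation" augmentation error of this kind can be computed, the sketch below assumes the error for each target is the Euclidean distance (in mm) between the real target and its virtual overlay, and that the spread is reported as a sample standard deviation. The function names and the measurements are hypothetical and are not the project's data or its evaluation code.

```python
import math
import statistics


def augmentation_errors(targets_mm, overlays_mm):
    """Euclidean distance (mm) between each real target and its virtual overlay."""
    return [math.dist(t, o) for t, o in zip(targets_mm, overlays_mm)]


def summarize(errors_mm):
    """Return (mean, sample standard deviation) of the per-target errors."""
    return statistics.mean(errors_mm), statistics.stdev(errors_mm)


# Hypothetical 2D measurements, for illustration only.
targets = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
overlays = [(3.0, 4.0), (10.0, 2.0), (1.0, 10.0), (10.0, 10.0)]

errors = augmentation_errors(targets, overlays)
mean_mm, std_mm = summarize(errors)
print(f"{mean_mm:.2f} ± {std_mm:.2f} mm")
```

The same two functions can be applied separately to the targets measured in the magnified view and in the regular view.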

- Students: Tianyu Song

- Mentor(s): Long Qian, Mathias Unberath, Peter Kazanzides

Background, Specific Aims, and Significance

Background

- A magnifying loupe is often used in surgical procedures, including neurosurgery and dentistry. There are three principal reasons for adopting magnifying loupes for operative dentistry: to enhance visualization of fine detail, to compensate for the loss of near vision (presbyopia) and to ensure maintenance of correct posture.

- Many dental practitioners use magnifying loupes routinely for clinical work, and dental undergraduates are increasingly wearing them when training.

- Augmented reality (AR) has been used in the medical domain for treatment, education, and surgery. Useful information, measurements, and assistive overlays can be provided to the clinician on a see-through display, and AR guidance in the loupe can potentially help the practitioner with navigation and operation.

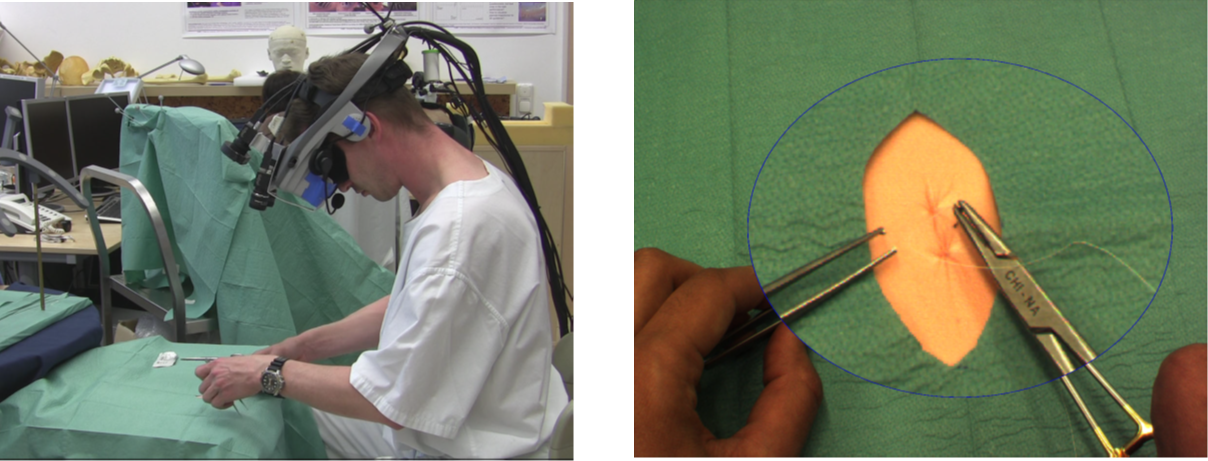

- In previous work, an augmented reality magnification system (a virtual loupe) was implemented on a head-mounted display for surgical applications. The system was evaluated by measuring the completion time of a suturing task performed by surgeons. Although surgeons widely accepted it as a useful functionality, they were not satisfied with the video quality.

Aims

- Design a surgical loupe mount for an optical see-through head-mounted display (HMD)

- Develop a calibration method to associate the field-of-magnified-vision, the HMD screen space and the task workspace

- Evaluate the proposed system

Significance

If successful, the project could increase the clinical acceptance of AR, and the proposed system could be used to provide accurate guidance and navigation in a wide range of computer-aided surgery.

Deliverables

- Minimum: (Completed)

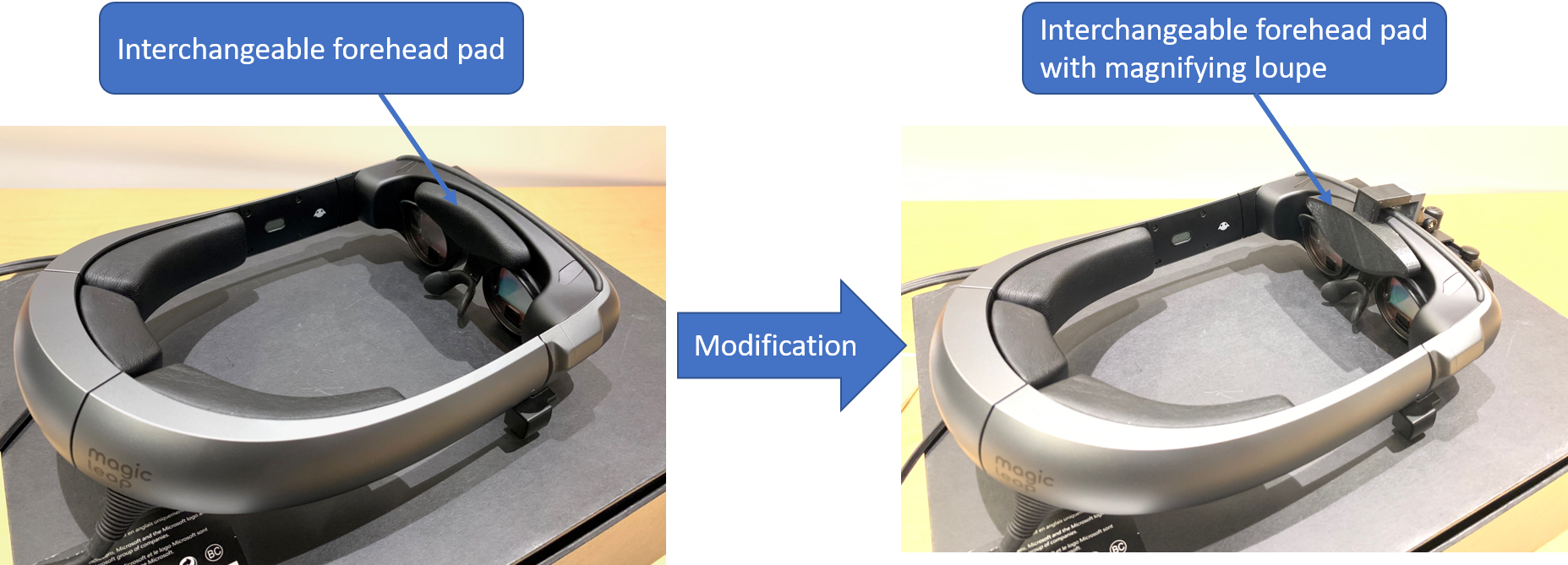

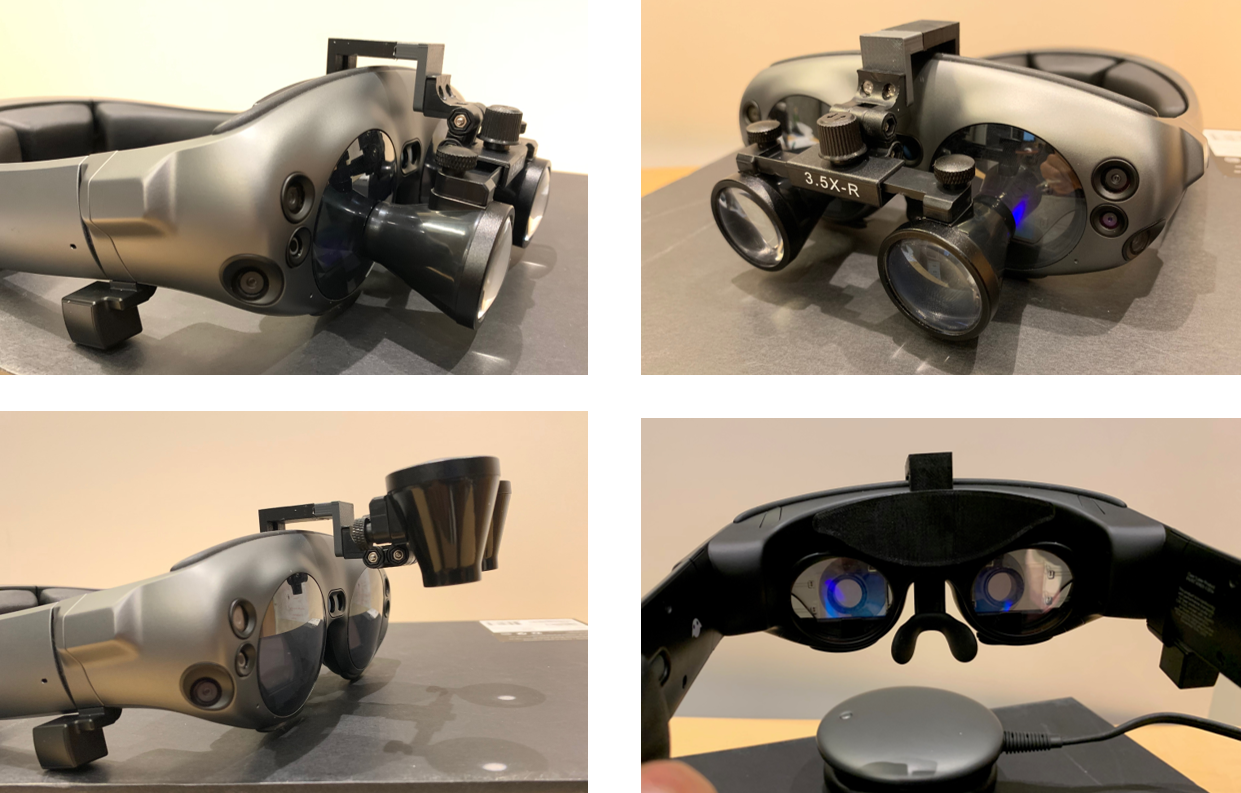

- A hardware prototype to integrate Magic Leap One with magnifying loupe

- A calibration process for single eye

- Expected: (Completed)

- A user-friendly stereo calibration process to associate the field-of-magnified-vision, the HMD screen space and the task workspace

- Maximum: (Expected by May 5)

- Evaluation results of proposed system with a comparative phantom study

Technical Approach

HMD Choice

There are three common optical see-through HMDs: Microsoft HoloLens, Magic Leap One, and Epson BT-300. For this project, I will use the Magic Leap One or the BT-300 instead of the HoloLens, because the HoloLens' bulky, rounded design makes it hard to attach a loupe in front of the display. The Magic Leap One and the BT-300 are both lightweight on the head, and both have a flat surface in front of the display, which makes it easier to attach a loupe.

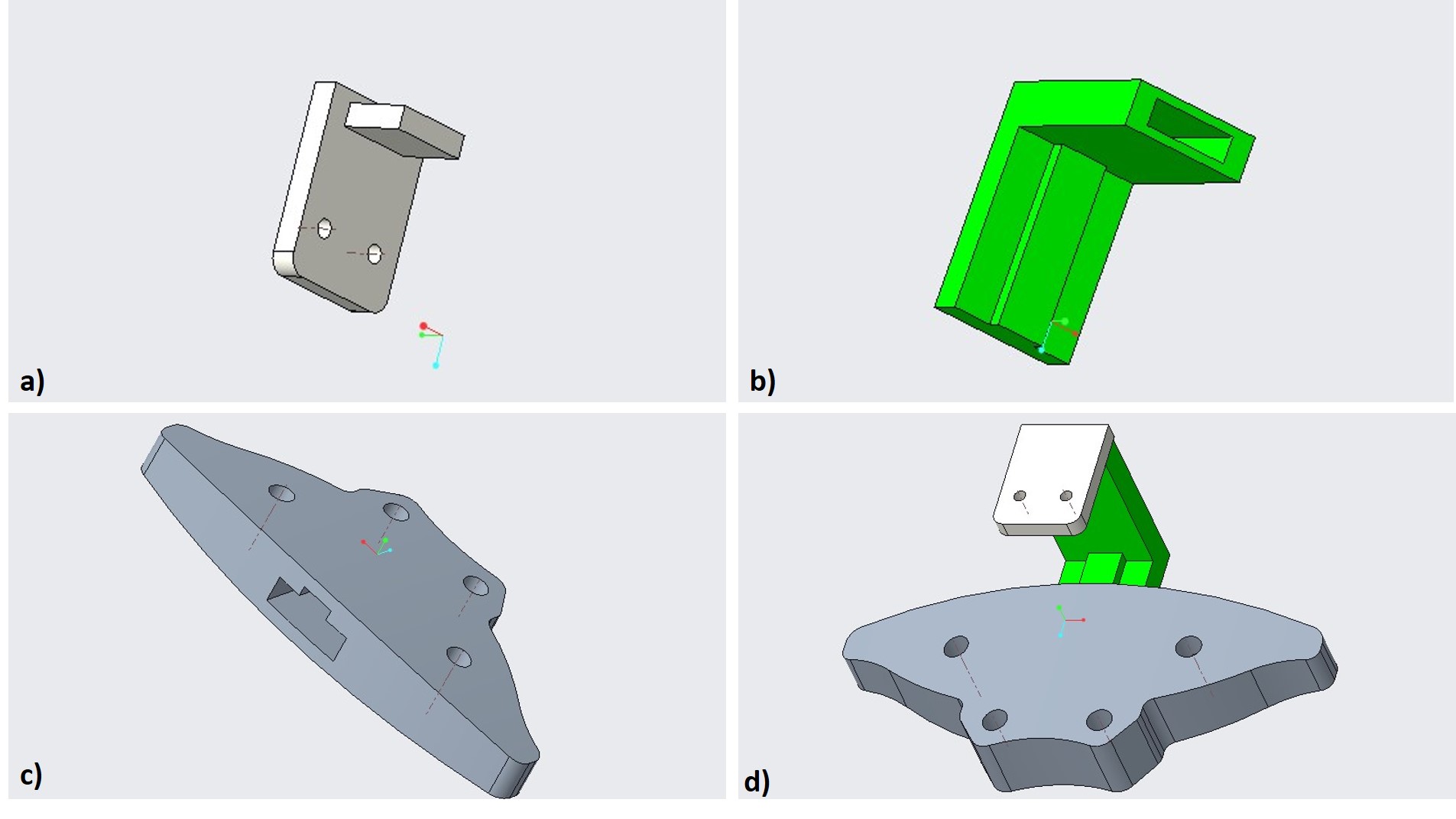

Mechanical Design

- Interchangeable lenses for different magnifications (2× to 3.5×)

- Able to flip

- Adjustable distance for different users

HMD Calibration

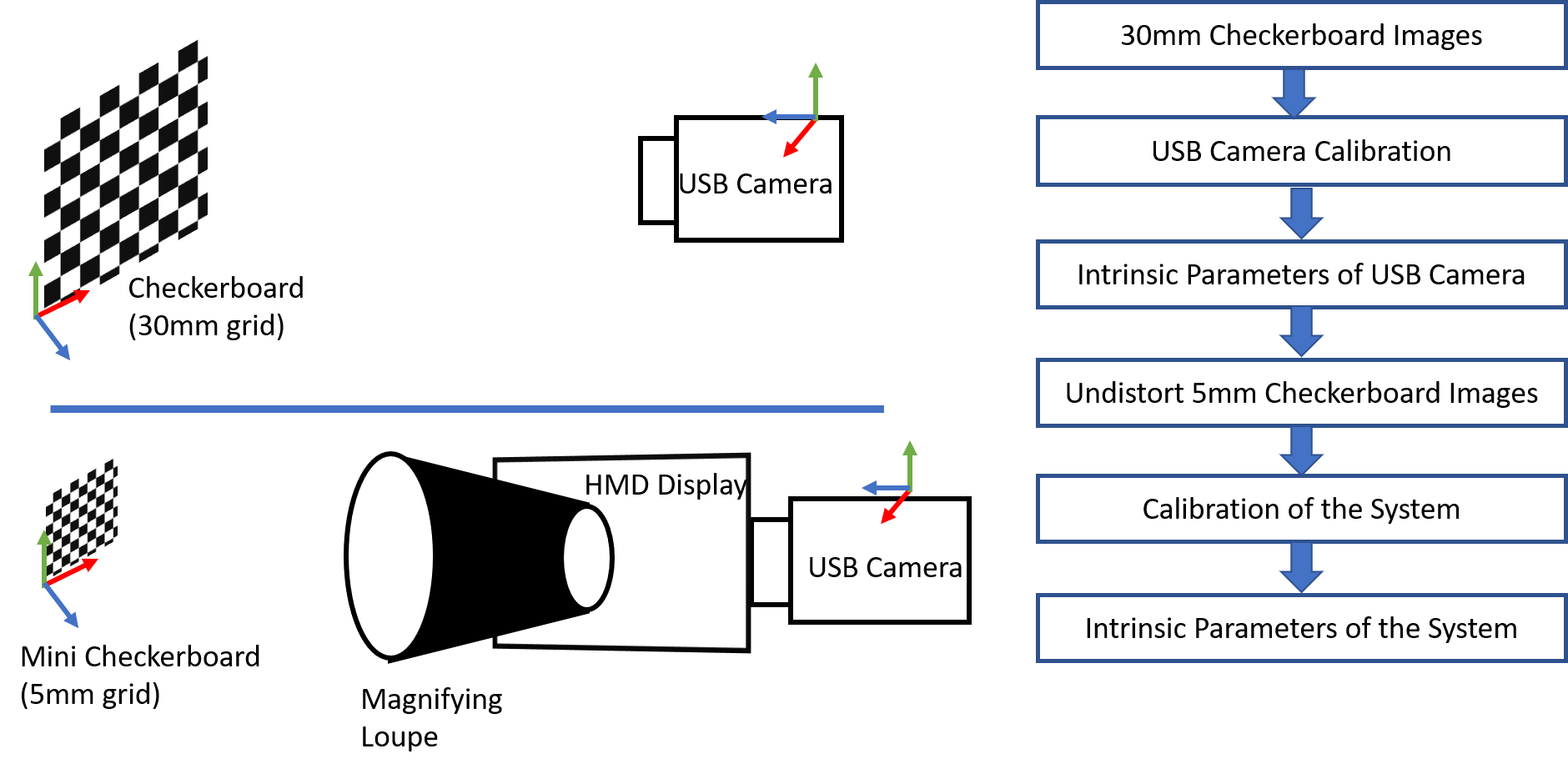

A system calibration process is needed to determine the distortion parameters of the loupe and the intrinsic parameters of the system. A mini USB camera module with 1080p resolution and a 100-degree field of view is used. The camera module was first calibrated using Zhang’s method [10] with a 30 mm checkerboard consisting of 8×6 vertices and 9×7 squares. We then attached the camera module to the back of the HMD display and captured 30 images, from different poses, of a 5 mm checkerboard consisting of 8×6 vertices and 9×7 squares. During image capture, the checkerboard was placed within the working distance of the loupe, with the whole checkerboard visible in the magnified view.
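To make the estimated quantities concrete, the following minimal sketch shows a pinhole camera model with a two-term radial distortion, which is a common way to parameterize intrinsics and lens distortion in checkerboard-based calibration. It is not the project's calibration code: the function names and every numeric value (`fx`, `fy`, `cx`, `cy`, `k1`, and the 400 mm board distance) are hypothetical and chosen only for illustration.

```python
def checkerboard_points(cols=8, rows=6, square_mm=5.0):
    """Planar grid of inner-vertex coordinates (z = 0), in millimetres."""
    return [(c * square_mm, r * square_mm, 0.0)
            for r in range(rows) for c in range(cols)]


def project(point_cam, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a 3D point given in the camera frame to pixel coordinates.

    Intrinsics: focal lengths (fx, fy) and principal point (cx, cy), in pixels.
    Distortion: radial coefficients k1, k2 applied to normalized coordinates.
    """
    x, y, z = point_cam
    xn, yn = x / z, y / z                  # normalized image coordinates
    r2 = xn * xn + yn * yn                 # squared radius from the optical axis
    scale = 1.0 + k1 * r2 + k2 * r2 * r2   # radial distortion factor
    return fx * xn * scale + cx, fy * yn * scale + cy


# Hypothetical setup: the 5 mm board lies 400 mm in front of the camera.
board = checkerboard_points()
board_in_camera = [(x, y, 400.0) for x, y, _ in board]

ideal = project((40.0, 0.0, 400.0), fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)
distorted = project((40.0, 0.0, 400.0), fx=1000.0, fy=1000.0,
                    cx=960.0, cy=540.0, k1=-0.2)
print(len(board), ideal, distorted)
```

Calibration runs this model in reverse: given the detected checkerboard vertices in many images, it searches for the intrinsic and distortion parameters that best reproduce the observed pixel positions.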

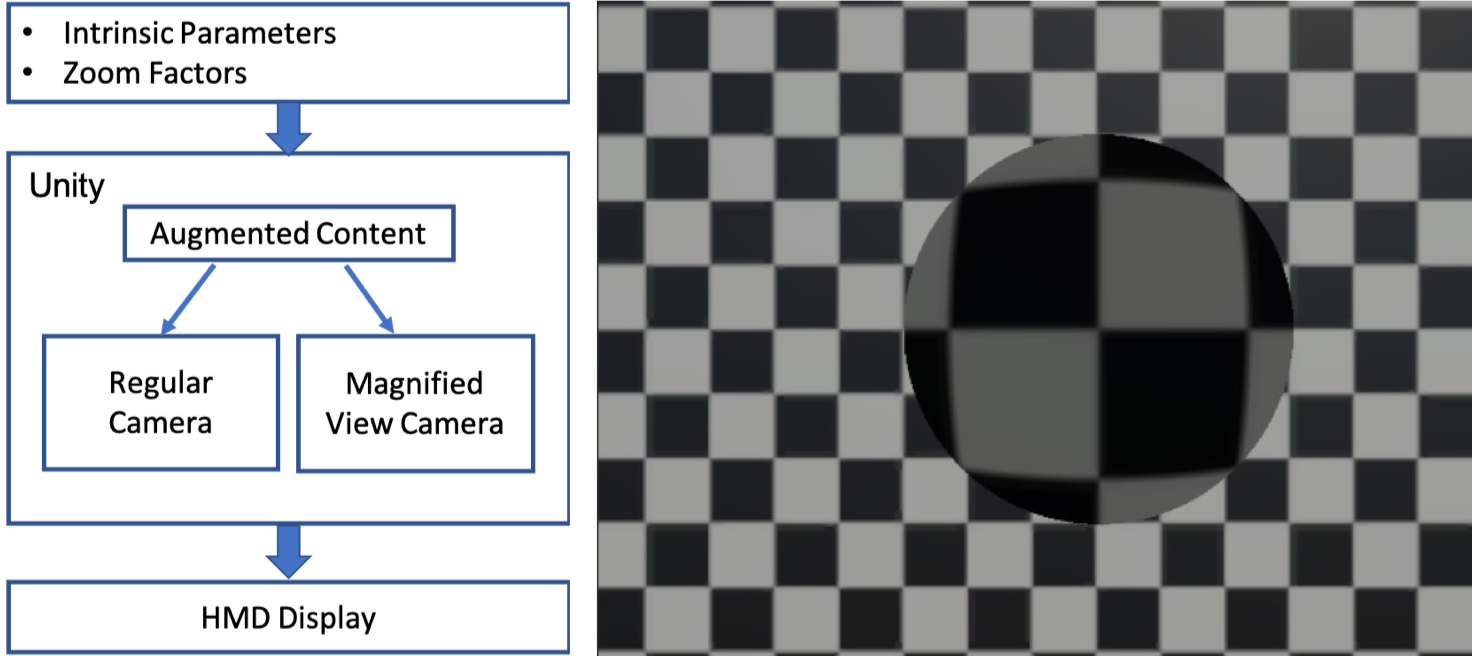

AR Rendering Pipeline

In the Unity scene, each eye is assigned two cameras for rendering. The regular camera renders a non-magnified view of the augmented content directly to the display, while the second camera renders the distorted, magnified view of the AR content to a texture. The texture is then drawn on the 2D screen within a constrained region.
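The two-camera arrangement can be pictured as a per-pixel choice between two render sources. The sketch below is written in Python rather than Unity code, and it assumes the constrained region is a circle on the screen that matches the loupe's field of view; that shape, the function names, and the pixel values are all assumptions made for illustration.

```python
import math


def select_view(pixel, loupe_center, loupe_radius):
    """Return which render source supplies this screen pixel."""
    inside = math.dist(pixel, loupe_center) <= loupe_radius
    return "magnified_texture" if inside else "regular_camera"


def magnified_uv(pixel, loupe_center, loupe_radius):
    """Map a pixel in the loupe region to texture coordinates in [0, 1]."""
    u = (pixel[0] - loupe_center[0]) / (2 * loupe_radius) + 0.5
    v = (pixel[1] - loupe_center[1]) / (2 * loupe_radius) + 0.5
    return u, v


# Hypothetical screen layout: loupe region centered at (640, 360), radius 200 px.
center, radius = (640.0, 360.0), 200.0
print(select_view((640.0, 360.0), center, radius))
print(select_view((0.0, 0.0), center, radius))
print(magnified_uv((840.0, 360.0), center, radius))
```

Pixels outside the region keep the regular camera's output, so augmented content remains visible around the loupe as well as through it.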

Tracking

[1] Wang, Junchen & Suenaga, Hideyuki & Hoshi, Kazuto & Yang, Liangjing & Kobayashi, Etsuko & Sakuma, Ichiro & Liao, Hongen. (2014). Augmented Reality Navigation With Automatic Marker-Free Image Registration Using 3-D Image Overlay for Dental Surgery. IEEE transactions on bio-medical engineering. 61. 1295-304.

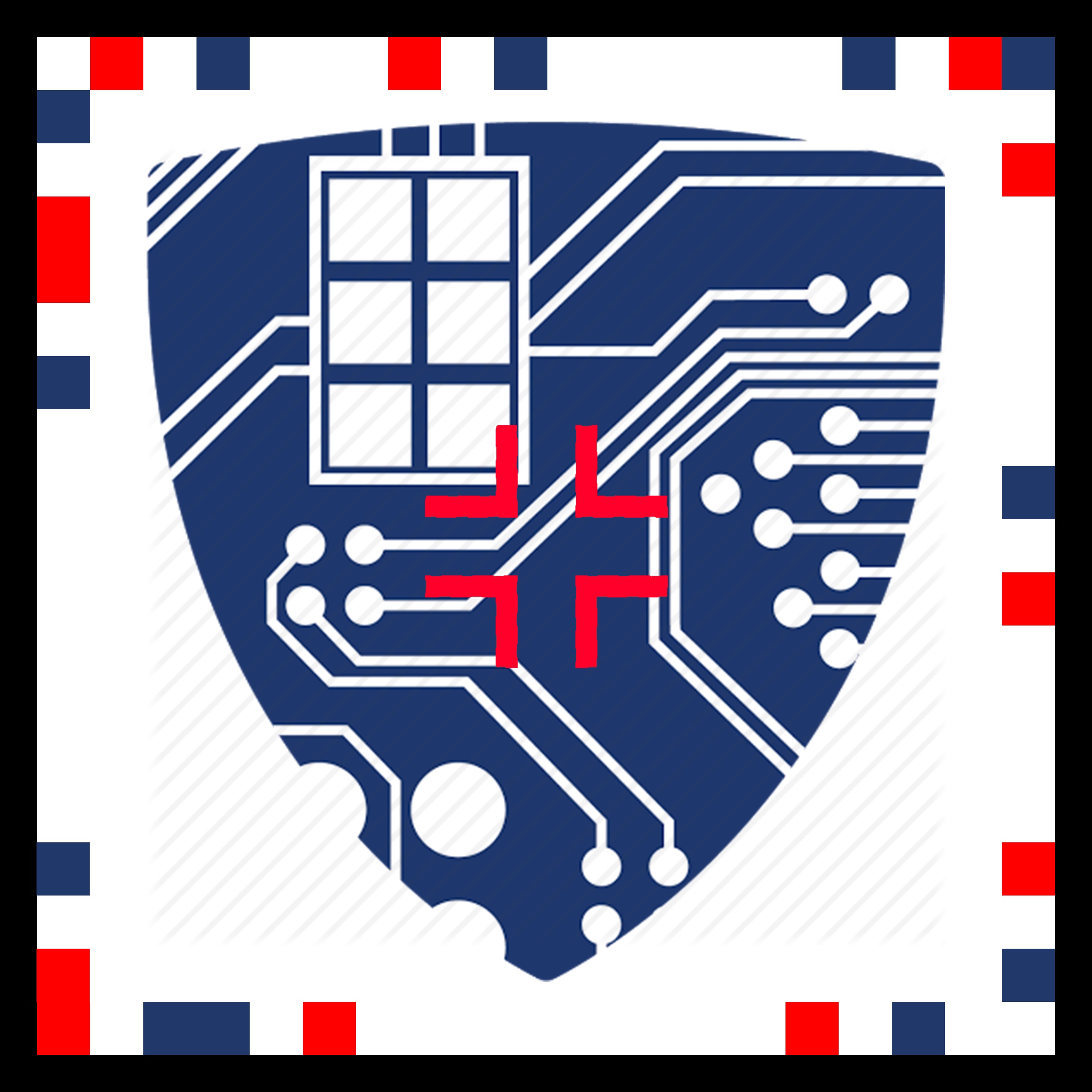

To enable AR, a tracking system with good accuracy is necessary. Although the HMDs chosen for the project have their own visual-SLAM-based tracking systems that work off the shelf, these usually have errors of a few centimeters, which is not accurate enough for surgery. I will therefore use marker tracking for this project.

Marker designed for the project: a 15 cm planar marker.
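Marker tracking yields the pose of the marker relative to the tracking camera, and any point defined on the marker can then be expressed in the camera frame with a homogeneous transform. The sketch below illustrates that step only; the helper names, the rotation about a single axis, and the pose values are hypothetical, and a real tracker would report a full 3D rotation.

```python
import math


def pose(yaw_deg, translation_mm):
    """4x4 homogeneous transform: rotation about z by yaw_deg, then translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation_mm
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def transform(T, point_mm):
    """Apply a 4x4 transform to a 3D point and return the transformed 3D point."""
    v = (point_mm[0], point_mm[1], point_mm[2], 1.0)
    return tuple(sum(T[i][j] * v[j] for j in range(4)) for i in range(3))


# Hypothetical tracked pose: marker 500 mm in front of the camera, rotated 90 degrees.
T_camera_marker = pose(90.0, (0.0, 0.0, 500.0))

# Midpoint of one edge of a 15 cm marker, expressed in the marker frame (mm).
edge_midpoint_marker = (75.0, 0.0, 0.0)
edge_midpoint_camera = transform(T_camera_marker, edge_midpoint_marker)
print(edge_midpoint_camera)
```

Virtual content anchored to the marker is positioned the same way before it is passed to the rendering cameras.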

Dependencies

| Dependencies | Solution | Alternative | Estimated Date |
|---|---|---|---|
| Access to Magic Leap One | Ask Dr. Navab for access | Ask Ehsan for Epson BT-300 | Resolved |
| Access to surgical loupe | Ask Long for access | | Resolved |
| Access to CAD Software (SolidWorks or PTC Creo) | Download from JHU software catalog | | Resolved |
| Access to 3D printer | Access to LCSR 3D printer | Use DMC 3D printer | Resolved |
| Access to USB Camera | Ask Long for access | Buy one from Amazon | Resolved |

Milestones and Status

- Milestone name: Finish hardware prototype, begin calibration

  - Planned Date: Feb 18

  - Expected Date: Mar 4

  - Status: Finished

- Milestone name: Finish calibration for single eye

  - Planned Date: Feb 18

  - Expected Date: Mar 25

  - Status: Finished

- Milestone name: Finish stereo calibration, begin evaluation

  - Planned Date: Feb 18

  - Expected Date: Apr 8

  - Status: Finished

- Milestone name: Finish evaluation

  - Planned Date: Feb 18

  - Expected Date: May 6

  - Status: Finished

- Milestone name: Finish project report

  - Planned Date: Feb 18

  - Expected Date: May 9

  - Status: Finished

Reports and presentations

- Project Plan

- Project Background Reading

- See Bibliography below for links.

- Project Checkpoint

- Paper Seminar Presentations

- Project Final Presentation

- Project Final Report


Project Bibliography

- James, Teresa, and Alan SM Gilmour. “Magnifying loupes in modern dental practice: an update.” Dental update 37.9 (2010): 633-636.

- Martin-Gonzalez, Anabel, et al. “Head-Mounted Virtual Loupe with Sight-Based Activation for Surgical Applications.” 2009 8th IEEE International Symposium on Mixed and Augmented Reality, 2009.

- Tuceryan, Mihran, Yakup Genc, and Nassir Navab. “Single-Point active alignment method (SPAAM) for optical see-through HMD calibration for augmented reality.” Presence: Teleoperators & Virtual Environments 11.3 (2002): 259-276.

- L. Qian, A. Winkler, B. Fuerst, P. Kazanzides and N. Navab, “Reduction of Interaction Space in Single Point Active Alignment Method for Optical See-Through Head-Mounted Display Calibration,” 2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Merida, 2016, pp. 156-157.

- L. Qian, E. Azimi, P. Kazanzides et al., “Comprehensive tracker based display calibration for holographic optical see-through head-mounted display”, 2017.

- C. B. Owen, Ji Zhou, A. Tang and Fan Xiao, “Display-relative calibration for optical see-through head-mounted displays,” Third IEEE and ACM International Symposium on Mixed and Augmented Reality, Arlington, VA, USA, 2004, pp. 70-78.

- Y. Itoh and G. Klinker, “Interaction-free calibration for optical see-through head-mounted displays based on 3D Eye localization,” 2014 IEEE Symposium on 3D User Interfaces (3DUI), Minneapolis, MN, 2014, pp. 75-82.

- E. Azimi, L. Qian, P. Kazanzides, and N. Navab. Robust optical see-through head-mounted display calibration: Taking anisotropic nature of user interaction errors into account. In Virtual Reality (VR). IEEE, 2017.

- Figl M., Birkfellner, W., Hummel, J., et al. “Current Status of the Varioscope AR, a Head-Mounted Operating Microscope for Computer-Aided Surgery”. Proc. of IEEE and ACM International Symposium on Augmented Reality (ISAR'01). New York. 2001.

- W. Birkfellner, M. Figl, K. Huber, F. Watzinger, F. Wanschitz, J. Hummel, R. Hanel, W. Greimel, P. Homolka, R. Ewers, and H. Bergmann. A Head-Mounted Operating Binocular for Augmented Reality Visualization in Medicine – Design and Initial Evaluation. IEEE Trans Med Imaging, 21(8), 2002.

- Figl M, Ede C, Birkfellner W, Hummel J, Seemann R, Bergmann H, “Automatic Calibration of an Optical See Through Head Mounted Display for Augmented Reality Applications in Computer Assisted Interventions,” AMI-ARCS 2004.

- Kuchta J, Simons P, “Spinal Neurosurgery with the Head-mounted “Varioscope” Microscope”, Technical Note, Cent Eur Neurosurg, 70:98-100, 2009.

Other Resources and Project Files


- Demo video for proof of concept: https://jh.box.com/s/2ugihw467w7v0k1ds3alz6nvo6lvrswy

- Private GitHub repository for source code: https://github.com/stytim/Magic_Leap_Magnifying_Loupe.git