Augmentation of Haptic Guidance into Virtual-Reality Surgical Simulators

Last updated: 05/09/2019 - 10:30 AM

Summary

We would like to investigate the effect of haptic guidance and brain stimulation on motor learning in virtual-reality surgical simulators.

- Students: Eric Cao, Brett Wolfinger, Vipul Bhat

- Mentor(s): Dr. Jeremy Brown, Dr. Mahya Shahbazi, Guido Caccianiga

Background, Specific Aims, and Significance

The number of robot-assisted minimally invasive surgeries (RAMIS) performed annually is rapidly increasing, and new surgeons must be trained to meet this demand. In the current standard of training, novices often spend many hours completing practice tasks that are graded with observational feedback. Providing this feedback requires trained surgeons to review recorded videos or watch in real time, which leads to high operational costs and low efficiency. Furthermore, if errors are not corrected early, trainees can develop poor habits that become ingrained over several days of training and take longer to break.

There is a need for new technologies that lower these mentorship barriers and speed up training. Current work in this space involves building virtual-reality simulators on the da Vinci Research Kit (dVRK) for basic tasks such as suturing (see Figure 1). While these simulators provide real-time visual feedback (in the example, a green ring indicates a proficient needle entry), they do not provide any corrective guidance for incorrect motions. The goal of this project is to incorporate real-time corrective guidance, referenced to the optimal path for the task, into these virtual simulators. Combined with the existing visual feedback, this will allow us to determine the efficacy of haptic feedback in teaching RAMIS principles.

Deliverables

- Minimum: (Expected by 4/19)
  - Code for computing and applying haptic feedback to the dVRK manipulators, stored in GitLab
  - Code for computing and displaying visual feedback on the dVRK stereoscopic viewer, stored in GitLab
  - Documentation of the environment, including operation, maintenance, and future work

- Expected: (Expected by 4/26)
  - Documentation of the study protocol for evaluating the implemented feedback, stored on Google Drive
  - Scripts for data collection and data analysis, stored in GitLab
  - Report on a user study evaluating the effects of real-time feedback and our chosen approach(es) (goal n = 15)

- Maximum: (Expected by 5/09)
  - Documentation of the study protocol for using brain stimulation, stored on Google Drive
  - Scripts for data collection and data analysis for brain stimulation, stored in GitLab
  - Report on a user study evaluating the effects of brain stimulation

Technical Approach

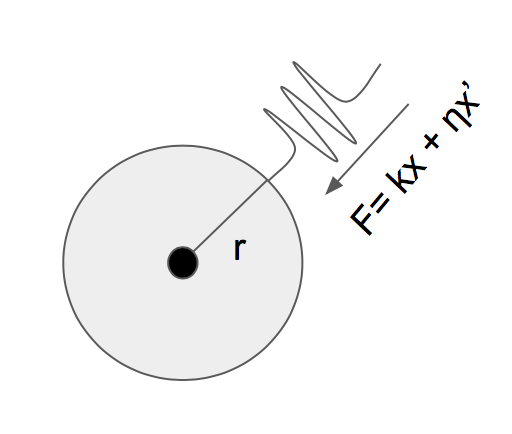

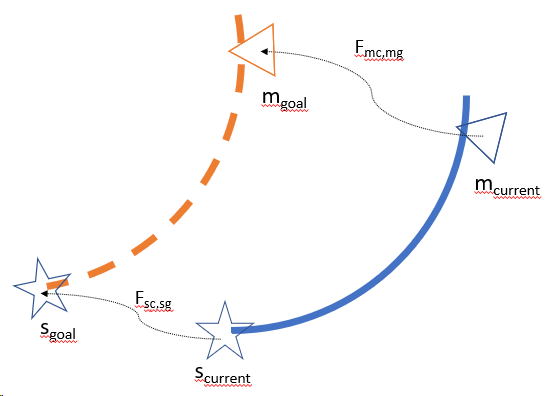

The first phase of this project will include the development and implementation of models for force feedback systems. Previous work has led us to consider two methods: forbidden region (Figure 2) and guidance along the optimal path (Figure 3). In the forbidden-region method, virtual fixtures will bound the optimal path with an allowable-region tunnel, and a spring force will be applied to the dVRK manipulators whenever the virtual needle deviates outside this tunnel. In the guidance method, forces will be applied to the dVRK manipulators under certain conditions to pull the user toward the optimal path, based on the task-space error. Defining the conditions for these forces and tuning their parameters will require significant experimentation and testing.
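A minimal, position-only sketch of the two force laws described above follows. It assumes the optimal path is available as a polyline of 3D needle-tip positions in metres. The function names, tunnel radius, and stiffness gains are hypothetical illustrations, not the project's tuned values, and orientation and the dVRK interface are left out.

```python
import math
from typing import List, Tuple

# Hypothetical sketch: names and gains are illustrative, not the project's code.
Vec = Tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def closest_point_on_path(path: List[Vec], p: Vec) -> Vec:
    """Return the point on the polyline `path` nearest to p."""
    best = path[0]
    best_d = math.dist(p, best)
    for a, b in zip(path, path[1:]):
        ab = _sub(b, a)
        length_sq = _dot(ab, ab)
        # Project p onto segment ab, clamped to the segment's endpoints.
        t = 0.0 if length_sq == 0 else max(0.0, min(1.0, _dot(_sub(p, a), ab) / length_sq))
        q = _add(a, _scale(ab, t))
        d = math.dist(p, q)
        if d < best_d:
            best, best_d = q, d
    return best


def forbidden_region_force(path: List[Vec], p: Vec, radius: float, k: float) -> Vec:
    """Zero inside the tunnel; outside it, a spring pushes the tip back toward the tunnel wall."""
    q = closest_point_on_path(path, p)
    offset = _sub(p, q)
    d = math.dist(p, q)
    if d <= radius:
        return (0.0, 0.0, 0.0)
    # Magnitude is k * penetration depth, directed from the tip back toward the path.
    return _scale(offset, -k * (d - radius) / d)


def guidance_force(path: List[Vec], p: Vec, k: float) -> Vec:
    """Attractive spring toward the nearest point on the optimal path."""
    return _scale(_sub(closest_point_on_path(path, p), p), k)
```

The forbidden-region force is zero anywhere inside the tunnel and grows linearly with penetration depth outside it. The guidance force in this sketch is always active, whereas the text above applies it only under certain conditions.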

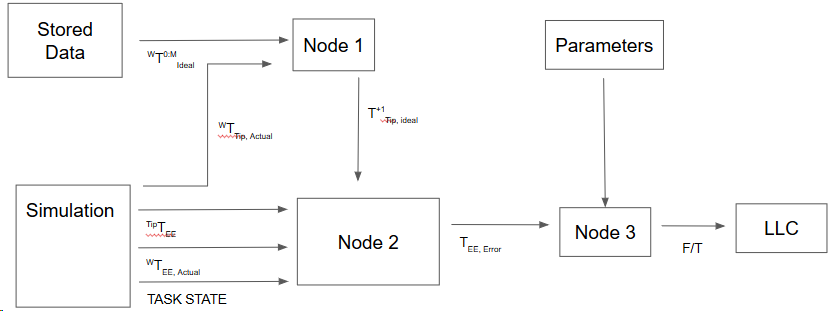

Node 1: Inputs are a list of poses on the optimal trajectory and the current pose of the suturing tip. Outputs a goal pose of the suturing tip based on a desired velocity.
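One way Node 1 could compute the goal pose is a look-ahead along the trajectory: find the trajectory sample nearest the current tip, then advance a distance of `speed * dt` along the path. The sketch below assumes this interpretation of "based on a desired velocity" and handles position only. `goal_point`, `speed`, and `dt` are hypothetical names.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def goal_point(trajectory: List[Point], tip: Point, speed: float, dt: float) -> Point:
    """Hypothetical Node 1 sketch: look ahead speed * dt along the trajectory.

    Starts from the trajectory sample nearest the current tip position and
    walks forward along the polyline; clamps to the final sample.
    """
    # Index of the trajectory sample closest to the current tip.
    i = min(range(len(trajectory)), key=lambda j: math.dist(trajectory[j], tip))
    remaining = speed * dt  # look-ahead distance (m)
    while i < len(trajectory) - 1:
        a, b = trajectory[i], trajectory[i + 1]
        seg = math.dist(a, b)
        if seg > 0 and seg >= remaining:
            # The goal lies on this segment: interpolate linearly.
            t = remaining / seg
            return tuple(ai + t * (bi - ai) for ai, bi in zip(a, b))
        remaining -= seg
        i += 1
    return trajectory[-1]
```

Tying the look-ahead to a desired speed means the goal moves along the path at a steady rate regardless of how densely the trajectory is sampled.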

Node 2: Inputs are the transformation from the current pose of the suturing tip to the current pose of the end effector, the current pose of the end effector, the task state, and the goal pose. The task state is the current action of the suture (e.g., insertion or handoff). Node 2 calculates the goal pose of the end effector and outputs the task-space error as the transformation between the current and goal poses of the end effector.
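The pose arithmetic in Node 2 can be sketched with 4×4 homogeneous transforms. Assuming the needle is held rigidly, the tip-to-end-effector transform stays constant. The goal end-effector pose is then the goal tip pose composed with that transform, and the error is the inverse of the current end-effector pose composed with the goal. The frame naming (`T_w_ee` for the end effector in the world frame, and so on) is an assumption of this sketch, and the task state is omitted.

```python
import math
from typing import List

Mat4 = List[List[float]]


def matmul(A: Mat4, B: Mat4) -> Mat4:
    """4x4 matrix product A @ B."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid_inverse(T: Mat4) -> Mat4:
    """Inverse of a rigid transform [R p; 0 1] is [R^T, -R^T p; 0 1]."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    p = [T[i][3] for i in range(3)]
    p_inv = [-sum(Rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [p_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def translation(x: float, y: float, z: float) -> Mat4:
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def rot_z(theta: float) -> Mat4:
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]


def task_space_error(T_tip_ee: Mat4, T_w_ee: Mat4, T_w_tip_goal: Mat4) -> Mat4:
    """Hypothetical Node 2 sketch.

    T_tip_ee:     end-effector pose in the suturing-tip frame (assumed constant while grasped)
    T_w_ee:       current end-effector pose in the world frame
    T_w_tip_goal: goal suturing-tip pose from Node 1
    Returns the transform from the current to the goal end-effector pose,
    expressed in the current end-effector frame.
    """
    T_w_ee_goal = matmul(T_w_tip_goal, T_tip_ee)
    return matmul(rigid_inverse(T_w_ee), T_w_ee_goal)
```

Because the error is expressed in the current end-effector frame, a world-frame offset along +y appears as +x when the end effector is rotated 90° about z.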

Node 3: Inputs are the task-space error and parameters of the force-generation model (e.g., visco-elastic or non-energy-storing). Outputs forces and torques, which are sent to a low-level controller.
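As an example of a visco-elastic force model for Node 3, the sketch below turns a task-space error transform into two outputs: a spring-damper force and a rotational-spring torque. A hypothetical force limit is included for safety. The gains and the saturation value are illustrative. The sketch also assumes the end-effector velocity is expressed in the same frame as the error. The non-energy-storing variant is not shown.

```python
import math
from typing import List, Sequence, Tuple

Mat4 = List[List[float]]


def rotation_vector(T: Mat4) -> Tuple[float, float, float]:
    """Axis-angle vector of the rotation block of T (not robust near 180 degrees)."""
    cos_a = (T[0][0] + T[1][1] + T[2][2] - 1.0) / 2.0
    angle = math.acos(max(-1.0, min(1.0, cos_a)))
    if angle < 1e-9:
        return (0.0, 0.0, 0.0)
    k = angle / (2.0 * math.sin(angle))
    return (k * (T[2][1] - T[1][2]), k * (T[0][2] - T[2][0]), k * (T[1][0] - T[0][1]))


def viscoelastic_wrench(T_err: Mat4, ee_velocity: Sequence[float],
                        kp: float, kd: float, kr: float, f_max: float):
    """Hypothetical Node 3 sketch: spring-damper force and rotational-spring torque."""
    pos_err = [T_err[i][3] for i in range(3)]
    # Spring pulls toward the goal; damper opposes the current velocity.
    force = [kp * e - kd * v for e, v in zip(pos_err, ee_velocity)]
    mag = math.sqrt(sum(f * f for f in force))
    if mag > f_max:
        # Scale the force down to the saturation limit, keeping its direction.
        force = [f * f_max / mag for f in force]
    torque = [kr * w for w in rotation_vector(T_err)]
    return force, torque
```

Saturating the force magnitude rather than each component preserves the direction of the corrective push.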

After these models have been tested and tuned, the second phase of the project can begin (it will be set up in parallel with the first phase). This phase will involve a pilot user study with fellow LCSR members and other novices on the dVRK. Metrics such as time to completion, accuracy, and smoothness will be used to compare the feedback conditions (none, forbidden region, guidance) and their effects on performance and learning. The study can later be extended to evaluate the effect of brain stimulation on robotic surgery training.
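The three comparison metrics could be computed from logged needle-tip trajectories roughly as follows. The sketch assumes uniformly sampled positions. It measures accuracy as the mean distance to the nearest reference sample and smoothness as the mean squared jerk. Both are common choices but are hypothetical here, not the study's confirmed definitions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def completion_time(timestamps: Sequence[float]) -> float:
    """Elapsed time (s) between the first and last logged samples."""
    return timestamps[-1] - timestamps[0]


def mean_path_error(positions: List[Point], reference: List[Point]) -> float:
    """Mean distance (m) from each logged position to its nearest reference sample."""
    return sum(min(math.dist(p, r) for r in reference) for p in positions) / len(positions)


def mean_squared_jerk(positions: List[Point], dt: float) -> float:
    """Mean squared jerk magnitude from third-order finite differences.

    Assumes uniform sampling every dt seconds; needs at least 4 samples.
    """
    if len(positions) < 4:
        raise ValueError("need at least 4 samples")
    n = len(positions) - 3
    total = 0.0
    for i in range(n):
        p0, p1, p2, p3 = positions[i:i + 4]
        jerk = [(p3[k] - 3 * p2[k] + 3 * p1[k] - p0[k]) / dt ** 3 for k in range(3)]
        total += sum(j * j for j in jerk)
    return total / n
```

Lower values of all three metrics indicate better performance, so they can be compared directly across the none, forbidden-region, and guidance conditions.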

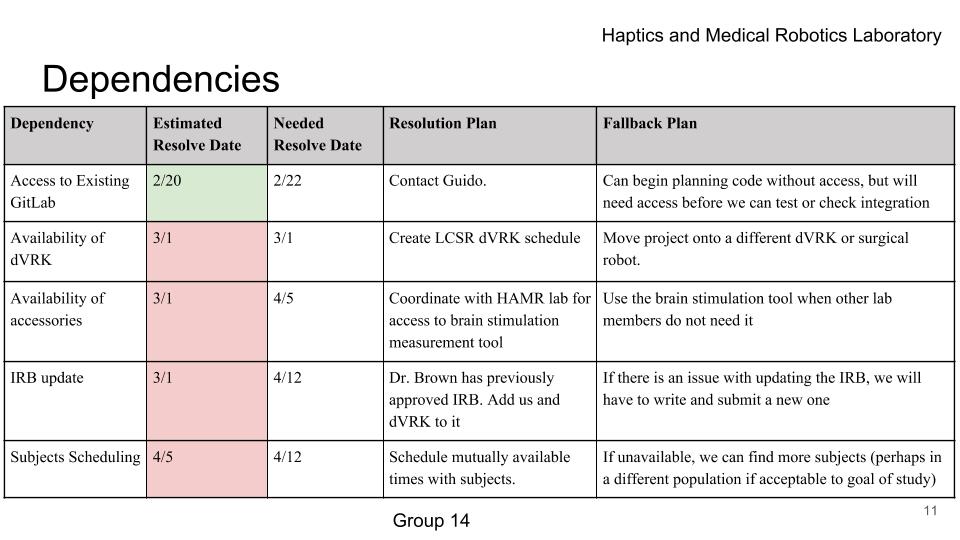

Dependencies

- Dependency name: Access to existing GitLab
  - Expected Date: 02/20
  - Needed Date: 02/22
  - Status: Complete

- Dependency name: Availability of dVRK
  - Expected Date: 03/01
  - Needed Date: 03/10
  - Status: Complete (switched dVRK systems)

- Dependency name: Availability of brain stimulation tool
  - Planned Date: 03/01
  - Needed Date: N/A
  - Status: Fallback. The brain stimulation tool is not available; focus on the other deliverables.

- Dependency name: IRB update
  - Planned Date: 03/01
  - Needed Date: 04/12
  - Status: Complete

- Dependency name: Subject scheduling
  - Planned Date: 04/19
  - Expected Date: N/A
  - Status: Ongoing. User studies delayed; pilot testing conducted instead.

Milestones and Status

- Milestone name: Complete ROS tutorials from Clearpath Robotics
  - Expected Date: 02/22
  - Status: Complete

- Milestone name: Generate a movement on the existing dVRK setup
  - Expected Date: 03/01
  - Planned Date: 03/10
  - Status: Complete

- Milestone name: Complete architecture and models for guidance implementation
  - Planned Date: 03/08
  - Expected Date: 03/08
  - Status: Complete

- Milestone name: Complete code implementation and documentation of the guidance model, and develop the architecture for the forbidden-region model
  - Planned Date: 04/12
  - Expected Date: 04/12
  - Status: Complete

- Milestone name: Complete code implementation and documentation of the forbidden-region model
  - Planned Date: 04/19
  - Expected Date: 04/26
  - Status: Complete

- Milestone name: Finalize documentation and create documentation write-up
  - Planned Date: 04/26
  - Expected Date: 04/26
  - Status: Complete

- Milestone name: Create procedures for conducting the study and create data collection code
  - Planned Date: 04/12
  - Expected Date: 04/12
  - Status: Complete

- Milestone name: Add visual feedback methods
  - Planned Date: 04/26
  - Expected Date: 04/30
  - Status: Complete

- Milestone name: Complete pilot testing and report
  - Planned Date: 05/06
  - Expected Date: 05/07
  - Status: Complete

Reports and presentations

- Project Plan

- Project Background Reading
  - See Bibliography below for links.

- Project Checkpoint

- Paper Seminar Presentations

- Project Final Presentation

- Project Teaser

- Project Final Report

Project Bibliography

- Bowyer, S. A., Davies, B. L. & Baena, F. R. Y. Active constraints/virtual fixtures: a survey. IEEE Transactions on Robotics 30, 138–157 (2014).

- Coad, M. M. et al. Training in divergent and convergent force fields during 6-DOF teleoperation with a robot-assisted surgical system. 2017 IEEE World Haptics Conference (WHC) (2017). doi:10.1109/whc.2017.7989900

- Enayati, N. et al. Robotic assistance-as-needed for enhanced visuomotor learning in surgical robotics training: an experimental study. 2018 IEEE International Conference on Robotics and Automation (ICRA) (2018). doi:10.1109/icra.2018.8463168

- Enayati, N., Alves Costa, E. C., Ferrigno, G. & De Momi, E. A dynamic non-energy-storing guidance constraint with motion redirection for robot-assisted surgery. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (2016).

- Jantscher, W. H. et al. Toward improved surgical training: delivering smoothness feedback using haptic cues. 2018 IEEE Haptics Symposium (HAPTICS) (2018). doi:10.1109/haptics.2018.8357183

- Kuiper, R. J. et al. Evaluation of haptic and visual cues for repulsive or attractive guidance in nonholonomic steering tasks. IEEE Transactions on Human-Machine Systems 46, 672–683 (2016). doi:10.1109/thms.2016.2561625

- Pavlidis, I. et al. Absence of stressful conditions accelerates dexterous skill acquisition in surgery. Scientific Reports 9 (2019).

- Ström, P. et al. Early exposure to haptic feedback enhances performance in surgical simulator training: a prospective randomized crossover study in surgical residents. Surgical Endoscopy 20, 1383–1388 (2006).

- Shahbazi, M., Atashzar, S. F., Ward, C., Talebi, H. A. & Patel, R. V. Multimodal sensorimotor integration for expert-in-the-loop telerobotic surgical training. IEEE Transactions on Robotics (99), 1–6 (2018).

- Shahbazi, M., Atashzar, S. F., Talebi, H. A. & Patel, R. V. An expertise-oriented training framework for robotics-assisted surgery. 2014 IEEE International Conference on Robotics and Automation (ICRA), 5902–5907 (2014).

Other Resources and Project Files

GitLab Repo: https://git.lcsr.jhu.edu/atar_cis2

Google Drive: https://drive.google.com/drive/folders/14SCRv1vqzIYPqj8pMy0QRrX2cFIgayOF?usp=sharing

Documentation: https://drive.google.com/drive/folders/1EVcFGfJDSnpfe2z_TSQZeiI6QWZ7xP48?usp=sharing