
Mixed Reality for Biopsy Site Localization

Last updated: May 5, 2021

Summary

Skin biopsies are used by dermatologists to diagnose cutaneous conditions, including tumors and rashes. However, if surgery becomes necessary after a biopsy, locating the original biopsy site can be difficult because of factors such as skin healing, biopsy depth, and background skin disease. This difficulty can lead to wrong-site surgery, which is a "never event": an error that is preventable and should never occur.

This project aims to create a mobile augmented reality application (to be deployed on a phone or tablet) that can register biopsy images to surgery images and subsequently overlay the biopsy site on live camera images taken by the mobile device. This would provide dermatologists with guidance sufficient to locate the biopsy site on the patient at the time of surgery.

- Students:
  - Ruby Liu: Undergraduate student, Department of Biomedical Engineering, Senior
  - Liam Wang: Undergraduate student, Department of Biomedical Engineering, Freshman (external to CIS II)
- Primary Mentors:
  - Dr. Peter Kazanzides: Research Professor, Department of Computer Science
  - Dr. Ashley Antony: Resident Doctor, Dermatology
- Other Mentors:
  - Dr. Jeffrey Scott: Assistant Professor, Dermatology
  - Dr. Kristin Bibee: Assistant Professor, Dermatology
  - Dr. Elise Ng: Assistant Professor, Dermatology

Background, Specific Aims, and Significance

Dermatologists commonly use photography to locate biopsy sites, but this approach is prone to human error and can lead to wrong-site surgery or a repeated biopsy. Others have attempted to address this need using various methods and tools, including a UV-fluorescent tattoo [2][3], a transparent grid [4], confocal microscopy [5], and “selfies” [6][7][8][9].

However, none of these have been incorporated into general practice yet, possibly due to cost, insufficient reliability, or excessive disruption to the typical workflow. Our specific aim is to create a mobile augmented reality application (to be deployed on a phone or tablet) that can register biopsy images to surgery images and subsequently overlay the biopsy site on live camera images taken by the mobile device.

Augmented reality and facial recognition have been used together to triangulate biopsy site locations on static photos [10]. However, this approach does not provide a live image overlay and is only effective for biopsies on the face. We intend to create an augmented reality overlay that not only provides live guidance but is also effective for biopsies anywhere on the patient's skin.

If we are successful, the mobile application could be used by dermatologists to improve the accuracy of biopsy site localization, improving outcomes and reducing risks for the patient.

Deliverables

- Minimum:
  - Basic placeholder application with documentation (Liam - 2/26)
    - Application code and documentation are in the repository (the code has since grown beyond the basic placeholder application).
    - A video of the placeholder application can be viewed or downloaded from the repository here.
  - Algorithm to register biopsy site photos to a new photo (Ruby - 3/5)
    - The code is siteRegistration.py in the PythonDraft folder of the repository.
    - Documentation is in the README of the PythonDraft folder.
- Expected:
  - Algorithm to track markers and overlay the biopsy site on live video, with code documentation (Ruby - 4/2)
    - The code is markerTracking.py in the PythonDraft folder of the repository.
    - Documentation is in the README of the PythonDraft folder.
  - Error metrics to quantify the accuracy of the live overlay (Ruby - 4/9)
    - These are reported in the Results section of the final report.
  - Basic working interface with calibration overlay guidance and documentation (Liam - 4/2)
    - This functionality was completed early, as seen in the video of the placeholder application here.
    - Documentation is in the iOSApp folder of the repository.
- Maximum:
  - Completely functional mobile application with documentation (5/1)
    - The code and documentation are in the iOSApp folder of the GitLab repository.
    - A demo video of the completely functional application can be viewed or downloaded here.
  - Experimental data to quantify the geometric accuracy of our application (5/1)
    - These are reported in the Results section of the final report.

Technical Approach

Our intention is to create an application with the following UI workflow:

At the time of biopsy, the procedure does not change; the dermatologist will take two 2D color photos of the biopsy site, one close-up and one from farther away to capture nearby anatomical landmarks.

When the patient comes in for surgery, the dermatologist will import the biopsy image from their photo library on their mobile device. They will also place computer vision tracking markers on the patient near the biopsy site.

Next, the application will display an edge overlay derived from the biopsy photo to help the user take a surgery photo that matches it as closely as possible. The user will then manually label the biopsy site and anatomical features.
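As a rough illustration of the edge overlay step, the sketch below marks edge pixels in a grayscale biopsy photo by thresholding the Sobel gradient magnitude and paints them onto a live frame. It is a minimal sketch, not the app's implementation: the image representation (lists of rows), the threshold of 100, and the function names are assumptions, and a production version would more likely call an optimized routine such as OpenCV's Canny detector.

```python
from typing import List, Tuple

Gray = List[List[int]]            # grayscale image, values 0-255
RGB = Tuple[int, int, int]

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def edge_mask(gray: Gray, threshold: float = 100.0) -> List[List[bool]]:
    """True where the Sobel gradient magnitude exceeds the (assumed) threshold.

    Border pixels stay False because the 3x3 kernel does not fit there.
    """
    h, w = len(gray), len(gray[0])
    mask = [[False] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for j in range(3):
                for i in range(3):
                    p = gray[y + j - 1][x + i - 1]
                    gx += SOBEL_X[j][i] * p
                    gy += SOBEL_Y[j][i] * p
            mask[y][x] = (gx * gx + gy * gy) ** 0.5 > threshold
    return mask


def overlay(live: List[List[RGB]], mask: List[List[bool]],
            color: RGB = (0, 255, 0)) -> List[List[RGB]]:
    """Return a copy of the live frame with biopsy-photo edges painted in color."""
    out = [row[:] for row in live]
    for y, row in enumerate(mask):
        for x, is_edge in enumerate(row):
            if is_edge:
                out[y][x] = color
    return out
```

On a vertical step from black to white, only the two columns straddling the step are marked, which is the thin outline a user would align the live view against.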

After that, the software will internally register the biopsy site to the markers and then overlay the biopsy site on the live camera feed.
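One way to keep the biopsy site attached to the markers from frame to frame is to fit a 2D transform between the marker centroids in the labeled surgery photo and those in the current frame, then apply it to the stored site. The sketch below assumes a least-squares similarity transform (rotation, uniform scale, translation), which needs only two markers; this model choice, the function names, and the assumption that markers are already matched in the same order are illustrative, not the project's confirmed method. Points are treated as complex numbers so that the fit reduces to `w ≈ a*z + b`.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def fit_similarity(ref: List[Point], cur: List[Point]) -> Tuple[complex, complex]:
    """Least-squares similarity transform mapping ref markers onto cur markers.

    Returns (a, b) with cur ~= a * ref + b in complex form, where |a| is the
    scale and arg(a) the rotation. Markers are assumed to be matched by index.
    """
    if len(ref) != len(cur) or len(ref) < 2:
        raise ValueError("need at least 2 matched marker pairs")
    zs = [complex(*p) for p in ref]
    ws = [complex(*p) for p in cur]
    z_mean = sum(zs) / len(zs)
    w_mean = sum(ws) / len(ws)
    # Closed-form solution on centered points: a = sum(conj(z) w) / sum(|z|^2).
    num = sum((z - z_mean).conjugate() * (w - w_mean) for z, w in zip(zs, ws))
    den = sum(abs(z - z_mean) ** 2 for z in zs)
    if den == 0:
        raise ValueError("reference markers coincide")
    a = num / den
    b = w_mean - a * z_mean
    return a, b


def map_point(a: complex, b: complex, p: Point) -> Point:
    """Apply the fitted transform to a point, e.g. the labeled biopsy site."""
    w = a * complex(*p) + b
    return (w.real, w.imag)
```

For example, if every marker appears rotated 90 degrees, doubled in size, and shifted by (5, 5), the fit recovers `a = 2j` and `b = 5+5j`, and a site labeled at (1, 1) is drawn at (3, 7). With four or more markers, a homography could be fitted instead to capture perspective changes.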

Broadly speaking, our application has three parts: the registration algorithm, the live marker tracking, and the mobile augmented reality application.

Registration Algorithm

Ruby will implement the registration algorithm using Python on Windows 10 with OpenCV packages. This can be prototyped with GRIP, an application typically used for rapid prototyping of computer vision algorithms.

The program will record user clicks as pixel coordinates of the biopsy site and tracking points in both the biopsy and surgery photos. If the two photos are sufficiently similar, labeling only one of them may be sufficient, which would reduce inconsistency between human labels.
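A click-labeling step like the one described above can be sketched as a small recorder object whose method has the same argument order as an OpenCV mouse callback, `(event, x, y, flags, param)`. The class name and label names are hypothetical, and the event constant is mirrored locally (OpenCV's `cv2.EVENT_LBUTTONDOWN` has the value 1) so the sketch runs without OpenCV installed.

```python
from typing import Dict, Iterable, Tuple

# Mirrors cv2.EVENT_LBUTTONDOWN so this sketch runs without OpenCV.
EVENT_LBUTTONDOWN = 1


class ClickRecorder:
    """Assigns successive left clicks to a fixed list of point labels."""

    def __init__(self, labels: Iterable[str]) -> None:
        self.labels = list(labels)
        self.points: Dict[str, Tuple[int, int]] = {}

    def on_mouse(self, event: int, x: int, y: int, flags: int, param) -> None:
        # Ignore everything except left-button presses, and stop once all
        # labels have a coordinate.
        if event != EVENT_LBUTTONDOWN or self.done():
            return
        label = self.labels[len(self.points)]
        self.points[label] = (x, y)

    def done(self) -> bool:
        return len(self.points) == len(self.labels)
```

With OpenCV available, the recorder would be attached to a display window with `cv2.setMouseCallback("biopsy", recorder.on_mouse)`, using one recorder per photo.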

Feature detection, possibly corner detection, can be used to find precise tracking points near the clicked points. The program will then compute a 2D-to-2D homography between the two photos and draw a circle or dot at the predicted biopsy site.
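The homography step can be sketched with the direct linear transform: each point pair (x, y) → (u, v) gives two linear equations in the eight unknown entries of H once h33 is fixed to 1, and four or more pairs are solved by least squares. This is a minimal pure-Python sketch with hypothetical function names; it solves the normal equations, which is less numerically robust than the SVD-based fitting used by library routines such as OpenCV's `cv2.findHomography`, and fixing h33 = 1 fails for the rare case where the true h33 is 0.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def _solve(m: Matrix, v: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a square system m x = v."""
    n = len(v)
    a = [row[:] + [v[i]] for i, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[piv][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def find_homography(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """Least-squares homography with h33 = 1 (needs >= 4 pairs, no 3 collinear)."""
    if len(src) != len(dst) or len(src) < 4:
        raise ValueError("need at least 4 point pairs")
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h7 x + h8 y + 1) = h1 x + h2 y + h3, rearranged to be linear in h.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y]); rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y]); rhs.append(v)
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(8)] for i in range(8)]
    atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(8)]
    h = _solve(ata, atb) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_homography(H: Matrix, p: Point) -> Point:
    """Map a pixel (e.g. the biopsy site) through H with perspective division."""
    x, y = p
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)
```

Here `src` would hold the labeled landmarks in the biopsy photo, `dst` the same landmarks in the surgery photo, and `apply_homography(H, site)` the predicted biopsy site to draw.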

To test this, we can start by registering a biopsy photo to itself to check that the marked position is the same as the actual biopsy site position. Then, we can move on to testing our algorithm with proper photo pairs at various locations on the patient.
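Both tests reduce to comparing predicted and true biopsy-site pixels. The sketch below summarizes that comparison as mean, maximum, and RMS Euclidean error in pixels; registering a photo to itself should give errors near zero. The metric set and the `mm_per_pixel` conversion (for instance, a marker's known diameter in millimeters divided by its measured diameter in pixels) are assumptions for illustration, not the project's reported metrics.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def site_errors(predicted: List[Point], actual: List[Point]) -> Dict[str, float]:
    """Mean, max, and RMS Euclidean distance (pixels) between matched points."""
    if not predicted or len(predicted) != len(actual):
        raise ValueError("need matching, non-empty point lists")
    d = [math.dist(p, q) for p, q in zip(predicted, actual)]
    return {
        "mean": sum(d) / len(d),
        "max": max(d),
        "rms": math.sqrt(sum(e * e for e in d) / len(d)),
    }


def to_mm(errors: Dict[str, float], mm_per_pixel: float) -> Dict[str, float]:
    """Convert pixel errors using a hypothetical, separately measured scale."""
    return {k: v * mm_per_pixel for k, v in errors.items()}
```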

Live Marker Tracking

For live marker tracking, we have decided to use colored stickers as markers, which will be placed near the presumed biopsy location. This will also be implemented with OpenCV packages.

The markers can be segmented by thresholding in hue/saturation/value (HSV) space; their contours are then found and filtered, and the marker centroids are computed from the remaining contours. These centroids are used to calculate the 2D transformation of the biopsy site in each frame.
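The thresholding-and-centroid pipeline can be sketched without OpenCV as follows. Pixels are converted to HSV on OpenCV's 8-bit scale (H in 0-179, S and V in 0-255), thresholded, grouped into 4-connected blobs, filtered by a minimum area, and reduced to centroids via first-order moments (m10/m00, m01/m00). Connected-component labeling stands in here for OpenCV's contour finding; the `min_area` value, RGB input order, and function names are assumptions. In OpenCV itself the corresponding calls would be `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)`, `cv2.inRange`, `cv2.findContours`, and `cv2.moments`.

```python
import colorsys
from collections import deque
from typing import List, Tuple

RGB = Tuple[int, int, int]
HSV = Tuple[float, float, float]


def to_cv_hsv(rgb: RGB) -> HSV:
    """RGB (0-255) to HSV on OpenCV's 8-bit scale: H 0-179, S and V 0-255."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return (h * 180.0, s * 255.0, v * 255.0)


def in_range(hsv: HSV, lo: HSV, hi: HSV) -> bool:
    return all(l <= c <= u for c, l, u in zip(hsv, lo, hi))


def marker_centroids(image: List[List[RGB]], lo: HSV, hi: HSV,
                     min_area: int = 4) -> List[Tuple[float, float]]:
    """Centroids (x, y) of 4-connected blobs inside the HSV range."""
    h, w = len(image), len(image[0])
    mask = [[in_range(to_cv_hsv(image[y][x]), lo, hi) for x in range(w)]
            for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    centroids = []
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first flood fill collects one blob's pixels.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            pixels = []
            while queue:
                x, y = queue.popleft()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            # Area filter discards specks and noise.
            if len(pixels) >= min_area:
                n = len(pixels)
                centroids.append((sum(p[0] for p in pixels) / n,
                                  sum(p[1] for p in pixels) / n))
    return centroids
```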

To calibrate for different lighting conditions, the dermatologist can capture a frame from the live feed and select a marker; the marker's pixel color is then used to adjust the HSV threshold.
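A calibration step of this kind could turn the selected marker pixel into an HSV window centered on its color. One detail matters for red markers: hue is circular (0-179 on OpenCV's 8-bit scale), so a window that crosses 0 or 179 must be split into two ranges. The tolerances below are illustrative assumptions, not tuned values from the project.

```python
from typing import List, Tuple

HSV = Tuple[int, int, int]


def hsv_window(sample: HSV, dh: int = 10, ds: int = 60,
               dv: int = 60) -> List[Tuple[HSV, HSV]]:
    """(lo, hi) HSV ranges around a sampled marker color (OpenCV 8-bit scale).

    Returns two ranges when the hue window wraps around 0/179.
    """
    h, s, v = sample
    s_lo, s_hi = max(0, s - ds), min(255, s + ds)
    v_lo, v_hi = max(0, v - dv), min(255, v + dv)
    h_lo, h_hi = h - dh, h + dh
    if h_lo < 0:  # e.g. red near hue 0: also accept hues just below 180
        return [((0, s_lo, v_lo), (h_hi, s_hi, v_hi)),
                ((180 + h_lo, s_lo, v_lo), (179, s_hi, v_hi))]
    if h_hi > 179:  # red near hue 179: also accept hues just above 0
        return [((h_lo, s_lo, v_lo), (179, s_hi, v_hi)),
                ((0, s_lo, v_lo), (h_hi - 180, s_hi, v_hi))]
    return [((h_lo, s_lo, v_lo), (h_hi, s_hi, v_hi))]
```

With OpenCV, each returned range would be passed to `cv2.inRange` and the resulting masks combined with `cv2.bitwise_or`.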

Application Development

For an Xcode approach, we can create a Swift or Objective-C application with OpenCV added as a CocoaPods dependency, using Xcode storyboards and Cocoa Touch for the UI layout. OpenCV also provides an iOS framework that we can use for live AR tracking and overlay within the app.

Alternatively, we may look into Unity, which is better suited to cross-platform development; Xcode, unfortunately, runs only on macOS.

To integrate the mobile application with the registration and live marker tracking algorithms, we intend to use data formats that independent code can import and export, such as JSON or YAML. Each point entry may store a center and radius, or only the point coordinates.
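A JSON exchange file along these lines could be produced and read back with Python dataclasses, as sketched below. The schema (field names `biopsy_photo`, `surgery_photo`, `points`, `label`, `x`, `y`, `radius`) is hypothetical and only illustrates the "center and radius, or just the point" option; on the iOS side, the same structure could be decoded with Swift's `Codable`.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class LabeledPoint:
    label: str                      # e.g. "site" or "marker1" (hypothetical)
    x: float
    y: float
    radius: Optional[float] = None  # None (JSON null) for plain points


@dataclass
class SessionData:
    biopsy_photo: str
    surgery_photo: str
    points: List[LabeledPoint] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict() also converts the nested LabeledPoint objects.
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text: str) -> "SessionData":
        raw = json.loads(text)
        pts = [LabeledPoint(**p) for p in raw.pop("points", [])]
        return cls(points=pts, **raw)
```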

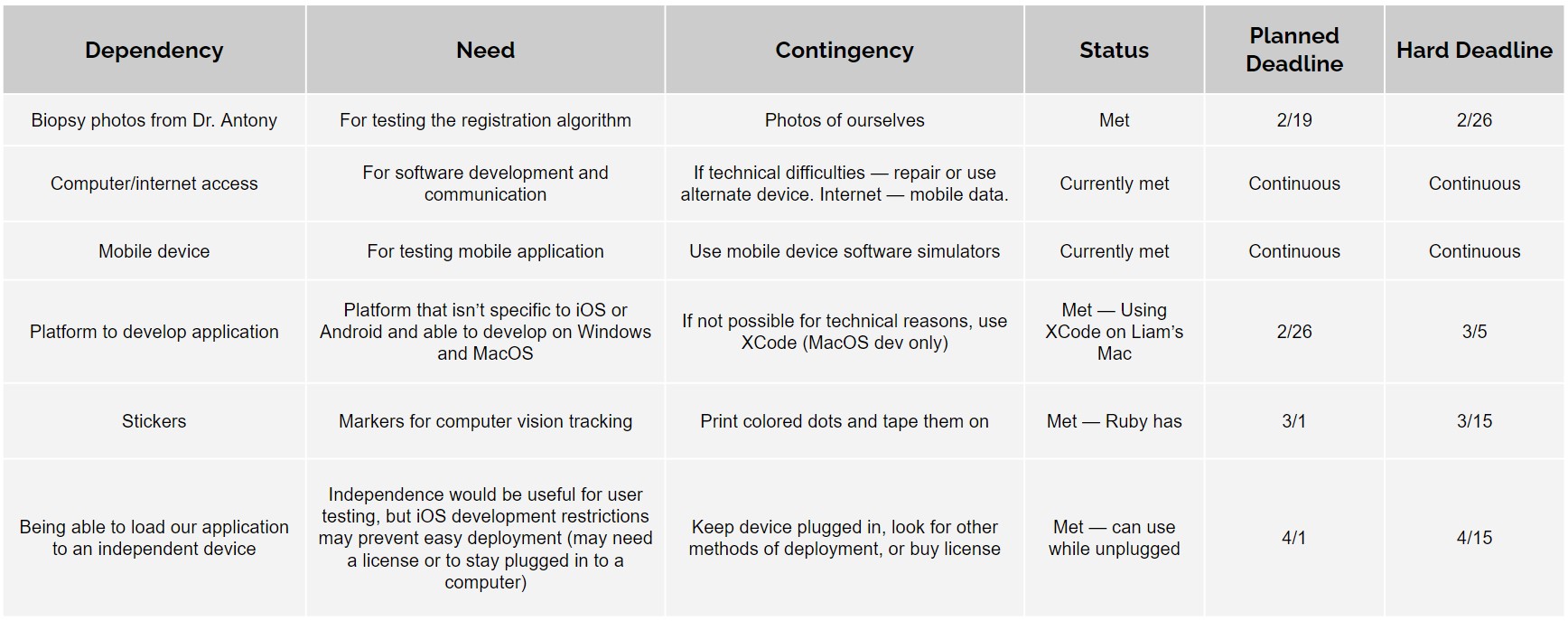

Dependencies

Milestones and Status

Registration and Tracking (Ruby)

- Milestone name: Basic I/O application to record user clicks as anatomical points on images
  - Expected Date: 2/26
  - Status: Finished
- Milestone name: Photo-to-photo registration algorithm
  - Expected Date: 3/5
  - Status: Finished algorithm
- Milestone name: Marker tracking in a video using OpenCV
  - Expected Date: 3/15
  - Status: Finished
- Milestone name: Live overlay of biopsy site with marker tracking and code documentation
  - Planned Date: 4/2
  - Expected Date: 4/2
  - Status: Finished
- Milestone name: Error metrics for live overlay
  - Planned Date: 4/9
  - Expected Date: 4/9
  - Status: Finished
- Milestone name: Experimental data and error metrics for live overlay in real units
  - Planned Date: 5/1
  - Expected Date: 5/1
  - Status: Finished

Application Development (Liam)

- Milestone name: Basic placeholder application + determine how programs will interface
  - Expected Date: 2/26
  - Status: Finished
- Milestone name: Edge detection overlay
  - Expected Date: 3/5
  - Status: Finished
- Milestone name: Working UI to select points on image
  - Expected Date: 3/15
  - Status: Finished
- Milestone name: Add photo registration and marker tracking into app
  - Planned Date: 4/2
  - Expected Date: 4/2
  - Status: Finished
- Milestone name: Complete and deploy final application
  - Planned Date: 5/1
  - Expected Date: 5/1
  - Status: Finished application; deployed only on Liam's iPhone

Reports and presentations

- Proposal Slides
- Project Plan
  - Project plan: Google Slides, PDF
- Project Background Reading
  - See Bibliography below for links.
- Project Checkpoint
  - Project checkpoint presentation: Google Slides, PDF
- Paper Seminar Presentations
  - Paper seminar presentation: Google Slides, PDF
- Project Final Presentation
  - Final Presentation: Google Slides, PDF
- Project Final Report
  - iOS app documentation (Note: made by Liam, external to CIS II)

Project Bibliography

- [1] Zhang J, Rosen A, Orenstein L, et al. Factors associated with biopsy site identification, postponement of surgery, and patient confidence in a dermatologic surgery practice. J Am Acad Dermatol. 2016;74:1185-1193.
- [2] Chuang GS, Gilchrest BA. Ultraviolet-fluorescent tattoo location of cutaneous biopsy site. Dermatol Surg. 2012 Mar;38(3):479-83. doi: 10.1111/j.1524-4725.2011.02238.x. Epub 2011 Dec 15. PMID: 22171575.
- [3] Russell K, Schleichert R, Baum B, Villacorta M, Hardigan P, Thomas J, Weiss E. Ultraviolet-Fluorescent Tattoo Facilitates Accurate Identification of Biopsy Sites. Dermatol Surg. 2015 Nov;41(11):1249-56. doi: 10.1097/DSS.0000000000000511. PMID: 26445291.
- [4] Rajput V. Transparent grid system as a novel tool to prevent wrong-site skin surgery on the back. J Am Acad Dermatol. 2019 Nov 8:S0190-9622(19)33013-0. doi: 10.1016/j.jaad.2019.11.011. Epub ahead of print. PMID: 31712173.
- [5] Navarrete-Dechent C, Mori S, Cordova M, Nehal KS. Reflectance confocal microscopy as a novel tool for presurgical identification of basal cell carcinoma biopsy site. J Am Acad Dermatol. 2019 Jan;80(1):e7-e8. doi: 10.1016/j.jaad.2018.08.058. Epub 2018 Sep 20. PMID: 30244067.
- [6] Lichtman MK, Countryman NB. Cell phone assisted identification of surgery site. Dermatol Surg. 2013;39(3 Pt 1):491-492.
- [7] Nijhawan RI, Lee EH, Nehal KS. Biopsy site selfies--a quality improvement pilot study to assist with correct surgical site identification. Dermatol Surg. 2015;41(4):499-504.
- [8] Highsmith JT, Weinstein DA, Highsmith MJ, Etzkorn JR. BIOPSY 1-2-3 in Dermatologic Surgery: Improving Smartphone use to Avoid Wrong-Site Surgery. Technol Innov. 2016;18(2-3):203-206. doi: 10.21300/18.2-3.2016.203.
- [9] DaCunha M, Habashi-Daniel A, Hanson C, Nichols E, Fraga GR. A smartphone application to improve the precision of biopsy site identification: A proof-of-concept study. Health Informatics J. 2020 Mar 16:1460458220910341. doi: 10.1177/1460458220910341. Epub ahead of print. PMID: 32175791.
- [10] Timerman D, Antonov NK, Dana A, Gallitano SM, Lewin JM. Facial lesion triangulation using anatomic landmarks and augmented reality. J Am Acad Dermatol. 2020 Nov;83(5):1481-1483. doi: 10.1016/j.jaad.2020.03.040. Epub 2020 Mar 25. PMID: 32222445.

Other Resources and Project Files
