
Tracking of Orthopaedic Instruments in 3D Camera Views

Last updated: Mar 8th, 2017 6:09 pm

Summary


- Students: Athira Jacob and Jie Ying Wu

- Mentor(s): Dr. Bernhard Fuerst, Javad Fotouhi, Mathias Unberath, Sing Chun Lee, Dr. Nassir Navab

Background, Specific Aims, and Significance

Kirschner wires, or K-wires, are long, smooth stainless steel pins that are widely used in orthopedic surgery to hold bones together. The pins are driven through the skin using a power or hand drill. They can be used for temporary fixation before screws are inserted, or for permanent fixation while the bones heal. K-wire and screw insertion is currently performed with minimally invasive techniques that rely on modern imaging technology and computer-aided navigation systems. Correct placement requires numerous intra-operative X-ray images, and the surgeon often needs multiple attempts to achieve satisfactory placement and orientation [1]. Misplacement of the K-wire could cause severe damage to the external iliac artery and vein, the obturator nerve, and other structures [2]. Repeated attempts lead to multiple entry wounds for the patient, high X-ray exposure for the patient and the surgical staff, increased OR time, and frustration for the surgical team. A single K-wire insertion can take as long as 10 minutes [3]. The main challenge during K-wire insertion has been identified as the mental alignment of the patient, the medical instruments, and the intra-operative X-rays [4]. Recently, camera-augmented solutions have been proposed to help surgeons with this mental alignment [5,6].

In this project, we aim to track the K-wire in 3D using stereo RGB cameras and a convolutional neural network.

Deliverables

- Minimum: (Expected by Apr 11th)
  - Phantom to create training data
  - Modular data set
    - Foreground videos with K-wire against green drape
    - Segmentations of the K-wire position
  - CNN trained on K-wire video with plain background to segment position
- Expected: (Expected by Apr 28th)
  - Realistic data set of the surgical workspace, created by compositing foreground videos with background videos of the surgical workspace containing instruments (e.g., a scalpel)
  - CNN trained with realistic data that can segment the K-wire
  - Algorithm to extract K-wire orientation from segmented positions in stereo pairs
- Maximum: (Expected by May 15th)
  - Algorithm to estimate the position of the K-wire tip inside the patient/phantom

Technical Approach


We propose real-time tracking of the K-wire from RGB data. Traditional computer vision methods struggle with this task because of non-uniform lighting, occlusions, and the complex background. Hence, we use deep learning to segment the surgical video.

The solution can be divided into three main parts:

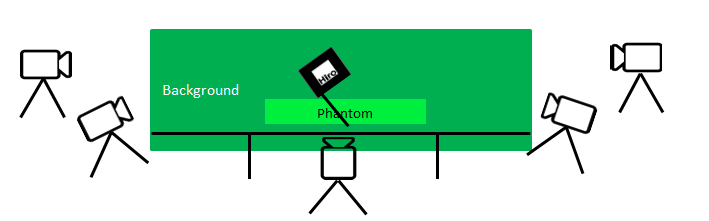

1. Data Creation

A large, high-quality dataset is essential for training a successful neural network. Since no dataset is available for K-wire tracking, our first step is to create one. We will capture the foreground (the K-wire) and the background (the scene, including drape, instruments, etc.) separately and composite them in stages to generate data of varying complexity. Because the foreground is captured separately against a plain background (Figure 1a, left), we can easily segment the K-wire to obtain soft ground truth for its position. We propose to train the network on the simplest data first and to increase the complexity of the data once we have a basic trained network that can distinguish the K-wire against a plain background. The final step would be to compose a dataset using actual videos from a surgery.

Figure 1 a) Sample foreground shot before segmentation (left). Sample background images (middle and right)
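The compositing step can be sketched with NumPy, assuming the foreground is filmed against the green drape listed in the deliverables. The function names and the `green_margin`/`softness` thresholds below are hypothetical and would need tuning on real footage; the resulting alpha matte doubles as the soft ground-truth mask for the K-wire.

```python
import numpy as np

def chroma_key_mask(fg_rgb, green_margin=40.0, softness=40.0):
    """Soft foreground alpha in [0, 1] for a frame shot against a green drape.

    A pixel is treated as drape once its green channel exceeds the larger of its
    red and blue channels by more than `green_margin` (hypothetical threshold);
    alpha ramps linearly from 1 to 0 over the next `softness` intensity levels.
    """
    rgb = fg_rgb.astype(np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    greenness = g - np.maximum(r, b)            # large for drape pixels
    alpha = 1.0 - (greenness - green_margin) / softness
    return np.clip(alpha, 0.0, 1.0)

def composite(fg_rgb, bg_rgb, alpha):
    """Alpha-blend the keyed foreground over a background frame of the same size."""
    a = alpha[..., None]
    out = a * fg_rgb.astype(np.float64) + (1.0 - a) * bg_rgb.astype(np.float64)
    return out.round().astype(np.uint8)

# Toy example: a 4x4 green-drape frame with a one-pixel-wide grey "wire" row.
fg = np.zeros((4, 4, 3), dtype=np.uint8)
fg[...] = (0, 200, 0)
fg[1, :] = (150, 150, 150)
bg = np.full((4, 4, 3), (10, 20, 30), dtype=np.uint8)
alpha = chroma_key_mask(fg)      # soft ground-truth mask for the wire
frame = composite(fg, bg, alpha)  # synthetic training frame
```

Because the same `alpha` is used both as the label and as the blending weight, every composited frame comes with a pixel-aligned segmentation for free, which is what makes staged compositing with increasingly complex backgrounds cheap.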

2. Network architecture

A few potential network architectures have been identified.

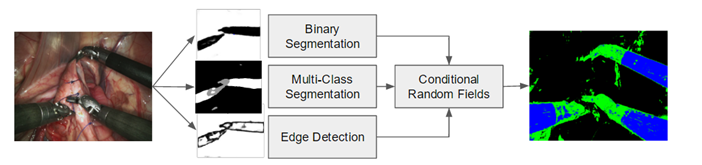

a. Holistically-nested Edge Detection for tool segmentation [10]

Figure 3) Schematic of the network architecture

This approach uses three fully convolutional networks (FCNs) to simultaneously perform 2D multi-class segmentation, binary segmentation, and edge detection. Finally, conditional random fields (CRFs) refine the multi-class segmentation mask using the outputs of the binary segmentation and edge detection networks.

b. U-Nets [11]

U-Nets have been shown to give good results in biomedical image segmentation. They are fully convolutional networks whose skip connections combine features at different resolutions, producing precise segmentations that retain semantic information. In addition, extensive data augmentation allows them to be trained with fewer samples.
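To illustrate the augmentation point, the NumPy sketch below applies the same random geometric transform to a frame and its K-wire mask so that the labels stay aligned, while brightness jitter touches the image only. The function `augment_pair` and the chosen transforms (90-degree rotations, horizontal flips, brightness gain) are assumptions for illustration; Ronneberger et al. [11] rely mainly on elastic deformations, which are omitted here for brevity.

```python
import numpy as np

def augment_pair(image, mask, rng):
    """Apply one random flip/rotation to an image and its mask, jitter brightness.

    Geometric transforms are shared so the mask still covers the K-wire pixels;
    the photometric gain is applied to the image only.
    """
    k = int(rng.integers(4))                     # number of 90-degree rotations
    image = np.rot90(image, k, axes=(0, 1))
    mask = np.rot90(mask, k, axes=(0, 1))
    if rng.random() < 0.5:                       # horizontal flip
        image = image[:, ::-1]
        mask = mask[:, ::-1]
    gain = rng.uniform(0.8, 1.2)                 # hypothetical brightness range
    image = np.clip(image.astype(np.float64) * gain, 0, 255).astype(np.uint8)
    return image, mask.copy()

rng = np.random.default_rng(0)
mask = np.zeros((8, 8), dtype=bool)
mask[2, 1:7] = True                              # toy horizontal "wire"
image = np.stack([mask * 200] * 3, axis=-1).astype(np.uint8)
aug_image, aug_mask = augment_pair(image, mask, rng)
```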

3. Pose Estimation

We will use epipolar geometry to estimate the pose from stereo images: the 2D segmentations from the two RGB images can be used to recover the 3D position of the K-wire with respect to the stereo camera. The cameras will be pre-calibrated with a checkerboard pattern. While capturing our data, we will attach an AR tag to the K-wire to create ground truth for its location. The AR tag will then be removed before the video is recorded.
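For a point identified in both views (for example a corner of the AR tag, or a visible end of the wire), the 3D position can be recovered by standard linear (DLT) triangulation. The sketch below assumes the 3x4 projection matrices `P1` and `P2` are already available from the checkerboard calibration; the function names and the toy camera parameters are hypothetical.

```python
import numpy as np

def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen in two calibrated views.

    P1, P2: 3x4 projection matrices. x1, x2: (u, v) pixel coordinates of the
    same physical point. Each view contributes the rows u*p3 - p1 and
    v*p3 - p2, where pk is the k-th row of that view's P.
    """
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]                     # right singular vector of the smallest singular value
    return X[:3] / X[3]            # dehomogenise

def project(P, X):
    h = P @ np.append(X, 1.0)
    return h[:2] / h[2]

# Toy stereo rig (hypothetical intrinsics, 10 cm baseline along x).
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])

X_true = np.array([0.02, -0.01, 0.6])
X_est = triangulate_dlt(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noisy real segmentations the DLT estimate is usually refined by minimizing reprojection error, but the linear solution is a common starting point.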

For our validation dataset, acquired with an X-ray and RGBD camera setup as in CamC [12], we will calibrate the two devices with a metal-lined checkerboard pattern. The orientation of the K-wire is then given by the direction of the reconstructed 3D line, which we extend to infinity. The final step (maximum deliverable) would be to identify the tip of the K-wire on this infinite line, which could potentially be done by identifying markings on the K-wire.
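Because the K-wire is thin and largely featureless, matching individual points along it between two views is ambiguous. One standard alternative, sketched below under the assumption of known projection matrices, is to fit a 2D line to each segmentation, back-project each image line l to the plane pi = P^T l, and intersect the two planes; the direction of the resulting 3D line is the K-wire orientation. The function names and toy values are hypothetical, and this is not necessarily the method the project will adopt.

```python
import numpy as np

def fit_image_line(mask):
    """Fit a homogeneous 2D line l = (a, b, c), with a*u + b*v + c = 0, to mask pixels.

    Total least squares: the line passes through the pixel centroid and its
    normal is the direction of least variance of the pixel coordinates.
    """
    v, u = np.nonzero(mask)                      # rows are v, columns are u
    pts = np.column_stack([u, v]).astype(np.float64)
    centroid = pts.mean(axis=0)
    _, _, vt = np.linalg.svd(pts - centroid, full_matrices=False)
    normal = vt[-1]
    return np.array([normal[0], normal[1], -(normal @ centroid)])

def line_from_two_views(P1, P2, l1, l2):
    """Intersect the back-projected planes pi = P^T l of two image lines.

    Returns (point, unit direction) of the 3D line in the frame of P1 and P2.
    """
    pi1, pi2 = P1.T @ l1, P2.T @ l2
    n1, n2 = pi1[:3], pi2[:3]
    direction = np.cross(n1, n2)                 # lies in both planes
    direction /= np.linalg.norm(direction)
    # Minimum-norm point satisfying n1.X + d1 = 0 and n2.X + d2 = 0.
    A = np.vstack([n1, n2])
    b = -np.array([pi1[3], pi2[3]])
    point, *_ = np.linalg.lstsq(A, b, rcond=None)
    return point, direction

def project(P, X):
    h = P @ np.append(X, 1.0)
    return h[:2] / h[2]

# Toy stereo rig (hypothetical intrinsics, 10 cm baseline along x).
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])

# Two points on a hypothetical K-wire. In practice l1 and l2 would come from
# fit_image_line() on each view's segmentation; here they are exact.
A = np.array([-0.05, -0.04, 0.5])
B = np.array([0.03, 0.06, 0.7])
l1 = np.cross(np.append(project(P1, A), 1.0), np.append(project(P1, B), 1.0))
l2 = np.cross(np.append(project(P2, A), 1.0), np.append(project(P2, B), 1.0))
point, direction = line_from_two_views(P1, P2, l1, l2)
```

The construction degenerates when the wire lies in an epipolar plane, since the two back-projected planes then coincide, so camera placement relative to the expected wire orientation matters.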

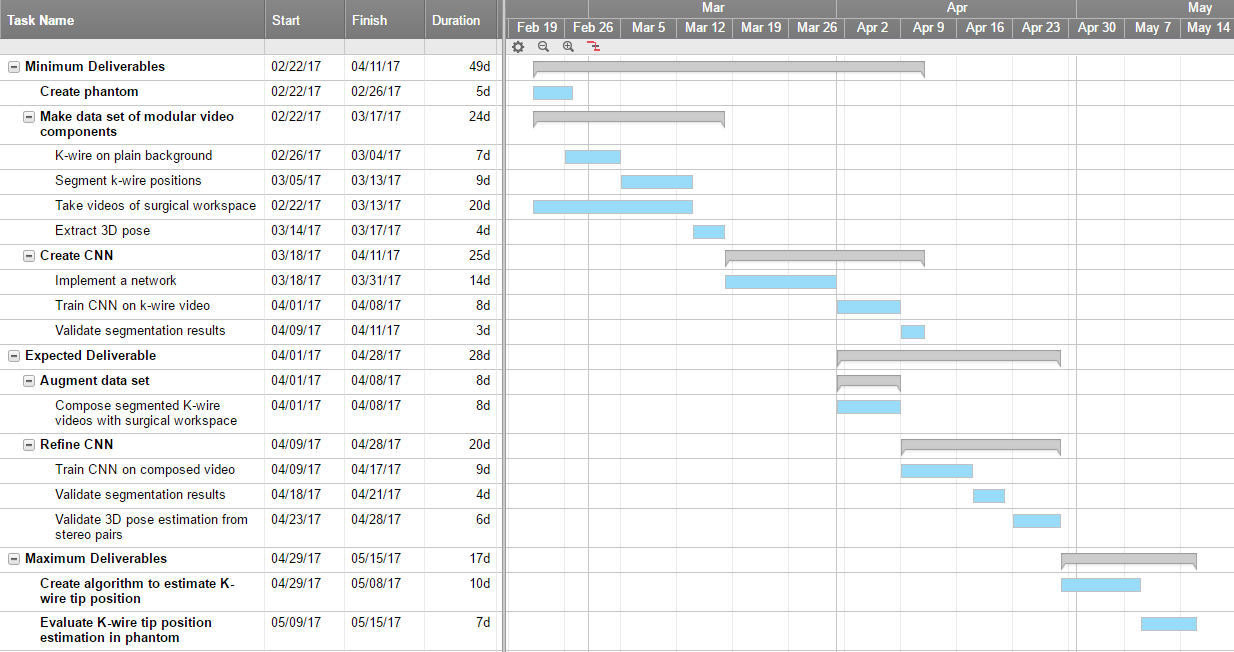

Timeline

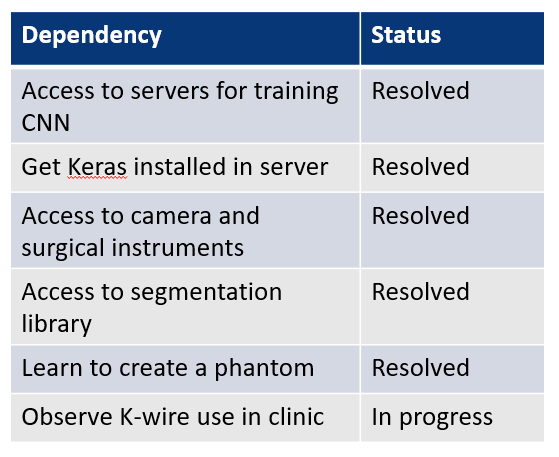

Dependencies

All dependencies except one have been resolved. The remaining one, “Observe K-wire use in clinic,” depends on our surgical collaborators, Dr. Alex Johnson and Dr. Greg Osgood, who will inform us if there is an appropriate surgery using K-wires that we can observe. If this dependency is not met, we will still complete our project, but will ask Dr. Johnson to set up a scene as close to the real situation as possible in the Mock OR, using our phantom and fake blood.

Milestones and Status

- Milestone name: Phantom setup
  - Completed Date: 2/26/17
  - Status: Complete
- Milestone name: Data set
  - Planned Date: 3/17/17
  - Expected Date: 3/17/17
  - Status: Started collecting data; more videos still need to be recorded
- Milestone name: CNN initial training (Minimum deliverable)
  - Planned Date: 4/11/17
  - Expected Date: 4/11/17
  - Status: Not started
- Milestone name: 3D Position Recovery (Expected deliverable)
  - Planned Date: 4/28/17
  - Expected Date: 4/28/17
  - Status: Not started
- Milestone name: Tip position estimation (Maximum deliverable)
  - Planned Date: 5/15/17
  - Expected Date: 5/15/17
  - Status: Not started

Reports and presentations

- Project Plan

- Project Background Reading
  - See the Project Bibliography below for links.

- Project Checkpoint

- Paper Seminar Presentations

- Project Final Poster

- Project Final Report


Project Bibliography

Reading list:

- Fischer, Marius, et al. “Preclinical usability study of multiple augmented reality concepts for K-wire placement.” International Journal of Computer Assisted Radiology and Surgery 11.6 (2016): 1007-1014.

- Jégou, S., Drozdzal, M., Vazquez, D., Romero, A., & Bengio, Y. (2016). The One Hundred Layers Tiramisu: Fully Convolutional DenseNets for Semantic Segmentation. arXiv preprint.

- Long, Jonathan, Evan Shelhamer, and Trevor Darrell. “Fully convolutional networks for semantic segmentation.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2015.

- Pakhomov et al., Semantic-boundary-driven approach to Instrument Segmentation for Robotic Surgery

- Lee et al., Simultaneous Segmentation, Reconstruction and Tracking of Surgical Tools in Computer Assisted Orthopedics Surgery

- Ronneberger, O., Fischer, P., & Brox, T. (2015). U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer Assisted Intervention - MICCAI 2015 (Vol. 9351, pp. 234–241). Springer, Cham. https://doi.org/10.1007/978-3-319-24574-4_28

- Szegedy, C., Reed, S., Erhan, D., Anguelov, D., & Ioffe, S. (2014). Scalable, High-Quality Object Detection. arXiv. Retrieved from http://arxiv.org/abs/1412.1441

References:

- [1] Stöckle, Ulrich, Klaus Schaser, and Benjamin König. “Image guidance in pelvic and acetabular surgery—expectations, success and limitations.” Injury 38.4 (2007): 450-462.

- [2] Guy, Pierre, et al. “The ‘safe zone’ for extra-articular screw placement during intra-pelvic acetabular surgery.” Journal of Orthopaedic Trauma 24.5 (2010): 279-283.

- [3] Starr, Adam J., Charles M. Reinert, and Alan L. Jones. “Percutaneous fixation of the columns of the acetabulum: a new technique.” Journal of Orthopaedic Trauma 12.1 (1998): 51-58.

- [4] Starr, A. J., et al. “Preliminary results and complications following limited open reduction and percutaneous screw fixation of displaced fractures of the acetabulum.” Injury 32 (2001): 45-50.

- [5] Navab, Nassir, Sandro-Michael Heining, and Joerg Traub. “Camera augmented mobile C-arm (CAMC): calibration, accuracy study, and clinical applications.” IEEE Transactions on Medical Imaging 29.7 (2010): 1412-1423.

- [6] Habert, Séverine, et al. “RGBDX: First design and experimental validation of a mirror-based RGBD X-ray imaging system.” Mixed and Augmented Reality (ISMAR), 2015 IEEE International Symposium on. IEEE, 2015.

- [7] Fischer, Marius, et al. “Preclinical usability study of multiple augmented reality concepts for K-wire placement.” International Journal of Computer Assisted Radiology and Surgery 11.6 (2016): 1007-1014.

- [8] Liu, Li, et al. “Computer assisted planning and navigation of periacetabular osteotomy with range of motion optimization.” International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer International Publishing, 2014.

- [9] Synowitz, Michael, and Juergen Kiwit. “Surgeon’s radiation exposure during percutaneous vertebroplasty.” Journal of Neurosurgery: Spine 4.2 (2006): 106-109.

- [10] Pakhomov et al., Semantic-boundary-driven approach to Instrument Segmentation for Robotic Surgery

- [11] Ronneberger, O., Fischer, P., & Brox, T. (2015). U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer Assisted Intervention - MICCAI 2015 (Vol. 9351, pp. 234–241). Springer, Cham. https://doi.org/10.1007/978-3-319-24574-4_28

- [12] Navab, Nassir, A. Bani-Kashemi, and Matthias Mitschke. “Merging visible and invisible: Two camera-augmented mobile C-arm (CAMC) applications.” Augmented Reality, 1999.(IWAR'99) Proceedings. 2nd IEEE and ACM International Workshop on. IEEE, 1999.

Other Resources and Project Files
