
Robotic Operation of ICU Equipment in Contagious Environments

Last updated: 2021.May.6

Summary

Our project aims to design an integrated tele-operable robotic system, based on a 2D cartesian robot and an object recognition algorithm, that enters the ICU room on behalf of an ICU team member. The robot is designed to recognize key ICU equipment, operate it, and relay key information from that equipment straight back to the operator. This reduces the time, protective gear, and exposure risk that an ICU team member incurs when entering an ICU room during the COVID-19 pandemic.

- Students: Tianyu (David) Wang, Jiayin (Jaelyn) Qu

- Mentors: Dr. Axel Krieger, Dr. Balazs P. Vagvolgyi, Dr. Sajid H. Manzoor

Background, Specific Aims, and Significance

The COVID-19 pandemic has caused a tremendous global surge in demand for ICU devices and staff. Because the virus is highly contagious and directly affects the respiratory system, about ⅓ of patients with COVID-19 are believed to require ICU admission at some point [1]. This poses great challenges for the medical system, as hospitals are overloaded with COVID patients in the ICU. As of February, the average national ICU occupancy was still at 73 % [2], almost 10 % higher than in 2010 [3]. Most significantly, given COVID’s mode of transmission, medical workers are at risk every time they enter an ICU room to make routine adjustments and check on patients. Furthermore, each entry into the ICU consumes a full set of PPE, and dressing properly in PPE takes considerable time.

ICU teams therefore need a novel way to remotely monitor and adjust settings on key ICU equipment, so that they can provide consistent care to all patients while reducing material cost and the risk of exposure for ICU staff during routine checkups.

Our goal is to build a 2D cartesian robot that recognizes the device, loads its pre-configured interface layout, and manipulates an oscilloscope (our model for ICU equipment) using its end-effector. The robot is controlled through a working user interface that lets the user operate it remotely.

Concept Sketch for Proposed Robotics System

Technical Approach

Summary

To make our solution feasible, we modeled the target devices with oscilloscopes and simplified the problem from 6 to 3 DOF by assuming that the robot is already aligned with the device at an ideal position. We then broke the problem down into two components: device identification and device operation.

For device identification, we trained an object recognition model to recognize the device from a distance, and defined a JSON structure to load the corresponding interface configuration for the device based on user input. The model currently has a classification accuracy of 86 %.

For device operation, we built a user interface on a Python-ROS system that allows the user to control the robot remotely. In addition, we designed a novel end-effector to interact effectively and accurately with the target device, and built a 2D cartesian robot for testing the end-effector.

Lastly, we incorporated live camera feedback to relay important information to the user and to monitor the interaction of the end-effector with the device. However, this portion is still under construction and not yet optimized for output.

Identify Device:

1. Trained an object recognition model to recognize the device from a distance, and defined a JSON structure to load the corresponding interface configuration for the device based on user input.

The overall approach is to take pictures and videos of the desired equipment and use this data to train a neural network in TensorFlow that can recognize the equipment. Note that, due to restrictions on medical appliances, we eventually chose to use an oscilloscope in place of a real ventilator, since the oscilloscope also has buttons, switches, knobs, and screens.
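The JSON interface configuration mentioned in step 1 could be organized along the following lines. This is only a minimal sketch: the field names, control names, and coordinates are hypothetical and do not reflect the project's actual schema.

```python
import json
from dataclasses import dataclass

# Hypothetical layout for one device. Field names and coordinates are
# illustrative assumptions, not the project's real configuration.
EXAMPLE_CONFIG = """
{
  "device": "oscilloscope",
  "controls": [
    {"name": "power", "type": "button", "x_mm": 20.0, "y_mm": 15.0},
    {"name": "time_base", "type": "knob", "x_mm": 210.0, "y_mm": 60.0},
    {"name": "display", "type": "screen", "x_mm": 90.0, "y_mm": 80.0}
  ]
}
"""

VALID_TYPES = {"button", "knob", "screen"}


@dataclass(frozen=True)
class Control:
    name: str
    kind: str    # one of VALID_TYPES
    x_mm: float  # position on the device panel
    y_mm: float


def load_layout(text):
    """Parse a JSON layout and return a name -> Control lookup table."""
    raw = json.loads(text)
    layout = {}
    for item in raw["controls"]:
        if item["type"] not in VALID_TYPES:
            raise ValueError(f"unknown control type: {item['type']}")
        layout[item["name"]] = Control(
            item["name"], item["type"], float(item["x_mm"]), float(item["y_mm"])
        )
    return layout


layout = load_layout(EXAMPLE_CONFIG)
```

With a table like this, a request such as "turn the time base knob" can be resolved to a control type and a panel coordinate before any motion is commanded.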

Sample image of oscilloscope being labeled for training.


General steps of object recognition include: (a) collecting and labelling the data; (b) training the model; (c) testing the model on new data sets.

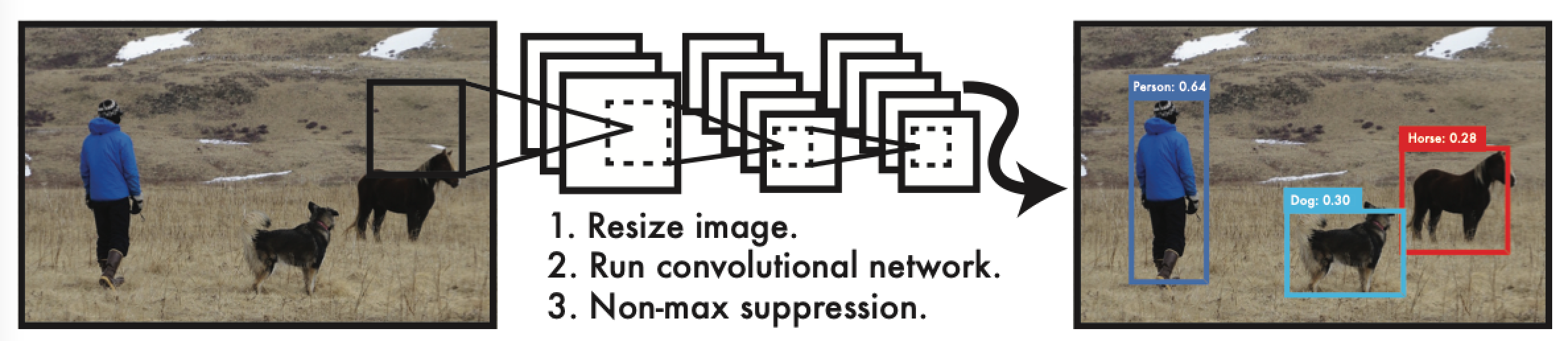

Recognition of the oscilloscope uses the YOLO (You Only Look Once) algorithm. The benefit of YOLO is that it achieves relatively fast recognition while learning general object representations with high accuracy compared with other object detection methods, including DPM and R-CNN.

General workflow of YOLO network
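YOLO-style detectors output candidate bounding boxes with confidence scores, which are usually filtered by a confidence threshold and then by non-maximum suppression based on intersection over union (IoU). The sketch below illustrates that post-processing step in plain Python. The thresholds and box format are assumptions and are not taken from the project's trained model.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, conf_threshold=0.5, iou_threshold=0.5):
    """Greedy non-maximum suppression over (box, confidence) pairs.

    Both thresholds are assumed values chosen for illustration.
    """
    # Drop low-confidence boxes, then visit the rest from most to least confident.
    candidates = sorted(
        (d for d in detections if d[1] >= conf_threshold),
        key=lambda d: d[1],
        reverse=True,
    )
    kept = []
    for box, conf in candidates:
        # Keep a box only if it does not overlap a stronger box too much.
        if all(iou(box, kept_box) < iou_threshold for kept_box, _ in kept):
            kept.append((box, conf))
    return kept
```

For a single-device scene such as one oscilloscope, the highest-confidence surviving box would be the natural choice for the detected device.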


Operate Device

1. Built a user interface on a Python-ROS system that allows the user to control the robot remotely.

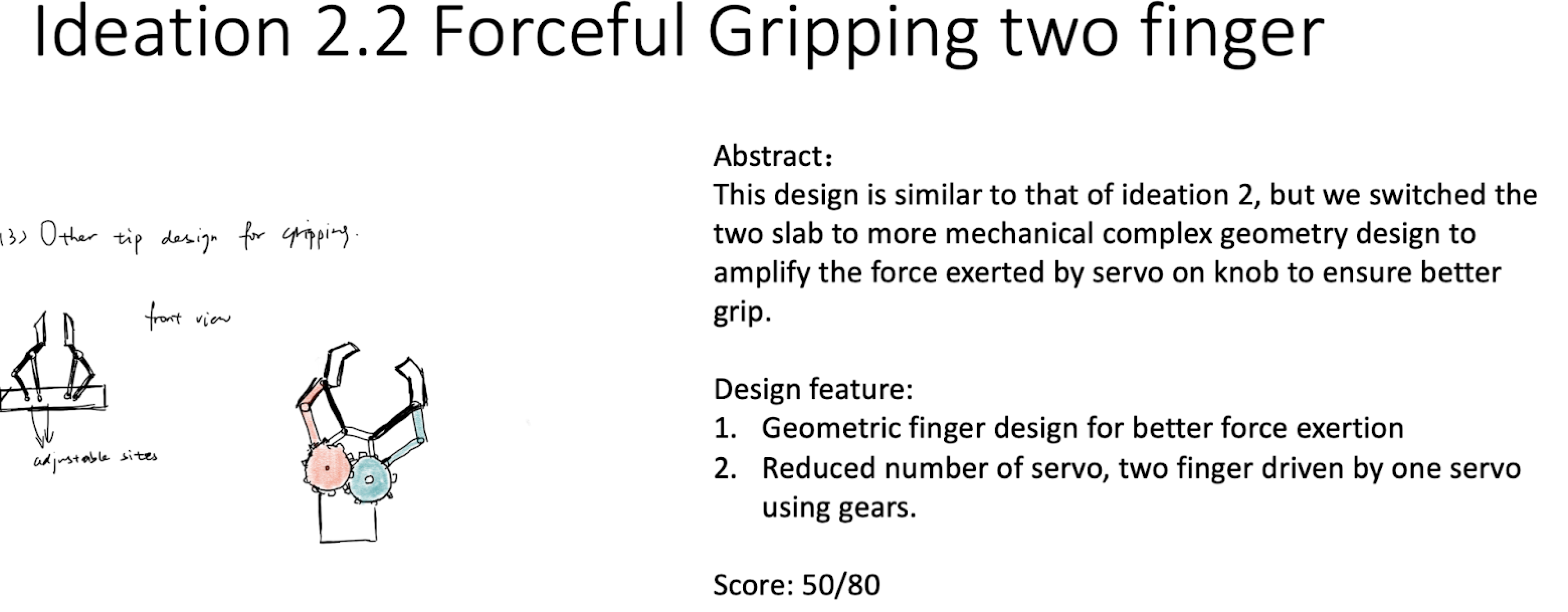

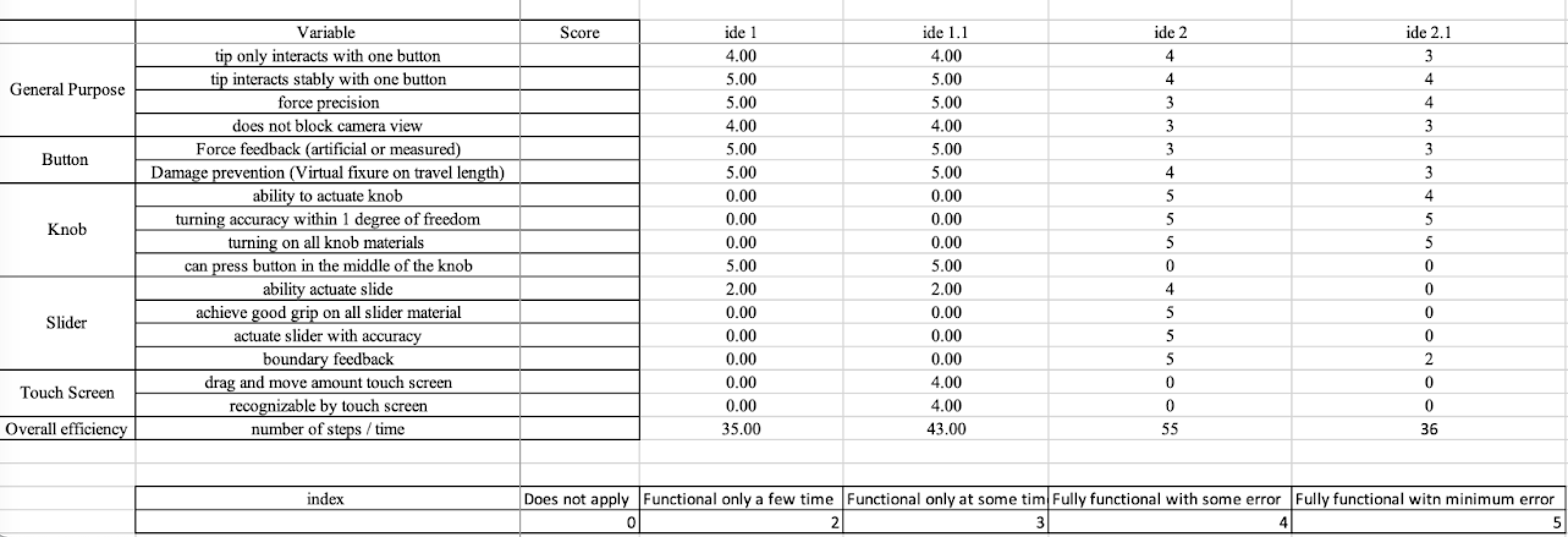
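One way a remote user interface might hand a request to the robot is as a small serialized command. The sketch below uses only the Python standard library. The command fields and action names are hypothetical, and in a ROS setup the encoded string could, for example, be carried in a `std_msgs/String` message on a topic of the team's choosing.

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical set of actions the end-effector supports.
ALLOWED_ACTIONS = {"press_button", "turn_knob", "tap_screen"}


@dataclass(frozen=True)
class RobotCommand:
    action: str             # one of ALLOWED_ACTIONS
    x_mm: float             # target position on the device panel
    y_mm: float
    angle_deg: float = 0.0  # only meaningful for turn_knob


def encode_command(cmd):
    """Validate a command and serialize it to a JSON string."""
    if cmd.action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {cmd.action}")
    return json.dumps(asdict(cmd), sort_keys=True)


def decode_command(payload):
    """Rebuild a command on the robot side and validate it again."""
    cmd = RobotCommand(**json.loads(payload))
    if cmd.action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {cmd.action}")
    return cmd


payload = encode_command(RobotCommand("turn_knob", 210.0, 60.0, angle_deg=30.0))
received = decode_command(payload)
```

Validating on both ends keeps a malformed UI request from reaching the motors.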

2. Designed a novel end-effector to interact effectively and accurately with the target device.

Design Criteria used to evaluate ideation


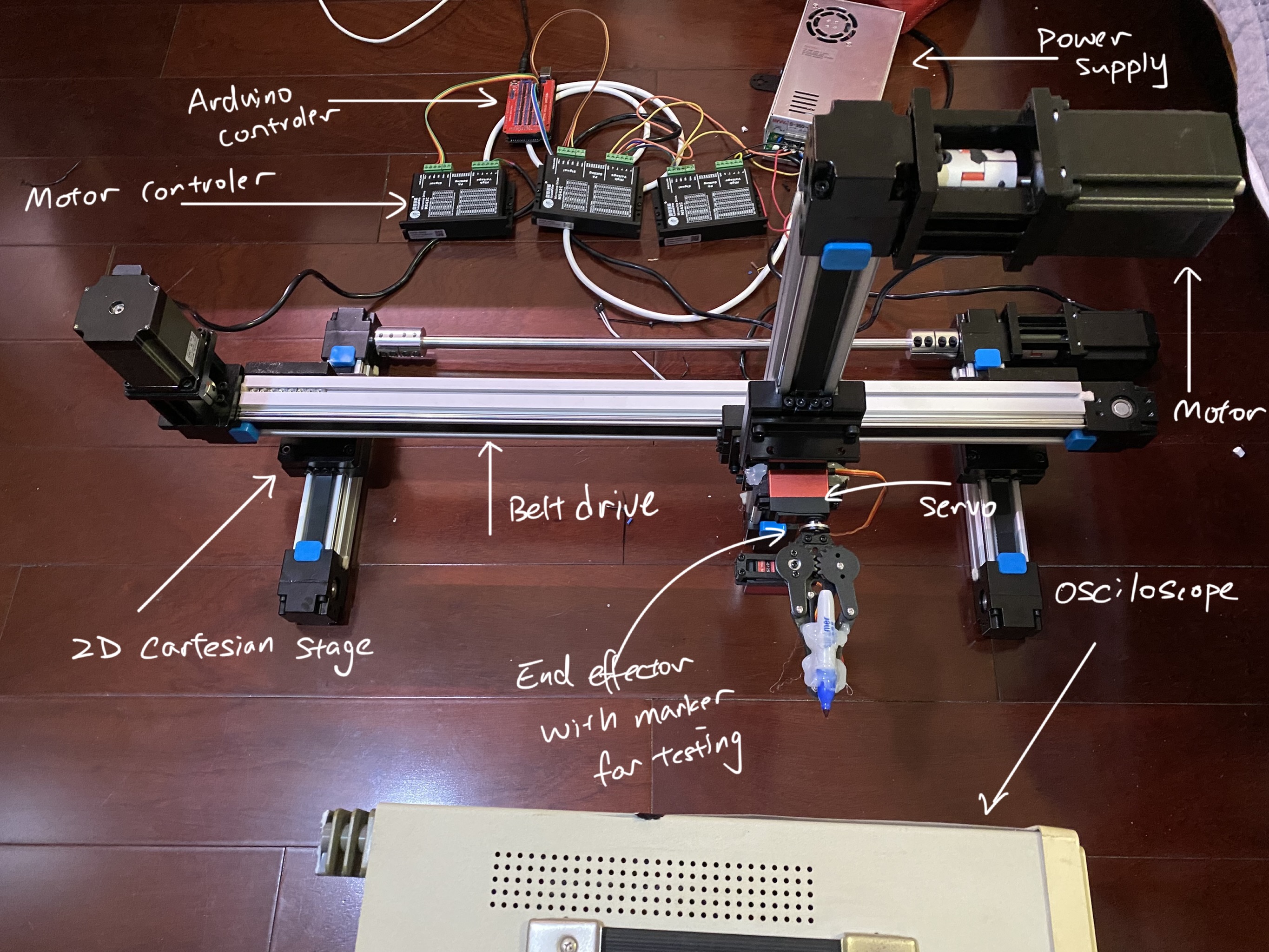

3. Built a 2D cartesian robot for testing the end effector.

To construct a cartesian robot with an end effector optimized for turning knobs, pressing buttons, and interacting with the screen, we will take the following steps.

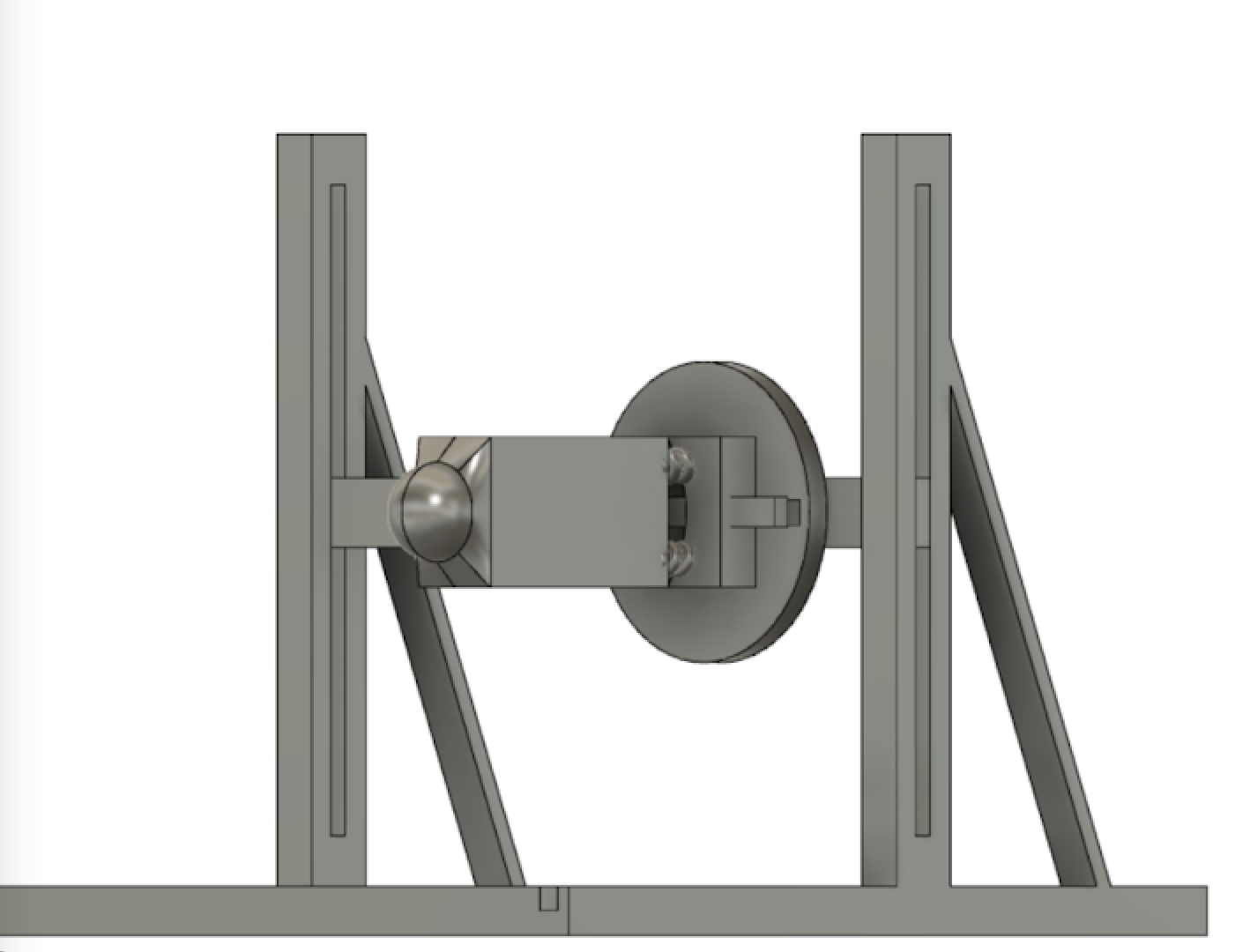

1. Finding the optimum end effector geometry

A. To find the optimum end effector geometry, we would first conduct literature research to source current popular mechanical designs suited to our purpose.

B. Then, to compare the selected mechanisms, we would develop need criteria and evaluation charts based on the proposed end goals. A preliminary need statement can be found below.

End Effector

- Turn knob within precision requirement

- Press button within precision requirement

- Press screen within precision requirement

- Leave enough viewing angle for camera

- Mechanically stable and driven by motor

Cartesian robot

- Move to given point on 2D plane within precision requirement

- Remain steady while end effector operates

- Operate time-efficiently
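An evaluation chart built from need criteria like these is often computed as a weighted decision matrix. The following is a minimal sketch of that idea. The weights, the 1 to 5 scoring scale, and the candidate names are hypothetical and are not taken from the project's evaluation chart.

```python
# Hypothetical weights for the end effector criteria (they sum to 1.0).
CRITERIA_WEIGHTS = {
    "knob_precision": 0.25,
    "button_precision": 0.20,
    "screen_precision": 0.20,
    "camera_view": 0.15,
    "stability": 0.20,
}


def weighted_score(scores, weights=CRITERIA_WEIGHTS):
    """Weighted average of per-criterion scores (assumed 1 to 5 scale)."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[c] * scores[c] for c in weights) / total_weight


def rank_designs(candidates):
    """Return candidate names ordered from best to worst weighted score."""
    return sorted(candidates, key=lambda n: weighted_score(candidates[n]), reverse=True)


# Illustrative candidates with made-up scores.
candidates = {
    "design_a": {c: 3 for c in CRITERIA_WEIGHTS},
    "design_b": {
        "knob_precision": 5,
        "button_precision": 4,
        "screen_precision": 4,
        "camera_view": 2,
        "stability": 3,
    },
}
ranking = rank_designs(candidates)
```

Making the weights explicit shows how much a weak camera view can be offset by better knob precision, which is useful when comparing mechanisms.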

With the selected mechanisms, we would build rapid prototypes and conduct preliminary manual tests to make sure that they pass the proof of concept.

2. Actuating end effector with motor

Since we need fast, accurate motions with small forces, an electric stepper motor or servo would be the best way to actuate our end effector. This portion of the proposal thus focuses on the manual assembly and testing of the motorized end effector. This includes sourcing parts and coming up with a plan to assemble a system that moves and actuates the end effector. The current plan is to use an Arduino with servos attached to breadboards, powered by AAA batteries. Detailed implementation guides are readily available online.
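Hobby servos are positioned by pulse width, so a knob-turning command eventually becomes a pulse length. The sketch below shows that mapping in Python for illustration only. The 544 to 2400 microsecond range over 180 degrees is an assumed default that a given servo may not match, and the step size is hypothetical.

```python
def angle_to_pulse_us(angle_deg, min_us=544, max_us=2400, max_angle_deg=180.0):
    """Map a servo angle to a pulse width in microseconds.

    Angles outside [0, max_angle_deg] are clamped. The pulse limits are
    assumed defaults and should be checked against the actual servo.
    """
    clamped = min(max(angle_deg, 0.0), max_angle_deg)
    return round(min_us + (max_us - min_us) * clamped / max_angle_deg)


def sweep(start_deg, end_deg, step_deg=5.0):
    """Intermediate angles from start to end so a knob is turned gradually."""
    if step_deg <= 0:
        raise ValueError("step_deg must be positive")
    direction = 1.0 if end_deg >= start_deg else -1.0
    angles = []
    current = start_deg
    # Advance in fixed steps until the remaining distance fits in one step.
    while (end_deg - current) * direction > step_deg:
        current += direction * step_deg
        angles.append(current)
    angles.append(end_deg)  # always finish exactly on the target
    return angles


pulses = [angle_to_pulse_us(a) for a in sweep(0.0, 12.0)]
```

Stepping through intermediate angles rather than jumping to the target is one simple way to limit the torque applied to a knob.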

3. Assemble and test with cartesian robot

To test the accuracy and efficacy of the end effector, we use a cartesian robot for all functionality and accuracy testing. The robot is given tasks formed from a combination of going to a specific point, interacting with the geometry at that point, and monitoring how much the whole mount shifted during operation, in order to assess the accuracy, efficacy, and repeatability of the robot. The cartesian robot will be built from T-slots and a stepper motor powered by a 12 V DC battery. The guide to such robots was generously provided by Dr. Krieger and can be found in our shared folder. Based on the results and analysis of the testing, we will choose whether to compensate for the error physically or digitally.
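The accuracy and repeatability assessment described above can be sketched numerically as follows. The drive parameters (200 steps per revolution, 16 microsteps, a 20-tooth pulley, 2 mm belt pitch) are assumed values for a typical belt drive and are not the project's measured hardware; the sample measurements are made up.

```python
import math
from statistics import mean, pstdev


def steps_per_mm(steps_per_rev=200, microsteps=16, pulley_teeth=20, belt_pitch_mm=2.0):
    """Motor steps needed to move the carriage 1 mm on a belt drive."""
    return steps_per_rev * microsteps / (pulley_teeth * belt_pitch_mm)


def mm_to_steps(distance_mm, spmm):
    """Nearest whole number of steps for a requested travel distance."""
    return round(distance_mm * spmm)


def position_errors(target, measured):
    """Euclidean distance of each measured (x, y) point from the target."""
    tx, ty = target
    return [math.hypot(mx - tx, my - ty) for mx, my in measured]


def summarize(errors):
    """Mean error (accuracy), spread (repeatability), and worst case, in mm."""
    return {"mean_mm": mean(errors), "std_mm": pstdev(errors), "max_mm": max(errors)}


spmm = steps_per_mm()
# Made-up repeated visits to the same target point, for illustration.
errors = position_errors((10.0, 10.0), [(10.3, 10.4), (10.0, 10.0)])
summary = summarize(errors)
```

A consistent nonzero mean error suggests an offset that can be compensated digitally, while a large spread points to a mechanical cause such as belt slack.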

Sample 2D Cartesian Belt Drive System


4. Incorporated live camera feedback to relay important information to the user and to monitor the end-effector interaction with the device.

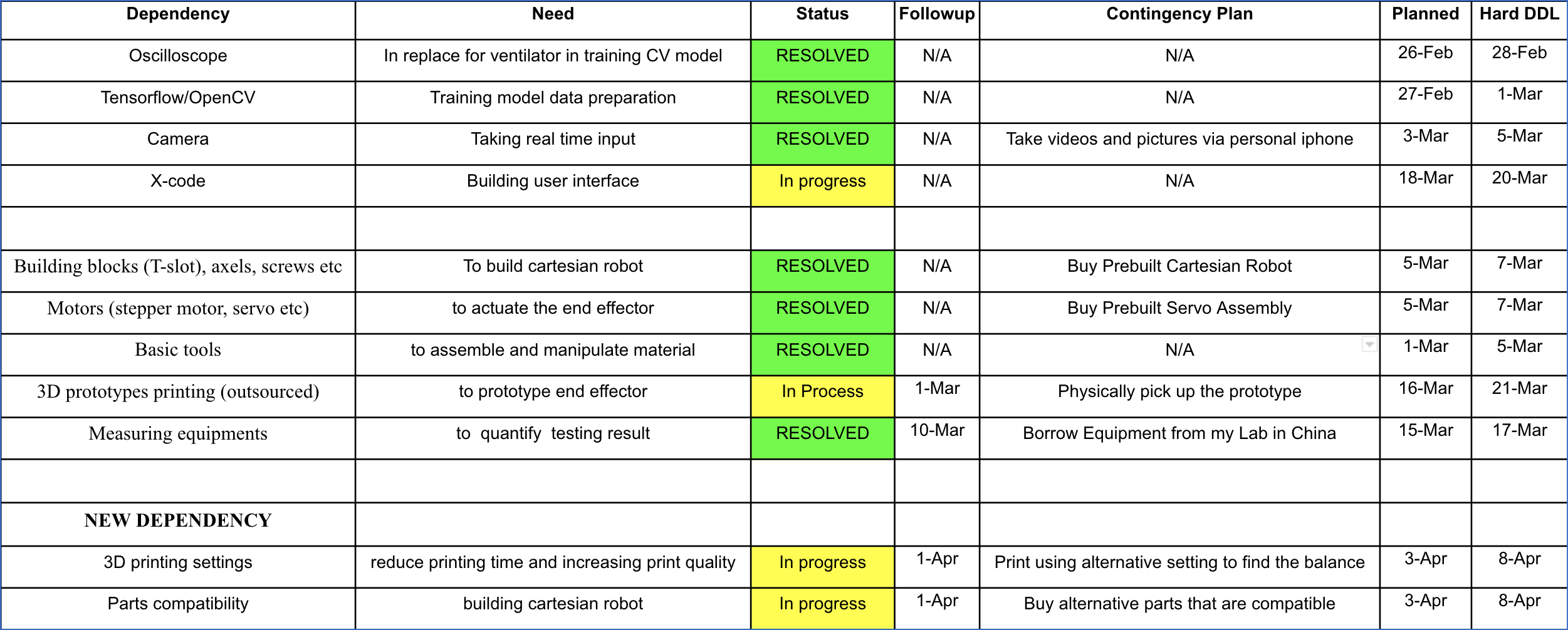

Dependencies

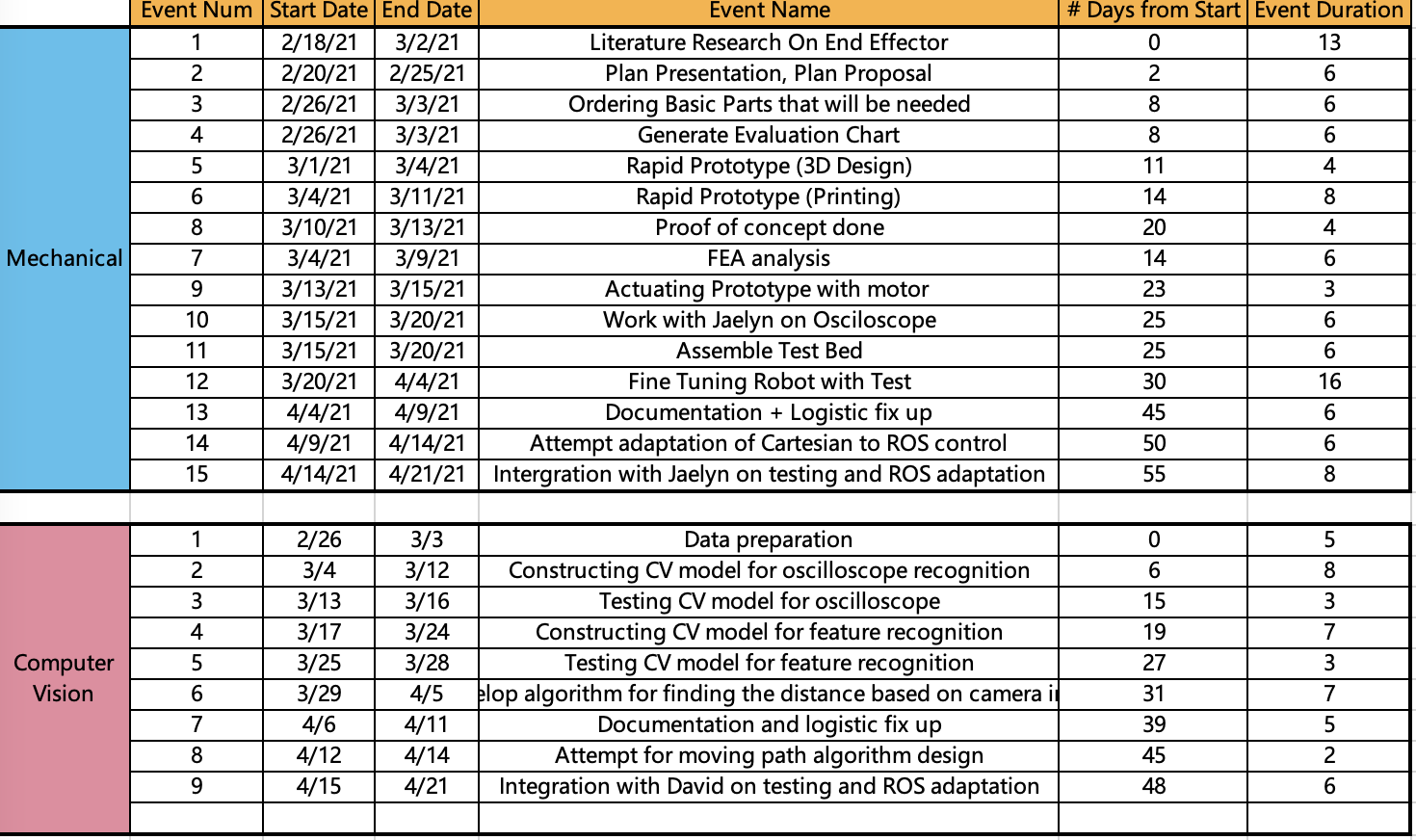

Milestones and Status

Deliverables

Responsibility:

- Mechanical: David

- Computer Vision: Jaelyn

Deliverables:

Minimum: (Expected date: March 20. Deadline: March 25)

- A user interface that simulates the panel of the oscilloscope; Done

- A functional prototype of an end effector that can interact with a knob, button and touch screen actuated using a motor. (David) Done

- Documentation for prototype end effector design and related evaluation chart. (David) Done

Expected: (Expected date: April 10. Deadline: April 15)

- A computer vision model being able to recognize oscilloscope under various environments; Done

- A functional prototype of an end effector that can interact with a knob, button and touch screen implemented on a cartesian robot. (David) Testing knob turning accuracy

- Documentation for prototype end effector and cartesian robot regarding assembly and set up. (David) 80%

Maximum: (Expected date: April 25. Deadline: April 29)

- An algorithm that is able to calculate the relative pose and position between the target machine and camera. Future work

- A functional prototype of an end effector that can perform different interactions and is well calibrated for error. (David) Future work

- Documentation for end effector implemented on cartesian robot along with related test data and error analysis. (David) Future work

Reports and presentations

- Project Plan

- Project Background Reading

- See Bibliography below for links.

- Project Checkpoint

- Paper Seminar Presentations

- Tianyu Original Paper: a_simple_active_damping_control_for_compliant_base_manipulators.pdf

- Jiayin Original Paper: jiayin_original_paper.pdf

- Tianyu Paper Review: cis_ii_paper_review.pdf

- Jiayin Paper Review: cis_2_paper_review.pdf

- Tianyu Paper Review Slide: cis_paper_review_ppt.pdf

- Jiayin Paper Presentation: cis2_paper_presentation.pdf

- Project Final Presentation

- Final Presentation Teaser 1 min Slide

- Final presentation slide

- Final Poster: cis_2_final_poster_v2.pdf

- Project Final Report

- Final Report: cis_2_final_project_report.pdf

- User Manual For 2D Cartesian robot: user_manual_for_2d_cartesian_belt_drive.pdf

Project Bibliography

- [1] S. M. Abate, S. A. Ali, B. Mantfardo, and B. Basu, “Rate of Intensive Care Unit admission and outcomes among patients with coronavirus: A systematic review and Meta-analysis,” PLOS ONE, vol. 15, no. 7, p. e0235653, Jul. 2020, doi: 10.1371/journal.pone.0235653.

- [2] M. Conlen, J. Keefe, L. Leatherby, and C. Smart, “How Full Are Hospital I.C.U.s Near You?,” The New York Times, Dec. 16, 2020.

- [3] N. A. Halpern and S. M. Pastores, “Critical Care Medicine Beds, Use, Occupancy, and Costs in the United States: A Methodological Review,” Crit. Care Med., vol. 43, no. 11, pp. 2452–2459, Nov. 2015, doi: 10.1097/ccm.0000000000001227.

- [4] “Robot arm grippers and EOAT components | OnRobot.” https://onrobot.com/en/products (accessed Feb. 25, 2021).

- [5] “Home - Zimmer Group.” https://www.zimmer-group.com/en/ (accessed Feb. 25, 2021).

- [6] N. Kumar et al., “Design, development and experimental assessment of a robotic end-effector for non-standard concrete applications,” in 2017 IEEE International Conference on Robotics and Automation (ICRA), May 2017, pp. 1707–1713, doi: 10.1109/ICRA.2017.7989201.

- [7] Wilhelm Burger and Mark J. Burge: Principles of Digital Image Processing (it is a 3-volume set, comprised of: Fundamental Techniques, Core Algorithms, & Advanced methods), Springer (2009).

- [8] Rafael C. Gonzalez and Richard E. Woods: Digital Image Processing, 4th edition, Pearson (2017).

- [9] G. Dougherty: Digital Image Processing for Medical Applications, 1st ed., Cambridge U. Press (2009).

- [10] R. Klette: Concise Computer Vision: An Introduction into Theory and Algorithms, Springer (2014).

- [11] R. Chitala and S. Pudipeddi: Image Processing and Acquisition using Python, CRC Press (2014).

- [12] R. Szeliski, Computer Vision: Algorithms and Applications, Springer (2011).

- [13] S.J.D. Prince, Computer Vision: Models, Learning, and Inference, Cambridge U. Press (2012).

- [14] B. P. Vagvolgyi et al., “Telerobotic Operation of Intensive Care Unit Ventilators,” ArXiv201005247 Cs, Oct. 2020, Accessed: Apr. 12, 2021. [Online]. Available: http://arxiv.org/abs/2010.05247.

Other Resources and Project Files


Object detection: object_recognition.pdf

Feature matching: feature_matching.pdf

Meeting notes: meeting_notes.pdf

Design journal: design_journel.pdf

Evaluation chart of end effector design: evaluation_chart.xlsx

Prototype of end effector: testv4_drawing_v1.pdf