
Robotic Ultrasound Assistance via Hand-Over-Hand Control

Last updated: May 9th, 2019

Summary

Ultrasonographers typically experience repetitive musculoskeletal microtrauma while performing their daily occupational tasks, which require holding an ultrasound (US) probe against a patient in contorted positions while simultaneously applying large forces to enhance image quality. This project aims to provide ultrasonographers with “robotic power-steering” for US: a hand-guidable, probe-wielding robot that the sonographer navigates to a point of interest and that then does the strenuous probe holding on their behalf.

While this challenge has been approached in previous work, the hand-over-hand control algorithms implemented there were reportedly sluggish and lacked motion transparency for the user. This work aims to improve these algorithms to create a smoother hand-over-hand control experience.

Precise and transparent hand-over-hand control is also an important first step toward augmented and semi-autonomous US procedures such as synthetic aperture imaging and co-robotic transmission US tomography.

- Students:

- Kevin Gilboy: MSE student, Department of Electrical and Computer Engineering, first-year

- Mentor(s):

- Dr. Emad Boctor: Assistant Professor, Departments of Radiology and Computer Science

- Dr. Mahya Shahbazi: Postdoctoral Fellow, LCSR

Background, Specific Aims, and Significance

Typically, ultrasound (US)-guided procedures require a sonographer to hold a US probe against a patient in static, contorted positions for long periods of time while also applying large forces [Schoenfeld, et al., 1999]. As a result, 63%-91% of sonographers develop occupation-related musculoskeletal disorders, compared to only about 13%-22% of the general population [Rousseau, et al., 2013].

The vision of this work is to provide sonographers with “power-steering” via a hand-guidable robot that they can maneuver to a point of interest and then release, leaving the robot to do all the strenuous holding on their behalf. While previous work performed at JHU has shown promising results using a MATLAB implementation and basic filtering [Finocchi 2016; Finocchi, et al., 2017; Fang, et al., 2017], past prototypes have lacked the power-steering transparency necessary for practical clinical use.

Therefore, the specific aim of this work is to improve upon the previous robotic ultrasound assistance prototypes via a C++ implementation and adaptive Kalman filtering, creating a more transparent power-steering, cooperative-control experience for sonographers. The result will be evaluated in a user study that quantitatively measures exerted effort during sonography and uses questionnaires to survey participant-perceived operator workload.
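The workload questionnaire is not named in this section. Assuming it is the NASA-TLX listed in the bibliography [Hart and Staveland, 1988], its standard scoring is simple enough to sketch: raw TLX averages six 0-100 subscale ratings, while weighted TLX weights each subscale by how many of the 15 pairwise comparisons the participant picked it in. The function and type names below are hypothetical.

```cpp
#include <array>
#include <cassert>

// Subscale order: mental demand, physical demand, temporal demand,
// performance, effort, frustration.
constexpr int kNumSubscales = 6;

using Ratings = std::array<double, kNumSubscales>;  // each rating on a 0-100 scale
using Weights = std::array<int, kNumSubscales>;     // tallies from 15 pairwise comparisons

// Raw TLX: unweighted mean of the six ratings.
double rawTlx(const Ratings& r) {
    double sum = 0.0;
    for (double x : r) sum += x;
    return sum / kNumSubscales;
}

// Weighted TLX: each subscale can win at most 5 of the 15 comparisons,
// and the tallies over all six subscales must sum to 15.
double weightedTlx(const Ratings& r, const Weights& w) {
    int total = 0;
    double sum = 0.0;
    for (int i = 0; i < kNumSubscales; ++i) {
        assert(w[i] >= 0 && w[i] <= 5);
        total += w[i];
        sum += w[i] * r[i];
    }
    assert(total == 15);
    return sum / 15.0;
}
```

Per-participant scores computed this way could then be compared between the power-steering and unassisted conditions.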

If successful and validated, this work will be an important step toward mitigating sonographers' susceptibility to work-related musculoskeletal disorders. It also has implications for all procedures under the umbrella of robotic ultrasound, as the control algorithms developed for this work will underlie and improve all applications built on top of them. Some examples include enforcing virtual fixtures for synthetic aperture procedures, imaging with respiratory gating, replicating a position/force profile for repeatable biopsy procedures, and conducting co-robotic ultrasound tomography scans.

Deliverables

- Minimum: (Starting by 2/1, Expected by 2/17)

- Zipped static F/T and UR5 reading datasets for multiple poses demonstrating proper equipment connectivity (2/5)

- .zip: multiple samples of rotation matrices and F/T readings for 32 static poses

- Documented code that calculates gravity compensation parameters offline (2/12)

- Available in ./GravityCompensation on private GitHub repo (contact for access)

- Video of in-air hand-over-hand control demonstrating admittance control and gravity compensation (2/17)

- Report of gravity compensation effectiveness (2/17)

- xlsx: results and graphs of uncompensated versus compensated F/T values using parameters calculated offline

- Documented code that performs in-air hand-over-hand control with gravity compensation (2/17)

- Documentation: .pdf. Code located in ./Code on private GitHub repo

- Expected: (Starting by 2/18, Expected by 5/3)

- Video of improved hand-over-hand control demonstrating successful Kalman filtering (4/4)

- Video of patient-probe force feedback (5/1)

- Unable to complete due to malfunctioning contact force sensor

- Documented code that performs Kalman-filtered hand-over-hand control and incorporates patient-probe force feedback (5/1)

- Documentation: .pdf. Code located in ./Code on private GitHub repo

- Report of V&V testing results (5/3)

- All testing results are outlined in the final report

- Maximum: (Starting by 4/15, Expected by 5/3)

- Video of virtual fixture functionality demonstrating successful implementation (5/3)

- Video hosted on Google Drive. In this video, a very basic virtual fixture is used to enforce probe contact even though the contact sensor is not working.

- Documented code that enforces virtual fixtures (5/3)

- Not completed due to limited implementation time. This will be addressed in future work as the project continues.
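The offline gravity compensation deliverable listed above (32 static poses, each with a rotation matrix and F/T readings) can be illustrated with a minimal least-squares sketch. This is a hypothetical example, not the code in ./GravityCompensation. It assumes each rotation matrix gives the F/T sensor's orientation in the robot base frame, that gravity points along the base frame's -z axis (sensor sign conventions vary), and it fits only the payload weight and the three force offsets; a center-of-mass and torque-offset fit would follow the same pattern. The names `StaticSample`, `GravityParams`, and `fitGravity` are illustrative.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

struct StaticSample {
    Mat3 R;  // sensor orientation in the robot base frame (assumed convention)
    Vec3 f;  // measured force in the sensor frame [N]
};

struct GravityParams {
    double weight;  // payload weight m*g [N]
    Vec3 bias;      // constant force offset of the sensor [N]
};

// Solves the 4x4 system M x = rhs by Gaussian elimination with partial pivoting.
static std::array<double, 4> solve4(std::array<std::array<double, 4>, 4> M,
                                    std::array<double, 4> rhs) {
    for (int c = 0; c < 4; ++c) {
        int piv = c;
        for (int r = c + 1; r < 4; ++r)
            if (std::fabs(M[r][c]) > std::fabs(M[piv][c])) piv = r;
        assert(std::fabs(M[piv][c]) > 1e-12 && "poses do not excite all parameters");
        std::swap(M[c], M[piv]);
        std::swap(rhs[c], rhs[piv]);
        for (int r = c + 1; r < 4; ++r) {
            double k = M[r][c] / M[c][c];
            for (int j = c; j < 4; ++j) M[r][j] -= k * M[c][j];
            rhs[r] -= k * rhs[c];
        }
    }
    std::array<double, 4> x{};
    for (int r = 3; r >= 0; --r) {
        double s = rhs[r];
        for (int j = r + 1; j < 4; ++j) s -= M[r][j] * x[j];
        x[r] = s / M[r][r];
    }
    return x;
}

// Least-squares fit of f_i = weight * u_i + bias over all poses, where
// u_i = R_i^T * [0, 0, -1] is the unit gravity direction in the sensor frame.
GravityParams fitGravity(const std::vector<StaticSample>& samples) {
    std::array<std::array<double, 4>, 4> AtA{};  // normal-equation matrix
    std::array<double, 4> Atf{};
    for (const auto& s : samples) {
        Vec3 u = {-s.R[2][0], -s.R[2][1], -s.R[2][2]};
        for (int j = 0; j < 3; ++j) {
            // Design-matrix row for force component j: [u_j, e_j^T].
            std::array<double, 4> a{u[j], 0.0, 0.0, 0.0};
            a[1 + j] = 1.0;
            for (int p = 0; p < 4; ++p) {
                Atf[p] += a[p] * s.f[j];
                for (int q = 0; q < 4; ++q) AtA[p][q] += a[p] * a[q];
            }
        }
    }
    std::array<double, 4> x = solve4(AtA, Atf);
    return {x[0], {x[1], x[2], x[3]}};
}
```

Online, the compensated force for the current pose would then be the measured force minus `weight * u + bias`. The poses must vary the gravity direction enough for the weight to be separable from the offsets, which the pivot check guards against.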

Technical Approach

This project will improve robot motion transparency through the following two approaches.

Control System Approach - Kalman Filtering

Previous work by Finocchi [Finocchi 2016; Finocchi, et al., 2017] and Fang [Fang, et al., 2017] used algorithms that primarily focused on filtering the received F/T signals to produce more stable velocities, namely through nonlinear F/T-velocity gains and the 1€ filter for smoothing hand-guided motion. While they achieved an adequate result, their work did not consider data sparsity and latency, both of which strongly affect the user experience in real-time robotics.
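For context, the 1€ filter used in the prior work is a first-order low-pass filter whose cutoff frequency rises with the signal's speed: it smooths heavily when the hand is nearly still and lags less when the hand moves quickly. The sketch below follows the standard 1€ formulation for a single F/T channel; the class name and parameter values are illustrative and not taken from the previous MATLAB implementation.

```cpp
#include <cassert>
#include <cmath>

// One-channel 1€ filter: a low-pass filter whose cutoff frequency grows
// with the (smoothed) rate of change of the signal.
class OneEuroFilter {
public:
    OneEuroFilter(double min_cutoff_hz, double beta, double d_cutoff_hz)
        : min_cutoff_(min_cutoff_hz), beta_(beta), d_cutoff_(d_cutoff_hz) {}

    // Filters sample x taken dt seconds after the previous sample.
    double filter(double x, double dt) {
        assert(dt > 0.0);
        if (!initialized_) {
            x_prev_ = x;
            dx_prev_ = 0.0;
            initialized_ = true;
            return x;
        }
        // Estimate and smooth the signal's rate of change.
        double dx = (x - x_prev_) / dt;
        double dx_hat = lowPass(dx, dx_prev_, alpha(d_cutoff_, dt));
        // Fast motion raises the cutoff (less lag); slow motion lowers it (more smoothing).
        double cutoff = min_cutoff_ + beta_ * std::fabs(dx_hat);
        double x_hat = lowPass(x, x_prev_, alpha(cutoff, dt));
        x_prev_ = x_hat;
        dx_prev_ = dx_hat;
        return x_hat;
    }

private:
    // Smoothing factor of an exponential low-pass filter with the given cutoff.
    static double alpha(double cutoff_hz, double dt) {
        const double kPi = 3.14159265358979323846;
        double tau = 1.0 / (2.0 * kPi * cutoff_hz);
        return 1.0 / (1.0 + tau / dt);
    }
    static double lowPass(double x, double prev, double a) {
        return a * x + (1.0 - a) * prev;
    }

    double min_cutoff_, beta_, d_cutoff_;
    bool initialized_ = false;
    double x_prev_ = 0.0;   // previous filtered value
    double dx_prev_ = 0.0;  // previous filtered derivative
};
```

Note that a filter like this only produces output when a new sample arrives, so by itself it does nothing about how sparsely those samples reach the controller.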

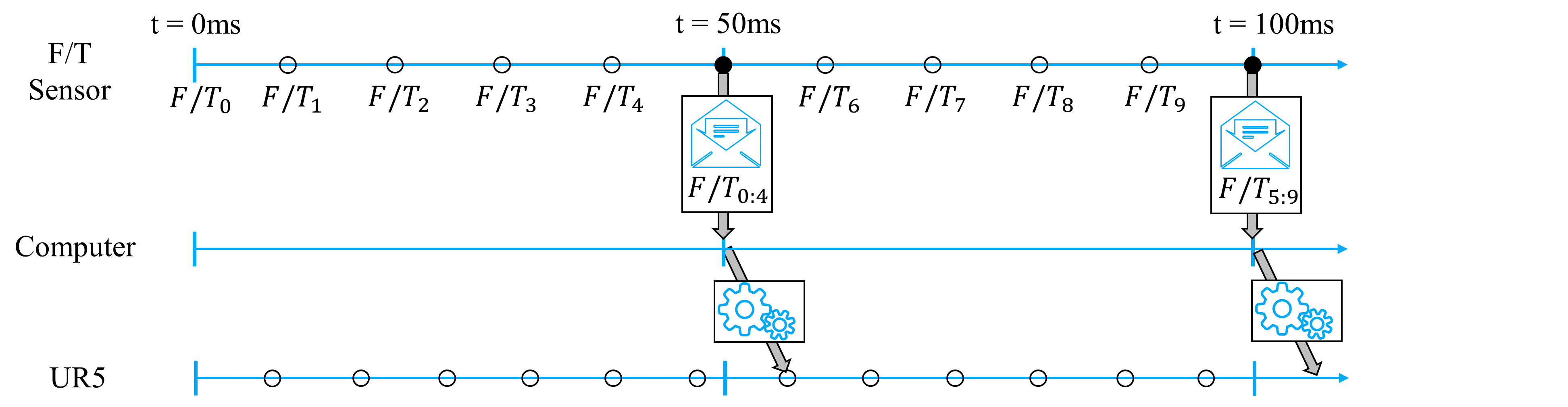

The data sparsity and latency issue arises primarily from the 6-DoF F/T sensor, which samples at 100 Hz but delivers its data in TCP packets that arrive at 20 Hz (i.e., a packet arrives every 50 ms containing the previous 5 samples of F/T data). A naive control approach could command a new robot velocity immediately upon receiving each F/T packet, but this would mean the UR5 is commanded at only 20 Hz, far below its maximum supported rate of 125 Hz. This is shown below.
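The rate arithmetic above can be made concrete with a small sketch. It assumes, for illustration only, that the newest sample in a packet was taken at the packet's arrival time (network latency ignored); `sampleTimesMs` is a hypothetical helper, not part of any sensor API.

```cpp
#include <cassert>
#include <vector>

// Rates quoted in the text.
constexpr int kSensorHz = 100;  // F/T sampling rate
constexpr int kPacketHz = 20;   // TCP packet arrival rate
constexpr int kRobotHz = 125;   // UR5 maximum command rate

static_assert(kSensorHz % kPacketHz == 0, "each packet should hold a whole number of samples");

constexpr int kSamplesPerPacket = kSensorHz / kPacketHz;  // 5 samples per packet
constexpr double kSamplePeriodMs = 1000.0 / kSensorHz;    // 10 ms between samples
constexpr double kPacketPeriodMs = 1000.0 / kPacketHz;    // 50 ms between packets
constexpr double kRobotPeriodMs = 1000.0 / kRobotHz;      // 8 ms between commands

// Fraction of 125 Hz control ticks that would get a fresh command if the
// robot were only commanded on packet arrival (the naive scheme): 20/125.
constexpr double kNaiveFreshFraction = static_cast<double>(kPacketHz) / kRobotHz;

// Hypothetical helper: timestamps (ms) of the samples inside one packet,
// oldest first, assuming the newest sample was taken when the packet arrived.
std::vector<double> sampleTimesMs(double arrival_ms) {
    assert(arrival_ms >= 0.0);
    std::vector<double> times(kSamplesPerPacket);
    for (int i = 0; i < kSamplesPerPacket; ++i) {
        times[i] = arrival_ms - (kSamplesPerPacket - 1 - i) * kSamplePeriodMs;
    }
    return times;
}
```

In other words, the naive scheme refreshes only 16% of the control ticks the UR5 could accept, and each packet carries samples spanning the preceding 40 ms.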

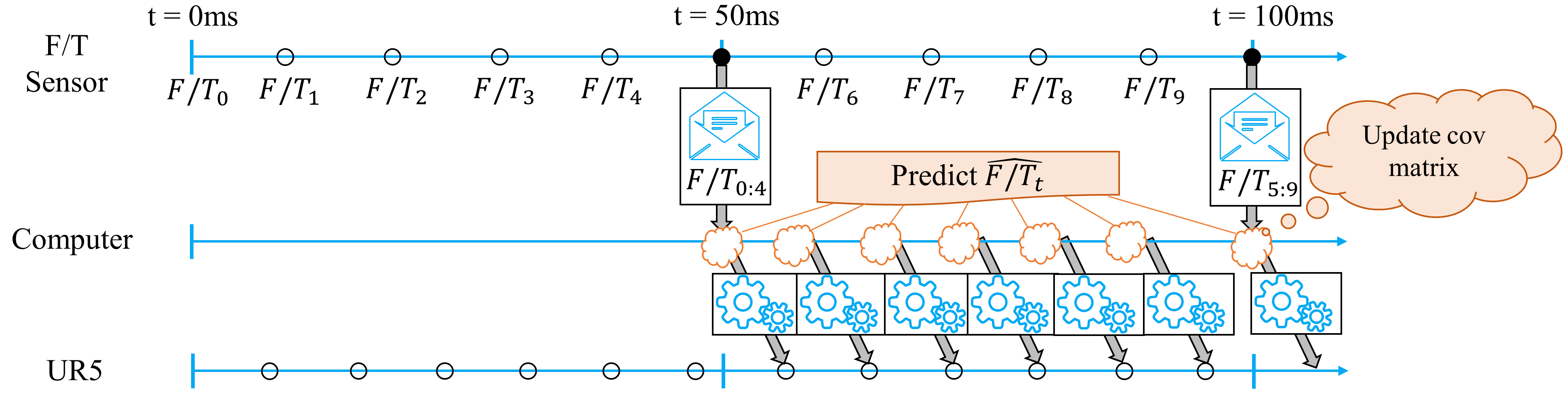

In this work, an adaptive Kalman filter will be used to infer F/T values between packets, allowing the robot to be commanded at its full 125 Hz rate. It is also suspected that the Kalman filter can be tuned to help alleviate the effect of TCP latency between when an F/T packet is sent and when the robot is commanded in response. The filter will be made “adaptive” by automatically updating its covariance matrices whenever a new F/T packet arrives, based on how well its predictions matched the measured F/T values, so that future predictions improve. This is shown below.
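A minimal sketch of such a filter for one F/T axis follows. It is a hypothetical design, not necessarily the filter used in this project: it assumes a constant-rate model (state = force and its rate of change), folds in the packet's samples in time order, extrapolates the estimate to each 8 ms control tick between packets, and adapts only the measurement variance R using innovation-based covariance matching (the process noise Q could be adapted the same way). The class name and all parameters are illustrative.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical adaptive Kalman filter for ONE F/T axis (run six in parallel).
// State: force f and its rate fd, with a constant-rate model driven by white noise.
class AdaptiveForceKF {
public:
    // q: process-noise intensity, r0: initial measurement variance,
    // r_min: floor on the adapted variance, forget: smoothing factor in (0, 1).
    AdaptiveForceKF(double q, double r0, double r_min, double forget)
        : q_(q), r_(r0), r_min_(r_min), forget_(forget) {}

    // Folds in one F/T sample z taken at time t [s]; samples must arrive in time order.
    void ingest(double t, double z) {
        if (!initialized_) {
            f_ = z;
            t_ = t;
            initialized_ = true;
            return;
        }
        double dt = t - t_;
        assert(dt >= 0.0);
        predict(dt);
        t_ = t;

        double y = z - f_;          // innovation
        double p00_prior = P_[0][0];
        double s = p00_prior + r_;  // predicted innovation variance
        double k0 = P_[0][0] / s;   // Kalman gain for H = [1 0]
        double k1 = P_[1][0] / s;
        f_ += k0 * y;
        fd_ += k1 * y;

        // P <- (I - K H) P
        double p00 = (1.0 - k0) * P_[0][0], p01 = (1.0 - k0) * P_[0][1];
        double p10 = P_[1][0] - k1 * P_[0][0], p11 = P_[1][1] - k1 * P_[0][1];
        P_[0][0] = p00; P_[0][1] = p01; P_[1][0] = p10; P_[1][1] = p11;

        // Adaptive step (covariance matching): E[y^2] = P00_prior + R, so
        // y^2 - P00_prior is a one-sample estimate of R; blend it in slowly.
        double r_sample = std::max(y * y - p00_prior, r_min_);
        r_ = forget_ * r_ + (1.0 - forget_) * r_sample;
    }

    // Force estimate at control time t (e.g. every 8 ms), linearly extrapolated
    // from the last ingested sample without changing the filter state.
    double estimateAt(double t) const { return f_ + fd_ * (t - t_); }

    double measurementVariance() const { return r_; }

private:
    // x <- F x and P <- F P F^T + Q, with F = [[1, dt], [0, 1]].
    void predict(double dt) {
        f_ += fd_ * dt;
        double p00 = P_[0][0] + dt * (P_[0][1] + P_[1][0]) + dt * dt * P_[1][1];
        double p01 = P_[0][1] + dt * P_[1][1];
        double p10 = P_[1][0] + dt * P_[1][1];
        P_[0][0] = p00 + q_ * dt * dt * dt / 3.0;
        P_[0][1] = p01 + q_ * dt * dt / 2.0;
        P_[1][0] = p10 + q_ * dt * dt / 2.0;
        P_[1][1] += q_ * dt;
    }

    double q_, r_, r_min_, forget_;
    bool initialized_ = false;
    double f_ = 0.0, fd_ = 0.0, t_ = 0.0;
    double P_[2][2] = {{1.0, 0.0}, {0.0, 1.0}};
};
```

Separating `ingest` (called with the five timestamped samples when a packet arrives) from `estimateAt` (called every control tick) is one simple way to decouple the 20 Hz packet rate from the 125 Hz command rate; the extrapolation term is also where a latency offset could be absorbed.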

Programmatic Approach - C++ Implementation

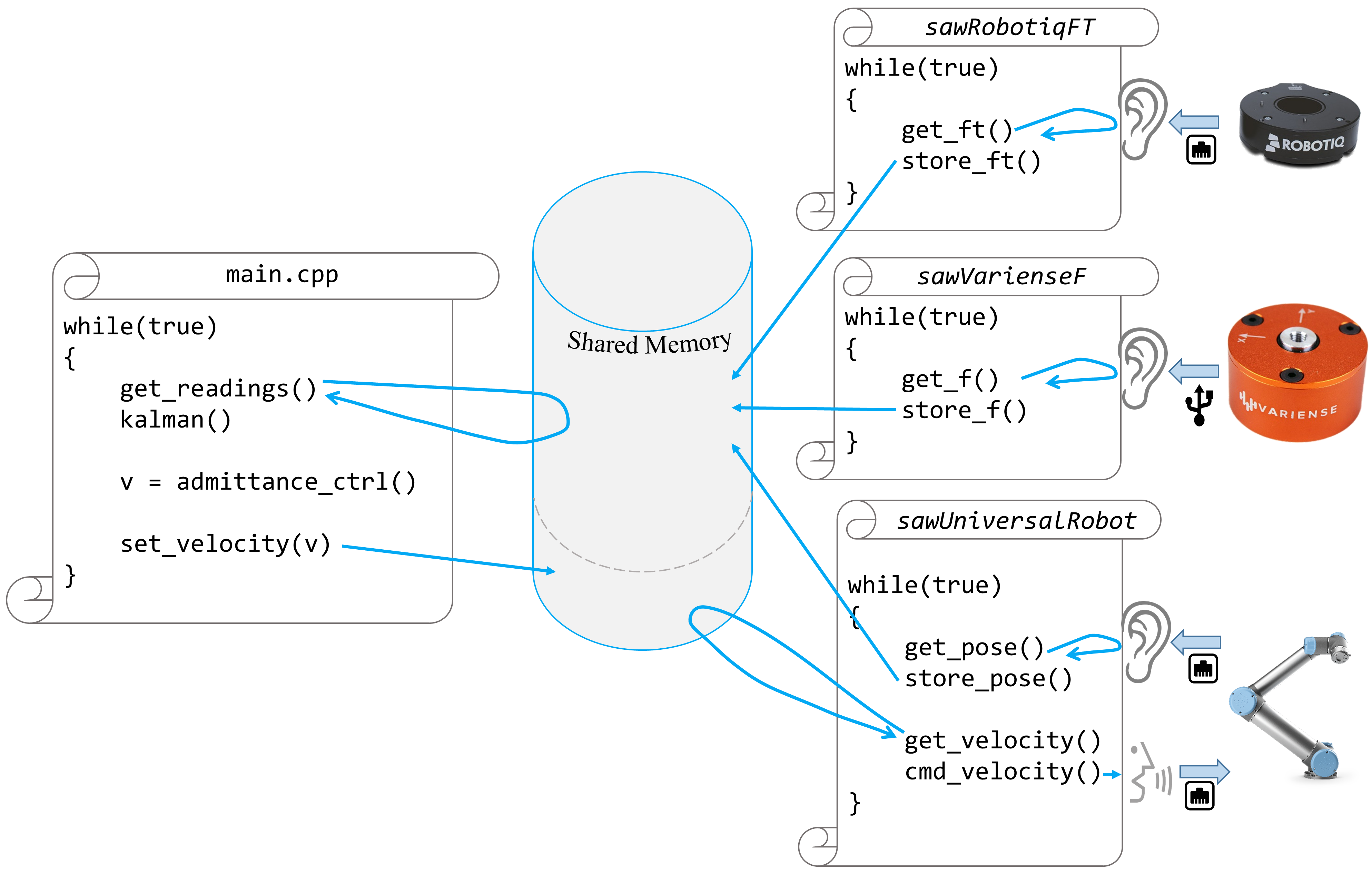

Previous work by Finocchi [Finocchi 2016; Finocchi, et al., 2017] and Fang [Fang, et al., 2017] used MATLAB programming and client-side software running on the UR5 to relay F/T values to the computer. Although this achieved an adequate result, using an interpreted language such as MATLAB and running unnecessary client-side code introduces latency and overhead, which is detrimental to the user experience of any real-time system. In this work, C++ will be used in combination with the open-source CISST/SAW libraries to read data from and command the UR5 without any client-side code. A simplified diagram is shown below.

As shown, there will be three SAW components listening for data from the robot and the F/T sensors and storing it in objects accessible by main.cpp. The main program, in addition to performing component initialization, will essentially be an infinite loop of fetching readings, filtering them, and commanding a velocity to the UR5 SAW component. Notably, the CISST/SAW libraries have native support for accessing shared data in a way that prevents race conditions. This is very useful because the program relies on asynchronous, multitask execution and would otherwise be prone to data corruption.
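The loop structure described above can be sketched as follows. This is a hypothetical illustration, not the project's main.cpp: CISST/SAW's own thread-safe interfaces are not reproduced, and a `std::mutex`-protected `SharedReading` stands in for them. The velocity mapping is a simple linear admittance law with a deadband; the type names, gains, and deadbands are illustrative placeholders, and filtering and gravity compensation are assumed to happen upstream.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <mutex>

// Hypothetical stand-in for thread-safe shared data: a listener thread writes
// the newest reading, and the control loop reads a copy.
template <typename T>
class SharedReading {
public:
    void set(const T& value) {
        std::lock_guard<std::mutex> lock(mutex_);
        value_ = value;
        has_value_ = true;
    }
    // Copies the newest reading into out; returns false if none has arrived yet.
    bool get(T& out) const {
        std::lock_guard<std::mutex> lock(mutex_);
        if (!has_value_) return false;
        out = value_;
        return true;
    }

private:
    mutable std::mutex mutex_;
    T value_{};
    bool has_value_ = false;
};

using Wrench = std::array<double, 6>;  // Fx, Fy, Fz [N], Tx, Ty, Tz [N m]
using Twist = std::array<double, 6>;   // vx, vy, vz [m/s], wx, wy, wz [rad/s]

// Illustrative admittance parameters (placeholders, not tuned project values).
struct AdmittanceParams {
    double force_gain;       // (m/s) per N
    double torque_gain;      // (rad/s) per N m
    double force_deadband;   // N
    double torque_deadband;  // N m
};

// Body of one control-loop iteration: read the newest (already filtered and
// gravity-compensated) wrench and map it to a velocity command.
Twist admittanceStep(const SharedReading<Wrench>& wrench_in, const AdmittanceParams& p) {
    assert(p.force_deadband >= 0.0 && p.torque_deadband >= 0.0);
    Twist v{};  // zero velocity (hold still) until data arrives
    Wrench w{};
    if (!wrench_in.get(w)) return v;
    for (int i = 0; i < 6; ++i) {
        bool is_force = i < 3;
        double gain = is_force ? p.force_gain : p.torque_gain;
        double band = is_force ? p.force_deadband : p.torque_deadband;
        double mag = std::fabs(w[i]);
        // Readings inside the deadband are treated as noise so the probe does not drift.
        if (mag > band) v[i] = gain * std::copysign(mag - band, w[i]);
    }
    return v;
}
```

In a loop like the one described, the listener components would call `set` from their own threads, while the main loop would call `admittanceStep` once per 8 ms cycle and forward the resulting twist to the UR5 component.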

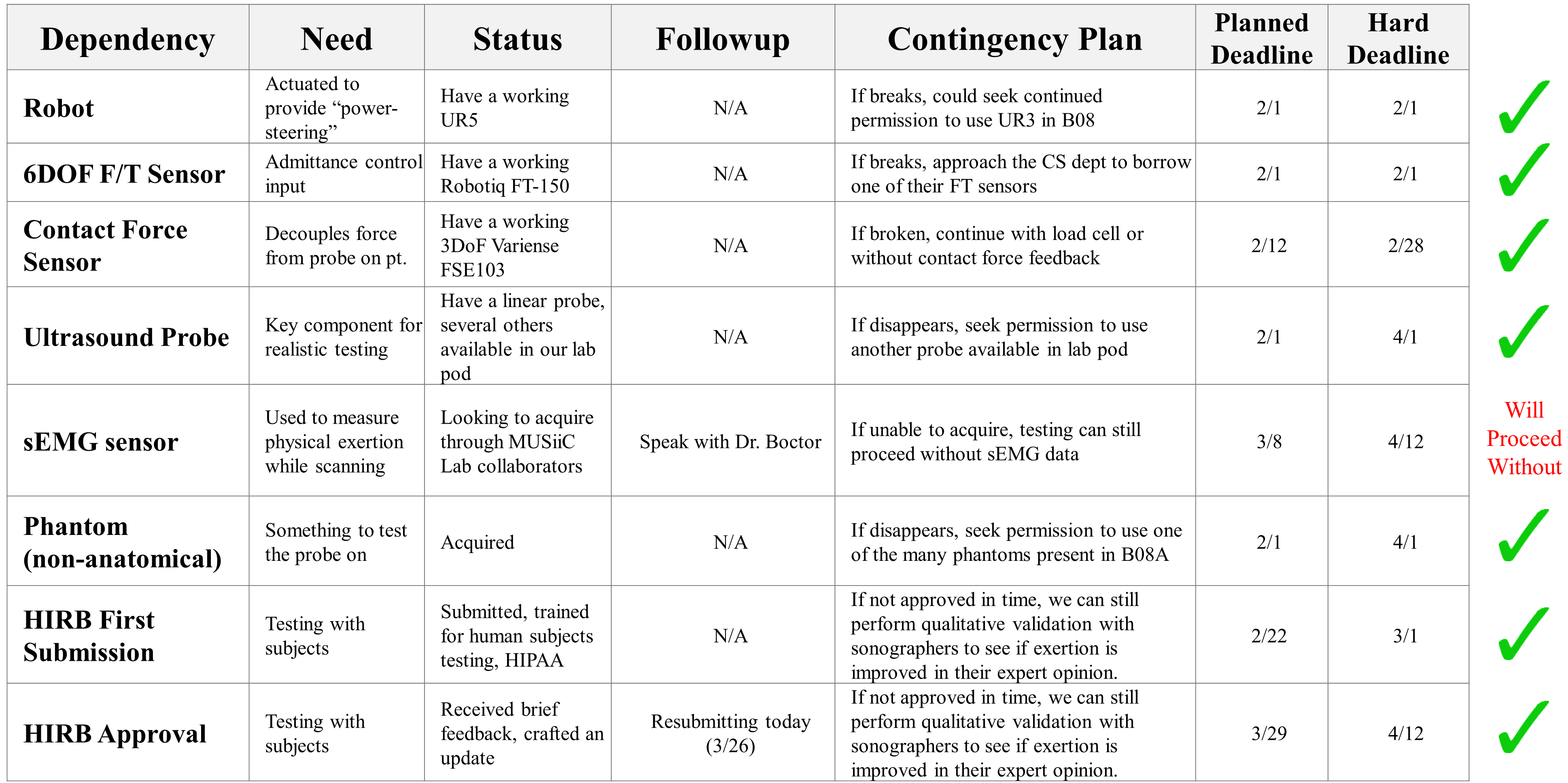

Dependencies

Milestones and Status

- Milestone name: Interfacing of components (UR5, Robotiq, computer)

- Start Date: 2/1

- Planned Date: 2/4

- Expected Date: 2/4

- Status: Completed

- Milestone name: Admittance control (rudimentary)

- Start Date: 2/6

- Planned Date: 2/10

- Expected Date: 2/10

- Status: Completed

- Milestone name: Gravity compensation

- Start Date: 2/11

- Planned Date: 2/16

- Expected Date: 2/16

- Status: Completed

- Milestone name: Kalman Filtering

- Start Date: 2/18

- Planned Date: 4/4

- Expected Date: 4/4

- Status: Completed

- Milestone name: Incorporate 3DoF contact force sensor for probe force compensation

- Start Date: 3/11

- Planned Date: 3/27

- Expected Date: 4/30

- Status: Unable to proceed. Although interfacing was performed, the force sensor readings had unfixable issues (a nonlinear, stochastic relationship among the three measured forces).

- Milestone name: User Study

- Start Date: 4/6

- Planned Date: 4/26

- Expected Date: 5/3

- Status: HIRB application accepted; user study postponed due to the malfunctioning probe contact force sensor

- Milestone name: Virtual Fixtures

- Start Date: 4/15

- Planned Date: 5/3

- Expected Date: 5/3

- Status: Started at a basic level as a workaround (“hack”) to encourage probe contact force for a demo in the absence of a working contact force sensor. More advanced functionality should be implemented moving forward.

Reports and presentations

- Project Plan

- Project Background Reading

- See Bibliography below for links.

- Project Checkpoint

- Paper Seminar Presentations

- Project Final Poster

- Project Final Teaser

- Project Final Report

- Code documentation of relevant files, functions, and variables

- HIRB Application:

Project Bibliography

- A. Schoenfeld, J. Goverman, D. Weiss and I. Meizner, “Transducer user syndrome: an occupational hazard of the ultrasonographer”, European Journal of Ultrasound, vol. 10, no. 1, pp. 41-45, 1999. Available: 10.1016/s0929-8266(99)00031-2.

- T. Rousseau, N. Mottet, G. Mace, C. Franceschini and P. Sagot, “Practice Guidelines for Prevention of Musculoskeletal Disorders in Obstetric Sonography”, Journal of Ultrasound in Medicine, vol. 32, no. 1, pp. 157-164, 2013. Available: 10.7863/jum.2013.32.1.157.

- R. Finocchi, “Co-robotic ultrasound imaging: a cooperative force control approach”, The Johns Hopkins University, 2016.

- R. Finocchi, F. Aalamifar, T. Fang, R. Taylor and E. Boctor, “Co-robotic ultrasound imaging: a cooperative force control approach”, Medical Imaging 2017: Image-Guided Procedures, Robotic Interventions, and Modeling, 2017. Available: 10.1117/12.2255271.

- H. K. Zhang, R. Finocchi, K. Apkarian and E. M. Boctor, “Co-robotic synthetic tracked aperture ultrasound imaging with cross-correlation based dynamic error compensation and virtual fixture control”, 2016 IEEE International Ultrasonics Symposium (IUS), Tours, 2016, pp. 1-4. Available: 10.1109/ULTSYM.2016.7728522.

- T. Fang, H. Zhang, R. Finocchi, R. Taylor and E. Boctor, “Force-assisted ultrasound imaging system through dual force sensing and admittance robot control”, International Journal of Computer Assisted Radiology and Surgery, vol. 12, no. 6, pp. 983-991, 2017. Available: 10.1007/s11548-017-1566-9.

- S. Murphey and A. Milkowski, “Surface EMG Evaluation of Sonographer Scanning Postures”, Journal of Diagnostic Medical Sonography, vol. 22, no. 5, pp. 298-305, 2006. Available: 10.1177/8756479306292683.

- S. Hart and L. Staveland, “Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research”, Advances in Psychology, pp. 139-183, 1988. Available: 10.1016/s0166-4115(08)62386-9.

Other Resources and Project Files

All resources have been uploaded and linked individually above (for instance, instead of linking an entire shared Google Drive of videos, I have shared and linked each video individually where necessary).

A GitHub repository exists for this project but is being kept private until all IP considerations have been resolved toward the end of the project. Please contact kevingilboy@jhu.edu for access.