
Vision Guided Mosquito Dissection for the Production of Malaria Vaccine

Last updated: 5/6/2021

Confidential: patent applications pending

Summary

The goal of this project is to create vision algorithms that will aid in the development and operation of a robotic mosquito dissection system for the production of a malaria vaccine.

- Students: Nicholas Greene

- Mentor(s): Balazs Vagvolgyi, Alan Lai, Parth Vora

Background, Specific Aims, and Significance

Background:

Malaria is a major global human health issue. In 2019, there were 229 million cases of malaria, with over four hundred thousand deaths [7]. Current prevention measures such as mosquito nets and insecticides are effective, but their effectiveness appears to have plateaued.

Sanaria has developed the first effective and practical malaria vaccine, which has the potential to eradicate malaria in the long term. However, deployment of the vaccine has been hindered by a severe production bottleneck: producing the vaccine requires extracting the salivary glands from malaria-infected mosquitoes.

A robotic mosquito dissection system is in development here at JHU to automate extraction of the salivary glands.

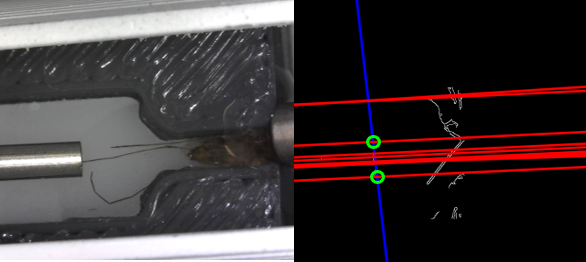

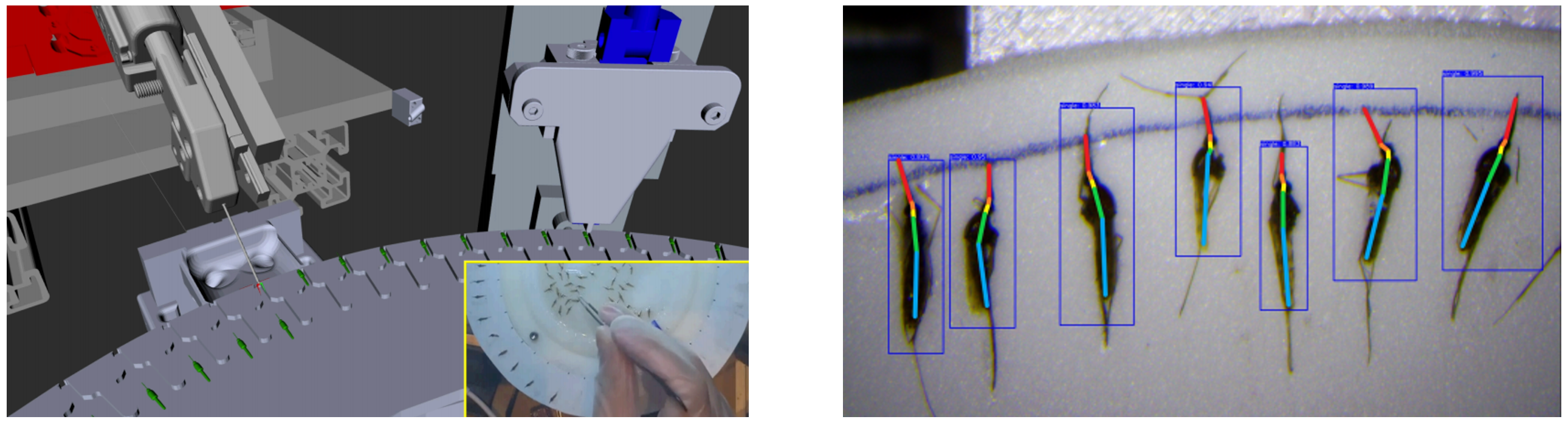

Figure: Prior work, including the mechanical design of the system (left) and a vision algorithm (right)

Specific Aims:

The aim of this project is to develop the vision algorithms needed to advance the development of the robotic mosquito dissection system. I will implement six vision algorithms in total (including the maximum deliverables): specifically, both an image processing based algorithm and a deep learning based algorithm for each of the following three tasks.

Tasks

- Determine whether a turntable slot cleaning attempt was successful

- Determine whether the gripper was successfully cleaned after a cleaning attempt

- Estimate the volume of mosquito exudate extracted after each dissection attempt

Significance:

This project furthers the development of an efficient robotic system for mosquito dissection. The vision algorithms will help improve the system's efficiency and monitor its performance, and they will also help reduce operating costs and waste. More broadly, this project brings Sanaria one step closer to mass-producing a malaria vaccine.

Deliverables

Note that the deliverables are identical for all three tasks, except that there is one additional deliverable for the exudate volume estimation task. Checked items (☑) have been completed; unchecked items (□) were not reached.

- Minimum - Turntable Cleaning Station: (Delayed due to late image collection)

  - Machine Learning Approach

    - ☑ Dataset of about 100 hand-annotated images

    - ☑ PyTorch-based Python code for training a neural network for the task

    - ☑ PyTorch-based Python function for quickly evaluating the network's prediction on an image

    - ☑ Python ROS service wrapper for integrating the neural network prediction with ROS

    - ☑ Wiki documentation detailing the network architecture, training procedure, and ROS integration in Python

  - Image Processing Approach

    - ☑ OpenCV-based C++ code of an image processing algorithm for the task

    - ☑ C++ ROS service wrapper for integrating the algorithm with ROS (in progress)

    - ☑ Wiki documentation detailing the image processing algorithm and the ROS integration in C++

- Expected - Gripper Cleaning: (Expected by 4/14; pushed back from the original date)

  - Machine Learning Approach

    - ☑ Dataset of about 300 hand-annotated images (annotations in progress)

    - ☑ PyTorch-based Python code for training a neural network for the task

    - ☑ PyTorch-based Python function for quickly evaluating the network's prediction on an image

    - ☑ Python ROS service wrapper for integrating the neural network prediction with ROS

    - ☑ Wiki documentation detailing the network architecture, training procedure, and ROS integration in Python

  - Image Processing Approach

    - □ OpenCV-based C++ code of an image processing algorithm for the task

    - □ C++ ROS service wrapper for integrating the algorithm with ROS

    - □ Wiki documentation detailing the image processing algorithm and the ROS integration in C++

- Maximum - Exudate Volume Estimation: (Expected by 5/1)

  - Machine Learning Approach

    - ☑ Plan for collecting exudate volume ground truth data

    - □ Dataset of about 300 hand-annotated images

    - □ PyTorch-based Python code for training a neural network for the task

    - □ PyTorch-based Python function for quickly evaluating the network's prediction on an image

    - □ Python ROS service wrapper for integrating the neural network prediction with ROS

    - □ Wiki documentation detailing the network architecture, training procedure, and ROS integration in Python

  - Image Processing Approach

    - ☑ OpenCV-based C++ code of an image processing algorithm for the task

    - ☑ C++ ROS service wrapper for integrating the algorithm with ROS

    - ☑ Wiki documentation detailing the image processing algorithm and the ROS integration in C++

Technical Approach

This section outlines the technical approach. An important consideration is that each cleaner classification task receives an image from a single monocular RGB camera, while the regression task receives two RGB images from cameras positioned to provide approximately orthogonal views.

Image Processing Approach

Cleaner Classification Tasks

A combination of image filtering and thresholding will be used to determine whether a mosquito body or other debris is present in the image. This approach is expected to be reliable because the lighting is consistent and the camera position is fixed in the robot setup.

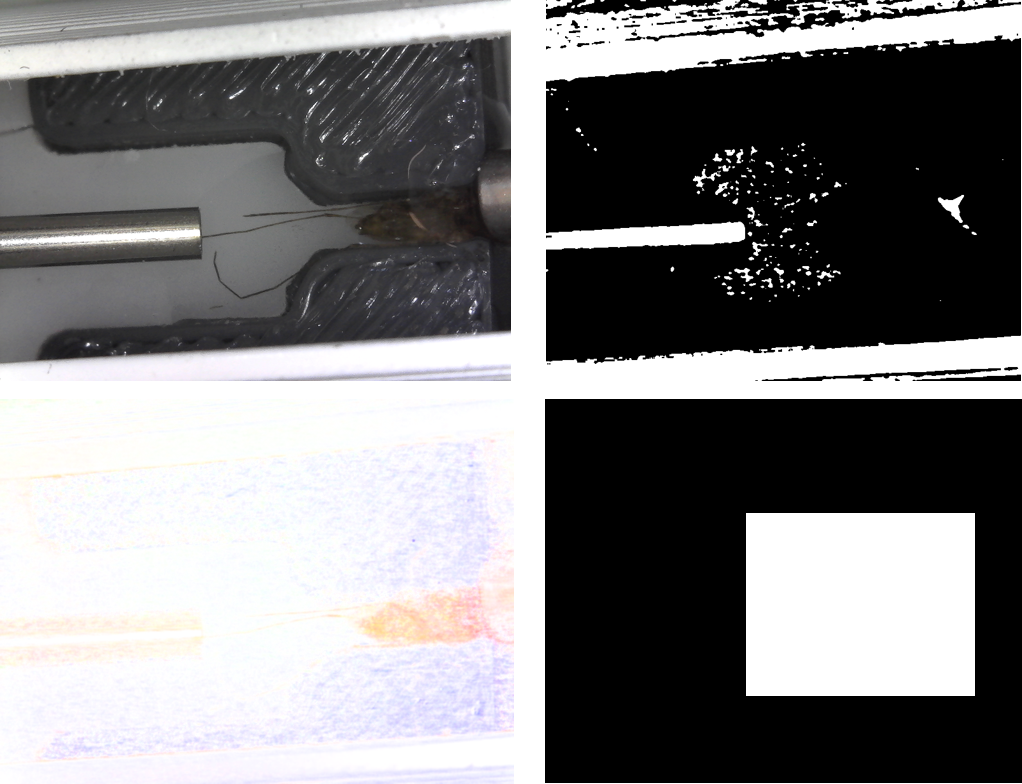

Segmentation Results

The image processing method is 98% accurate on the dataset.

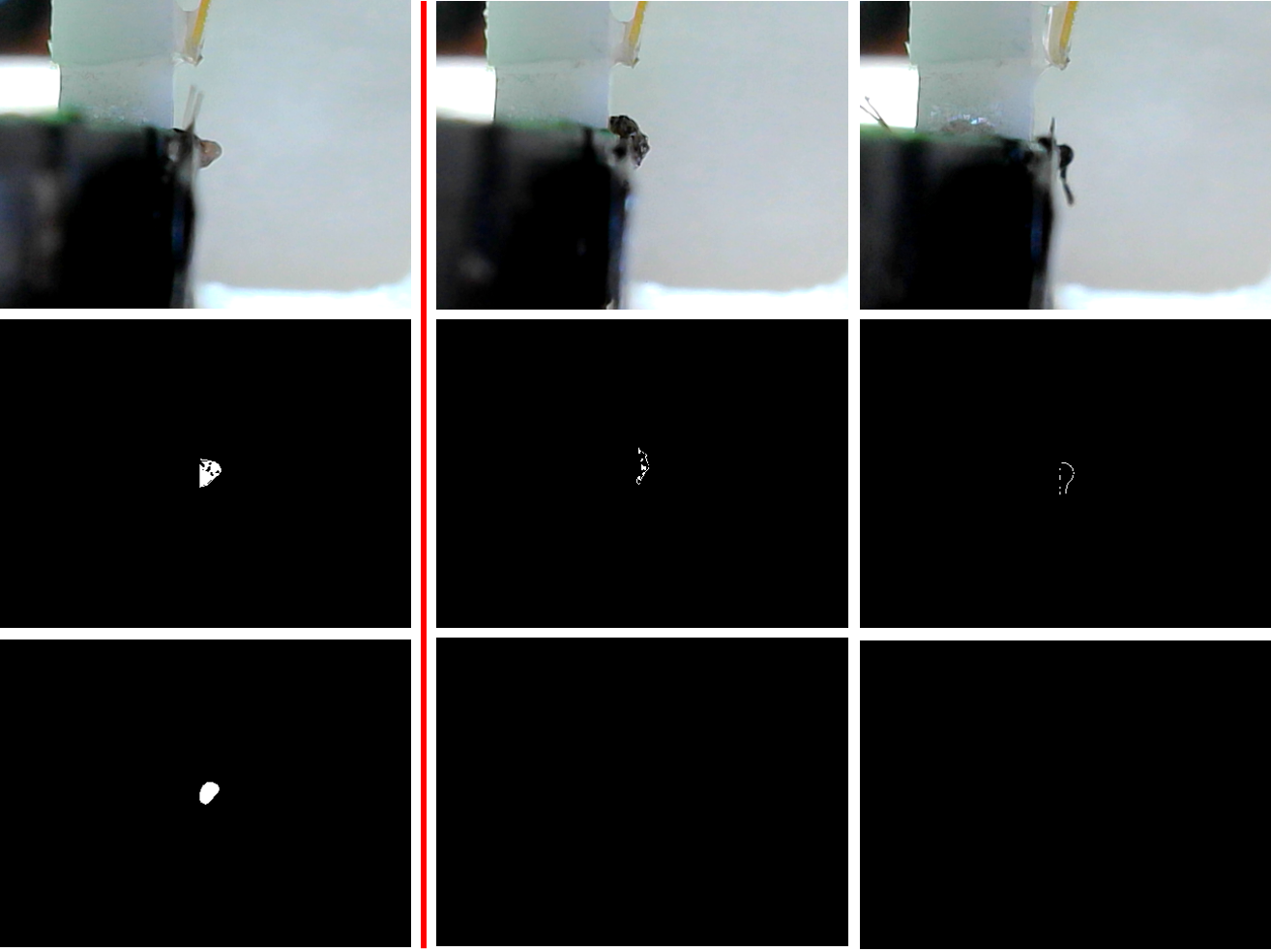

Figure: Segmentation of mosquitoes using image processing

The process uses multiple thresholding and filtering steps.
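The filtering-and-thresholding idea behind the cleaner classification tasks can be illustrated with a minimal pure-Python sketch. It assumes a grayscale image stored as a list of rows, assumes debris appears darker than the clean background under the station's fixed lighting, and uses a mean (box) filter; the function names, the filter choice, and the `dark_thresh` and `min_pixels` values are hypothetical placeholders, not the project's tuned OpenCV pipeline. The smoothing step is what keeps a single noisy pixel from being reported as debris.

```python
from typing import List

Image = List[List[int]]  # grayscale rows, values 0..255


def box_filter(img: Image, radius: int = 1) -> Image:
    """Mean filter over a (2*radius+1)^2 window; at the border only in-bounds pixels are averaged."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = count = 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total // count
    return out


def is_dirty(img: Image, dark_thresh: int = 100, min_pixels: int = 3) -> bool:
    """Hypothetical debris test: smooth, then count pixels darker than dark_thresh.

    Assumes debris is darker than the clean background; both thresholds are placeholders.
    """
    smoothed = box_filter(img)
    dark = sum(1 for row in smoothed for v in row if v < dark_thresh)
    return dark >= min_pixels


if __name__ == "__main__":
    clean = [[200] * 8 for _ in range(8)]
    speck = [row[:] for row in clean]
    speck[4][4] = 0  # one noisy pixel: smoothed away by the box filter
    print(is_dirty(clean), is_dirty(speck))  # False False
```

In practice the same structure maps onto OpenCV calls (a blur followed by a threshold and a pixel count), with thresholds tuned on the annotated dataset.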

Exudate Volume Regression Task: (Not Started Yet) Filtering and thresholding will be used to segment the blob of exudate in each of the two images. The volume will then be estimated with two different methods. The first will perform an elliptical Hough transform in each view and then use the approximately orthogonal geometry of the views to estimate an ellipsoid that approximates the blob. The second will count the number of exudate pixels in each view and then scale that value appropriately.
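Once each view has a fitted ellipse, the first volume method reduces to a short formula. The sketch below assumes that the first camera sees the blob in an x-z plane and the second in a y-z plane, that the fitted ellipses are axis-aligned, and that a pixel-to-millimeter scale `mm_per_px` is known from calibration; these are assumptions, not details from the plan. The semi-axes could come from an elliptical Hough transform (scikit-image, for example, provides `skimage.transform.hough_ellipse`). The semi-axis along the shared vertical axis is averaged across the two views, and the volume is V = 4/3 · π · a · b · c.

```python
import math
from typing import Tuple


def ellipsoid_volume_from_views(
    view_a: Tuple[float, float],
    view_b: Tuple[float, float],
    mm_per_px: float = 1.0,
) -> float:
    """Approximate blob volume from two roughly orthogonal ellipse fits (hypothetical helper).

    view_a: (semi-axis along x, semi-axis along z) in pixels from the first camera.
    view_b: (semi-axis along y, semi-axis along z) in pixels from the second camera.
    Assumes axis-aligned ellipses and a known, shared pixel scale (mm_per_px).
    """
    ax, az_a = view_a
    by, az_b = view_b
    a = ax * mm_per_px
    b = by * mm_per_px
    c = 0.5 * (az_a + az_b) * mm_per_px  # z is seen by both cameras, so average it
    return 4.0 / 3.0 * math.pi * a * b * c  # mm^3 when mm_per_px is in mm


if __name__ == "__main__":
    # A sphere of radius 10 px seen by both cameras at 0.1 mm/px has radius 1 mm.
    print(round(ellipsoid_volume_from_views((10, 10), (10, 10), 0.1), 4))  # 4.1888
```

Averaging the shared axis is one simple way to reconcile the two views; since the views are only approximately orthogonal, a real implementation might instead weight the views or correct for the angle between the cameras.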

Registration (Not Used): Some work was done on a registration method to accurately align the camera to the cleaning station, which would help if the camera's position shifted slightly during operation. The most successful method detected the lines around the water pipe in order to find its corners. In the end, it was decided that relying on the mechanical design would be more robust against camera movement. An image showing this corner detection can be seen below.

Figure: Detection of pipe corners
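Finding a pipe corner from two detected edge lines amounts to intersecting them. The sketch below assumes the lines are given in Hesse normal form (rho, theta), which is what a Hough line detector such as OpenCV's `cv2.HoughLines` returns; the page does not say which line detector was actually used, so this is an illustration rather than the project's registration code, and the function name is hypothetical. Each line satisfies x·cos(theta) + y·sin(theta) = rho, so a corner is the solution of a 2x2 linear system, solved here with Cramer's rule.

```python
import math
from typing import Optional, Tuple


def intersect_hough_lines(
    line1: Tuple[float, float],
    line2: Tuple[float, float],
    eps: float = 1e-9,
) -> Optional[Tuple[float, float]]:
    """Intersect two lines given as (rho, theta), i.e. x*cos(theta) + y*sin(theta) = rho.

    Returns (x, y) in pixel coordinates, or None if the lines are (nearly) parallel.
    """
    rho1, theta1 = line1
    rho2, theta2 = line2
    c1, s1 = math.cos(theta1), math.sin(theta1)
    c2, s2 = math.cos(theta2), math.sin(theta2)
    det = c1 * s2 - s1 * c2  # equals sin(theta2 - theta1)
    if abs(det) < eps:
        return None
    # Cramer's rule on [[c1, s1], [c2, s2]] @ [x, y] = [rho1, rho2]
    x = (rho1 * s2 - s1 * rho2) / det
    y = (c1 * rho2 - rho1 * c2) / det
    return x, y


if __name__ == "__main__":
    vertical = (120.0, 0.0)           # the line x = 120
    horizontal = (45.0, math.pi / 2)  # the line y = 45
    x, y = intersect_hough_lines(vertical, horizontal)
    print(round(x, 6), round(y, 6))  # 120.0 45.0
```

Rejecting nearly parallel pairs matters here, because the detector typically returns several lines along each pipe edge and only roughly perpendicular pairs define a corner.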

Exudate Volume Estimation Tasks

Classification of successful exudate extraction: To classify whether exudate was successfully extracted, a color-based threshold is applied first, followed by a median filter with a very large kernel. If the fraction of remaining pixels exceeds a threshold, the extraction is classified as a success. As the figure below shows, this method is robust against falsely classifying mosquito body parts as exudate. Each column shows the sequential operations applied to one image, and only the leftmost column should be classified as a success. The second row shows the results of the color thresholding, and the third row shows the results after the median filter. Only the leftmost image has any pixels remaining, so it is the only one classified as a success.

Figure: Classification processing steps for the squeezer. The leftmost column is a successful squeeze and the right two columns are failed squeezes; the classifier responds appropriately.

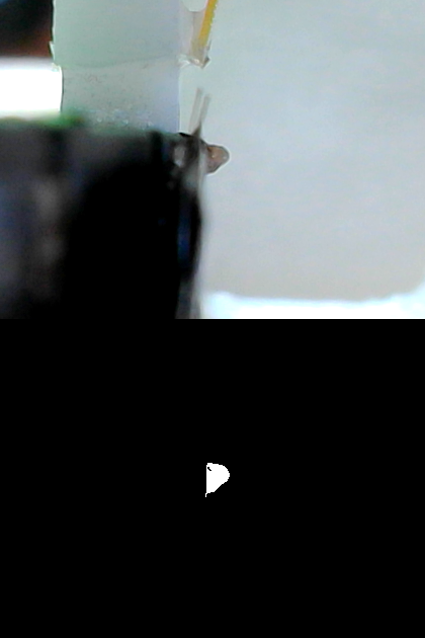
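The success test described above (color threshold, large median filter, then a remaining-pixel fraction) can be sketched in pure Python. The RGB bounds, the 7x7 kernel, the 5% fraction, and the function names below are hypothetical placeholders rather than the project's tuned values. On a binary mask, the median over a window is simply a majority vote, which is why thin or scattered matches, such as a leg that happens to fall in the color range, are erased while a compact blob of exudate survives.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]
Mask = List[List[int]]


def color_mask(img: List[List[RGB]], lo: Sequence[int], hi: Sequence[int]) -> Mask:
    """1 where every channel lies inside [lo[c], hi[c]], else 0."""
    return [
        [int(all(l <= v <= h for v, l, h in zip(px, lo, hi))) for px in row]
        for row in img
    ]


def median_filter_binary(mask: Mask, ksize: int) -> Mask:
    """Median over a ksize x ksize window; for a binary mask this is a majority vote.

    Out-of-bounds neighbours are ignored, so the window shrinks at the border.
    """
    r = ksize // 2
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ones = n = 0
            for yy in range(max(0, y - r), min(h, y + r + 1)):
                for xx in range(max(0, x - r), min(w, x + r + 1)):
                    ones += mask[yy][xx]
                    n += 1
            out[y][x] = int(2 * ones > n)
    return out


def squeeze_succeeded(img, lo, hi, ksize: int = 7, min_fraction: float = 0.05) -> bool:
    """Hypothetical success test: color threshold, large median filter, pixel fraction."""
    mask = median_filter_binary(color_mask(img, lo, hi), ksize)
    remaining = sum(map(sum, mask))
    return remaining / (len(mask) * len(mask[0])) >= min_fraction


if __name__ == "__main__":
    LO, HI = (150, 150, 100), (255, 255, 200)  # placeholder "exudate" color range
    BG, EX = (50, 50, 50), (200, 200, 150)
    blob = [[BG] * 20 for _ in range(20)]
    for y in range(6, 14):
        for x in range(6, 14):
            blob[y][x] = EX  # compact 8x8 blob survives the filter
    streak = [[BG] * 20 for _ in range(20)]
    streak[10] = [EX] * 20  # 1-pixel-wide line (e.g. a leg) is erased
    print(squeeze_succeeded(blob, LO, HI), squeeze_succeeded(streak, LO, HI))  # True False
```

With OpenCV, the equivalent steps would be a color range check, a median blur with a large odd kernel, and a nonzero-pixel count.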

If the squeeze is classified as successful, a less aggressive threshold is applied to segment the exudate, and a small median filter fills any small gaps. The volume is assumed to be proportional to the area of the segmentation.

Figure: Segmentation of successful squeeze.
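Because the volume is assumed to be proportional to the segmented area, V ≈ k · A, the only unknown is the constant k. One way to estimate it, assuming ground-truth volumes become available through the planned data collection, is a least-squares fit through the origin, k = sum(A_i · V_i) / sum(A_i^2). The function names, the calibration data, and the nL unit below are hypothetical, and the sketch is not the project's calibration procedure.

```python
from typing import Sequence


def fit_area_to_volume(areas: Sequence[float], volumes: Sequence[float]) -> float:
    """Least-squares k for V = k * A (line through the origin): k = sum(A*V) / sum(A^2)."""
    if len(areas) != len(volumes) or not areas:
        raise ValueError("need equally sized, non-empty area and volume lists")
    num = sum(a * v for a, v in zip(areas, volumes))
    den = sum(a * a for a in areas)
    return num / den


def estimate_volume(area_px: float, k: float) -> float:
    """Volume estimate for a new segmentation, in the units of the ground-truth volumes."""
    return k * area_px


if __name__ == "__main__":
    # Hypothetical calibration pairs: segmented area (px) and measured volume (nL).
    k = fit_area_to_volume([100, 200, 300], [1.0, 2.0, 3.0])
    print(round(estimate_volume(250, k), 6))  # 2.5
```

Forcing the fit through the origin encodes the proportionality assumption directly; if the residuals on real data show a consistent offset, an affine fit would be the natural next step.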

Machine Learning Approach

Considering the small amount of ground truth data (only 300 images) that will be available, transfer learning is the most feasible approach to creating machine learning models.

Turntable Cleaning Classification

Initial thoughts: Multiple starting models and pretrained weights will be tried for transfer learning. I plan to start with pre-trained ResNet [3] and/or YOLO [2] networks. All but the last few layers will be frozen during training.

Updated Approach: Upon further reading on transfer learning, I decided to try a simpler, shallower network because of the extremely small size of the dataset. Using transfer learning with VGG16 [9] and ImageNet-pretrained weights [8], with only the fully connected layers at the end fine-tuned, the network achieved 100% accuracy on the test split and 98.6% over the entire dataset, including the training, validation, and test splits. The false positive rate was 0.6% and the false negative rate was 0.6% over the whole dataset. VGG16 transfer learning achieved about 10% higher accuracy than transfer learning with ResNet50 [3].

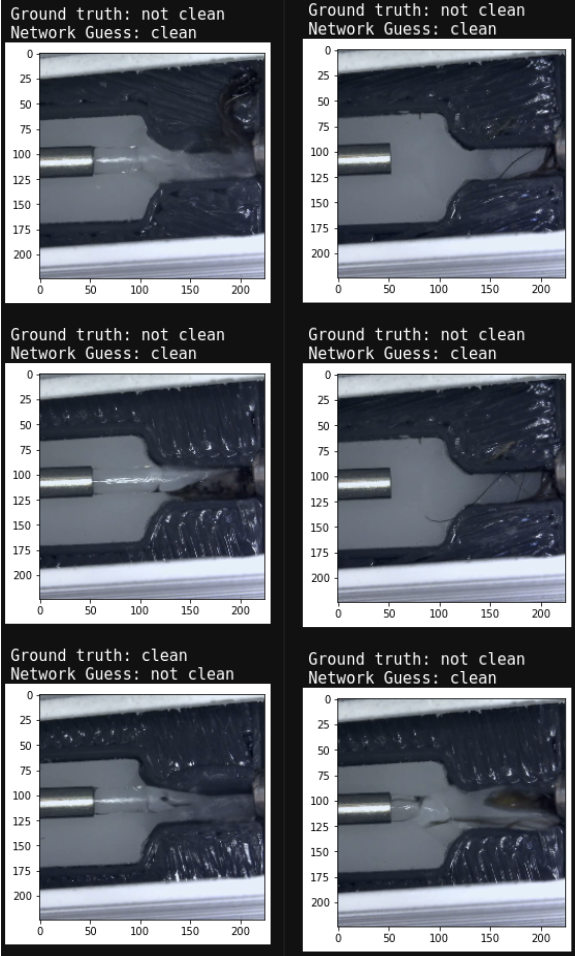

Figure: 6 of the 9 failures of the network. The water jet appears to cause problems.
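Accuracy and error rates like the ones reported above can be computed from a confusion matrix. The page does not state which normalization it used for its false positive and false negative rates; the sketch below assumes the common per-class definitions, FPR = FP / (FP + TN) and FNR = FN / (FN + TP), and treats "dirty" as the positive class. Both choices are assumptions, and the function name is hypothetical.

```python
from typing import Dict, Sequence


def classification_metrics(predicted: Sequence[bool], actual: Sequence[bool]) -> Dict[str, float]:
    """Accuracy, FPR and FNR with 'dirty' = True as the positive class (assumed).

    FPR = FP / (FP + TN), FNR = FN / (FN + TP); a rate is 0.0 if its denominator is zero.
    """
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("need equally sized, non-empty prediction and label lists")
    tp = tn = fp = fn = 0
    for p, y in zip(predicted, actual):
        if p and y:
            tp += 1
        elif p:
            fp += 1
        elif y:
            fn += 1
        else:
            tn += 1
    return {
        "accuracy": (tp + tn) / len(actual),
        "fpr": fp / (fp + tn) if fp + tn else 0.0,
        "fnr": fn / (fn + tp) if fn + tp else 0.0,
    }


if __name__ == "__main__":
    m = classification_metrics([True, True, False, False, True], [True, False, False, True, True])
    print(m)  # {'accuracy': 0.6, 'fpr': 0.5, 'fnr': 0.3333333333333333}
```

If the rates are instead normalized by the total number of images, the two error rates sum to 1 minus the accuracy; stating which definition is used avoids ambiguity when comparing the turntable and gripper results.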

Gripper Cleaning Classification

In a very similar manner to the previous method, I trained a transfer learning model to detect whether the gripper had mosquito debris on it. Again, VGG16 gave the best results, reaching 98.3% classification accuracy with a 0.6% false positive rate and a 1.05% false negative rate. Below is an example of the gripper images.

Figure: Dirty gripper on the left, clean gripper on the right

System Integration

In the current layout of the system, there are two computers: the main robot computer interfaces with the robot's actuators and cameras, while a remote vision computer with a GPU performs the vision tasks. Integration was completed for all of the algorithms by wrapping each image processing and machine learning function in a ROS service, which allows the main robot computer to call the remote vision functions as needed.

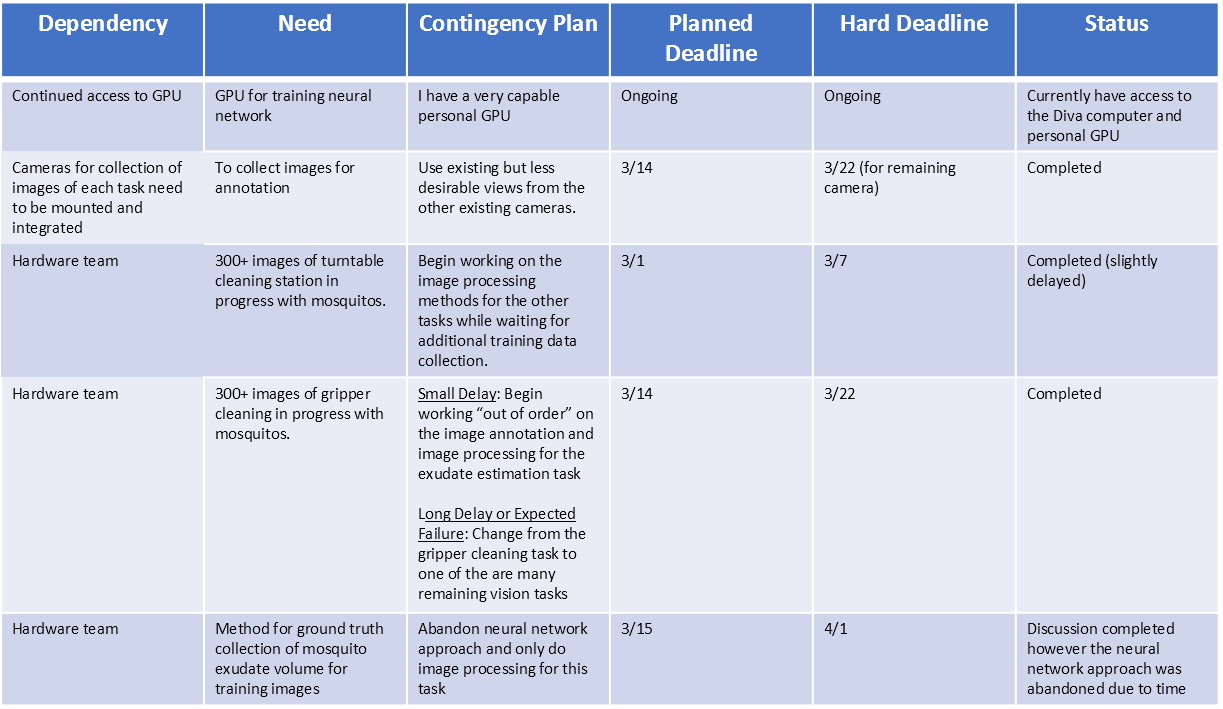

Dependencies


Milestones and Status

- Milestone name: Turntable Cleaning Station (All Deliverables)

- Planned Date: 3/22

- Expected Date: 4/5

- Status: Completed

- Milestone name: Gripper Cleaning (All Deliverables)

- Planned Date: 4/7

- Expected Date: 4/14

- Status: Completed (Deep learning approach only)

- Milestone name: Exudate Volume Estimation (All Deliverables)

- Planned Date: 5/1

- Expected Date: 5/1

- Status: Completed (Image Processing approach only)

Reports and presentations

- Project Plan

- Project Background Reading

- See Bibliography below for links.

- Paper Seminar Presentations

- Project Checkpoint

- Project Final Presentation

- Project Final Report


Project Bibliography

[1] Badrinarayanan, V., A. Kendall, and R. Cipolla (2017). SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence 39(12), 2481–2495.

[2] Bochkovskiy, A., C.-Y. Wang, and H.-Y. M. Liao (2020). YOLOv4: Optimal speed and accuracy of object detection. arXiv preprint arXiv:2004.10934.

[3] He, K., X. Zhang, S. Ren, and J. Sun (2016). Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778.

[4] Jongo, S. A., S. A. Shekalaghe, L. P. Church, A. J. Ruben, T. Schindler, I. Zenklusen, T. Rutishauser, J. Rothen, A. Tumbo, C. Mkindi, et al. (2018). Safety, immunogenicity, and protective efficacy against controlled human malaria infection of Plasmodium falciparum sporozoite vaccine in Tanzanian adults. The American Journal of Tropical Medicine and Hygiene 99(2), 338–349.

[5] Li, W., Z. He, P. Vora, Y. Wang, B. Vagvolgyi, S. Leonard, A. Goodridge, I. Iordachita, S. L. Hoffman, S. Chakravarty, and R. H. Taylor (2021). Automated mosquito salivary gland extractor for PfSPZ-based malaria vaccine production. Preprint.

[6] US Centers for Disease Control and Prevention (2021). "Essential Services for Malaria". [Online]. Available: https://www.cdc.gov/coronavirus/2019-ncov/global-covid-19/maintain-essential-services-malaria.html.

[7] World Health Organization (2020). "World Malaria Report 2020". [Online]. Available: https://www.who.int/publications/i/item/9789240015791.

[8] Russakovsky, O., J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, A. C. Berg, and L. Fei-Fei (2015). ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision (IJCV) 115(3), 211–252.

[9] Simonyan, K. and A. Zisserman (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

Reading List

- Belagiannis, Vasileios, Christian Rupprecht, Gustavo Carneiro, and Nassir Navab. "Robust optimization for deep regression." In Proceedings of the IEEE International Conference on Computer Vision, pp. 2830-2838. 2015.

- Chandrarathne, Gayani, Kokul Thanikasalam, and Amalka Pinidiyaarachchi. "A comprehensive study on deep image classification with small datasets." In Advances in Electronics Engineering, pp. 93-106. Springer, Singapore, 2020.

- H. Phalen, P. Vagdargi, M. Schrum, S. Chakravarty, A. Canezin, M. Pozin, S. Coemert, I. Iordachita, S. Hoffman, G. Chirikjian, and R. Taylor, "A mosquito pick-and-place system for PfSPZ-based malaria vaccine production," IEEE Transactions on Automation Science and Engineering, 2020, ISSN: 1545-5955, doi: 10.1109/TASE.2020.2992131.

- Hurtik, Petr, Vojtech Molek, Jan Hula, Marek Vajgl, Pavel Vlasanek, and Tomas Nejezchleba. "Poly-YOLO: higher speed, more precise detection and instance segmentation for YOLOv3." arXiv preprint arXiv:2005.13243 (2020).

- Lo, Frank Po Wen, Yingnan Sun, Jianing Qiu, and Benny Lo. "Image-Based Food Classification and Volume Estimation for Dietary Assessment: A Review." IEEE Journal of Biomedical and Health Informatics 24, no. 7 (2020): 1926-1939.

- S. Lathuilière, P. Mesejo, X. Alameda-Pineda and R. Horaud, "A Comprehensive Analysis of Deep Regression," in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 9, pp. 2065-2081, 1 Sept. 2020, doi: 10.1109/TPAMI.2019.2910523.

Other Resources and Project Files


Code Repositories: These repositories are private; only those with permission can view the contents.

Documentation: View the wiki inside of each repository to see the corresponding documentation.

- sanaria_cv_algorithms_lib - Image processing algorithm implementations

- sanaria_cv_service - System integration of image processing algorithms

- sanaria_dl_nick - Deep Learning algorithm implementations

- sanaria_dl_service - System integration of deep learning algorithms