Contact Us

CiiS Lab

Johns Hopkins University

112 Hackerman Hall

3400 N. Charles Street

Baltimore, MD 21218

Directions

Lab Director

Russell Taylor

127 Hackerman Hall

rht@jhu.edu

Surgical navigation is widely used in clinical practice to help surgeons handle their tools accurately and dexterously. There are two kinds of surgical navigation methods: marker-based and vision-based. The marker-based method is the traditional one. In sinus surgery, for example, markers are attached to the endoscope and to the patient's head, and the endoscope pose is obtained by combining external tracking information with a registration to the CT scan. The drawback of marker-based navigation is that this registration is indirect, which leads to errors of over 2 mm. In sinus surgery, however, many tissue structures are smaller than 1 mm. The traditional marker-based navigation system may therefore not be accurate enough for surgeons to avoid critical structures such as arteries, the eyes, the brain, and nerves.
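To make the "indirect registration" argument concrete, the sketch below chains the rigid transforms that a marker-based system composes to place the endoscope tip in CT coordinates. The frame names, the poses, the 200 mm marker-to-tip lever arm, and the 0.5° rotation error are hypothetical values chosen for illustration, not measurements from any real tracker. The point is only that a small angular error in one link of the chain is amplified by the lever arm into a tip error on the order of millimetres.

```python
import numpy as np

def rot_z(deg):
    """4x4 homogeneous rotation about the z axis by `deg` degrees."""
    t = np.radians(deg)
    T = np.eye(4)
    T[:2, :2] = [[np.cos(t), -np.sin(t)], [np.sin(t), np.cos(t)]]
    return T

def translate(x, y, z):
    """4x4 homogeneous translation (millimetres)."""
    T = np.eye(4)
    T[:3, 3] = [x, y, z]
    return T

def tip_in_ct(T_ct_head, T_trk_head, T_trk_scope, tip_in_scope):
    """Chain CT <- head marker <- tracker <- scope marker <- tip.

    Convention (assumed): T_a_b maps coordinates in frame b into frame a.
    """
    T_ct_scope = T_ct_head @ np.linalg.inv(T_trk_head) @ T_trk_scope
    return (T_ct_scope @ np.append(tip_in_scope, 1.0))[:3]

# Hypothetical poses, not real data.
T_ct_head = translate(10.0, 0.0, 0.0)     # CT registration of head marker
T_trk_head = translate(0.0, 500.0, 0.0)   # tracker sees head marker
T_trk_scope = translate(50.0, 450.0, 0.0) # tracker sees scope marker
tip = np.array([200.0, 0.0, 0.0])         # tip 200 mm from scope marker

ideal = tip_in_ct(T_ct_head, T_trk_head, T_trk_scope, tip)
# Perturb only the tracked scope-marker orientation by 0.5 degrees.
noisy = tip_in_ct(T_ct_head, T_trk_head, T_trk_scope @ rot_z(0.5), tip)
error_mm = np.linalg.norm(noisy - ideal)
print(f"tip error: {error_mm:.2f} mm")  # about 1.75 mm
```

Every additional link in the chain (CT registration, tracking of the head marker, tracking of the scope marker, tip calibration) contributes its own error in the same way, which is why a direct, image-based registration is attractive.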

Vision-based methods were developed to achieve higher accuracy in surgical navigation by using the video information directly to recover the pose of the endoscope relative to the patient's anatomy. A common intermediate step in this procedure is SLAM (Simultaneous Localization and Mapping), which computes, from a video sequence, the camera pose together with a sparse point cloud representing the structure of the surrounding environment. It first detects feature points in every video frame and then finds the transformation that matches feature points between consecutive frames; from these correspondences the camera pose and the 3D point cloud can be computed.
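Once features are matched and the relative camera pose is known, each correspondence can be turned into a 3D map point by triangulation. The sketch below shows linear (DLT) triangulation from two views with NumPy. The intrinsic matrix, the 5 mm camera motion, and the test point are hypothetical, and the pose-estimation step itself (for example, from an essential matrix) is assumed to have been done already rather than shown.

```python
import numpy as np

def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point.

    P1, P2: 3x4 projection matrices of the two views.
    x1, x2: matched pixel coordinates (u, v) in each view.
    """
    # Each view contributes two rows from u * (p3 . X) - (p1 . X) = 0, etc.
    A = np.stack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # Homogeneous solution: right singular vector of the smallest
    # singular value (least-squares null vector of A).
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point with a 3x4 camera matrix to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical pinhole intrinsics and a 5 mm sideways camera motion.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-5.0], [0.0], [0.0]])])

X_true = np.array([2.0, -1.0, 40.0])
X_est = triangulate_dlt(P1, P2, project(P1, X_true), project(P2, X_true))
print(X_est)  # should be close to X_true (noise-free matches)
```

With real endoscopic video the matches are noisy, so a linear estimate like this is usually only a starting point for nonlinear refinement such as bundle adjustment.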

In short, the SLAM system is the key element for achieving high accuracy in vision-based surgical navigation. Since feature extraction is at the beginning of the SLAM pipeline, how well it is done has a significant influence on all subsequent steps and on the final result. Finding a robust feature descriptor is therefore key to the SLAM system. Many feature descriptors have been proposed, such as SIFT, SURF, BRIEF, and ORB. All of these traditional descriptors use only local texture information, which is good enough in most indoor and outdoor navigation scenes. In surgical navigation, however, we often deal with images of smooth tissue surfaces that contain little local texture, so the traditional descriptors do not perform well. The extracted feature points can be very sparse and repetitive, which makes the subsequent feature matching less robust and the whole system error-prone.
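Binary descriptors such as BRIEF and ORB are compared by Hamming distance. The sketch below uses hypothetical 8-bit descriptors standing in for ORB's 256-bit ones to show nearest-neighbour matching with a Lowe-style ratio test, and why repetitive features hurt: when two candidates are equally close, the match is ambiguous and is discarded, so fewer correspondences survive. The 0.8 ratio threshold is an assumption for illustration, not a value taken from ORB-SLAM.

```python
def hamming(a: int, b: int) -> int:
    """Number of bits in which two binary descriptors differ."""
    return bin(a ^ b).count("1")

def match_with_ratio_test(query, train, ratio=0.8):
    """Brute-force nearest-neighbour matching of binary descriptors.

    A match is kept only if the best Hamming distance is clearly smaller
    than the second best (ratio test); ambiguous matches, typical of
    repetitive texture, are dropped.
    Returns a list of (query_index, train_index, distance) tuples.
    """
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        (d1, best), (d2, _) = ranked[0], ranked[1]
        if d1 < ratio * d2:
            matches.append((qi, best, d1))
    return matches

# Hypothetical 8-bit descriptors (ORB descriptors have 256 bits).
train = [0b11110000, 0b00001111, 0b11110011]
query = [0b00001110,  # distinctive: close to train[1] only
         0b11110001]  # repetitive: equally close to train[0] and train[2]
print(match_with_ratio_test(query, train))  # [(0, 1, 1)]
```

Only the distinctive query survives; on smooth tissue, where many descriptors look alike, this kind of rejection is what leaves the matching sparse.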

With the rise of deep learning, it has become possible to design a new kind of feature descriptor by training a neural network; we call it a dense learned descriptor. It combines global anatomical information with local texture information and computes a feature description for every pixel of the image. This can give more robust feature matching in endoscopic scenes and, in turn, better overall performance of surgical navigation.

The goal of this project is therefore to build a SLAM demo for endoscopic surgical navigation in the nasal cavity.

The approach is to integrate the dense descriptor developed by Xingtong Liu [7] into the ORB-SLAM framework [6].

The following figure shows the structure of the descriptor.

Dense matching can be parallelized on a modern GPU by treating all sampled source descriptors as a convolution kernel of size N × L × 1 × 1, where N, the number of query source keypoint locations, serves as the output channel dimension, and L, the length of the feature descriptor, serves as the input channel dimension of a standard 2D convolution. Applying this kernel to the dense target descriptor map produces N response maps, one per query keypoint, whose maxima give the matched locations.

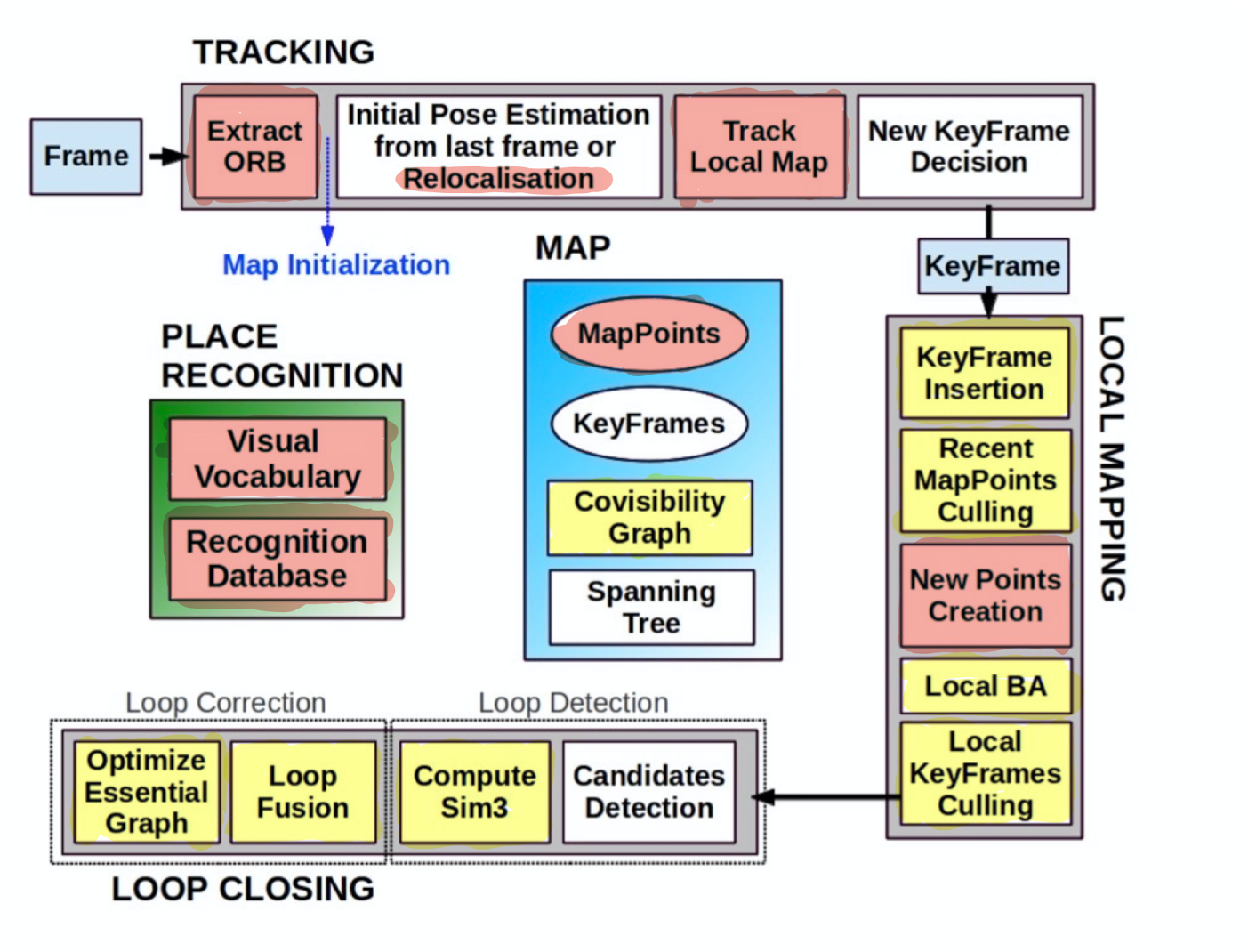
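The 1 × 1 convolution formulation of dense matching can be sketched on the CPU with NumPy. In this sketch, random arrays stand in for the network's dense descriptor maps, descriptors are L2-normalized so each response is a cosine similarity (an assumption of the sketch), `np.einsum` plays the role of the GPU convolution, and the function name `dense_match` is hypothetical. The target map is the source map shifted by (2, 3) pixels, so each keypoint's strongest response should land exactly at the shifted location.

```python
import numpy as np

def l2_normalize(x, axis):
    """Scale vectors along `axis` to unit length."""
    return x / np.linalg.norm(x, axis=axis, keepdims=True)

def dense_match(src_desc, tgt_desc, keypoints):
    """Match N source keypoints against every target pixel at once.

    src_desc, tgt_desc: dense descriptor maps of shape (L, H, W).
    keypoints: integer (row, col) array of shape (N, 2).
    Returns the best target (row, col) per keypoint and the
    (N, H, W) response maps.
    """
    src = l2_normalize(src_desc, axis=0)
    tgt = l2_normalize(tgt_desc, axis=0)
    # Sampled source descriptors form an N x L x 1 x 1 kernel.
    kernel = src[:, keypoints[:, 0], keypoints[:, 1]].T[:, :, None, None]
    # A 1x1 convolution is a dot product between each of the N kernels
    # and every target pixel's descriptor: N response maps of size H x W.
    response = np.einsum("nl,lhw->nhw", kernel[:, :, 0, 0], tgt)
    flat = response.reshape(len(keypoints), -1).argmax(axis=1)
    rows, cols = np.unravel_index(flat, response.shape[1:])
    return np.stack([rows, cols], axis=1), response

# Random stand-ins for learned descriptor maps (L=16, H=W=12).
rng = np.random.default_rng(0)
src_map = rng.standard_normal((16, 12, 12))
tgt_map = np.roll(src_map, shift=(2, 3), axis=(1, 2))
kps = np.array([[0, 0], [5, 7], [8, 4]])
matches, resp = dense_match(src_map, tgt_map, kps)
print(matches)  # each row should equal the keypoint shifted by (2, 3)
```

On a GPU, the same contraction can be written as a standard 2D convolution, for example PyTorch's `torch.nn.functional.conv2d` with the target map as a 1 × L × H × W input and the sampled descriptors as an N × L × 1 × 1 weight.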

The following figure shows the structure of ORB-SLAM.

Red color - Modules to be rewritten; Yellow color - Modules to be modified

Phase 1: Getting Started (1 day)

Phase 2: Code Writing (30 days)

Phase 3: Testing (10 days)

Phase 4: Tuning (10 days)

Phase 5: Evaluating I (10 days)

Phase 6: Evaluating II (10 days)

Phase 7: Summary (20 days)

References and reading material

1. S. Leonard, A. Sinha, A. Reiter, M. Ishii, G. L. Gallia, R. H. Taylor, et al. Evaluation and stability analysis of video-based navigation system for functional endoscopic sinus surgery on in vivo clinical data. 37(10):2185–2195, Oct. 2018.

2. A. R. Widya, Y. Monno, K. Imahori, M. Okutomi, S. Suzuki, T. Gotoda, and K. Miki. 3D reconstruction of whole stomach from endoscope video using structure-from-motion. In 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pages 3900–3904, 2019.

Abdomen SLAM

3. O. G. Grasa, E. Bernal, S. Casado, I. Gil, and J. Montiel. Visual SLAM for handheld monocular endoscope. IEEE Transactions on Medical Imaging, 33(1):135–146, 2013.

4. N. Mahmoud, I. Cirauqui, A. Hostettler, C. Doignon, L. Soler, J. Marescaux, and J. M. M. Montiel. ORBSLAM-based endoscope tracking and 3D reconstruction. In CARE@MICCAI, 2016.

Oral Cavity SLAM

5. L. Qiu and H. Ren. Endoscope navigation and 3D reconstruction of oral cavity by visual SLAM with mitigated data scarcity. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 2197–2204, 2018.

ORB SLAM

6. R. Mur-Artal, J. M. M. Montiel, and J. D. Tardós. ORB-SLAM: a versatile and accurate monocular SLAM system. IEEE Transactions on Robotics, 31(5):1147–1163, 2015.

7. X. Liu, Y. Zheng, et al. Extremely dense point correspondences in multi-view stereo using a learned feature descriptor. CVPR 2020.

Here give list of other project files (e.g., source code) associated with the project. If these are online give a link to an appropriate external repository or to uploaded media files under this name space (456-2020-07).