Contact Us

CiiS Lab

Johns Hopkins University

112 Hackerman Hall

3400 N. Charles Street

Baltimore, MD 21218

Directions

Lab Director

Russell Taylor

127 Hackerman Hall

rht@jhu.edu

We view the basic paradigm of patient-specific interventional medicine as a closed-loop process, consisting of 1) combining specific information about the patient with the physician’s general knowledge to determine the patient’s condition; 2) formulating a plan of action; 3) carrying out this plan; and 4) evaluating the results. Further, the experience gathered over many patients may be combined to improve treatment plans and protocols for future patients. This process has existed since ancient times. Traditionally, all these steps have taken place in the physician’s head. The ability of modern computer-based technology to assist humans in processing and acting on complex information will profoundly enhance this process in the 21st Century.

The CiiS Lab focuses broadly on all aspects of the systems and technologies associated with this basic process, including innovative algorithms for image processing and modeling, medical robots, imaging & sensing systems and devices, software, and human-machine cooperative systems. Our basic strategy involves working closely with clinicians, industry, and other collaborators on system-oriented, problem-driven engineering research.

Note: CiiS and related labs grew out of the NSF Engineering Research Center for Computer-Integrated Surgical Systems and Technology (CISST ERC). The ERC remains active, although the CISST web site has only been sporadically maintained since the $33M NSF seed money grant was spent out in 2009. Volume 1 of the ERC Final Report contains an excellent summary of the ERC progress up to that time.

Short summaries of the various research activities associated with CiiS are below, together with links to project summary pages. Where a project's “home” is more closely associated with another lab that cooperates with CiiS, the project page may be found on that lab's Wiki.

Note: We are still populating the project summary list and associated web links. Click Here for the TODO List.

This Bioengineering Research Partnership (BRP) focuses the efforts of highly qualified engineers and scientists from JHU (lead institution), Columbia and CMU, as well as surgeons from the JHU School of Medicine, to overcome human limitations in surgical practice. This project proposes to develop novel core technology and microsurgical tools with unique capability, as well as integrate computer assist systems. The effort will generate a computer assisted human user with enhanced microsurgical ability.

Microsurgical Assistant System Project Page

See also Eye Robots, Force Sensing Instruments, Optical Sensing Instruments, Multispectral Light Source, Microsurgery BRP Intranet Home Page (login required)

The Steady-Hand Eye Robot is a cooperatively-controlled robot assistant designed for retinal microsurgery. Cooperative control allows the surgeon to have full control of the robot, with his hand movements dictating exactly the movements of the robot. The robot can also be a valuable assistant during the procedure, by setting up virtual fixtures to help protect the patient, and by eliminating physiological tremor in the surgeon's hand during surgery.
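
The cooperative-control idea described above can be illustrated with a minimal one-axis sketch. This is not the Steady-Hand Eye Robot's actual controller: it assumes a simple admittance law (tool velocity proportional to the force the surgeon applies to the tool handle) and a first-order low-pass filter as a stand-in for tremor suppression. The class name, gain, smoothing factor, and speed limit are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class AdmittanceController:
    """One-axis cooperative-control sketch (hypothetical parameters)."""

    gain: float = 2.0        # assumed: mm/s of tool velocity per newton of handle force
    alpha: float = 0.1       # assumed: low-pass smoothing factor, 0 < alpha <= 1
    max_speed: float = 5.0   # assumed: speed limit in mm/s
    _filtered: float = field(default=0.0, init=False, repr=False)

    def step(self, handle_force: float) -> float:
        """Return the commanded velocity for one control cycle."""
        # First-order low-pass filter: fast oscillations (tremor) are attenuated,
        # while a steady push passes through almost unchanged.
        self._filtered += self.alpha * (handle_force - self._filtered)
        # Admittance law: velocity follows the (filtered) force applied by the hand.
        velocity = self.gain * self._filtered
        # Clamp to a speed limit as a simple safety bound.
        return max(-self.max_speed, min(self.max_speed, velocity))


if __name__ == "__main__":
    controller = AdmittanceController()
    # A steady 1 N push with a superimposed +/-0.5 N oscillation.
    for i in range(10):
        force = 1.0 + (0.5 if i % 2 == 0 else -0.5)
        print(round(controller.step(force), 4))
```

Because the robot only moves in response to the filtered hand force, the surgeon stays in control of the motion while the high-frequency component of the input is damped.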

Retinal microsurgery requires extremely delicate manipulation of retinal tissue. One of the main technical limitations in vitreoretinal surgery is lack of force sensing since the movements required for dissection are below the surgeon’s sensory threshold.

We are developing force sensing instruments to measure very delicate forces exerted on eye tissue. Fiber optic sensors are incorporated into the tool shaft to sense forces distal to the sclera, to avoid the masking effect of forces between the tools and sclerotomy. We have built 2DoF hook and forceps tools with force resolution of 0.25 mN. 3DoF force sensing instruments are under development.

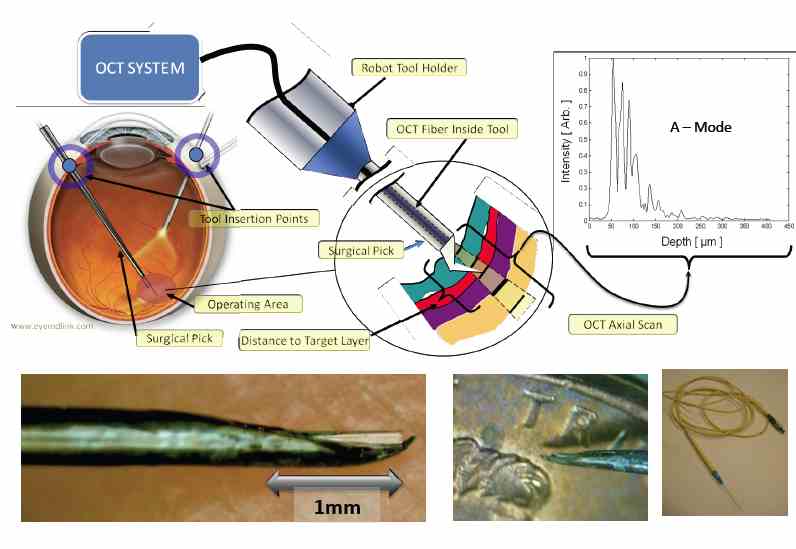

OCT provides very high resolution (micron scale) images of anatomical structures within the tissue. Within Ophthalmology, OCT systems typically perform imaging through microscope optics to provide 2D cross-sectional images (“B-mode”) of the retina. These systems are predominantly used for diagnosis, treatment planning, and in a few cases, for optical biopsy and image guided laser surgery. We are developing intra-ocular instruments that combine surgical function with OCT imaging capability in a very small form factor.

It has been shown that retinal surgical procedures often incur phototoxicity trauma of the retina as a result of illuminators used in surgery. In answer to this problem, we have developed a computer-controlled multispectral light source that drastically reduces retinal exposure to toxic white light during surgery.

The JHU Snake Robot is a telerobotic surgical assistant designed for minimally invasive surgery of the throat and upper airways. It consists of two miniature manipulators with distal dexterity inserted through a single entry port (a laryngoscope). Each dexterous manipulator is capable of motion in four degrees of freedom (dof) and contains a gripping device as the end effector. Three additional dof allow for coarse positioning in the x, y, and z directions, and a shaft rotation dof provides the surgeon the ability to roll the gripper while the snake is bent. Please visit the Snake Robot project page for further information.

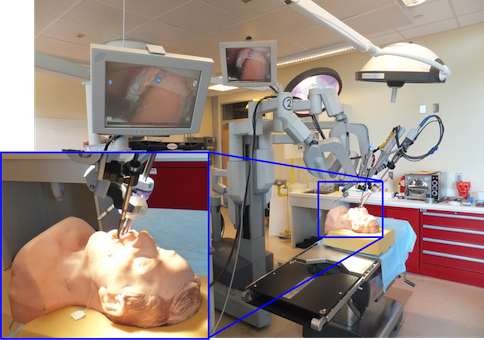

One major focus of research within our Center has been exploiting the fact that telesurgical systems such as Intuitive Surgical's da Vinci robot essentially put a computer between the surgeon and the patient. Examples include the development of virtual fixtures and cooperative manipulation methods, the integration of advanced sensing methods and technology with robotic devices, and augmented reality information overlay.

The goal of this project is to provide information assistance to a physician placing a biopsy or therapy needle into a patient's anatomy in an online intraoperative imaging environment. The project started within the CISST ERC at Johns Hopkins, as a collaboration between JHU and Dr. Ken Masamune, who was visiting from the University of Tokyo. It is now a collaborative project between JHU and the Laboratory for Percutaneous Surgery at Queen's University. The system combines a display and a semi-transparent mirror so arranged that the virtual image of a cross-sectional image on the display is aligned to the corresponding cross-section through the patient's body. The physician uses his or her natural eye-hand coordination to place the needle on the target. Although the initial development was done with CT scanners, more recently we have been developing an MR-compatible version.
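
The overlay geometry rests on a basic optical fact: a flat mirror forms the virtual image of each display point at its reflection across the mirror plane. The sketch below computes that reflection for a single point. It only illustrates the geometry, not the project's calibration procedure; the function name and the example coordinates are hypothetical.

```python
import math


def reflect_across_plane(point, plane_point, normal):
    """Reflect a 3D point across the plane through plane_point with the given normal."""
    length = math.sqrt(sum(c * c for c in normal))
    if length == 0.0:
        raise ValueError("plane normal must be non-zero")
    unit = [c / length for c in normal]
    # Signed distance from the point to the mirror plane.
    distance = sum((p - q) * n for p, q, n in zip(point, plane_point, unit))
    # The virtual image lies the same distance behind the mirror.
    return [p - 2.0 * distance * n for p, n in zip(point, unit)]


if __name__ == "__main__":
    # Hypothetical setup: mirror in the plane z = 0, display pixel 200 mm above it.
    display_pixel = (10.0, 0.0, 200.0)
    print(reflect_across_plane(display_pixel, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)))
```

If the display and mirror are mounted so that the reflected image plane coincides with the scanner's imaging plane, each pixel of the cross-sectional image appears at the corresponding location inside the patient.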

The goal of this project is the development of stereoscopic video augmentation on the da Vinci S System for intraoperative image-guided base of tongue tumor resection in transOral robotic surgery (TORS). We propose using cone-beam computed tomography (CBCT) to capture intraoperative patient deformation from which critical structures (e.g., the tumor and adjacent arteries), segmented from preoperative CT/MR, can be deformably registered to the intraoperative scene. Augmentation of TORS endoscopic video with surgical planning data defining the target and critical structures offers the potential to improve navigation, spatial orientation, confidence, and tissue resection, especially in the hands of less experienced surgeons.

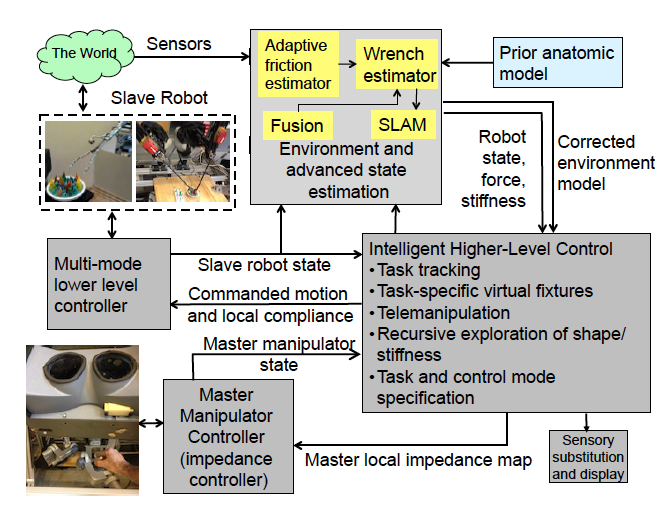

NRI-CSA stands for National Robotics Initiative - Complementary Situational Awareness for Human-Robot Partnerships.

This is a new 5-year collaborative project involving three teams at Vanderbilt, Carnegie Mellon, and Johns Hopkins University. The principal investigators on this grant are Dr. Nabil Simaan (Vanderbilt), Dr. Howie Choset (CMU), and Dr. Russell H. Taylor (JHU). The main research goals of this collaborative project remain unchanged relative to the funded proposal. The main goal of this research is to establish the foundations for Complementary Situational Awareness (CSA) to enable multifaceted human-robot partnerships. The following three main research objectives guide our effort:

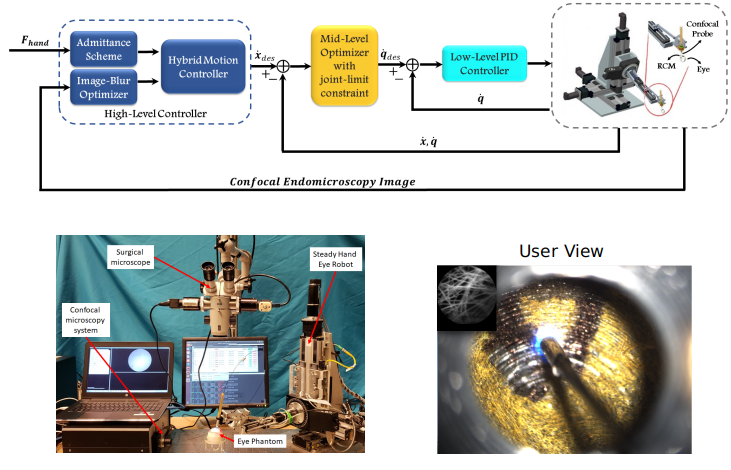

This is a joint work between Johns Hopkins University and Imperial College London. In this project, a novel sensorless framework is proposed for real-time semi-autonomous endomicroscopy scanning during retinal surgery. Through a hybrid motion control strategy, the system autonomously controls the confocal probe to optimize the sharpness and quality of the pCLE images, while providing the surgeon with the ability to scan the tissue in a tremor-free manner.

For more details, please follow this link or see the publication:

Z. Li, M. A. Shahbazi, N. Patel, E. O'Sullivan, H. Zhang, K. Vyas, P. Chalasani, P. L. Gehlbach, I. Iordachita, G.-Z. Yang, and R. H. Taylor, “A novel semi-autonomous control framework for retina confocal endomicroscopy scanning,” 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2019.

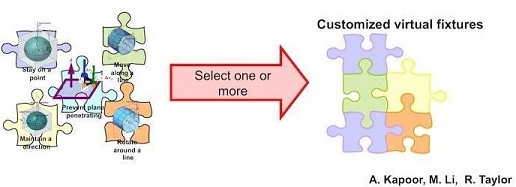

“Virtual fixtures” constrain the motion of the robot in various ways to assist the surgeon in carrying out surgical tasks. Finding computationally efficient and flexible means for specifying robot behavior is an important challenge in medical robotics, especially in cases where high dexterity is needed in confined spaces and in proximity to delicate anatomy. Our technical approach is based on the constrained optimization framework originally proposed by Funda and Taylor and subsequently extended to support a library of virtual fixture primitives.
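
To give a flavor of how a virtual fixture can be posed as a constrained optimization, the sketch below solves a deliberately tiny case: find the Cartesian velocity closest (in the least-squares sense) to the velocity the surgeon requests, subject to one linear inequality that keeps the tool tip on the safe side of a plane. With a single constraint the minimizer has a closed form, so no solver is needed. This is a simplified illustration, not the Funda and Taylor formulation or the lab's primitive library; the function name and all numbers are hypothetical.

```python
def constrain_velocity(v_des, normal, position, offset, dt):
    """Solve: minimize ||v - v_des||^2  subject to  normal . (position + v*dt) >= offset.

    All vectors are 3-element sequences; dt is the control period in seconds.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    nn = dot(normal, normal)
    if nn == 0.0 or dt <= 0.0:
        raise ValueError("normal must be non-zero and dt positive")
    # Rewrite the constraint as a bound on the velocity: normal . v >= bound.
    bound = (offset - dot(normal, position)) / dt
    violation = bound - dot(normal, v_des)
    if violation <= 0.0:
        # The requested motion already respects the fixture: pass it through.
        return list(v_des)
    # Otherwise project onto the constraint boundary along the plane normal,
    # which is the closest feasible velocity to v_des.
    scale = violation / nn
    return [v + scale * n for v, n in zip(v_des, normal)]


if __name__ == "__main__":
    # Hypothetical forbidden region: everything below the plane z = 0.
    tip = (0.0, 0.0, 1.0)          # tool tip 1 mm above the plane
    requested = (1.0, 0.0, -20.0)  # mm/s, would cross the plane in one 0.1 s step
    print(constrain_velocity(requested, (0.0, 0.0, 1.0), tip, 0.0, 0.1))
```

Motion tangent to the plane is untouched, and only the component that would carry the tip across the boundary is reduced. A practical system would typically stack many such constraints over joint velocities and hand them to a numerical solver.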

We are developing an image-guided robot system to provide mechanical assistance for skull base drilling, which is performed to gain access for some neurosurgical interventions, such as tumor resection. The motivation for introducing this robot is to improve safety by preventing the surgeon from accidentally damaging critical neurovascular structures during the drilling procedure. This system includes a NeuroMate robot, a StealthStation Navigation system and the Slicer3 visualization software.

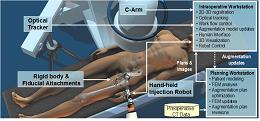

We are developing a software workstation to assist in performing femoral augmentation for osteoporotic patients. The surgical procedure consists of a series of bone cement injections into the femur. The bone augmentation system features preoperative biomechanical planning to determine the most advantageous cement injection site(s) and injection protocol to increase the strength of the femur. During the surgery, we will navigate the injection device and intraoperatively reconstruct virtual models of the injected cement.

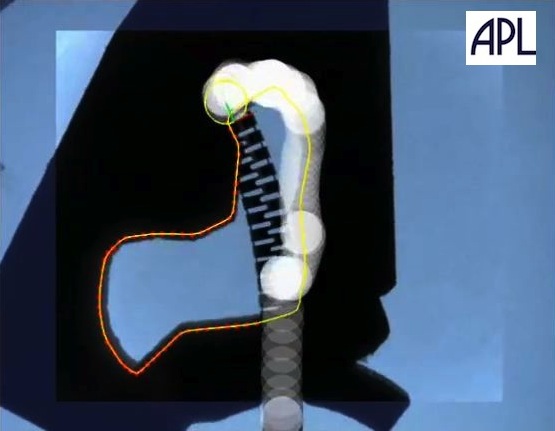

We have an active collaboration with the Johns Hopkins Applied Physics Laboratory to develop a robotic system for minimally-invasive curettage of osteolytic lesions in bone. One novel aspect of the system is a 6 mm diameter nitinol steerable “snake” end effector with a 4 mm lumen, which may be used to deploy a variety of tools into the cavity. Although the initial focus application is osteolysis associated with wear particles from orthopaedic implants, other potential applications include curettage of other osteolytic lesions such as bone metastases, intra-cardiac applications, and other high-dexterity MIS applications.

Curettage of Osteolytic Lesions

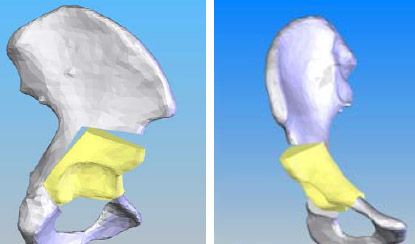

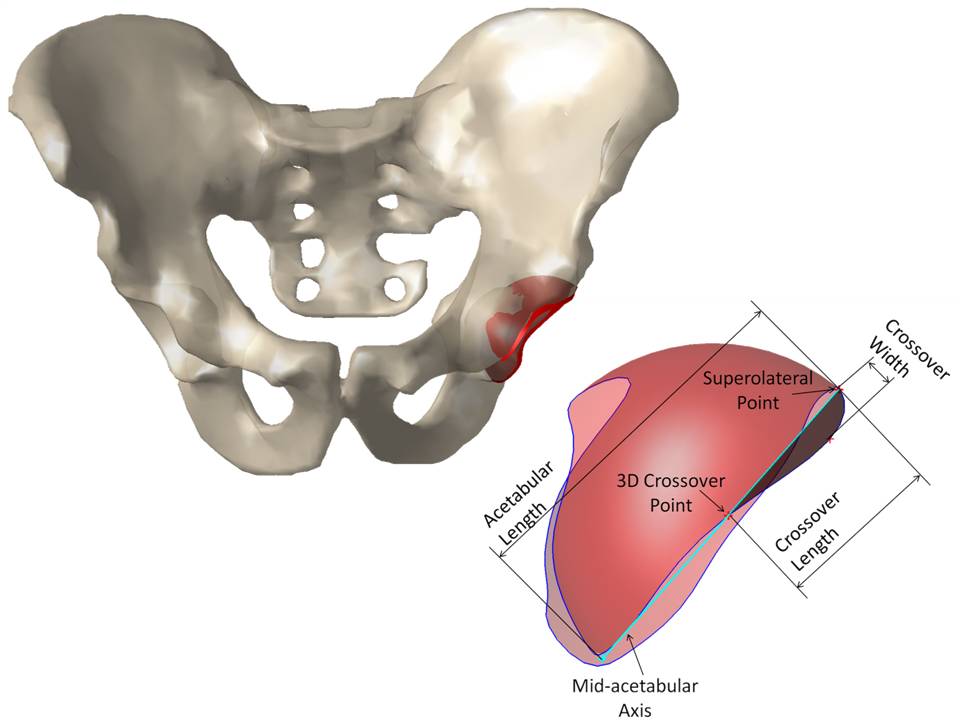

Periacetabular osteotomy (PAO) is a corrective surgery for patients exhibiting developmental dysplasia of the hip (DDH). We are interested in developing a computer-assisted surgical system with modules for preoperative planning and intraoperative guidance. To this end, we employ preoperative image processing to segment bony anatomy and discrete element analysis (DEA) to analyze the contact pressure distribution on the hip. Intraoperatively, we navigate using an infrared tracking system and provide real-time updates (both visual and quantitative) to the surgeon regarding the acetabular alignment.

Femoroacetabular impingement (FAI) is a condition in which there is abnormal contact between the proximal femur and acetabular rim leading to impingement. Two specific types of impingement have been identified: cam (related to the femoral neck) and pincer (related to the acetabulum). This condition can cause pain, limit range of motion, and lead to labral tears and/or hip arthritis. We are interested in developing computer-assisted diagnostic and treatment options for FAI, with the current focus being pincer impingement.

3D Ultrasound-Based System for Nimble, Autonomous Retrieval of Foreign Bodies from a Beating Heart

Explosions and similar incidents generate fragmented debris such as shrapnel that, via direct penetration or the venous system, can become trapped in a person's heart and disrupt cardiac function. This project focuses on the development of a minimally invasive surgical system for the retrieval of foreign bodies from a beating heart. A minimally invasive approach can significantly improve the management of cardiac foreign bodies by reducing risk and mortality, improving postoperative recovery, and potentially reducing operating room times associated with conventional open surgery. The system utilizes streaming 3D transesophageal echocardiography (TEE) images to track the foreign body as it travels about a heart chamber, and uses the information to steer a dexterous robotic end effector to capture the target. A system of this nature poses a number of interesting engineering challenges, including the design of an effective retrieval device, development of a small, dexterous, agile robot, and real-time tracking of an erratic projectile using 3D ultrasound, a noisy, low resolution imaging modality with artifacts.

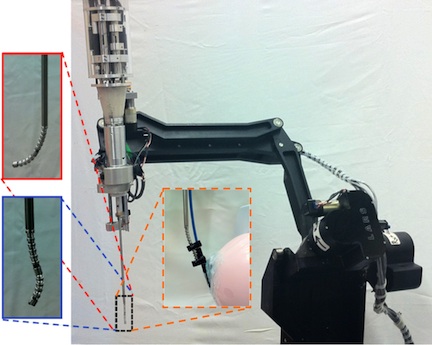

The aim of this project is to control the dexterous snake-like robot under ultrasound imaging guidance for ultrasound elastography. The image guidance is provided by an ultrasonic micro-array attached at the tip of the snake-like robot. In the robotic system developed, the snake-like unit is attached to the tip of the IBM Laparoscopic Assistant for Robotic Surgery (LARS) system [A], which can also be seen in the figure.

The main role of the LARSnake system in this project is to generate precise palpation motions along the imaging plane of the ultrasound array. An ultrasonic micro-array can acquire B-mode images of tissue. If both compressed and uncompressed images of the tissue are available, one can also compute elastography images (strain images) of the malignant tissue, which is the main purpose of this project.
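
As a rough illustration of what a strain image contains: strain is the spatial derivative of tissue displacement along the compression (axial) direction, so stiffer regions, which deform less, show lower strain magnitude. The sketch below computes axial strain along a single scan line using finite differences. It assumes the displacement between the uncompressed and compressed frames has already been estimated by some tracking method, which is the harder step and is not shown. The function name and sample values are hypothetical.

```python
def axial_strain(displacement, spacing):
    """Estimate strain along one scan line from axial displacement samples.

    displacement: displacement at each depth sample (same length unit as spacing)
    spacing: distance between consecutive depth samples
    """
    n = len(displacement)
    if n < 2 or spacing <= 0.0:
        raise ValueError("need at least two samples and a positive spacing")
    strain = []
    for i in range(n):
        # Central difference in the interior, one-sided difference at the ends.
        lo = max(i - 1, 0)
        hi = min(i + 1, n - 1)
        strain.append((displacement[hi] - displacement[lo]) / ((hi - lo) * spacing))
    return strain


if __name__ == "__main__":
    # Hypothetical scan line sampled every 0.5 mm: soft tissue compresses by 1%,
    # with a stiffer segment in the middle that barely deforms.
    u = [0.0, -0.005, -0.010, -0.0105, -0.011, -0.016, -0.021]
    print([round(s, 4) for s in axial_strain(u, 0.5)])
```

Repeating this for every scan line yields a 2D strain image; real pipelines usually smooth the displacement first, since differentiation amplifies noise.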

The goal of this project is to facilitate robot-assisted minimally invasive ultrasound elastography (USE) for surgeries involving the da Vinci surgical robot. Information from USE images will extend the range of applicable procedures and increase the robot’s capabilities. The da Vinci system brings many surgical advantages, including minimal invasiveness, precise and dexterous motion control, and an excellent visual field. These advantages come at the cost of surrendering the surgeon’s sense of feel and touch. Haptic information is significant for procedures such as organ-sparing resections that target removal of hidden tumors. In open surgery, a surgeon may locate an embedded tumor by manual palpation of tissue. Without haptic sensory information, robotic surgery requires other means to locate such tumors. USE imaging is a promising method to alleviate the impact of haptic sensory loss, because it recovers the stiffness properties of imaged tissue. USE peers deep into tissue layers, providing localized stiffness information superior to that of human touch.

Robot Assisted Laparoscopic Ultrasound Elastography (RALUSE)

Magnetic Resonance Imaging (MRI) guided robotic approaches have been introduced to improve prostate intervention procedures in terms of accuracy, time, and ease of operation. However, the robotic systems introduced so far have shortcomings that prevent clinical use, mainly insufficient system integration with MRI and the exclusion of clinicians from the procedure through autonomous robot designs. To overcome these problems, a 4-DOF pneumatically actuated robot was developed for transperineal prostate intervention under MRI guidance in a collaborative project by SPL at Harvard Medical School, Johns Hopkins University, the AIM Lab at Worcester Polytechnic Institute, and the Laboratory for Percutaneous Surgery at Queen's University, Canada. At the moment, we are finalizing pre-clinical experiments in preparation for upcoming patient experiments. We have also been granted funding for another 5 years (until 2015) to develop the third version of the robot, which not only addresses shortcomings of the previous versions but also provides the ability to perform teleoperated needle maneuvering under real-time MRI guidance with haptic feedback. Please keep visiting this website for the latest update!

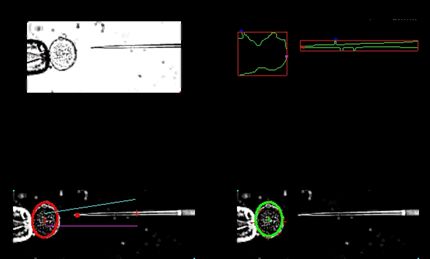

Bio-manipulation tasks find wide applications in transgenic, biomedical and pharmaceutical research, including common biomedical laboratory tasks such as manipulating cells. The objective of this research is to develop and evaluate methods for performing micrometer scale laboratory bio-manipulation tasks. These tasks form basic enabling components of methods for cell manipulation and cellular microinjections in the development of transgenic models, cancer research, fertilization research, cytology, developmental biology and other basic biological sciences research. This research will create infrastructure for performing these bio-manipulation tasks with force and vision augmentation. Our preliminary results promise significant improvement in the performance of these tasks with augmentation. Specifically, we will evaluate comparative performance of tele-operated and direct manipulation methods with and without augmentation in these tasks.

This project aims to design a robotic system to drive a flexible laryngoscope such that it can be used intra-operatively for evaluation and resection of lesions within the upper aerodigestive system (larynx, hypopharynx, oropharynx). The end goal is to produce a robotically controlled distal-chip flexible laryngoscope with a working port such that all movements of the distal tip can be controlled by a single remote control or joystick. Using a robotic system to manipulate the laryngoscope has several distinct advantages over manual manipulation: 1) a robotic manipulator allows for greater accuracy than can be achieved manipulating the scope by hand, 2) since the robot is supporting the weight of the scope, surgeon fatigue is reduced, 3) since the scope can be completely manipulated with one hand, the number of personnel needed for the operation is reduced, 4) since the robot is in between the surgeon and the scope, active software and control features like motion compensation and virtual fixtures can be introduced. This project also has a significant clinical component, since the end goal is a clinically usable system meeting all clinical regulatory requirements.

The goal of this project is to develop a general purpose cooperatively controlled robotic system to improve surgical performance in head and neck microsurgery. The REMS is based on the technology developed for the Eye Surgical Assistant Workstation at JHU, holding surgical instruments together with the surgeon and sensing the surgeon's intent through a force sensor. However, since the robot has control over the instrument, it can filter out undesired motions like hand tremor, or constrain motions with virtual fixtures. Validation experiments in several representative areas of head and neck surgery, including stapedotomy, microlaryngeal phonosurgery, sinus surgery, and microvascular surgery are underway.

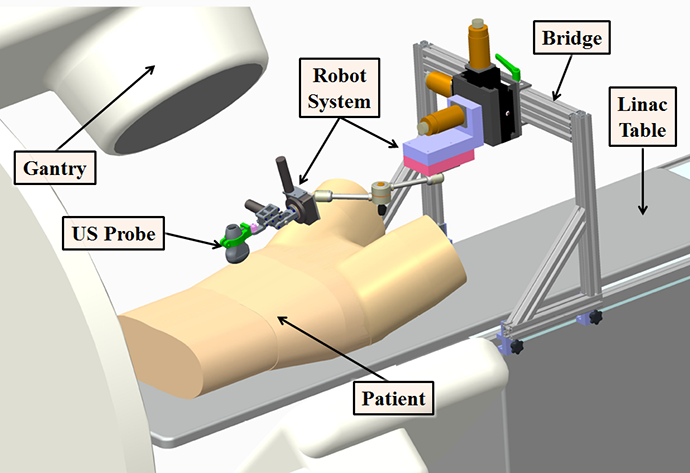

The goal of this project is to construct a robotically-controlled, integrated 3D x-ray and ultrasound imaging system to guide radiation treatment of soft-tissue targets. We are especially interested in registration between the ultrasound images and the CT images (from both the simulator and accelerator), because this enables the treatment plan and overall anatomy to be fused with the ultrasound image. However, ultrasound image acquisition requires relatively large contact forces between the probe and patient, which leads to tissue deformation. One approach is to apply a deformable (nonrigid) registration between the ultrasound and CT, but this is technically challenging. Our approach is to apply the same tissue deformation during CT image acquisition, thereby removing the need for a non-rigid registration method. We use a model (fake) ultrasound probe to avoid the CT image artifacts that would result from using a real probe. Thus, the requirement for our robotic system is to enable an expert ultrasonographer to place the probe during simulation, record the relevant information (e.g., position and force), and then allow a less experienced person to use the robot system to reproduce this placement (and tissue deformation) during the subsequent fractionated radiotherapy sessions.

Robotic Assisted Image-Guided Radiation Therapy

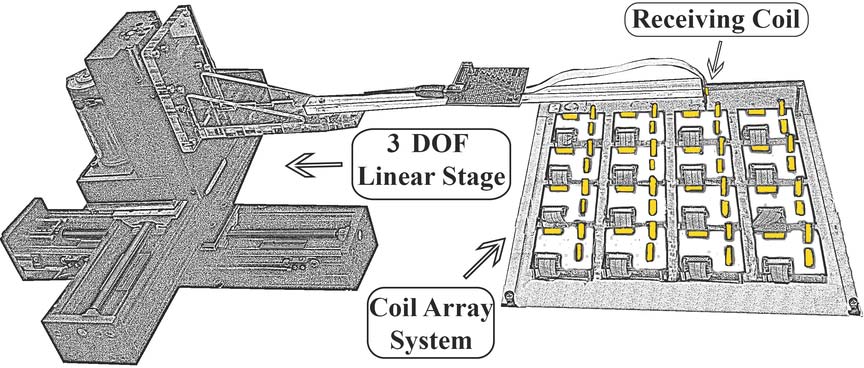

This is a joint project with the Fraunhofer IPA in Stuttgart, Germany. The goal is to develop surgical tracking technology that is accurate, robust against environmental disturbances, and does not require line-of-sight. We are developing a hybrid tracking approach that combines electromagnetic tracking (EMT) and inertial sensing to create a tracking system with high accuracy, no line-of-sight requirement, and minimal susceptibility to environmental effects such as nearby metal objects. Our initial experiments used an off-the-shelf EMT system and inertial measurement unit (IMU), but this introduced two severe limitations in our sensor fusion approach: (1) the EMT and IMU readings were not well synchronized, and (2) we only had access to the final results from each system (e.g., the position/orientation from the EMT) and thus could not consider sensor fusion of the raw signals. We therefore developed custom hardware to overcome these limitations.
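
To make limitation (1) concrete: when two sensors sample at different instants, their readings must be brought onto a common time base before they can be fused. A common software-side workaround, sketched below, is to interpolate the slower stream at the timestamps of the faster one. This is only an illustration of the synchronization problem, not the custom hardware solution described above, and it assumes both devices report timestamps from a shared clock, which off-the-shelf systems may not provide. The function name, rates, and values are hypothetical.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a scalar signal at time t.

    timestamps must be strictly increasing; outside the sampled range the
    nearest sample is returned (no extrapolation).
    """
    if not timestamps or len(timestamps) != len(values):
        raise ValueError("timestamps and values must be non-empty and equal length")
    if t <= timestamps[0]:
        return values[0]
    if t >= timestamps[-1]:
        return values[-1]
    i = bisect_left(timestamps, t)          # first sample at or after t
    t0, t1 = timestamps[i - 1], timestamps[i]
    weight = (t - t0) / (t1 - t0)
    return values[i - 1] + weight * (values[i] - values[i - 1])


if __name__ == "__main__":
    # Hypothetical streams: EMT position (one axis) at 40 Hz, IMU samples at 100 Hz.
    emt_times = [0.000, 0.025, 0.050, 0.075]
    emt_x_mm = [10.0, 10.5, 11.0, 11.5]
    imu_times = [0.00, 0.01, 0.02, 0.03, 0.04, 0.05]
    aligned = [interpolate_at(emt_times, emt_x_mm, t) for t in imu_times]
    print([round(x, 3) for x in aligned])
```

Interpolation cannot recover an unknown latency between the two devices, which is one reason hardware-level synchronization is attractive.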

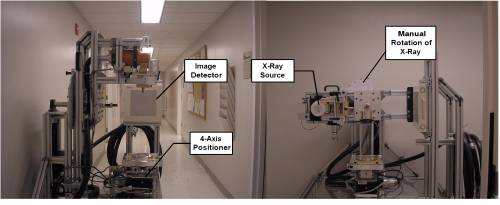

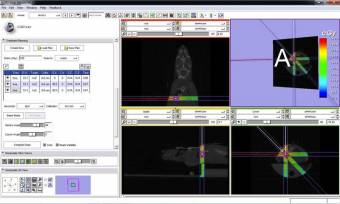

In cancer research, well characterized small animal models of human cancer, such as transgenic mice, have greatly accelerated the pace of development of cancer treatments. The goal of the Small Animal Radiation Research Platform (SARRP) is to make those same models available for the development and evaluation of novel radiation therapies. SARRP can deliver high resolution, sub millimeter, optimally planned conformal radiation with on-board cone-beam CT (CBCT) guidance. SARRP has been licensed to Xstrahl (previously Gulmay Medical) and is described on the Xstrahl Small Animal Radiation Research Platform page. Recent developments include a Treatment Planning System (TPS) module for 3D Slicer that allows the user to specify a treatment plan consisting of x-ray beams and conformal arcs and uses a GPU to quickly compute the resulting dose volume.


Active: 2005 - Present

This is a collaborative research project with Curexo Technology Corporation, developer and manufacturer of the ROBODOC® System.

For this project, we built the entire software stack of the robot system for research, from an interface to a commercial low-level motor controller to various applications (e.g., robot calibration, surgical workflow), using real-time operating systems with the component-based software engineering approach. We use the cisst package and Surgical Assistant Workstation components as the component-based framework.

This system is used to investigate several topics, such as the exploration of kinematic models, new methods for calibration of robot kinematic parameters, and safety research of component-based medical and surgical robot systems. We also use this system for graduate-level course projects, such as the Enabling Technology for Robot Assisted Ultrasound Tomography project from 600.446 Advanced Computer Integrated Surgery (CIS II).

One common theme in research in our laboratory and elsewhere is combining prior information about an individual patient or patient populations with new images to produce an improved model of the patient.

Examples may be found throughout our research writeups (e.g., Sparse X-Ray Reconstruction, 3D ACL Tunnel Position Estimation).

Some more examples may be found on the “Fusion of Images with Prior Data” page.

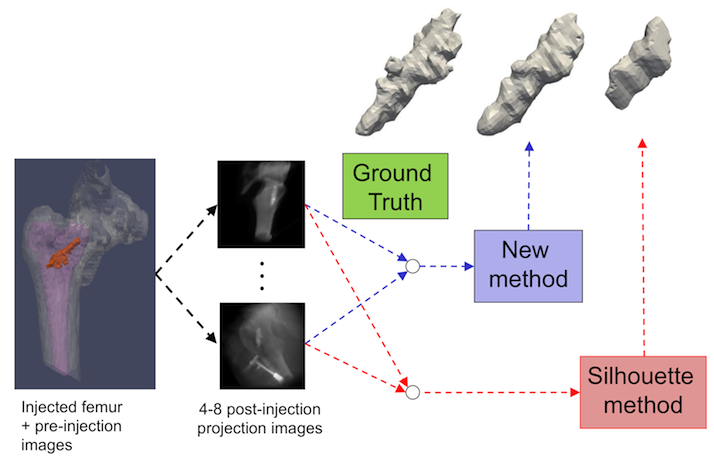

The Sparse X-Ray Reconstruction project aims to develop algorithms for reconstructing 3D models of objects from a few X-Ray projection images. The SxMAC algorithm uses multi-view active contour techniques to change the shape of a deformable model until the simulated appearance of the model that would be observed in X-Ray images matches the acquired images. The algorithm is applicable to intra-operative procedures where an object is injected or inserted into a patient, and the surgeon is interested in knowing the shape, orientation, and location of the object relative to the patient. The target application for this algorithm is a surgical procedure where bone cement is injected into a patient's osteoporotic femur to reinforce the bone. The surgeon is interested in knowing the shape of the cement during the injection process to guide execution of the surgical plan. More Info


- B. C. Lucas, Y. Otake, M. Armand, and R. H. Taylor, “A Multi-view Active Contour Method for Bone Cement Segmentation in C-Arm X-Ray Images”, IEEE Trans. Medical Imaging, in press (epub date Oct 13), 2011. PMID 21997251.

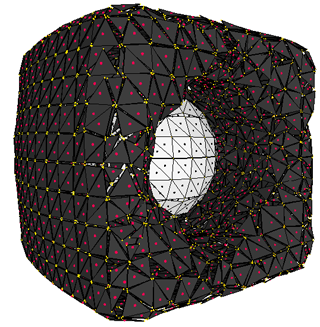

Deformable models are used in medical imaging for registering, segmenting, and tracking objects. A variety of geometric representations exist for deformable models. There are several imaging applications that execute a series of tasks which each favor a particular geometric representation. There are three major geometric representations: meshes, level sets, and particle systems. Each representation lacks a key feature that hinders its ability to perform one of the three core tasks for deformable models. In order to use the preferred representation for each task, intermediate steps are introduced to transform one representation into another. This leads to additional overhead, loss of information, and a fragmented system. The Spring Level Set (springls) representation unifies meshes, level sets, and particles into a single geometric representation that preserves the strengths of each, enabling simultaneous registration, segmentation, and tracking. In addition, the springls deformation algorithm is an embarrassingly parallel problem that can run at interactive frame rates on the GPU or CPU. More Info


- B. C. Lucas, M. Kazhdan, and R. H. Taylor, “SpringLS: A Deformable Model Representation to provide Interoperability between Meshes and Level Sets”, MICCAI, Toronto, Sept 18-22, 2011.

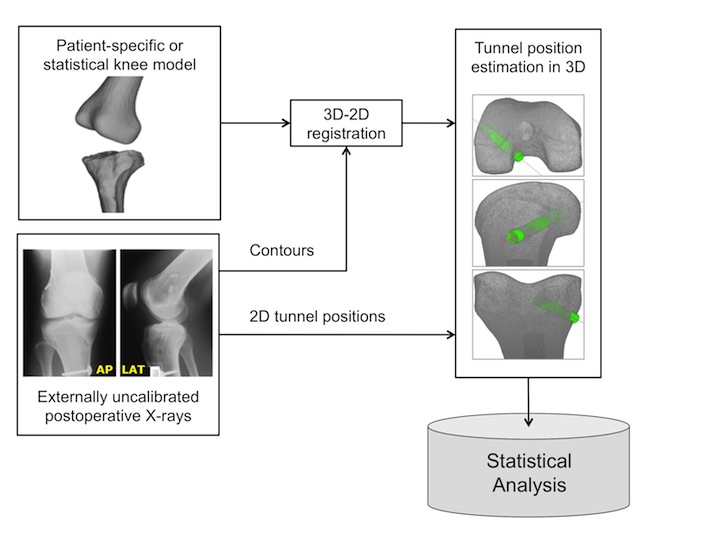

We are developing a 2D-3D registration method using post-operative radiographs to estimate the tunnel positions in Anterior Cruciate Ligament (ACL) reconstructions by registering a patient-specific 3D model of the knee with two post-operative X-rays. This could facilitate the precise identification of the tunnel locations as well as the perception of their relationship to the topographical osseous anatomic landmarks. The success in developing and validating the proposed workflow will allow convenient but precise assessment of tunnel positions in patients receiving ACL reconstruction with minimal risk of radiation hazard.


The method may also be used in statistical studies to assess variations in surgical technique and their effects on outcomes.

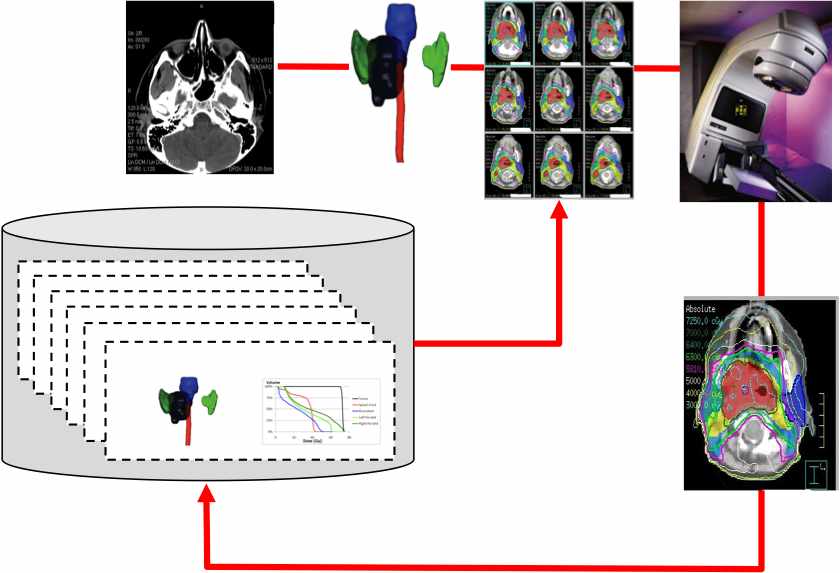

This joint project between the JHU CS Department and the JHU Radiation Oncology Department explores the statistical relationship between anatomic shape and treatment planning in radiation oncology. In one current project, our goal is to use a database of previously treated patients to improve radiation therapy planning for new patients. The key idea is that the geometric relationship between the tumor and surrounding critical anatomic structures has a crucial role in determining a dose distribution that treats the tumor while sparing critical structures. We have developed a novel shape descriptor, the Overlap Volume Histogram (OVH), which characterizes the proximity of the treatment volume to critical structures. The OVH is then used as an index into our patient database. Retrieved information may be used both for plan quality sanity checking and as a means of speeding up the initial stages of treatment planning.
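As a rough illustration of the shape descriptor, the sketch below computes an overlap-volume-style histogram on voxel masks. It assumes that the histogram value at distance t is the fraction of a critical structure's volume lying within t of the target, and it uses unsigned, brute-force voxel distances for brevity. The function name, its arguments, and the toy masks are hypothetical and are not taken from the project's code.

```python
import numpy as np

def overlap_volume_histogram(organ_mask, target_mask, distances, spacing=1.0):
    """Fraction of organ voxels within each distance of the target (sketch)."""
    organ_pts = np.argwhere(organ_mask) * spacing
    target_pts = np.argwhere(target_mask) * spacing
    # Brute-force distance from every organ voxel to its nearest target voxel.
    diff = organ_pts[:, None, :] - target_pts[None, :, :]
    nearest = np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)
    return np.array([(nearest <= d).mean() for d in distances])

# Toy example: a one-voxel target and a four-voxel organ along one axis.
target = np.zeros((1, 1, 5), dtype=bool)
target[0, 0, 0] = True
organ = np.zeros((1, 1, 5), dtype=bool)
organ[0, 0, 1:5] = True

ovh = overlap_volume_histogram(organ, target, distances=[0.5, 1.0, 2.0, 4.0])
print(ovh)
```

An organ whose histogram rises at small distances sits close to the target, which is the kind of geometric similarity a database lookup could key on.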


In closely related work, we explore advanced computational methods for radiation therapy treatment planning.

This work introduces a new variant of the popular Iterative Closest Point (ICP) algorithm for computing alignment between two shapes. Many variants of ICP have been introduced, most of which compute alignment based on positional shape data. We introduce a new probabilistic variant of ICP, Iterative Most Likely Oriented Point (IMLOP), which incorporates both shape position and surface orientation data to compute shape alignment. Our tests demonstrate improvement over ICP in terms of registration accuracy, registration run-time, and the ability to automatically detect whether a registration result is indeed the “correct” registration.
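To make the idea of matching on both position and orientation concrete, the sketch below shows a single correspondence step that scores candidate matches with a positional term plus a normal-agreement term. This is an illustrative cost only, not the IMLOP implementation: the cost form, the function name, and the weights `sigma` and `k` are assumptions made for this example.

```python
import numpy as np

def match_oriented_points(src_pts, src_normals, tgt_pts, tgt_normals,
                          sigma=1.0, k=0.0):
    """Return, for each source point, the index of the lowest-cost target."""
    # Squared positional distance between every source/target pair.
    d2 = ((src_pts[:, None, :] - tgt_pts[None, :, :]) ** 2).sum(axis=2)
    # Cosine of the angle between unit normals for every pair.
    cos = src_normals @ tgt_normals.T
    # Assumed cost: positional term plus a penalty for disagreeing normals.
    cost = d2 / (2.0 * sigma ** 2) + k * (1.0 - cos)
    return cost.argmin(axis=1)

src_pts = np.array([[0.0, 0.0, 0.0]])
src_normals = np.array([[0.0, 0.0, 1.0]])
tgt_pts = np.array([[0.4, 0.0, 0.0], [0.6, 0.0, 0.0]])
tgt_normals = np.array([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])

position_only = match_oriented_points(src_pts, src_normals, tgt_pts, tgt_normals, k=0.0)
with_orientation = match_oriented_points(src_pts, src_normals, tgt_pts, tgt_normals, k=1.0)
print(position_only, with_orientation)
```

With `k=0` the nearest target wins even though its normal is perpendicular to the source normal; with `k=1` the slightly farther target with the matching normal is selected instead.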

The model completion projects aim to estimate an entire anatomical structure when only partial knowledge is available.


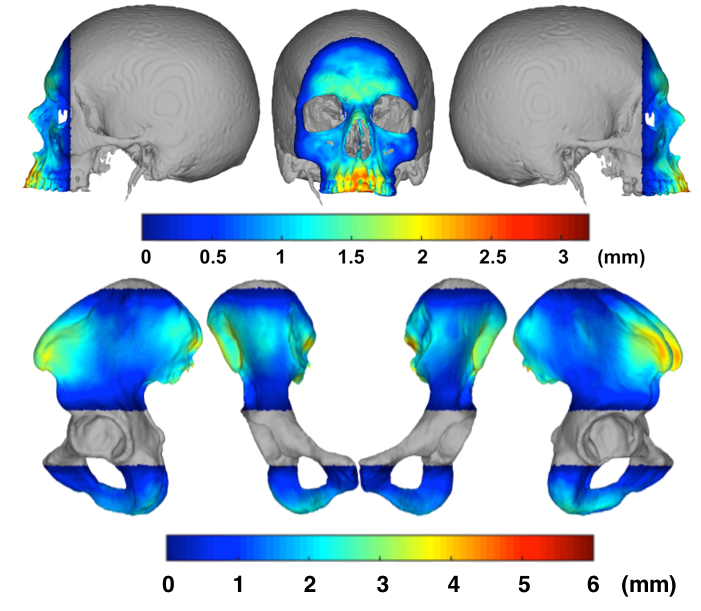

One clinical motivation is planning for restorative surgery of a patient who has experienced some trauma, but for whom no prior 3D imaging is available. More specifically, we consider Total Face-Jaw-Teeth Replacement surgery. In this scenario, the original cephalometric measurements associated with the patient are not available due to missing or damaged skeletal structure; however, these measurements can aid in surgical planning. Using the current undamaged, or known, anatomy of the skull and mandible surfaces, we estimate the currently unknown regions.

Another clinical application is completion of a patient's anatomy when only a partial CT is available. This scenario is plausible for computer-assisted hip surgeries of young patients. Exposing reproductive organs to the radiation associated with a CT scan is not desirable; therefore, we propose that a partial CT be taken and the remainder of the surface extrapolated.

First, a statistical (PCA) model of the appropriate, complete anatomical surfaces is created using the CT scans of other patients. The statistical model is partitioned into sets of vertices that represent the current patient's known and unknown anatomical regions, and the patient's known vertices, along with zeroes in the unknown region, are projected onto the statistical model to estimate the entire surface. Around the boundary of the known shapes, a Thin Plate Spline is created to map vertices on the estimated surface to the actual patient surface. This mapping is then applied to the entire estimated surface in the unknown region and merged with the original surface of the patient's known anatomy.
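The projection step described above can be sketched as follows. This is a simplified illustration under stated assumptions: shapes are flattened coordinate vectors, the zeroes in the unknown region are applied to the mean-centered deviation, and the Thin Plate Spline correction is omitted (the known values are simply copied back). The function names and the toy data are hypothetical.

```python
import numpy as np

def build_pca_model(training_shapes, n_modes):
    """Return the mean shape and the leading PCA modes (as columns)."""
    mean = training_shapes.mean(axis=0)
    _, _, vt = np.linalg.svd(training_shapes - mean, full_matrices=False)
    return mean, vt[:n_modes].T

def complete_shape(mean, modes, partial, known):
    """Estimate a full shape from the entries flagged in the boolean mask `known`."""
    deviation = np.zeros_like(mean)
    # Known entries carry the measured deviation; unknown entries stay zero.
    deviation[known] = partial[known] - mean[known]
    coeffs = modes.T @ deviation
    estimate = mean + modes @ coeffs
    # Stand-in for the merge step: keep the measured values where available.
    estimate[known] = partial[known]
    return estimate

# Toy data: five training shapes that vary along a single mode.
base = np.array([10.0, 20.0, 30.0, 40.0])
mode = np.full(4, 0.5)
training = base + np.outer([-2.0, -1.0, 0.0, 1.0, 2.0], mode)
mean, modes = build_pca_model(training, n_modes=1)

known = np.array([True, True, True, False])
partial = np.array([11.0, 21.0, 31.0, 0.0])  # last entry is a placeholder
estimate = complete_shape(mean, modes, partial, known)
print(estimate)
```

In this sketch the zero padding pulls the estimate in the unknown region toward the mean shape, which is one reason a subsequent correction near the known boundary is useful.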

Leave-one-out analysis has shown that skull and mandible surfaces may be extrapolated to within 1.5 mm (RMS surface distance) when over 50% of the structure is unknown. For the orthopedic cases, leave-one-out analysis shows that an entire pelvis surface may be estimated to within 2.5 mm (RMS surface distance) when the partial CT consists of only the acetabular joint region and the superior 5% of the iliac crest.


The cisst software package is a collection of libraries designed to ease the development of computer-assisted intervention systems. One motivation is the development of a Surgical Assistant Workstation (SAW), which is a platform that combines robotics, stereo vision, and intraoperative imaging (e.g., ultrasound) to enhance a surgeon's capabilities for minimally invasive surgery (MIS). All software is available under an open source license. The source code can be obtained from GitHub, https://github.com/jhu-cisst/cisst.

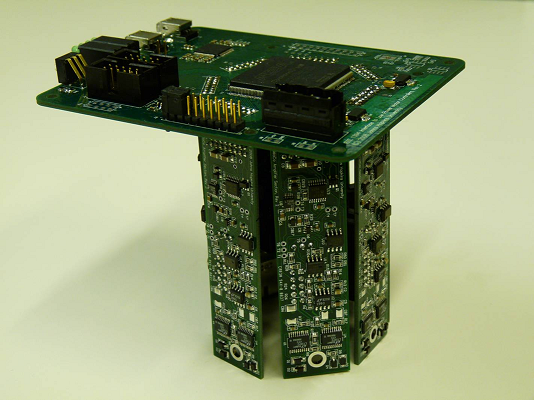

Research in surgical robots often calls for multi-axis controllers and other I/O hardware for interfacing various devices with computers. As the need for dexterity is increased, the hardware and software interfaces required to support additional joints can become cumbersome and impractical. To facilitate prototyping of robots and experimentation with large numbers of axes, it would be beneficial to have controllers that scale well in this regard.


High-speed serial buses such as IEEE 1394 (FireWire) and low-latency field-programmable gate arrays (FPGAs) make it possible to consolidate multiple data streams into a single cable. Contemporary computers running real-time operating systems have the ability to process such dense data streams, thus motivating a centralized processing, distributed I/O control architecture. This is particularly advantageous in education and research environments.

This project involves the design of a real-time (one kilohertz) robot controller inspired by these motivations and capitalizing on accessible yet powerful technologies. The device is developed for the JHU Snake Robot, a novel snake-like manipulator designed for minimally invasive surgery of the throat and upper airways.

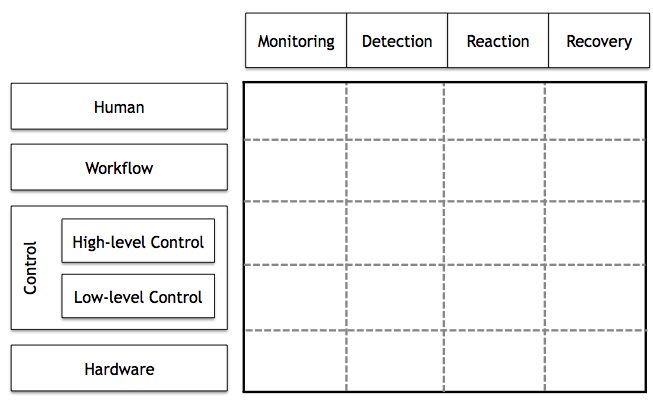

As robot systems increasingly operate in close proximity to humans, their safety is receiving more attention in the robotics community, both in academia and industry. However, safety has not received much attention within the medical and surgical robotics domain, despite its crucial importance. Another practical issue is that building safe medical and surgical robot systems is not a trivial process, because typical computer-assisted intervention applications combine different sets of devices such as haptic interfaces, tracking systems, imaging systems, and robot controllers. This increases the scale and complexity of a system, making it increasingly hard to satisfy both the functional and non-functional requirements of the system.


This project investigates the issue of safety of medical robot systems with the consideration of run-time aspects of component-based software systems. The goal is to improve the safety design process and to facilitate the development of robot systems with the consideration of safety, thereby building safe medical robot systems in a more effective, verifiable, and systematic manner. Our first step is to establish a conceptual framework that can systematically capture and present the design of safety features. The next step is to develop a software framework that can actually implement and realize our approach within component-based robot systems. As validation, we apply our approach and the developed framework to an actual commercial robot system for orthopaedic surgery, called the ROBODOC System (see above).

For this research, we use the cisst libraries, an open source C++ component-based software framework, and Surgical Assistant Workstation (SAW), a collection of reusable components for computer-assisted interventional systems.

This project implements a collection of haptic virtual fixture widgets within the cisst/SAW environment using an interface provided by the daVinci research API. More Info

The goal of this project is to accurately track 3D tool motion from stereo microscope video. This tool motion data will be used to analyze tool tremor in manual and robot-assisted surgery.

Physiological hand tremor has been measured at 38 μm in retinal microsurgery [1], and its frequency can vary between 4 and 9 Hz [2]. Hand tremor poses a risk to the patient in microsurgery because the surgeon must navigate through and operate on sub-millimeter structures. Surgical robots help remove hand tremor. At Johns Hopkins, Steady-Hand Robots have been developed. These are cooperative systems in which the robot and the surgeon both hold the tool: the surgeon pushes on the tool, and the robot reads the applied force and moves in the desired direction.

To compute the hand tremor from a surgical video, the tool must be tracked. To track the surgical tools accurately, we painted color markers on the tool shafts. Camera motion was detected and computed by tracking color markers in the background, and the apparent tool movement was then compensated for this camera movement. The 3D trajectory of the tool can be transformed into the frequency domain and the motion above the tremor threshold isolated.
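A minimal sketch of the frequency-domain step is shown below. It assumes a uniformly sampled 3D trajectory and treats the 4 to 9 Hz range quoted above as the tremor band; the function name, the 60 Hz sample rate, and the synthetic signal are assumptions for illustration, not the project's processing pipeline.

```python
import numpy as np

def isolate_band(trajectory, sample_rate_hz, low_hz=4.0, high_hz=9.0):
    """Keep only the frequency components of an (N, 3) trajectory in [low, high] Hz."""
    n = trajectory.shape[0]
    spectrum = np.fft.rfft(trajectory, axis=0)
    freqs = np.fft.rfftfreq(n, d=1.0 / sample_rate_hz)
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    spectrum[~keep] = 0.0  # zero everything outside the assumed tremor band
    return np.fft.irfft(spectrum, n=n, axis=0)

# Synthetic test signal: slow voluntary motion plus a 6 Hz, 0.038 mm tremor on x.
sample_rate_hz = 60.0
t = np.arange(120) / sample_rate_hz
voluntary = 1.0 * np.sin(2 * np.pi * 0.5 * t)
tremor = 0.038 * np.sin(2 * np.pi * 6.0 * t)
trajectory = np.zeros((t.size, 3))
trajectory[:, 0] = voluntary + tremor

tremor_estimate = isolate_band(trajectory, sample_rate_hz)
print(np.abs(tremor_estimate[:, 0]).max())
```

Real tracking data would not fall exactly on FFT bins as this synthetic signal does, so windowing or a proper band-pass filter design would likely be needed in practice.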

References:

- [1] S. P. N. Singh and C. N. Riviere, “Physiological tremor amplitude during retinal microsurgery,” in Bioengineering Conference, Philadelphia, 2002.
- [2] R. N. Stiles, “Frequency and displacement amplitude relations for normal hand tremor,” Journal of Applied Physiology, vol. 40, no. 1, pp. 44-54, 1976.
- [3] K. C. Olds, P. Chalasani, P. Pacheco-Lopez, I. Iordachita, L. M. Akst and R. H. Taylor, “Preliminary evaluation of a new microsurgical robotic system for head and neck surgery,” in International Conference on Intelligent Robots and Systems, Chicago, 2014.

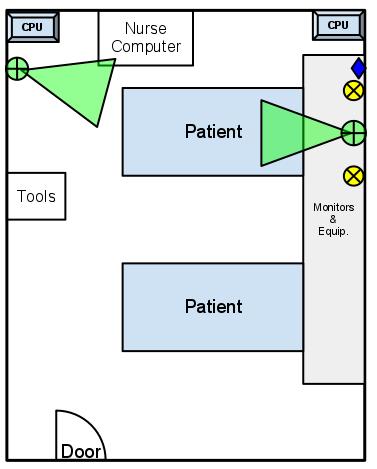

Process inefficiencies and underspecified physician orders and protocols in an Intensive Care Unit (ICU) can induce unnecessary workflow problems for nurses and cause sub-optimal conditions for the patient. During a patient’s stay there are many hundreds of small tasks that need to be completed, often in a specific sequence, and coordinated in time by a team of up to a hundred staff members. These include actions like giving medication, emptying chest tubes, and documenting vital signs. We are working on developing activity recognition techniques for logging the patient-staff interactions to improve workflow and potentially increase patient safety. An Xbox Kinect and other sensors are being used to monitor the ICU over extended periods of time and will be critical in identifying the tasks being performed.


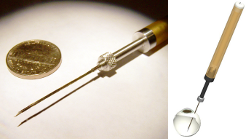

In this undergraduate research project, we have developed novel apparatus to assist in the extraction of salivary glands from mosquitoes. Mosquito-borne diseases such as malaria and yellow fever are among the most serious challenges to public health worldwide, affecting over 700 million people per year. In the case of malaria, one promising vaccine approach involves live organisms (Plasmodium falciparum) harvested from the salivary glands of Anopheles mosquitoes. One commercial effort to develop such a vaccine is being undertaken by Sanaria, Inc. (www.sanaria.com). Although the vaccine is showing promise clinically, one significant barrier to producing sufficient quantities of vaccine for large-scale trials or inoculation campaigns is the extraction of the salivary glands from large numbers of infected mosquitoes. The current production process used by Sanaria requires the use of tweezers and a hypodermic needle to extract glands one at a time. Working with Sanaria, we have developed production fixtures that enable human operators to perform key steps of this process in parallel, resulting in a very significant reduction in per-mosquito dissection time while also significantly reducing the training time required per production worker.


Work to refine our apparatus and production workflow is continuing with Sanaria and an engineering firm (Keytech, Inc.), with a goal of introducing our apparatus into Sanaria’s GMP vaccine production process. A preliminary patent has been filed, and a full utility patent will be filed this spring. Work to develop a more fully automated production process is also beginning, and Sanaria has submitted an NIH SBIR proposal with JHU as a partner to expedite this work.

Funding: JHU internal funds, Contract from Sanaria, Inc.

Key Personnel: (JHU) Russell Taylor, Amanda Canezin, Mariah Schrum, Suat Coemert, Yunus Sevimli, Greg Chirikjian; (Sanaria) Steve Hoffman, Sumana Chakravarty, Michelle Laskowski