
Voice Control of a Surgical Robot

Summary

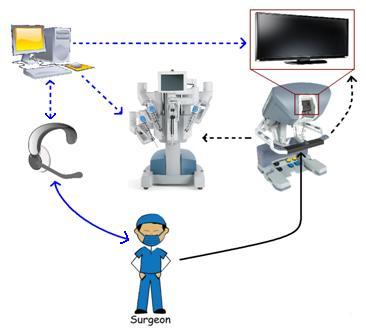

Our project explores voice integration with the da Vinci Surgical System from Intuitive Surgical®. We aim to give surgeons an alternative way to perform basic functions, such as varying the camera zoom or engaging the “clutch”, simply by using voice commands. We hope the same approach can also be applied to the menu and graphics overlay system previously developed for the da Vinci by the ERC CISST. Currently, many of these functions demand tedious or complicated gestures of the surgeon's hands and/or feet. Moreover, these gestures often require that the surgical tools be temporarily locked in place and the surgery briefly paused. We hope that voice integration will allow surgeons to devote more of their time during surgery to manipulating the surgical tools rather than to performing peripheral functions, and thus ultimately smooth the surgical process.

- Students: Lindsey Dean, H. Shawn Xu

- Mentor: Anton Deguet

Background, Specific Aims, and Significance

Currently, surgeons operate the da Vinci® machine using their hands and feet. The master console is equipped with foot pedals, and newer models even have kinesthetic combinations in which the surgeon must tap a leg against the side to perform a specific task. While these gestures are meant to be natural for the surgeon, it has been proposed that adding voice-to-command capability to the machine could further simplify the surgeon's interactions with it.

The CISST libraries have developed the 3D-UI, which allows the surgeon to interact with the images and obtain information such as the distance between points. Currently, however, the 3D-UI cannot be used while the surgeon manipulates the slave; instead, the surgeon's hands control a mouse pointer in the 3D-UI. Voice commands could give the surgeon an additional way of interacting with the interface without giving up the use of their hands to manipulate the machine.

Our specific aims are:

- Increase the ease of use of the da Vinci machine

- Add voice-control capability to 3D-UI

Deliverables

- Minimum:

- Well-documented program that adds singular functionality

- Status: [DONE] - measurement behavior

- Expected:

- Integration of voice and physical control (i.e., camera, clutch)

- Status: [DONE] for the 3D-User Interface (voice and gestures work together or separately to control it); [IN PROGRESS] for camera manipulation

- Add additional multi-state functionality

- Additional demonstration(s) that show different functions voice can perform on Da Vinci

- Status: [DONE] with marker behavior; [IN PROGRESS] for camera manipulation and viewing of a model

- Maximum:

- Fully-functioning library of states and commands that can easily be expanded upon

- Status: [IN PROGRESS] we need to reconfigure some of the existing behavior files in the CISST libraries and make the voice component flexible for future modification

Technical Approach

The surgeon will be able to speak commands into a microphone/headset to control what is displayed on the console display and the surgical robot. We also hope to implement speech feedback.

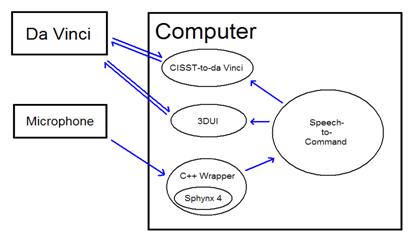

The general architecture of our software is as follows:

The surgeon's microphone communicates with Sphinx (which has a C++ wrapper), which in turn communicates with our speech-to-command system. This system sends commands to the existing CISST-to-da Vinci and 3DUI systems, which directly control the da Vinci robot and the console display. We also hope to add a further layer of feedback and interfacing through voice feedback.

The speech-to-command logic will use a state-based approach: the program keeps track of the current state of the system. To perform a voice-controlled task, the surgeon first speaks a command that changes the state of the system, then triggers the task with another command, and finally tells the system to revert to the default state or to switch to another state. Each state allows only a few commands, which reduces the risk of a misrecognized word triggering an unintended action. We may also add universal commands that are accepted in all states, such as STOP.
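A minimal sketch of this state-based approach, assuming a recognizer that hands over one word or phrase at a time. The context names, the spoken words “camera”, “zoom in” and “done”, and the behavior of the universal word (here it simply returns to the default context) are all hypothetical; the text does not specify them, and the surrounding system is C++ rather than Python.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple

DEFAULT = "Default"
Action = Optional[Callable[[], None]]


class VoiceInterpreter:
    """Tracks the current state (context) and accepts only the words
    registered for that context, plus a few universal words."""

    def __init__(self) -> None:
        self.context = DEFAULT
        # (context, word) -> (next context, optional task to trigger)
        self._commands: Dict[Tuple[str, str], Tuple[str, Action]] = {}
        self._universal: Set[str] = set()

    def add_command(self, context: str, word: str, next_context: str,
                    action: Action = None) -> None:
        self._commands[(context, word)] = (next_context, action)

    def add_universal(self, word: str) -> None:
        # Hypothetical behavior: a universal word returns to the default context.
        self._universal.add(word)

    def allowed_words(self) -> List[str]:
        """Words accepted in the current context (e.g. for an on-screen hint)."""
        words = {w for (c, w) in self._commands if c == self.context}
        return sorted(words | self._universal)

    def hear(self, word: str) -> bool:
        """Handle one recognized word; return False if it is ignored."""
        if word in self._universal:
            self.context = DEFAULT
            return True
        entry = self._commands.get((self.context, word))
        if entry is None:
            return False  # not allowed in this context, so do nothing
        next_context, action = entry
        if action is not None:
            action()
        self.context = next_context
        return True


# Usage: change state, trigger a task, then revert (hypothetical vocabulary).
log: List[str] = []
vi = VoiceInterpreter()
vi.add_universal("stop")
vi.add_command(DEFAULT, "camera", "Camera")
vi.add_command("Camera", "zoom in", "Camera", lambda: log.append("zoom in"))
vi.add_command("Camera", "done", DEFAULT)

vi.hear("zoom in")  # False: "zoom in" is not allowed in the default context
vi.hear("camera")   # True: context is now "Camera"
vi.hear("zoom in")  # True: the task runs and the context stays "Camera"
vi.hear("done")     # True: back to the default context
```

The small per-context vocabulary is what limits accidental triggers: any word that is not registered for the current context falls through the rejection path in `hear` and has no effect.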

Management Plan

Dependencies

Milestones

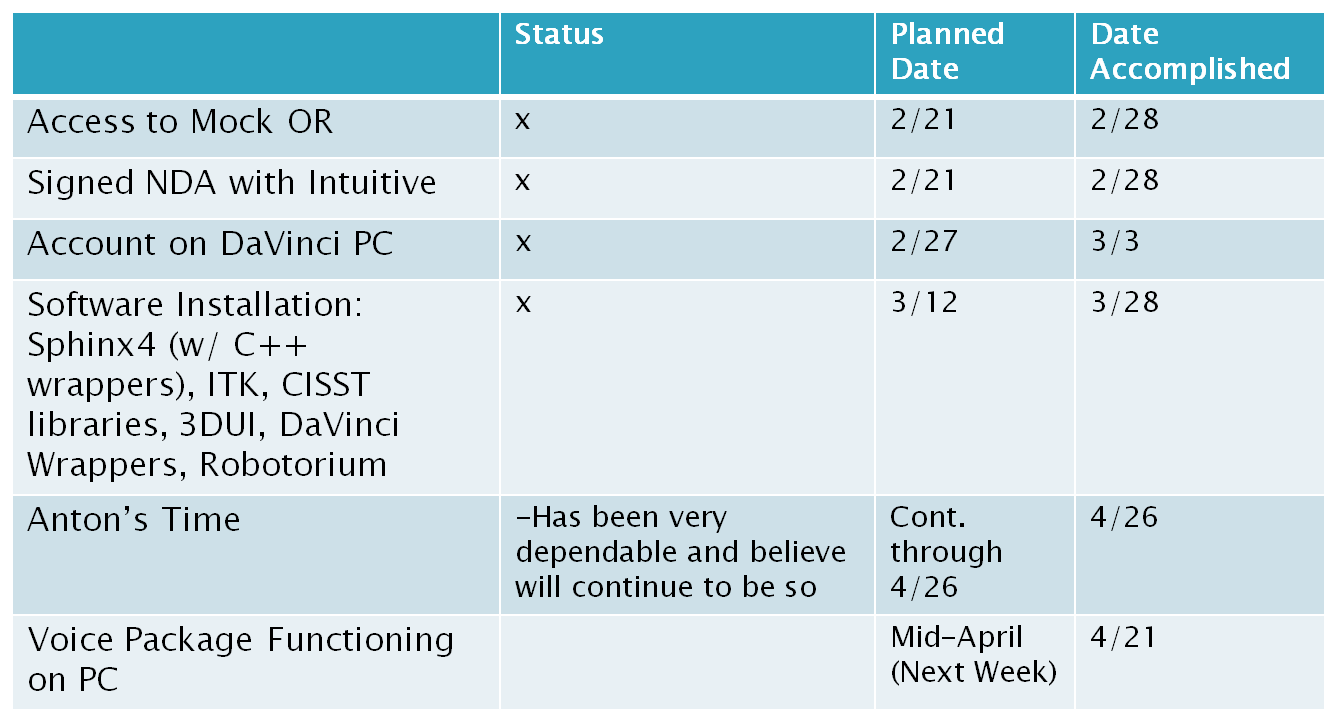

1. Overcome logistical dependencies: NDA, Mock OR access, JHED accounts

- Planned Date: 2/27/11

- Expected Date: xxxxx

- Status: DONE

2. Ready for software architecting: all necessary libraries on the computer and a functional walkthrough of how the current system works. This step depended on Anton more than expected.

- Planned Date: 3/12/11

- Expected Date: 3/17/11

- Status: DONE

3. Approved document of software framework: create an object-oriented class design with all necessary interfaces. Independent work; needs to be approved by Anton.

- Planned Date: 3/12/11

- Expected Date: 3/23/11

- Status: DONE

4. Working demo of voice control on the da Vinci robot (INTUITIVE DEMONSTRATION)

- Planned Date: 4/17/11

- Expected Date: 4/21/11

- Status: DONE

5. Incremental improvement of the first voice demo: meet with the mentor between iterations to discuss strategies for improving the existing demo (a repeating milestone; the goal is to make as much progress here as possible)

- Planned Date: 4/20/11

- Expected Date: 5/12/11

- Status: IN PROGRESS

6. Wrap up code: DOCUMENTATION and CLEANING UP CODE; IMPROVE RUNTIME and BEHAVIOR structure

- Planned Date: 5/15/11

- Expected Date: 5/15/11

- Status: IN PROGRESS

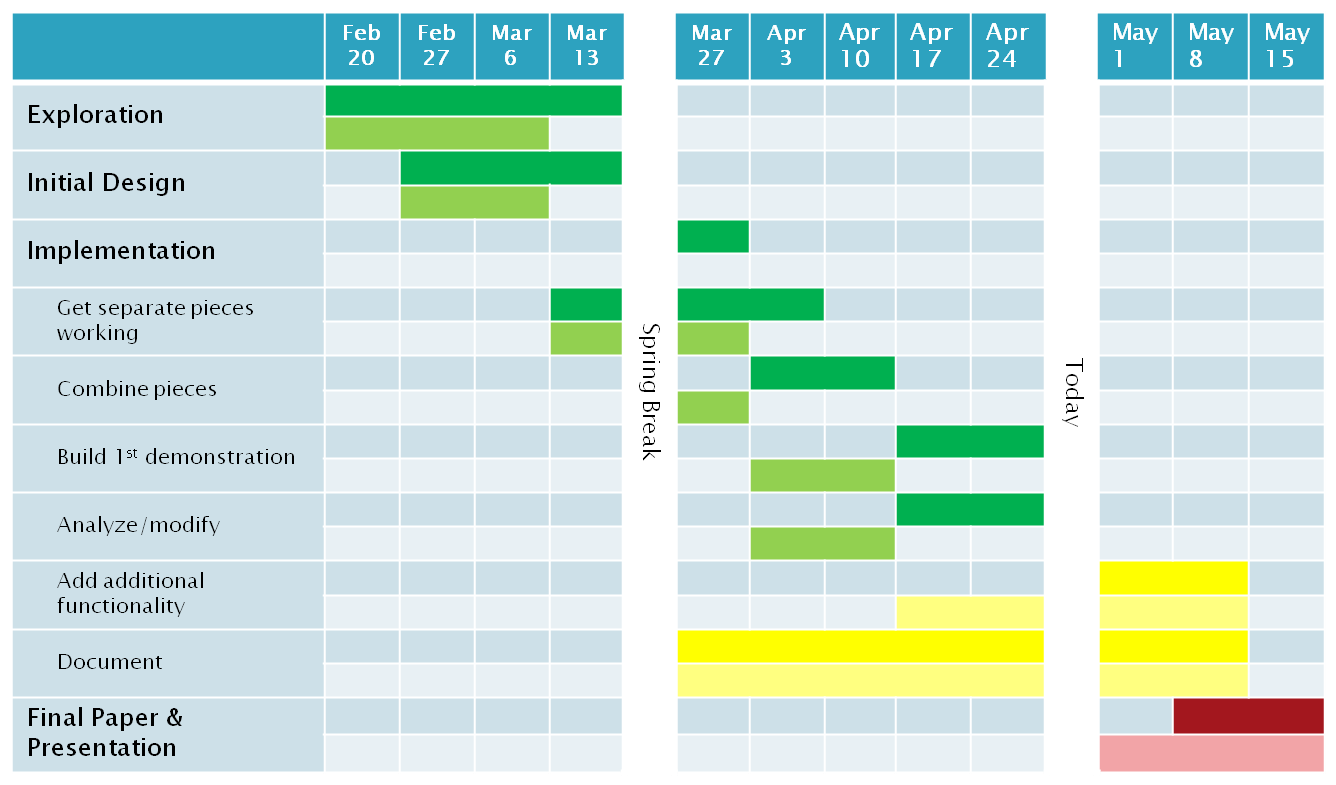

Timeline

Major changes or issues

- NOT APPLICABLE (4/28/11)

Results

- Experimenting with Sphinx-4

- Limitations with over-matching or never matching

- Possible solution:

- create a “honey pot”: a larger vocabulary containing the same keywords lets the program recognize more words, and any recognized word that is not a keyword can simply be ignored (we used a setting in Sphinx that acted in a similar manner)

- create a library of keywords distinct enough to minimize the weaknesses of Sphinx-4; the measurement words work best

- Experimenting with the da Vinci

- 3D-User Interface

- menu options (drop marker, distance, atlas)

- we have delved into the code to find out how to implement a new event that does the same; we have added a clutch after the finger grip to signify the end of a click

- Control of Robotic Arms

- the surgeon's left and right arms can control up to three robotic arms and the camera at once. When controlling three robotic arms, it is necessary to switch between them frequently, which is currently done by clutching in and out; voice could be added here to choose which of the surgeon's arms controls which robotic arm.

- camera control is currently very primitive; we could add a command such as “camera see tools”, with which the camera uses the positions of the tools to readjust so that both are in the field of view.
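The honey-pot idea from the Sphinx-4 experiments can be illustrated with a toy model. We assume a recognizer that always returns the closest word in its vocabulary, and we use spelling edit distance as a crude, hypothetical stand-in for acoustic similarity; Sphinx-4 actually scores audio against acoustic and language models, and the Sphinx setting mentioned above is not reproduced here. The keyword and filler lists are made up for illustration.

```python
from typing import List, Optional

# Hypothetical vocabularies for illustration only.
KEYWORDS = ["start", "reset", "freeze", "quit"]
FILLERS = ["cart", "heart", "breeze", "quiet"]  # the "honey pot"


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance between two strings (dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,               # delete ca
                           cur[j - 1] + 1,            # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute
        prev = cur
    return prev[-1]


def recognize(heard: str, vocabulary: List[str]) -> str:
    """Toy recognizer: always returns the closest word in its vocabulary,
    which is what produces over-matching when the vocabulary is small."""
    return min(vocabulary, key=lambda w: edit_distance(heard, w))


def to_command(heard: str, vocabulary: List[str]) -> Optional[str]:
    """Pass keywords through; any other recognized word does nothing."""
    word = recognize(heard, vocabulary)
    return word if word in KEYWORDS else None


print(to_command("chart", KEYWORDS))            # start (over-match)
print(to_command("chart", KEYWORDS + FILLERS))  # None (caught by the honey pot)
```

With only keywords available, the non-command word “chart” is forced onto “start”; adding filler words gives it a closer match that the command layer then ignores, while genuine keywords still pass through unchanged.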

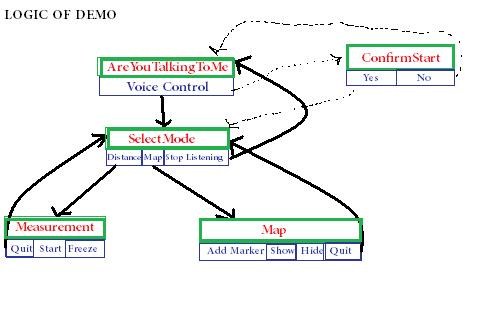

To test the viability of our technical approach, we identified two menu options from the 3DUI to incorporate into a proof-of-concept demonstration: measuring distance and creating a 3D map of markers. We felt these behaviors demonstrated the most practical use of voice integration. During our initial exploration of the da Vinci, we noticed how cumbersome it was to use the master controller as a mouse in order to start an application: the clutch must be held down constantly while the user aims the finger grippers at the icon of their choice, then pinches and releases. Now, however, the user can enter the voice control interface and speak the commands “distance” and “map” to trigger the same events that previously required a great deal of physical effort. The entire logic of our voice control demonstration is as follows:

In the preceding figure, the words on top are the contexts, and the words below them are the commands listened for in the corresponding context. To facilitate development, we included a widget that displays the possible words in each context. We believe that visually cueing the surgeon about what to say is more intuitive and less distracting than having the machine recite the options for each menu.

To enter the voice control interface, the user must first say the command phrase, which we have set to “voice control”; once this phrase has been recognized, the 3DUI is run in the background. After this step there is currently a confirmation context, which is shown connected in red because it will not be needed in the future. We originally added it to decrease the probability of accidentally entering the interface; in practice, however, we found that it did not reduce the number of accidental entries and therefore only decreased efficiency. Once the user is in Select Mode, the command options are “distance”, “map” and “stop listening.” The last of these simply returns speech recognition to its idle state.

“Distance” opens the measurement feature in the 3DUI, which displays a green number indicating the distance travelled. Currently, whenever the measurement state is entered through voice, a non-zero value is displayed, so the user must say “start” to reset the value. To help the user with this glitch until it is solved, we implemented an alternative command, “reset”, which serves the same function as “start”; since the meaning of such words can be interpreted loosely, we wanted to include all the commands a user might want to say. To stop the measurement from changing, the user must say “freeze.” We chose this word because we knew “stop” would too easily be confused with “start”, the first word we implemented. Finally, “quit” simply returns the user to “Select Mode.” We found this vocabulary to be particularly robust, able to handle a wide variety of voices and accents. In “map” mode, the surgeon can add, show, and hide overlay markers on the visual display in 3D space.
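The demo's contexts and commands, as described above, can be written as a single data table that also feeds the on-screen word list. This is a sketch, not the project's code: the action names (e.g. `reset_measurement`) are hypothetical placeholders for the 3DUI events, the confirmation context is omitted (the text notes it will not be needed in the future), and using “quit” to leave map mode is an assumption.

```python
from typing import Dict, List, Optional, Tuple

# context -> {spoken phrase: (hypothetical action name or None, next context)}
CONTEXTS: Dict[str, Dict[str, Tuple[Optional[str], str]]] = {
    "Idle": {
        "voice control": (None, "Select Mode"),  # confirmation context omitted
    },
    "Select Mode": {
        "distance": ("open_measurement", "Distance"),
        "map": ("open_markers", "Map"),
        "stop listening": (None, "Idle"),
    },
    "Distance": {
        "start": ("reset_measurement", "Distance"),
        "reset": ("reset_measurement", "Distance"),  # alias for "start"
        "freeze": ("freeze_measurement", "Distance"),
        "quit": (None, "Select Mode"),
    },
    "Map": {
        "add": ("add_marker", "Map"),
        "show": ("show_markers", "Map"),
        "hide": ("hide_markers", "Map"),
        "quit": (None, "Select Mode"),  # assumption: exit word for map mode
    },
}


def words_for(context: str) -> List[str]:
    """Words a display widget could list while in the given context."""
    return sorted(CONTEXTS[context])


def run(utterances: List[str], context: str = "Idle") -> Tuple[str, List[str]]:
    """Feed recognized phrases through the table; return the final context
    and the actions triggered along the way."""
    actions: List[str] = []
    for phrase in utterances:
        entry = CONTEXTS[context].get(phrase)
        if entry is None:
            continue  # not a command in this context: ignore it
        action, context = entry
        if action is not None:
            actions.append(action)
    return context, actions


# The first "distance" is ignored because the interface is still idle.
final, actions = run(["distance", "voice control", "distance", "reset",
                      "freeze", "quit", "stop listening"])
print(final, actions)
```

Keeping the vocabulary as data rather than code means that adding a context, or an alias such as “reset”, is a table edit, which fits the maximum deliverable of an easily expandable library of states and commands.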

Our demonstration confirms that voice is an appropriate interface for controlling peripheral functionality on surgical robots. However, due to the limitations of speech recognition in terms of accuracy and reliability, the use of voice control for complex physical functions such as the movement of surgical equipment could potentially be dangerous and is therefore inappropriate.

Through our work this semester, we successfully developed a proof of concept for integrating voice control. We met all of our expected goals and were able to recover from unanticipated technical difficulties with compiling the framework and with the always fickle Sphinx-4.

Reports and presentations

- Project Plan

- Project Proposal: project_proposal.pdf

- Proposal Presentation: project_proposal_presentation.pdf

- Paper Presentation (Lindsey Dean): emotion_sensitive_speech_control_and_noise_reduction_in.pdf

- Paper Selections (Lindsey Dean): 08sch14.pdf, 09sch8.pdf

- Paper Review (Lindsey Dean): paper_review.pdf

- Checkpoint Presentation (April 7, 2011): checkpoint_presentation.pdf

- Mini-Checkpoint for Intuitive (April 26, 2011): chkpt_with_updates.pdf

- Paper Presentation (Shawn Xu): paper_presentation_xu.pdf

- Paper Review (Shawn Xu): paper_presentation_paper.pdf

- Poster Teaser: teaser_team6.pptx

- Poster Teaser (updated): posterteaser-voicecontrol_6_.pdf

- Final Report: finalreport-team6.pdf

- Final Code: submit.zip

Project Bibliography

- A. Kapoor, A. Deguet, and P. Kazanzides, “Software components and frameworks for medical robot control,” in Robotics and Automation, 2006. ICRA 2006. Proceedings 2006 IEEE International Conference on, 2006, pp. 3813-3818.

- “Sphinx-4.” CMU Sphinx - Speech Recognition Toolkit. Web. http://cmusphinx.sourceforge.net/sphinx4/javadoc/index.html.

- Liu, Peter X., A. D. C. Chan, and R. Chen. “Voice Based Robot Control.” International Conference on Information Acquisition (ICIA) (2005): 543. Web. http://ieeexplore.ieee.org.proxy3.library.jhu.edu/stamp/stamp.jsp?tp=&arnumber=1635148.

- Patel, Siddtharth. “A Cognitive Architecture Approach to Robot Voice Control and Response.” Web. <http://support.csis.pace.edu/CSISWeb/docs/MSThesis/PatelSiddtharth.pdf>. (2008)

- Schuller, Bjorn, Gerhard Rigoll, Salman Can, and Hubertus Feussner. “Emotion Sensitive Speech Control for Human-Robot Interaction in Minimal Invasive Surgery.” Proceedings of the 17th IEEE International Symposium on Robot and Human Interactive Communication (2008): 453-58. Print.

- Schuller, Bjorn, Salman Can, Hubertus Feussner, Martin Wollmer, Dejan Arisc, and Benedikt Hornler. “SPEECH CONTROL IN SURGERY: A FIELD ANALYSIS AND STRATEGIES.” Multimedia and Expo, 2009. ICME 2009. IEEE International Conference on (2009): 1214-217. Print.

- Sevinc, Gorkem. INTEGRATION AND EVALUATION OF INTERACTIVE SPEECH CONTROL IN ROBOTIC SURGERY. Thesis. Johns Hopkins University, 2010. Print.