Contact Us

CiiS Lab

Johns Hopkins University

112 Hackerman Hall

3400 N. Charles Street

Baltimore, MD 21218

Directions

Lab Director

Russell Taylor

127 Hackerman Hall

rht@jhu.edu

Last updated: May. 8, 2014, 5:32 pm

Contact Information

Chris Paxton: cpaxton3 (at) jhu.edu

My goal is to develop tools to automate parts of a surgical procedure, so that the machine can make procedures faster and more efficient. Surgeries can be hours long, and contain many repetitive motions; automating parts of a task makes a surgeon's job easier and lets them focus on the patient. The Da Vinci robot has a third arm which is often used for supplementary tasks like cutting threads. A surgeon cannot control all three arms at once, so they need to clutch to switch which arm is being controlled. Automating small parts of the procedure that need the third arm would help reduce task complexity and decrease load on the user.

I want to focus on applying this to a simple collaborative peg-passing procedure first, then to a suturing example based on surgical training procedures, leveraging the large amount of information collected by the Language of Surgery project.

Human-robot collaboration is increasingly important as robots become more capable of contributing to skilled tasks in the workplace. Robotic Minimally Invasive Surgery (RMIS) is a part of this trend. Partial automation would decrease the load on surgeons during long procedures by automating repetitive sub-tasks, and it would improve surgeon performance if procedures are being performed over long distances in conditions of high latency.

This project uniquely fits our position at JHU: the Language of Surgery project here has collected a large amount of surgical data used for skill classification and for providing feedback to surgeons in training. Recent work has also looked into automatic segmentation of video and kinematic data from these surgical procedures. I worked with Amir Masoud on methods for learning from demonstration that can incorporate information about the environment into following a preset trajectory during a manipulation task; the models used for this work are pictured above.

Stereo Registration and Reconstruction: Using the available video data for stereo reconstruction is difficult because the camera intrinsic parameters change from trial to trial: the focal distance of each robot's camera can be adjusted between recordings. In addition, trials are recorded on different robots.
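To see why a changing focal length matters, it helps to recall the standard depth relation for a rectified stereo pair. This is a textbook identity rather than anything specific to the surgical data; the symbols Z (depth), f (focal length in pixels), B (stereo baseline), and d (disparity) are introduced here only for illustration.

```latex
Z = \frac{f\,B}{d}
\qquad\Longrightarrow\qquad
\frac{\partial Z}{\partial f} = \frac{B}{d} = \frac{Z}{f}
```

Under this model, a relative error in the assumed focal length produces the same relative error in every reconstructed depth, so intrinsics taken from one trial cannot safely be reused on another.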

Recent work has been able to identify the Da Vinci tooltips in video. I want to use this to find locations of the tooltips in all collected video data, then use this together with the available camera position and tooltip.

Motion Model: Prior work from Prof. Hager's group used Gaussian Mixture Models to determine when rotations needed to occur. I am interested in using a different and hopefully more robust approach to model how interactions should occur. Some recent work in Inverse Optimal Control (IOC) has dealt with learning in continuous environments from locally optimal examples, and recent work submitted to IROS by Amir Masoud and myself under Prof. Hager has looked at learning how to incorporate new environmental information into demonstrated trajectories.

The approach I plan on using for modeling agents' motion is based on maximum-entropy IOC. In this case, we maximize the probability of each action a taken from each state s along the observed expert trajectories.
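One common way to write this objective is sketched below. It is an assumption about the intended formulation, not a formula taken from the project: the parameter vector \theta, the demonstration set \mathcal{D}, and the soft action value Q_\theta are notation introduced here, and the sum over actions would become an integral for continuous controls.

```latex
\theta^{*} = \arg\max_{\theta} \sum_{\tau \in \mathcal{D}} \sum_{t} \log P_{\theta}(a_t \mid s_t),
\qquad
P_{\theta}(a \mid s) = \frac{\exp Q_{\theta}(s, a)}{\sum_{a'} \exp Q_{\theta}(s, a')}
```

Here each \tau is one expert trajectory of state-action pairs (s_t, a_t), and Q_\theta(s, a) is the value of taking action a in state s under the reward being learned.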

In this case, however, we also need to take into account noisy environmental features. Motions need to be in relation to observed features of interest (needle, suture points, peg being passed) and the tissue. Previous work has addressed this problem through the use of hidden variable Markov Decision Processes for activity forecasting. This has also been used to predict the intention of an actor, which is useful for predicting when intervention should take place.

There are two possible approaches for this, based on work by two different groups. In the paper "Continuous Inverse Optimal Control with Locally Optimal Examples" by Levine et al., the authors look at methods for learning inverse optimal control solutions based on only locally optimal solutions. This is ideal for our application because we do not want to assume that the human demonstrations are globally optimal. The human can only control the end point of the arm, for example, and not the entire arm. Work by Pieter Abbeel's group in IOC has also recently looked into real world examples. They map a demonstration scene onto a test scene, and then solve a trajectory optimization problem.

The Peg Transfer task requires two components: grabbing a ring from one hand and putting it on a peg. To solve this IOC problem, I need a concrete list of features. For placing a ring on a peg, these would be:

When grabbing a ring from another gripper, necessary features are:

Task Model: While the IOC component is capable of modeling individual segments of a complex task, we also need some idea of how different task components fit together. Luckily, our surgical data has already been manually labeled and segmented with a set of rigorously tested and well-defined definitions available on the Language of Surgery wiki. We also know that, when performing third-arm tasks, the user will clutch to switch arm control, providing an easy segmentation of which parts of the task the software will be responsible for handling. Previous work in temporal planning and hierarchical control has elaborated on how to combine multiple sub-tasks.

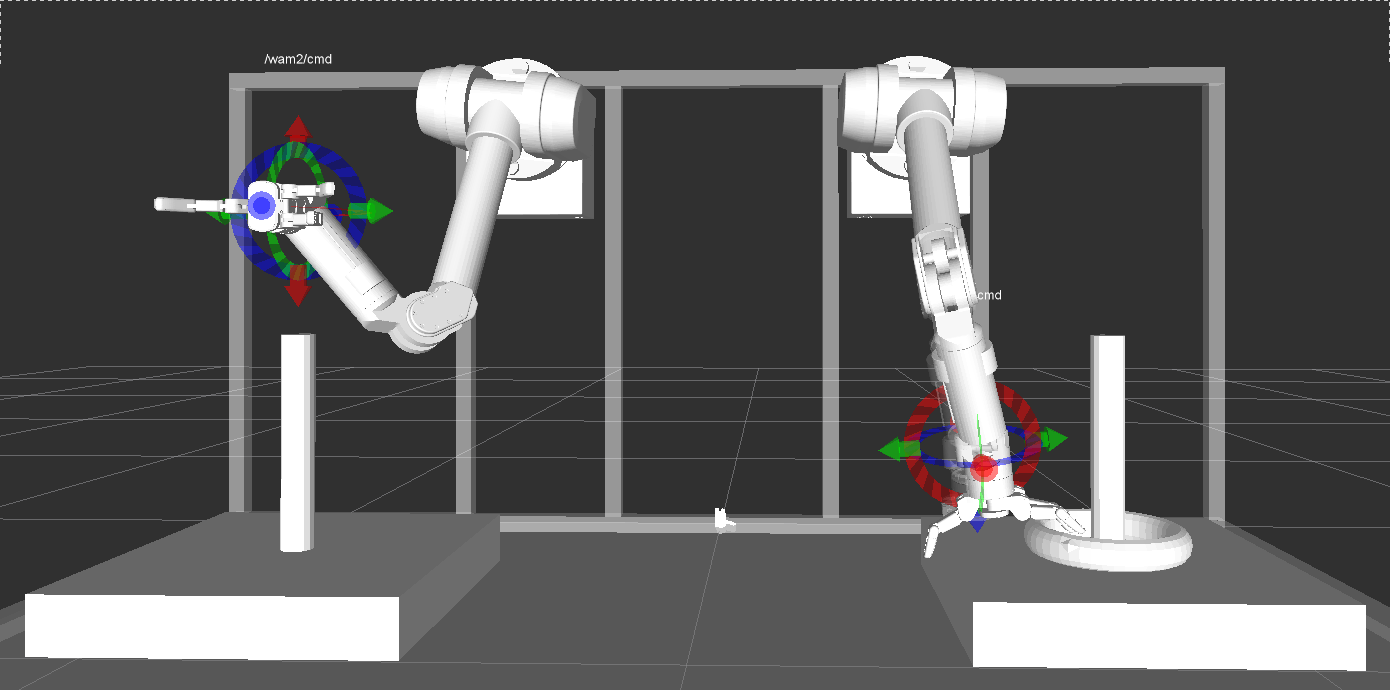

Simulation: I am using the ROS/OROCOS toolkit created by Jon Bohren, together with models and controllers for the Barrett WAM arm. This will allow me to develop methods that can be tested easily and quickly in simulation and on the physical WAM arm. I can pass simple trajectories and Cartesian coordinates to the controller, which greatly simplifies programming and testing IOC algorithms.

I also upgraded my workstation with a new graphics card (NVIDIA GTX 760) and 8 GB of additional RAM to improve its simulation capabilities. These additional dependencies are an integral part of the project; unfortunately, it was previously unclear what the best way forward would be.

I can issue commands to the robot by publishing on a ROS topic:

rostopic pub -r 1 /gazebo/rml_traj/joint_traj_point_cmd trajectory_msgs/JointTrajectoryPoint "{ positions: [0.0,-1.57,0,3.0,0,-0.8,0.0] }"
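The same command can be assembled from a script when the joint targets are computed rather than typed by hand. The sketch below only builds the argument list for the `rostopic pub` call shown above; the helper name `build_rostopic_pub` is hypothetical, and the check for seven joint values is an assumption based on the seven positions in that command.

```python
import shlex

# Topic and message type copied from the command above.
TOPIC = "/gazebo/rml_traj/joint_traj_point_cmd"
MSG_TYPE = "trajectory_msgs/JointTrajectoryPoint"


def build_rostopic_pub(positions, rate_hz=1):
    """Return the argv list for a `rostopic pub` call sending one joint target.

    Hypothetical helper: it only formats the command, it does not talk to ROS.
    """
    if len(positions) != 7:
        # Assumption: the command above lists seven joint positions.
        raise ValueError("expected 7 joint positions")
    joints = ", ".join(str(float(p)) for p in positions)
    payload = "{ positions: [" + joints + "] }"
    return ["rostopic", "pub", "-r", str(rate_hz), TOPIC, MSG_TYPE, payload]


if __name__ == "__main__":
    args = build_rostopic_pub([0.0, -1.57, 0, 3.0, 0, -0.8, 0.0])
    # Print a shell-safe version of the command for inspection.
    print(" ".join(shlex.quote(a) for a in args))
```

On a machine with ROS installed, the returned list could be handed to `subprocess.run` to actually publish the command.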

Another way to control the robot is by providing position commands to an inverse kinematics solver, such as MoveIt (developed by Willow Garage) or the IK controller for the ROS/Orocos integrated code described above. The ROS/Orocos IK controller follows a destination TF frame with the tip of the robot end effector. I can control the position of the destination frame with a Phantom Omni (haptic feedback controller) or with a SpaceNav 3D mouse.

As of 3/28, the code to control the arm with a 3DConnexion SpaceNavigator mouse works reliably. There are a few features to add, however, like controlling the Barrett hand and switching between arms.

Controlling multiple arms has proven to be a bigger problem than anticipated. I mostly have the two arms working together now, with help from my mentor, Jon Bohren.

Supporting multiple arms required a change in the way the current Orocos/ROS integration setup handled components: there were a number of issues simply because it was not designed to launch multiple robots in the same context. Since ROS and Gazebo are multithreaded, there were a few race conditions where sometimes both arms would launch, sometimes only one arm would launch, and sometimes neither would launch and the whole system would crash. This problem was traced to mutex handling in the Orocos Gazebo plugin: threads could access Gazebo before everything was initialized, or attempt to access the world state at the same time.
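The two failure modes described here (touching the simulator before it is initialized, and touching shared world state concurrently) can be illustrated with a small Python sketch. This is not the Orocos Gazebo plugin code; `SimWorld`, `finish_init`, `add_model`, and `launch_arms` are hypothetical stand-ins that show one conventional pattern: an event that gates access until initialization finishes, and a lock around the shared state.

```python
import threading


class SimWorld:
    """Stand-in for shared simulator state (not the real Gazebo or Orocos API)."""

    def __init__(self):
        self._lock = threading.Lock()      # serializes access to world state
        self._ready = threading.Event()    # set once initialization is done
        self.models = []

    def finish_init(self):
        """Signal that the world is fully initialized."""
        self._ready.set()

    def add_model(self, name, timeout=5.0):
        """Block until the world is ready, then register a model under the lock."""
        if not self._ready.wait(timeout):
            raise RuntimeError("world was not initialized in time")
        with self._lock:
            self.models.append(name)


def launch_arms(names):
    """Start one thread per arm before init completes; all must still register."""
    world = SimWorld()
    threads = [threading.Thread(target=world.add_model, args=(n,)) for n in names]
    for t in threads:
        t.start()
    world.finish_init()  # arms started early simply wait for this signal
    for t in threads:
        t.join()
    return sorted(world.models)


if __name__ == "__main__":
    print(launch_arms(["wam_left", "wam_right"]))
```

With this structure the launch order of the arm threads no longer matters, which is the property the original setup lacked.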

As of April 4, both arms can be controlled by the 3DConnexion Space Navigator mouse in a simulated version of the stage set up in the LCSR Robotorium. While it would be possible to use either a Phantom Omni or the Da Vinci console, I am working with the Space Navigator mouse for the time being because it is simple to use, works cross-platform, and lets me toggle between the two arms with a simple interface.

I can then use this setup to pick up and manipulate objects, like the cordless drill pictured here. The arm was struggling to pick up objects like this, so I tweaked the integral gains and integral bounds in the PID controller (the component responsible for compensating for gravity).
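A minimal sketch of what the integral gain and integral bound do in a PID loop is given below. It is a generic textbook controller written for illustration, not the controller used in the simulation; the class name `PID` and the parameter `i_bound` are assumptions, and this version clamps the accumulated error itself, which may differ from how a particular library applies its bounds.

```python
class PID:
    """Generic PID controller with a clamped integral term (illustrative only)."""

    def __init__(self, kp, ki, kd, i_bound):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.i_bound = i_bound      # limit on the accumulated error
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        """Return the control effort for the current error and time step."""
        # Accumulate error, then clamp so a sustained load cannot wind up forever.
        self.integral += error * dt
        self.integral = max(-self.i_bound, min(self.i_bound, self.integral))
        # No derivative on the first call, since there is no previous error yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


if __name__ == "__main__":
    pid = PID(kp=2.0, ki=4.0, kd=0.0, i_bound=0.5)
    effort = 0.0
    for _ in range(100):
        effort = pid.update(error=1.0, dt=0.1)  # constant error, e.g. a held load
    print(effort)
```

Raising the integral gain or widening the bound lets the integral term supply more steady effort against a constant disturbance such as the weight of a grasped object, at the cost of more overshoot if the bound is too loose.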

The Space Navigator mouse lets me move the end point of the WAM arm, and the buttons let me toggle which arm is being controlled and close or open the gripper. I can now use this to walk through the simulated peg transfer task, where both arms need to work together to move a torus modelled in Blender from one peg to another. I created the peg URDF models specifically for this task.

Here, you can see the handoff in action.

I made some changes to the UI. I wrote a plugin for Gazebo that publishes information to TF; the goal is to expose more of the necessary information about the world to my code. Right now, positions and orientations are published via TF as a tree of coordinate transforms. I can also record joint space positions and other information.

I can now control the arms through interactive TF frames, not just through the Space Navigator mouse. This gives me a slightly more precise way of controlling the arms.

Update 5/1/2014: The simulation works well, and I can use it to reliably manipulate objects. I can also use rosbag to record and replay trajectories. My next steps are to use the rosbag API to load and modify these trajectories, so that I can replay them given that an object is at a different location. To do this, I might want to have something publish a set of features I can record as well, and then modify the planned trajectory in response to the difference in feature counts.
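As a very rough illustration of replaying a trajectory when the object has moved, the sketch below shifts recorded Cartesian waypoints by the object's displacement. This is a deliberately simple stand-in (pure translation, no orientation, no rosbag I/O) and not the feature-based method planned above; `retarget_trajectory` and its arguments are hypothetical names.

```python
def retarget_trajectory(waypoints, recorded_obj, new_obj):
    """Translate (x, y, z) waypoints by the object's change in position.

    waypoints    -- list of (x, y, z) tuples from a recorded demonstration
    recorded_obj -- object position (x, y, z) during the recording
    new_obj      -- object position (x, y, z) at replay time
    """
    # Displacement of the object between recording and replay.
    offset = tuple(n - r for n, r in zip(new_obj, recorded_obj))
    # Apply the same displacement to every waypoint.
    return [tuple(w + o for w, o in zip(point, offset)) for point in waypoints]


if __name__ == "__main__":
    recorded = [(0.0, 0.0, 0.5), (0.5, 0.0, 0.25)]
    print(retarget_trajectory(recorded, (0.5, 0.0, 0.0), (0.75, 0.25, 0.0)))
```

A feature-based version would replace the single offset with a correction computed from the difference between recorded and observed features along the trajectory.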

To develop intelligent assistance for surgical procedures, I need access to a robot, task models the robot can perform, and a set of training data. As of Feb. 2014, I already have access to the BB API necessary for read/write instructions to the Da Vinci robot, and I can use the robot in Hackerman for research and development. I also have access to collected surgical data already, and can collect more using the Da Vinci for specific tasks.

I will also be using the open-source CISST and OpenCV libraries. CISST has a number of useful tools for robotics, and it also has a video codec necessary for recent data collected at MISTIC. OpenCV has tools to perform stereo calibration and 3D reconstruction. Both of these systems are already set up on my laptop and workstation. I will use NLopt, an efficient cross-platform nonlinear optimization library with a C++ interface, to solve necessary parts of the IOC problem for modeling motions.

It may be possible to speed up development with the use of a simulator instead of performing all experiments on the actual Da Vinci; however, at present it is unclear when or if this will happen. The Mimic simulator in question (at Johns Hopkins Bayview) is capable of simulating deformable materials and threads, but we need to wait on the company itself to see whether we can access the position of the needle and thread during the task and to be able to read out the kinematics. Current plans assume I will not be able to use the simulator this semester.

Another option for a simulator would be to use the Gazebo simulator integrated with ROS. This would speed up development for the peg-passing task, but Gazebo cannot simulate deformable materials, so it would not be useful for the suturing task I described.

Update 03/12/2014: I am planning on using ROS/OROCOS and Gazebo to simulate the rigid manipulation task described above.

Training and Certification

I have completed the necessary training for laboratory safety and human subjects research:

rosbag and its C++/Python API to modify these trajectories and read them in software.

References and Background Reading:

Project Code: