Contact Us

CiiS Lab

Johns Hopkins University

112 Hackerman Hall

3400 N. Charles Street

Baltimore, MD 21218

Lab Director

Russell Taylor

127 Hackerman Hall

rht@jhu.edu

Last updated: 5/14/2013 8:00pm

Computer- and robot-assisted eye surgery has great potential to address common problems in many eye surgery tasks, including poor visualization, lack of force sensing, hand tremor, and limited accessibility. Positional feedback of the instruments relative to the eye can be used to prevent undesirable collisions, improve remote center of motion interaction, and possibly automate tasks such as inserting instruments into trocars. The goal of this project is to create a prototype device that provides real-time 3D tracking of the eye and instruments relative to each other during surgical procedures.

Background

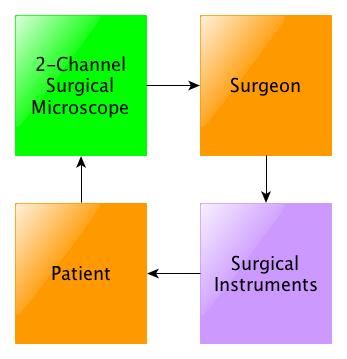

The current state-of-the-art technique for ophthalmic surgery has a surgeon look through a 2-channel surgical microscope while manipulating surgical instruments (see Figure 1). However, this method has clear disadvantages. The surgery is limited in precision, accuracy, and stability. The surgeon’s natural hand tremor can damage eye tissue and cause cataracts [2]. Because the microscope magnifies the eye, it also narrows the surgeon’s field of view, which can lead to poor decision-making and poor interpretation of the qualitative data [2]. Finally, little information is available to the operating room staff who support the surgeon. According to a 2009 study, ophthalmic surgery has the highest rate of incorrect procedures within the operating room [9].

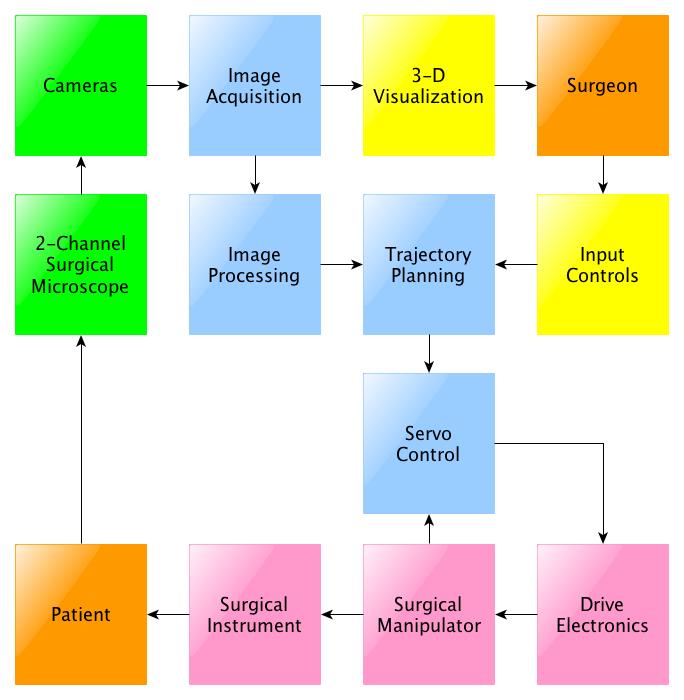

Researchers have turned their attention to integrating robotics into surgical methods, which introduces a more complicated workflow (see Figure 2). Robot-assisted surgery offers increased precision, accuracy, and stability, and can reduce the effects of hand tremor. Furthermore, certain processes can be automated, and with teleoperation the surgeon does not have to be in the same room as the patient. However, several limitations prevent its use in clinical practice. Most notably, robot-assisted surgery has not resolved the problem of surgeons making poor decisions and interpreting the qualitative data poorly. Robots are also expensive to buy and maintain, few are available, they can malfunction, and gaining patient trust can be difficult.

An optical tracker that provides positional feedback could improve the surgeon’s decision-making and interpretation of data. One proposed optical tracking system attaches a camera to the surgeon’s microscope. This approach cannot account for occlusion by equipment and the surgeons’ hands, can interfere with the function of the microscope, and provides suboptimal resolution because of its distance from the surgical site and its approach angle. Other solutions rely on electromagnetic tracking, which can be distorted by metal in the surgical field and has suboptimal accuracy for microsurgical applications.

Specific Aims

Instead, we propose an alternative optical tracking system that can be placed around the patient's eye without interfering with the necessary intraocular surgical procedures. The system provides surgeons with positional feedback of instruments relative to the eye. Specifically, tool position is desirable to ensure iso-centric rotation of instruments about the trocars, automate insertion of instruments into the trocars, prevent unintentional collisions with other instruments and anatomy, and prevent excessive stress on the sclera. Our aims are to provide the following:

Significance

Ultimately, this system will help monitor surgical protocols, assess surgical skill, and improve the safety of ophthalmic procedures. For example, lubricant must be added to the eye during surgery to keep it moist; using the optical tracker, the system could check that the gel is added every four minutes. The tracking system could also be modified to identify incompatible tools and warn the surgeon. Furthermore, tracking data could be used to identify the best movements for eye surgery. By helping assess and improve surgical skill, this optical tracker has the potential to increase surgeons' efficiency. Finally, collision warnings could be implemented to prevent surgeons from damaging eye tissue or hitting the lens, which can cause cataracts. This goggle device could also be adapted for other microsurgeries, such as cochlear implantation.

Below is a chart of the final deliverables reached at the end of the semester.

Phase One: Research

First, an evaluation of the clinical eye surgery environment will be conducted through hospital visits, videos of ophthalmic surgeries, and a survey of literature in order to determine device constraints on orientation and position of the equipment. Once the constraints for our prototype are approved, we will evaluate current off-the-shelf RGB and infrared cameras to optimize focal length, field of view, camera synchronization, shutter speed, resolution, and cost.

Separately, we will research standard multi-camera calibration methods, segmentation methods, tracking algorithms and necessary equipment for different camera systems. This information will be used to design the tracking system pipeline and three tests to confirm successful implementation. In parallel, we will determine the number and type of markers to be placed on tools for easy tracking. At the end of this phase, we will have a layout of the device and the tracking system design.

Phase Two: Mechanical Device Design

Below on the left is the initial design of the camera placement. Based on the capabilities of the cameras, we designed a scaled model of the camera placement, with each camera's field of view drawn into the CAD model shown below. This model was used to build a simple model of the eye with rigidly attached cameras, our initial prototype for testing calibration.
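
How much of the surgical field each camera sees follows from its focal length, sensor width, and distance to the eye. Under an ideal pinhole model, the horizontal field of view is 2·atan(w / 2f), and the visible width at working distance d is d·w / f (similar triangles). The sketch below computes both. The sensor width, focal length, and 50 mm working distance are hypothetical placeholders, not the cameras or geometry used in this project.

```python
import math


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def coverage_width_mm(sensor_width_mm: float, focal_length_mm: float, distance_mm: float) -> float:
    """Width of the scene visible at a given working distance (similar triangles)."""
    return distance_mm * sensor_width_mm / focal_length_mm


# Hypothetical values: a sensor about 4.8 mm wide, a 6 mm lens,
# and a camera mounted 50 mm from the eye.
fov = horizontal_fov_deg(4.8, 6.0)
width = coverage_width_mm(4.8, 6.0, 50.0)
print(f"FOV = {fov:.1f} deg, visible width at 50 mm = {width:.1f} mm")
# prints "FOV = 43.6 deg, visible width at 50 mm = 40.0 mm"
```

Running the numbers this way before building a mount shows whether the marked tool tips stay inside every camera's view at the planned distance.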

Next, a device to hold the cameras was designed. Instead of designing it in CAD and then building it, as initially planned, we first built a mock-up of the device.

The device was then designed in CAD, as shown above on the right. It consists of four rigid stands that hold the cameras at an angle and three laser-cut pieces bolted together. Below is the final device.

Phase Two: Software Design

To the left is the block flow design of the tracking algorithm. The major steps are offline multi-camera calibration, tool and trocar detection, and 3D point reconstruction. Below are more detailed block diagrams of each of these three major steps.

Since single-camera calibration is more accurate than pair-wise camera calibration, the results from single-camera calibration are used to determine the intrinsic parameters. For each camera, a set of 20 images is run through a pipeline that extracts the grid corners, computes an initial calibration, accounts for lens distortion, and refines the calibration by optimization to determine the intrinsic parameters.
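
The intrinsic parameters describe a projection model. The sketch below assumes the radial/tangential distortion model used by OpenCV, with coefficients k1, k2, p1, and p2. The page does not say which model or how many coefficients were fitted. `project` maps a 3D point in the camera frame to pixels, and `undistort_pixel` inverts that mapping. Because the distortion has no closed-form inverse, this sketch uses fixed-point iteration. That is a swapped-in alternative to the mesh-grid interpolation used in the 3D reconstruction step. The `Intrinsics` class and all parameter values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Hypothetical container for pinhole intrinsics and distortion coefficients."""
    fx: float
    fy: float
    cx: float
    cy: float
    k1: float = 0.0  # radial
    k2: float = 0.0  # radial
    p1: float = 0.0  # tangential
    p2: float = 0.0  # tangential


def distort(x, y, c):
    """Apply radial and tangential distortion to normalized image coordinates."""
    r2 = x * x + y * y
    radial = 1.0 + c.k1 * r2 + c.k2 * r2 * r2
    xd = x * radial + 2.0 * c.p1 * x * y + c.p2 * (r2 + 2.0 * x * x)
    yd = y * radial + c.p1 * (r2 + 2.0 * y * y) + 2.0 * c.p2 * x * y
    return xd, yd


def project(point, c):
    """Map a 3D point in the camera frame to pixel coordinates (u, v)."""
    X, Y, Z = point
    xd, yd = distort(X / Z, Y / Z, c)
    return c.fx * xd + c.cx, c.fy * yd + c.cy


def undistort_pixel(u, v, c, iterations=20):
    """Recover normalized (x, y) from a pixel by fixed-point iteration on the distortion model."""
    xd, yd = (u - c.cx) / c.fx, (v - c.cy) / c.fy  # apply the inverse camera matrix
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + c.k1 * r2 + c.k2 * r2 * r2
        dx = 2.0 * c.p1 * x * y + c.p2 * (r2 + 2.0 * x * x)
        dy = c.p1 * (r2 + 2.0 * y * y) + 2.0 * c.p2 * x * y
        x, y = (xd - dx) / radial, (yd - dy) / radial
    return x, y


cam = Intrinsics(fx=800, fy=800, cx=320, cy=240, k1=-0.2, k2=0.05, p1=0.001, p2=-0.0005)
u, v = project((0.01, -0.005, 0.05), cam)
print(undistort_pixel(u, v, cam))  # close to (0.2, -0.1)
```

The returned (x, y) are normalized coordinates, so (x, y, 1) is the direction of the ray from the camera center through the detected point.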

For the extrinsic parameters, pair-wise camera calibration is necessary. Here, accuracy is limited by the shared field of view in which the planar checkerboard can be placed. As in single-camera calibration, each set of 20 images is run through a pipeline to extract grid corners, calibrate, account for distortion, and optimize the calibration. These results are then used for stereo camera calibration, whose optimized result determines both intrinsic and extrinsic parameters. Only the extrinsic parameters are used in the tracking algorithm.
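
The extrinsic relationship between two cameras is a rigid transform (R, t). The sketch below assumes the convention X_cam = R·X_board + t, which is OpenCV's convention. Under that convention, `stereoCalibrate`'s (R, T) maps camera-1 coordinates into camera-2 coordinates. Composing two cameras' poses of one checkerboard placement gives an initial relative pose; the stereo optimization refines it over all image pairs. The same transform then carries a camera-2 ray into Camera 1's frame, which the reconstruction step needs. Helper names and pose values are hypothetical.

```python
import math


def matmul(A, B):
    """Product of two 3x3 matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(A):
    return [list(row) for row in zip(*A)]


def matvec(A, x):
    return [sum(A[i][k] * x[k] for k in range(3)) for i in range(3)]


def relative_pose(R1, t1, R2, t2):
    """Relative pose from two cameras' poses of the same board placement.

    Assumed convention: X_cam = R @ X_board + t. Returns (R21, t21) such that
    X_cam2 = R21 @ X_cam1 + t21.
    """
    R21 = matmul(R2, transpose(R1))
    R21_t1 = matvec(R21, t1)
    t21 = [t2[i] - R21_t1[i] for i in range(3)]
    return R21, t21


def ray_to_camera1(direction2, R21, t21):
    """Express a camera-2 ray (origin at camera 2's center) in Camera 1's frame."""
    R12 = transpose(R21)
    origin1 = [-v for v in matvec(R12, t21)]  # camera 2's center seen from camera 1
    return origin1, matvec(R12, direction2)


def rot_z(deg):
    a = math.radians(deg)
    return [[math.cos(a), -math.sin(a), 0.0], [math.sin(a), math.cos(a), 0.0], [0.0, 0.0, 1.0]]


def rot_y(deg):
    a = math.radians(deg)
    return [[math.cos(a), 0.0, math.sin(a)], [0.0, 1.0, 0.0], [-math.sin(a), 0.0, math.cos(a)]]


# Hypothetical board poses (mm) as seen by each camera.
R1, t1 = rot_z(30), [0.0, 0.0, 100.0]
R2, t2 = rot_y(-20), [10.0, 0.0, 120.0]
R21, t21 = relative_pose(R1, t1, R2, t2)

board_pt = [5.0, 7.0, 0.0]
x1 = [a + b for a, b in zip(matvec(R1, board_pt), t1)]
x2 = [a + b for a, b in zip(matvec(R2, board_pt), t2)]
x2_pred = [a + b for a, b in zip(matvec(R21, x1), t21)]  # agrees with x2

origin1, dir1 = ray_to_camera1(x2, R21, t21)  # camera-2 ray through the board point
```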

As described above, the next step in tracking is detecting the four color markers. The figure below describes the general process. First, each frame from a camera is converted from the RGB color space to the YUV color space. YUV separates brightness (Y) from color (U and V), which makes it more robust to changes in illumination. Next, the image is thresholded for each color of interest. The range for each color was determined by trial and error over multiple images with varying illumination and camera views. The resulting noisy binary image is cleaned up with a series of morphological operations such as dilation, erosion, opening, and closing. Then, using OpenCV, the connected components of the binary image are found. The largest component is assumed to be the marker, and its center is calculated using image moments. The final output is the pixel location of the center of each marker.
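
These detection steps map onto standard OpenCV calls: `cv2.cvtColor` with `cv2.COLOR_BGR2YUV`, `cv2.inRange`, `cv2.morphologyEx`, `cv2.connectedComponentsWithStats`, and `cv2.moments`. To keep the sketch self-contained, the same pipeline is written below in plain Python on an image stored as rows of (R, G, B) tuples. It makes several assumptions:

- BT.601 luma weights with zero-centered U and V.
- Thresholds only on the chroma channels.
- A single 3×3 opening in place of the full sequence of operations.
- Made-up color ranges.

None of these values come from the project.

```python
from collections import deque


def rgb_to_yuv(r, g, b):
    """BT.601 luma with zero-centered chroma (assumed convention)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, 0.492 * (b - y), 0.877 * (r - y)


def threshold(image, u_range, v_range):
    """Binary mask of pixels whose chroma (U, V) falls inside the given ranges."""
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            _, u, v = rgb_to_yuv(r, g, b)
            mask_row.append(u_range[0] <= u <= u_range[1] and v_range[0] <= v <= v_range[1])
        mask.append(mask_row)
    return mask


def _morph(mask, erode):
    """3x3 erosion (erode=True) or dilation (erode=False); out-of-bounds counts as off."""
    h, w = len(mask), len(mask[0])
    out = [[False] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            neigh = [mask[i + di][j + dj] if 0 <= i + di < h and 0 <= j + dj < w else False
                     for di in (-1, 0, 1) for dj in (-1, 0, 1)]
            out[i][j] = all(neigh) if erode else any(neigh)
    return out


def open_mask(mask):
    """Morphological opening (erosion, then dilation) removes isolated specks."""
    return _morph(_morph(mask, True), False)


def largest_component_centroid(mask):
    """Centroid (x, y) of the largest 8-connected blob, via moments m10/m00 and m01/m00."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for i in range(h):
        for j in range(w):
            if not mask[i][j] or seen[i][j]:
                continue
            blob, queue = [], deque([(i, j)])
            seen[i][j] = True
            while queue:  # breadth-first flood fill of one component
                ci, cj = queue.popleft()
                blob.append((ci, cj))
                for di in (-1, 0, 1):
                    for dj in (-1, 0, 1):
                        ni, nj = ci + di, cj + dj
                        if 0 <= ni < h and 0 <= nj < w and mask[ni][nj] and not seen[ni][nj]:
                            seen[ni][nj] = True
                            queue.append((ni, nj))
            if len(blob) > len(best):
                best = blob
    if not best:
        return None
    m00 = len(best)
    m10 = sum(j for _, j in best)  # sum of x (column) coordinates
    m01 = sum(i for i, _ in best)  # sum of y (row) coordinates
    return m10 / m00, m01 / m00


# Synthetic 10x10 frame: a 3x3 red marker plus one isolated red noise pixel.
img = [[(0, 0, 0)] * 10 for _ in range(10)]
for i in range(2, 5):
    for j in range(5, 8):
        img[i][j] = (255, 0, 0)
img[8][0] = (255, 0, 0)
mask = open_mask(threshold(img, u_range=(-80, -10), v_range=(100, 200)))
print(largest_component_centroid(mask))  # (6.0, 3.0)
```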

The detection algorithm was tested by overlaying original RGB images with the color thresholds. Specifically, detection was tested for varying camera views and illumination settings.

The final step in the tracking algorithm is 3D point reconstruction for each marker. Each pixel location output by detection is transformed with the inverse of the camera matrix to find its distorted, normalized pinhole image projection. This point must then be undistorted using the distortion parameters, also calculated during calibration. There is no algebraic solution for undistorting a point, so two corresponding mesh grids of pixels (distorted and undistorted) are used to estimate the undistorted, normalized point. The normalized values represent the slope of the line from the camera origin through the detected marker center. To compare between cameras, these line equations must be transformed into the same camera space using the extrinsic parameters calculated during calibration. We chose to transform everything into the space of Camera 1, the green camera. Ideally, all the lines would intersect at a single point, but due to noise they may not intersect at all. Therefore, for each pair of lines we use a least-squares approach to find the two points, one on each line, that are closest to each other. We take their average as that pair's estimate of the marker location. The estimates from all pairs are averaged to find the final 3D point location. These points are shown in a graphic display to provide positional feedback.
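
The pairwise least-squares step can be sketched as below. The sketch assumes each ray is already expressed in Camera 1's frame as an origin and a direction. For lines o1 + s·d1 and o2 + t·d2, setting the gradient of the squared distance between the two points to zero gives a 2×2 linear system in s and t. That system is solved in closed form here, and nearly parallel pairs are skipped. The function names and the camera centers in the example are hypothetical.

```python
from itertools import combinations


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(a, k):
    return tuple(x * k for x in a)


def closest_points(o1, d1, o2, d2, eps=1e-12):
    """Closest points on lines o1 + s*d1 and o2 + t*d2, or None if (nearly) parallel."""
    w0 = sub(o1, o2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b  # >= 0; zero when the lines are parallel
    if denom <= eps * a * c:
        return None
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    return add(o1, scale(d1, s)), add(o2, scale(d2, t))


def triangulate(rays):
    """Average, over every pair of rays, the midpoint of that pair's closest points."""
    midpoints = []
    for (o1, d1), (o2, d2) in combinations(rays, 2):
        pts = closest_points(o1, d1, o2, d2)
        if pts is not None:
            p, q = pts
            midpoints.append(scale(add(p, q), 0.5))
    if not midpoints:
        return None
    n = len(midpoints)
    return tuple(sum(m[k] for m in midpoints) / n for k in range(3))


# Hypothetical camera centers (mm) in Camera 1's frame, all observing one marker.
marker = (10.0, 5.0, 60.0)
centers = [(0.0, 0.0, 0.0), (40.0, 0.0, 0.0), (0.0, 40.0, 0.0)]
rays = [(o, sub(marker, o)) for o in centers]
print(triangulate(rays))  # approximately (10.0, 5.0, 60.0)
```

With noisy detections the rays no longer meet. Each midpoint then splits the gap between its two rays, and averaging over all pairs spreads the error across the cameras.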

Phase Three: Evaluation

To evaluate the success of our prototype, we will design a simple experiment to test the accuracy of our tracking system for a stationary tool, as well as to measure its accuracy for a tool in motion. If time permits, we will also examine accuracy when a camera’s view is occluded and under different illumination settings.
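
One way to summarize the stationary-tool experiment is to take repeated 3D measurements of a marker that does not move. Two numbers can then be reported: the RMS distance of the samples from their mean (repeatability) and, if a ground-truth position is available, from that position (accuracy). The function names, sample values, and ground-truth point below are hypothetical, and the page does not specify which metric will be reported.

```python
import math


def mean_point(points):
    """Component-wise mean of a list of 3D points."""
    n = len(points)
    return tuple(sum(p[k] for p in points) / n for k in range(3))


def rms_distance(points, reference):
    """Root-mean-square Euclidean distance of the points from a reference point."""
    return math.sqrt(sum(math.dist(p, reference) ** 2 for p in points) / len(points))


# Hypothetical repeated measurements (mm) of a stationary marker.
samples = [(10.1, 5.0, 60.0), (9.9, 5.0, 60.0), (10.0, 5.2, 60.0), (10.0, 4.8, 60.0)]
center = mean_point(samples)
repeatability = rms_distance(samples, center)          # spread about the mean
accuracy = rms_distance(samples, (10.0, 5.0, 60.5))    # error vs. an assumed ground truth
print(f"repeatability = {repeatability:.3f} mm, accuracy = {accuracy:.3f} mm")
```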

Here is the final chart of milestones achieved.

Here is an overview of when milestones were achieved. Green milestones are Yejin Kim's and blue milestones are Sue Kulason's; dark green and dark blue milestones are extensions.

[1] J. D. Pitcher, J. T. Wilson, S. D. Schwartz, and J. Hubschman, “Robotic Eye Surgery: Past, Present, and Future,” J. Comput. Sci. Syst. Biol., pp. 1–4, 2012.

[2] J.-P. Hubschman, J. Son, B. S. D. Schwartz, and J.-L. Bourges, “Evaluation of the motion of surgical instruments during intraocular surgery,” Eye (London, England), vol. 25, no. 7, pp. 947–953, Jul. 2011.

[3] M. Nasseri, E. Dean, S. Nair, and M. Eder, “Clinical Motion Tracking and Motion Analysis during Ophthalmic Surgery using Electromagnetic Tracking System,” in Proc. 5th International Conference on BioMedical Engineering and Informatics (BMEI 2012), 2012.

[4] G. M. Saleh, G. Voyatzis, Y. Voyazis, J. Hance, J. Ratnasothy, and A. Darzi, “Evaluating surgical dexterity during corneal suturing,” Archives of Ophthalmology, vol. 124, no. 9, pp. 1263–1266, Sep. 2006.

[5] K. Guerin, G. Vagvolgyi, A. Deguet, C. C. G. Chen, D. Yuh, and R. Kumar, “ReachIN: A Modular Vision Based Interface for Teleoperation,” in The MIDAS Journal: Computer Assisted Intervention, Aug. 2010.

[6] T. Svoboda, “A Software for Complete Calibration of MultiCamera Systems,” talk given at MIT CSAIL, Jan. 25, 2005.

[7] K. Zimmermann, J. Matas, and T. Svoboda, “Tracking by an Optimal Sequence of Linear Predictors,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 31, no. 4, 2009.

[8] A. Borkar, M. Hayes, and M. T. Smith, “A Non Overlapping Camera Network: Calibration and Application Towards Lane Departure Warning,” in IPCV 2011: Proceedings of the 15th International Conference on Image Processing, Computer Vision, and Pattern Recognition, 2011.

[9] Neily, Mills, et al., “Incorrect Surgical Procedures Within and Outside of the Operating Room,” Archives of Surgery, vol. 144, no. 11, pp. 1028–1034, Nov. 2009.

[10] D. L. Pham, C. Xu, and J. L. Prince, “Current Methods in Medical Image Segmentation,” Annual Review of Biomedical Engineering, vol. 2, pp. 315–337, Aug. 2000.

[11] Y. Deng, “Color Image Segmentation,” in Proc. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 1999.

[12] K. Kim and L. S. Davis, “Multi-camera Tracking and Segmentation of Occluded People on Ground Plane Using Search-Guided Particle Filtering,” Computer Science, vol. 2953, pp. 98–109, 2006.

[13] A. Yilmaz, O. Javed, and M. Shah, “Object Tracking: A Survey,” ACM Computing Surveys, vol. 38, no. 4, Article 13, 2006.

[14] M.-H. Yang and N. Ahuja, “Gaussian mixture model for human skin color and its applications in image and video databases,” in Proc. SPIE: Storage and Retrieval for Image and Video Databases, vol. 3656, pp. 458–466, 1998.

[15] J. Bruce, T. Balch, and M. Veloso, “Fast and inexpensive color image segmentation for interactive robots,” in Proc. IEEE Intl. Conf. Intell. Robot. Syst., pp. 2061–2066, 2000.

[16] M. K. Hu, “Visual pattern recognition by moment invariants,” IRE Transactions on Information Theory, vol. 8, no. 2, pp. 179–187, 1962.