Computer-Guided X-ray C-arm Positioning

Last updated: 05/01/16 16:34

Summary

A mobile C-arm is a motorized X-ray imaging device that allows flexible positioning of its X-ray source. It is widely used for diagnostic imaging and for surgical procedures in many areas, including orthopedic surgery. Its use in surgery requires “fluoro-hunting,” the process of finely positioning the C-arm to obtain a preferred fluoroscopic view. Here, we propose a user interface (UI) that simulates fluoroscopic previews for the C-arm. The UI generates a digitally reconstructed radiograph (DRR) from pre-acquired patient CT data for an X-ray source position, defined either virtually or physically, and thereby provides a preview of the fluoroscopic view corresponding to that source position.

Students: Ju Young Ahn, Seung Wook Lee

Mentor(s): Dr. Jeff Siewerdsen, Dr. Matthew Jacobson, Dr. Tharindu De Silva, Dr. Joseph Goerres

Ju Young Ahn

Background: Matlab, C/C++, Java, Python.

Main responsibilities: CT/Pt/C-Arm Registration, UI Development

Seung Wook Lee

Background: Medical imaging, Instrumentation, Matlab, Python.

Main responsibilities: C-arm based DRR module/UI Development

Background, Relevance, and Significance

Mobile C-arms are widely used by surgeons to provide fluoroscopy-based surgical guidance. The multiple degrees of freedom (DOF) of the arm, including angular and orbital rotation, allow surgeons to set an optimal viewpoint for surgery. However, placing the C-arm in the preferred position, a process called “fluoro-hunting,” often requires surgeons to manually drive the C-arm to various positions and take 5 to 10 X-ray shots. This process is time consuming and may expose both patients and surgeons to additional radiation. It is also physically cumbersome, and maneuvering the C-arm manually during fluoro-hunting requires skill.

Our solution, a user interface that guides C-arm positioning using digitally reconstructed radiographs (DRRs), addresses these problems of fluoro-hunting: guiding the C-arm with a radiation-free fluoroscopic preview takes less time and reduces radiation exposure for both surgeons and patients.

Deliverables

Technical Approach

Computer Interface

The computer interface allows users to find their preferred view by driving a virtually defined C-arm source position. The DRR corresponding to the virtual source position is generated and displayed on screen.

To implement the computer interface, 3D CT data of the patient are acquired first. CT-patient registration is then performed to align the CT data with the patient's position, and the C-arm position is measured with optical trackers or with the encoder embedded in the C-arm. Using the registered CT data and the C-arm position, the DRR generation module produces a 2D DRR preview, that is, a simulated fluoroscopic image. As the operator manipulates the C-arm query position, the module regenerates the DRR preview in real time. Once the operator is satisfied with the preview, the computer drives the C-arm to the desired position through the SITA interface and acquires an X-ray image. SITA is a built-in interface of the C-arm that allows motorized movement of the C-arm. The advantage of this method is that it is not physically cumbersome, since the C-arm is driven and controlled entirely through the computer interface.
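The DRR generation step can be illustrated with a minimal ray-sum sketch. It is not the project's DRR module: it assumes the CT volume has already been converted to linear attenuation coefficients (per mm) on an isotropic grid whose corner sits at the origin, and it samples each source-to-pixel ray at evenly spaced points with nearest-voxel lookup instead of computing the exact radiological path with Siddon's method. The function name `drr_line_integrals` and all of its parameters are hypothetical.

```python
import numpy as np


def drr_line_integrals(volume, voxel_mm, source, det_origin, det_u, det_v,
                       shape, n_samples=256):
    """Approximate the attenuation line integral for every detector pixel.

    volume     -- 3D array of attenuation coefficients (1/mm); voxel (i, j, k)
                  covers [i, i + 1) * voxel_mm along each axis.
    source     -- X-ray source position (mm) in volume coordinates.
    det_origin -- centre of detector pixel (0, 0) (mm).
    det_u      -- step (mm) between pixel centres as the column index increases.
    det_v      -- step (mm) between pixel centres as the row index increases.
    shape      -- (rows, cols) of the detector.
    """
    volume = np.asarray(volume, dtype=float)
    source = np.asarray(source, dtype=float)
    rows, cols = shape
    r, c = np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij")
    # Pixel centres, shape (rows, cols, 3).
    pix = (np.asarray(det_origin, dtype=float)
           + r[..., None] * np.asarray(det_v, dtype=float)
           + c[..., None] * np.asarray(det_u, dtype=float))
    # Midpoint samples along each source-to-pixel segment, shape (n, rows, cols, 3).
    t = (np.arange(n_samples) + 0.5) / n_samples
    pts = source + t[:, None, None, None] * (pix - source)
    # Nearest voxel containing each sample; samples outside the volume add nothing.
    idx = np.floor(pts / voxel_mm).astype(int)
    inside = np.all((idx >= 0) & (idx < np.array(volume.shape)), axis=-1)
    idx = np.where(inside[..., None], idx, 0)
    mu = np.where(inside, volume[idx[..., 0], idx[..., 1], idx[..., 2]], 0.0)
    # Each sample represents an equal share of the ray length.
    step = np.linalg.norm(pix - source, axis=-1) / n_samples
    return mu.sum(axis=0) * step
```

For display, the line integrals are commonly mapped to transmitted intensity with the Beer-Lambert law, `np.exp(-integrals)`.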

<Figure 1. Flowchart for Computer Interface>

Physical Interface

The physical interface allows users to find their preferred view by manually moving the actual C-arm and checking the corresponding DRR. The DRR corresponding to the physical source position is generated and displayed on screen.

The physical interface shares many features with the computer interface. 3D CT data of the patient are acquired, and the CT data are registered to the patient using an optical tracker. The key difference is how the C-arm position is acquired. In the physical interface, the C-arm query position is read directly from the C-arm as physicians move it to various test positions. Based on the registered CT data and the C-arm query position, the DRR generation module produces a 2D DRR preview. The preview is updated as the physical source position changes, and physicians adjust the C-arm until the DRR shows their preferred view. Once the operator finds the DRR corresponding to the desired view, the surgeon can begin the procedure right away, since the C-arm is already at the desired position. The advantage of this method is its smooth integration with the surgical workflow: surgeons do not need to use a computer during the procedure to change the source position.
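In the physical interface, regenerating the DRR for every position reading is unnecessary while the C-arm is standing still. The sketch below shows one possible update policy, assuming position readings arrive as (angular, orbital) pairs in degrees and that a `render` callback wraps the DRR generation module; the reading format, the callback, and the `tol_deg` threshold are all hypothetical, not part of the described system. Wrap-around is handled only for the angular axis, which rotates through a full circle.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Pose = Tuple[float, float]  # (angular_deg, orbital_deg); hypothetical format


def angle_diff(a: float, b: float) -> float:
    """Smallest signed difference a - b in degrees (359 vs 1 gives -2)."""
    return (a - b + 180.0) % 360.0 - 180.0


def preview_updates(readings: Iterable[Pose],
                    render: Callable[[Pose], None],
                    tol_deg: float = 0.5) -> List[Pose]:
    """Call render() only when the C-arm has moved more than tol_deg on an axis.

    Returns the poses for which a DRR preview was regenerated.
    """
    rendered: List[Pose] = []
    last: Optional[Pose] = None
    for pose in readings:
        moved = last is None or (
            abs(angle_diff(pose[0], last[0])) > tol_deg
            or abs(pose[1] - last[1]) > tol_deg
        )
        if moved:
            render(pose)
            rendered.append(pose)
            last = pose
    return rendered
```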

<Figure 2. Flowchart for Physical Interface>

C-arm Position Measurement

We propose two methods for C-arm position measurement.

One is tracker-based measurement and the other is encoder-based measurement. For tracker-based measurement, an optical marker is attached to the C-arm, and the C-arm position is tracked by an optical tracker with high precision.

For encoder-based measurement, the orbital and angular rotation of the C-arm is read from the encoder embedded in the C-arm. With this information, continuous real-time tracking of the source and detector is no longer necessary.
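To see why encoder angles alone could replace real-time tracking, consider an idealized C-arm whose source and detector rotate rigidly about a single isocenter. The sketch assumes that model: orbital rotation about the y-axis applied first, then angular rotation about the x-axis, with the source on the -z side at distance `sad_mm` from the isocenter and the detector center at distance `sdd_mm` from the source. These axis conventions and parameter names are assumptions. The actual orbital motion is not isocentric, so this model only illustrates the idea; the Final Module below uses measured calibration data instead.

```python
import numpy as np


def rot_x(deg: float) -> np.ndarray:
    """Rotation matrix about the x-axis (assumed angular axis)."""
    a = np.radians(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(deg: float) -> np.ndarray:
    """Rotation matrix about the y-axis (assumed orbital axis)."""
    a = np.radians(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def ideal_geometry(angular_deg, orbital_deg, sad_mm, sdd_mm):
    """Source and detector-centre positions (mm) relative to the isocenter.

    Assumed model: perfectly isocentric rotation, orbital about y, then angular about x.
    """
    R = rot_x(angular_deg) @ rot_y(orbital_deg)
    source = R @ np.array([0.0, 0.0, -sad_mm])
    detector = R @ np.array([0.0, 0.0, sdd_mm - sad_mm])
    return source, detector
```

For example, with placeholder distances (not the I-STAR C-arm's specifications), `ideal_geometry(0, 90, 600.0, 1100.0)` places the source at (-600, 0, 0).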

Final Module

We performed a geometric calibration of the C-arm, defining the source and detector positions at each angle with “GeoCalc,” software developed by one of our mentors in the I-STAR Lab. Using this software, we acquired the C-arm geometry, such as source and detector positions, at a series of purely angular or purely orbital positions. By a purely angular (or orbital) position, we mean an angular (or orbital) rotation with the other rotation fixed at zero. Calibration data were collected only for these pure movements because it is difficult to anticipate every possible combined trajectory.
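Calibration tools commonly express the geometry at each calibrated position as a 3x4 projection matrix P that maps homogeneous 3D points to detector pixels. Assuming the GeoCalc output can be written in that form (this page does not state its format), the source position is the camera center C, the right null vector of P (so that P·[C, 1] = 0), as described by Hartley and Zisserman. The helper names below are hypothetical.

```python
import numpy as np


def source_from_projection(P: np.ndarray) -> np.ndarray:
    """Return the X-ray source (camera centre) C satisfying P @ [C, 1] = 0."""
    _, _, vt = np.linalg.svd(np.asarray(P, dtype=float))
    c = vt[-1]  # right singular vector for the smallest singular value
    return c[:3] / c[3]


def project_point(P: np.ndarray, X) -> np.ndarray:
    """Project a 3D point X (mm) to detector pixel coordinates (u, v)."""
    x = np.asarray(P, dtype=float) @ np.append(np.asarray(X, dtype=float), 1.0)
    return x[:2] / x[2]
```

Because P is defined only up to scale, dividing by the fourth component removes both the scale and the sign ambiguity of the singular vector.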

The calibration matrices were acquired over the full 360° of angular rotation. Because the C-arm's orbital rotation is not isocentric, the calibration object did not stay in the field of view over the full 180° of orbital rotation. The matrices for pure orbital rotation were therefore collected only within a predefined range of -40° to 20°. With the registration and calibration data, we could generate DRRs with high accuracy.
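Because calibration exists only for pure angular poses over 0° to 360° and pure orbital poses between -40° and 20°, a query has to be checked against those limits before a calibration matrix is used. The sketch below assumes a nearest-neighbor lookup over the calibrated angles; the page does not say whether the project interpolated between calibrated positions, and the class and method names are hypothetical.

```python
from typing import Dict, Tuple


class CalibrationTable:
    """Calibration entries keyed by angle (deg) for pure angular or pure orbital poses."""

    def __init__(self, angular: Dict[float, object], orbital: Dict[float, object],
                 orbital_range: Tuple[float, float] = (-40.0, 20.0)):
        self.angular = angular
        self.orbital = orbital
        self.orbital_range = orbital_range

    @staticmethod
    def _nearest(table: Dict[float, object], angle: float, wrap: bool):
        def dist(key: float) -> float:
            d = abs(key - angle)
            return min(d, 360.0 - d) if wrap else d  # wrap-around for full rotation
        key = min(table, key=dist)
        return key, table[key]

    def lookup(self, angular_deg: float, orbital_deg: float):
        """Return (calibrated_angle, entry) for a pure angular or pure orbital query."""
        if angular_deg != 0 and orbital_deg != 0:
            raise ValueError("combined angular/orbital poses were not calibrated")
        if orbital_deg != 0:
            lo, hi = self.orbital_range
            if not lo <= orbital_deg <= hi:
                raise ValueError(f"orbital angle {orbital_deg} outside {lo}..{hi} deg")
            return self._nearest(self.orbital, orbital_deg, wrap=False)
        return self._nearest(self.angular, angular_deg % 360.0, wrap=True)
```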

CT-patient Registration

We propose two methods for the CT to patient registration.

One is a tracker-based registration and the other is a 3D-2D image registration. For the tracker-based registration, an optical marker will be attached to the patient and the CT data will be registered to the patient through an optical tracker.
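The page does not specify how the tracker-based registration is computed. One standard choice, shown here only as an assumption, is paired-point rigid registration: fiducial positions identified in the CT are matched to the same fiducials measured with the tracker, and the least-squares rotation and translation are found with the SVD method of Arun et al. The target registration error (TRE) listed in the Milestones section for verifying registration can then be computed at a point not used in the fit. The function names are hypothetical.

```python
import numpy as np


def rigid_register(ct_pts, tracker_pts):
    """Least-squares rotation R and translation t with tracker ≈ R @ ct + t.

    ct_pts, tracker_pts: (N, 3) arrays of corresponding fiducials, N >= 3, not collinear.
    """
    a = np.asarray(ct_pts, dtype=float)
    b = np.asarray(tracker_pts, dtype=float)
    ca, cb = a.mean(axis=0), b.mean(axis=0)
    H = (a - ca).T @ (b - cb)  # 3x3 cross-covariance of centred point sets
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = cb - R @ ca
    return R, t


def target_registration_error(R, t, target_ct, target_tracker):
    """Distance (mm) between the mapped CT target and its measured position."""
    mapped = R @ np.asarray(target_ct, dtype=float) + t
    return float(np.linalg.norm(mapped - np.asarray(target_tracker, dtype=float)))
```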

The other is 3D-2D image registration. Two X-ray images are acquired, and the 3D CT data are registered to these 2D images, thereby registering the CT to the patient.

Ideally, by combining encoder-based C-arm position measurement with 3D-2D image registration for CT-patient registration, we would no longer need to track the C-arm device.

Final Module

We chose 3D-2D image registration to determine the relative position between the patient and the C-arm, using the 3D-2D image registration software available in the I-STAR Lab. This registration used a single fluoroscopic image of the phantom: given the calibrated C-arm geometry, it computed the transformation matrix whose DRR best matches the fluoroscopic image.
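Intensity-based 3D-2D registration searches for the pose whose DRR is most similar to the fluoroscopic image. The toy sketch below keeps only that search-and-score idea: it compares two 2D images with normalized cross-correlation (NCC) over integer pixel shifts. It is a deliberate simplification and not the I-STAR software, which optimizes a 3D pose and regenerates the DRR for each candidate; the similarity metric and optimizer that software uses are not described on this page, and the function names here are hypothetical.

```python
import numpy as np


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Normalized cross-correlation of two equally sized images (1.0 = identical up to gain/offset)."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def best_shift(fixed: np.ndarray, moving: np.ndarray, max_shift: int = 5):
    """Exhaustively try integer (dy, dx) shifts of `moving`; return the best shift and its NCC."""
    best_score, best = -np.inf, (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))  # circular shift
            score = ncc(fixed, shifted)
            if score > best_score:
                best_score, best = score, (dy, dx)
    return best, best_score
```

`np.roll` wraps pixels around the image border, which is acceptable for this illustration but would not be for real fluoroscopic images.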

GUI

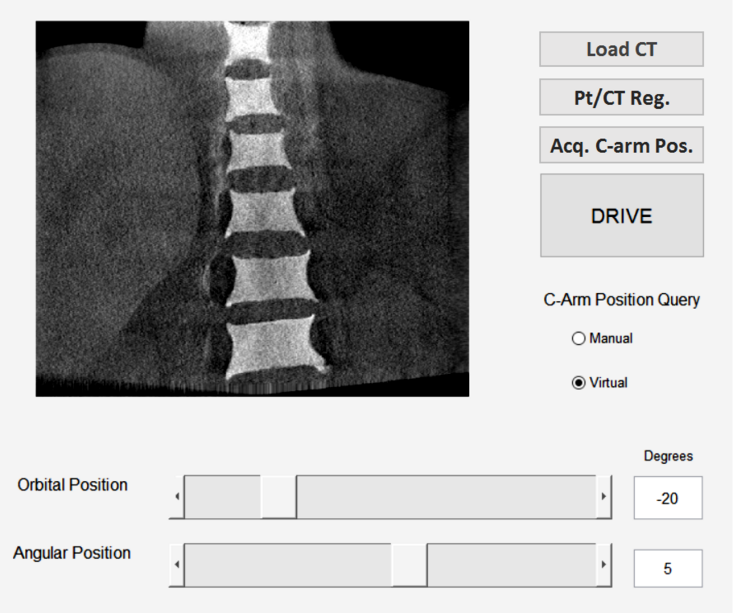

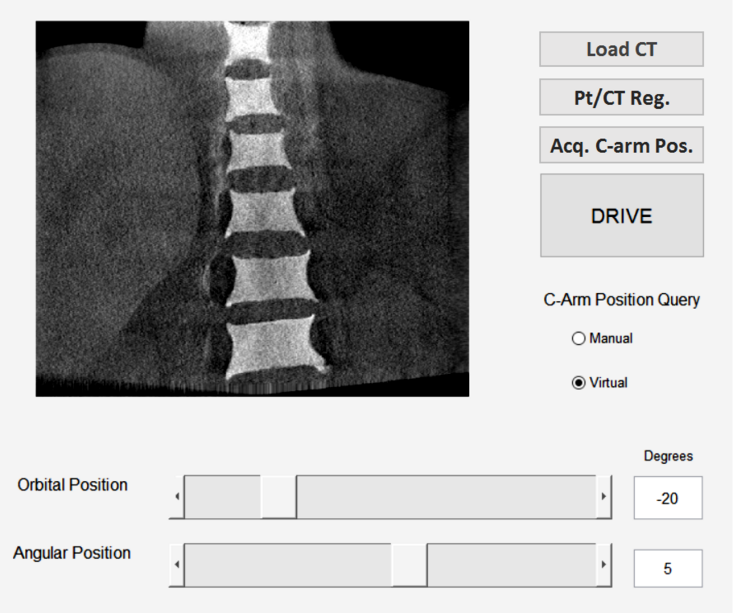

<Figure 3. Sample GUI>

The GUI should provide the following:

Loading CT data: Allows the user to select and load patient CT data.

CT-Pt. registration: Registers the patient CT data to the patient using optical trackers.

Acquisition of C-arm position: Acquires the C-arm query position in two different modes, the computer interface and the physical interface.

Sliders representing source positions: Define the source position by its angular and orbital positions. In the computer interface, moving the sliders moves a virtual source. In the physical interface, the sliders display the current physical source position in terms of angular and orbital positions.

Drive: In the computer interface, allows users to drive the C-arm to the virtual source position defined by the sliders.
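The slider and Drive behavior described above differs between the two interfaces. A minimal state sketch, with hypothetical class and method names and no connection to the actual GUI toolkit or the SITA interface, could enforce those rules as follows.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    COMPUTER = "computer"  # sliders move a virtual source
    PHYSICAL = "physical"  # sliders mirror the real C-arm


@dataclass
class SourceSliders:
    mode: Mode
    angular_deg: float = 0.0
    orbital_deg: float = 0.0

    def set_by_user(self, angular_deg: float, orbital_deg: float) -> None:
        """User drags the sliders; only meaningful for the virtual source."""
        if self.mode is not Mode.COMPUTER:
            raise RuntimeError("sliders are display-only in the physical interface")
        self.angular_deg, self.orbital_deg = angular_deg % 360.0, orbital_deg

    def update_from_c_arm(self, angular_deg: float, orbital_deg: float) -> None:
        """Mirror the measured physical source position."""
        if self.mode is not Mode.PHYSICAL:
            raise RuntimeError("the computer interface uses a virtual source")
        self.angular_deg, self.orbital_deg = angular_deg % 360.0, orbital_deg

    def drive_target(self):
        """Target pose for the Drive button (computer interface only)."""
        if self.mode is not Mode.COMPUTER:
            raise RuntimeError("Drive is only available in the computer interface")
        return (self.angular_deg, self.orbital_deg)
```

Keeping the mode check in one place means the GUI cannot send a drive command while it is mirroring the physical C-arm.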

Current GUI Image

<Figure 4. Updated/Current GUI>

Detailed information on the current UI can be found in the user manual linked as an appendix on this page.

Dependencies

A. Equipment Accessibility

Our project requires access to equipment such as a C-arm, an optical tracker, optical markers, and 3D phantoms. All of this equipment is available in Dr. Siewerdsen’s lab.

B. Software/ Existing tools

The necessary software, such as VTK, Visual Studio, TREK, TeamViewer, and SmartGit, has been downloaded. We have access to all existing tools from Dr. Siewerdsen’s lab through a Git repository. We also have sample 3D CT data of a phantom to work with initially.

C. Version Control/Documentation

We downloaded Jupyter for documentation and SmartGit for version control. We have our own separate branch where we can modify code and then commit and push our changes.

D. Safety Training

We will take the online radiation safety training course this week.

E. Schedule with mentors

We have a weekly meeting with Dr. Siewerdsen. We will work in Dr. Siewerdsen’s lab on Mondays and Wednesdays, when we can discuss any complications and problems with our mentors. (Refer to 7.3 Weekly Schedule)

F. Access to the lab

We have been given access to Dr. Siewerdsen’s lab.

Milestones and Status

Allow angular/orbital movement of the source position

Planned Date: 02/29/16

Expected Date: 03/01/16

Status: ———-Done———-

Define detector transformation in accordance with the source position

Planned Date: 03/07/16

Expected Date: 03/07/16

Status: ———-Done———-

Allow source position to mimic actual/physical C-arm movement (apply physical constraints, non-perfectly circular movement of C-arm)

Planned Date: 03/14/16

Expected Date: 03/22/16

Status: ———-Done———-

Register C-arm to CT data and phantom to CT data (possibly calibrate C-arm position)

Planned Date: 03/14/16

Expected Date: 03/22/16

Status: ———-Done———-

Verify registration process through TRE

Planned Date: 03/16/16

Expected Date: 03/24/16

Status: ———-Done———-

(PI) Build a bridge so that physical movement of the C-arm/source can be tracked and input to the module in real time

Planned Date: 04/11/16

Expected Date: 04/11/16

Status: ———-Done———-

(PI) Acquire X-ray image of preferred view, error check with generated DRR (Validation)

Planned Date: 04/18/16

Expected Date: 04/18/16

Status: ———-Done———-

(CI) Read C-arm/Source position with an optimal fluoroscopic preview

Planned Date: 04/25/16

Expected Date: 04/25/16

Status: ———-Done———-

(CI) Manually move C-arm to the desired position and acquire an X-ray image, Verification process by comparing a DRR preview to an acquired X-ray image (Validation)

Planned Date: 04/25/16

Expected Date: 04/25/16

Status: ———-Done———-

Using SITA interface, allow computer interface to drive C-arm to the desired position.

Planned Date: 05/02/16

Expected Date: 05/02/16

Status: Out of scope

Encoder-based C-arm position measurement

Planned Date: 03/28/16

Expected Date: 03/28/16

Status: ———-Done———-

Pt.-CT registration via 3D-2D registration with a few X-ray shots

Planned Date: 05/05/16

Expected Date: 05/05/16

Status: ———-Done———-

Verification process by comparing a DRR preview to an acquired X-ray image

Planned Date: 05/05/16

Expected Date: 05/05/16

Status: ———-Done———-

Reports and presentations

Project Bibliography

Navab, Nassir, Stefan Wiesner, Selim Benhimane, Ekkehard Euler, and Sandro Michael Heining. “Visual Servoing for Intraoperative Positioning and Repositioning of Mobile C-arms.” Medical Image Computing and Computer-Assisted Intervention – MICCAI 2006, Lecture Notes in Computer Science (2006): 551-560.

Siddon, Robert L. “Fast Calculation of the Exact Radiological Path for a Three-dimensional CT Array.” Medical Physics 12.2 (1985): 252.

Hartley, Richard, and Andrew Zisserman. “More Single View Geometry.” Multiple View Geometry in Computer Vision: 153-163.

Long, Yong, Jeffrey A. Fessler, and James M. Balter. “3D Forward and Back-Projection for X-Ray CT Using Separable Footprints.” IEEE Transactions on Medical Imaging 29.11 (2010): 1839-1850.

Otake, Y., et al. “Automatic Localization of Vertebral Levels in X-Ray Fluoroscopy Using 3D-2D Registration: A Tool to Reduce Wrong-Site Surgery.” Physics in Medicine and Biology 57.17 (2012): 5485-5508.

Uneri, A., et al. “Known-Component 3D-2D Registration for Image Guidance and Quality Assurance in Spine Surgery Pedicle Screw Placement.” Proceedings of SPIE, the International Society for Optical Engineering 9415 (2015): 94151F.

Other Resources and Project Files

The main code and project files are version-controlled with Git.

We can provide a link to the Git repository, but access to it requires approval.