Last updated: 05/09/19 10:30

The objective of this project is to develop and validate models for tool gravity compensation and arm deflection for the Galen Surgical System. The gravity compensation model will be implemented for multiple tools for static and dynamic cases.

The Galen is a general-purpose, hand-over-hand, admittance-control robot developed mainly for otolaryngology. It is currently being commercialized by Galen Robotics Inc., but it was originally developed as a PhD project in the LCSR and is still the subject of many research projects. The Galen reads values at a force/torque sensor and calculates the expected force/torque values at the user's point of contact on a tool. The tool then moves proportionally to the force exerted, allowing a surgeon to use a tool with the additional benefits of stability and virtual fixtures. The force sensor, however, has no way of distinguishing between the forces/torques exerted by the surgeon and those exerted by the tool itself. Currently the robot can cancel out the values read at a particular moment, but rotating a tool changes the force/torque readings and often causes unintentional motion. The system also suffers from deflection issues, where the true position of the robot differs from the expected position because of flexibility in the joints.
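The effect of rotating a tool after a one-time bias can be illustrated with a minimal sketch. It assumes a simple proportional admittance law and a point-mass tool; the tool mass, the gain, and the helper names (`rot_x`, `gravity_force_in_sensor`) are hypothetical and are not taken from the Galen software.

```python
import numpy as np

def rot_x(angle_rad):
    """Rotation matrix for a rotation about the x axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def gravity_force_in_sensor(R_world_sensor, mass_kg, g=9.81):
    """Tool weight expressed in the sensor frame.

    R_world_sensor maps sensor-frame vectors into the world frame, so its
    transpose maps the world-frame weight into the sensor frame.
    """
    weight_world = np.array([0.0, 0.0, -mass_kg * g])
    return R_world_sensor.T @ weight_world

mass_kg = 0.2            # hypothetical tool mass
admittance_gain = 0.01   # hypothetical gain, (m/s) per newton

# Bias captured once, while the tool is upright.
bias = gravity_force_in_sensor(rot_x(0.0), mass_kg)

# After tilting the tool by 30 degrees, the stored bias no longer matches.
reading = gravity_force_in_sensor(rot_x(np.radians(30.0)), mass_kg)
residual = reading - bias

# Proportional admittance law: velocity follows the perceived force,
# so the uncancelled residual turns into motion nobody asked for.
commanded_velocity = admittance_gain * residual
print("residual force (N):", residual)
print("unintended velocity (m/s):", commanded_velocity)
```

With no surgeon touching the tool, the residual is purely the change in the direction of the tool weight as seen by the sensor, which is the quantity a gravity compensation model has to predict.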

Therefore, the objective of this project is to develop and validate models for gravity compensation and deflection for the Galen Surgical System. The gravity compensation model will be implemented for multiple tools and for static and dynamic motion.

1) Static Compensation

Our technical approach will employ three models of increasing complexity. The first model is a simple static gravity compensation. The force and torque due to gravity can be represented as wrench vectors.

F_t is the wrench on the tool due to gravity, and F_b is the wrench due to gravity on the tool adaptor and on any other non-tool components that could affect the force sensor. Both are expressed in frames that are parallel to the base frame. The rotation matrix between the sensor and this global frame (the tool frame) can be calculated as:

Here, s is the sensor frame, b is the tool adaptor frame, and t is the tool frame. R_bs, for example, is the rotation from the b frame to the s frame, and p_bs is the translation from the b frame to the s frame. R_bs(0) denotes the rotation of the force sensor on the robot without any roll or tilt. Assuming that the force sensor is biased with no tool attached and with no roll or tilt, the wrench at the sensor, without any tool attached, can be calculated as:

Ad_g denotes the adjoint matrix of g, and g_bs denotes the transformation between frame b and frame s. Given enough data, the bias weight and center of mass of the tool adaptor can be estimated via a least squares method using the following equations:

Likewise, assuming the same bias condition, once a tool is attached, the wrench at the sensor can be calculated as:

Again, given enough data, the tool weight and tool center of mass can be estimated via a least squares method using the following equations. The fitted model can then be used to predict the force sensor readings. The compensated force sensor values are the true readings minus the predicted readings.
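The least squares step can be sketched as follows. This is a simplified illustration, not the project's implementation: it assumes a single rigid tool, a sensor that is already biased, and a rotation `R` that maps sensor-frame vectors into a frame parallel to the base. The function names (`fit_tool`, `predict_wrench`) and all numeric values are hypothetical, and the data is synthetic.

```python
import numpy as np

DOWN = np.array([0.0, 0.0, -1.0])  # gravity direction in the base-parallel frame

def skew(v):
    """Matrix form of the cross product: skew(v) @ u == np.cross(v, u)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])

def rodrigues(axis, angle):
    """Rotation matrix from an axis (any length) and an angle in radians."""
    k = skew(np.asarray(axis) / np.linalg.norm(axis))
    return np.eye(3) + np.sin(angle) * k + (1.0 - np.cos(angle)) * (k @ k)

def predict_wrench(R, weight, com):
    """Predicted sensor force and torque for a tool of given weight and COM."""
    force = R.T @ (DOWN * weight)
    return force, np.cross(com, force)

def fit_tool(rotations, forces, torques):
    """Least squares estimate of tool weight (N) and center of mass (m)."""
    # Force model: f_i = (R_i^T @ DOWN) * weight, stacked over all samples.
    A_w = np.concatenate([R.T @ DOWN for R in rotations]).reshape(-1, 1)
    weight = np.linalg.lstsq(A_w, np.concatenate(forces), rcond=None)[0][0]
    # Torque model: tau_i = com x f_i = -skew(f_i) @ com.
    A_c = np.vstack([-skew(R.T @ DOWN * weight) for R in rotations])
    com = np.linalg.lstsq(A_c, np.concatenate(torques), rcond=None)[0]
    return weight, com

# Synthetic data set: 20 orientations with small sensor noise.
rng = np.random.default_rng(0)
true_weight, true_com = 1.5, np.array([0.01, -0.02, 0.08])
rotations = [rodrigues(rng.normal(size=3), rng.uniform(0.2, 1.0)) for _ in range(20)]
forces, torques = [], []
for R in rotations:
    f, tau = predict_wrench(R, true_weight, true_com)
    forces.append(f + rng.normal(scale=0.01, size=3))
    torques.append(tau + rng.normal(scale=0.001, size=3))

weight, com = fit_tool(rotations, forces, torques)

# Compensation: measured reading minus the model prediction.
f_pred, tau_pred = predict_wrench(rotations[0], weight, com)
compensated_force = forces[0] - f_pred
print("weight:", weight, "com:", com)
print("compensated force:", compensated_force)
```

The center of mass is only observable if the orientations in the data set point the tool weight in at least two non-parallel directions in the sensor frame, which is one reason to collect data over a range of roll and tilt angles.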

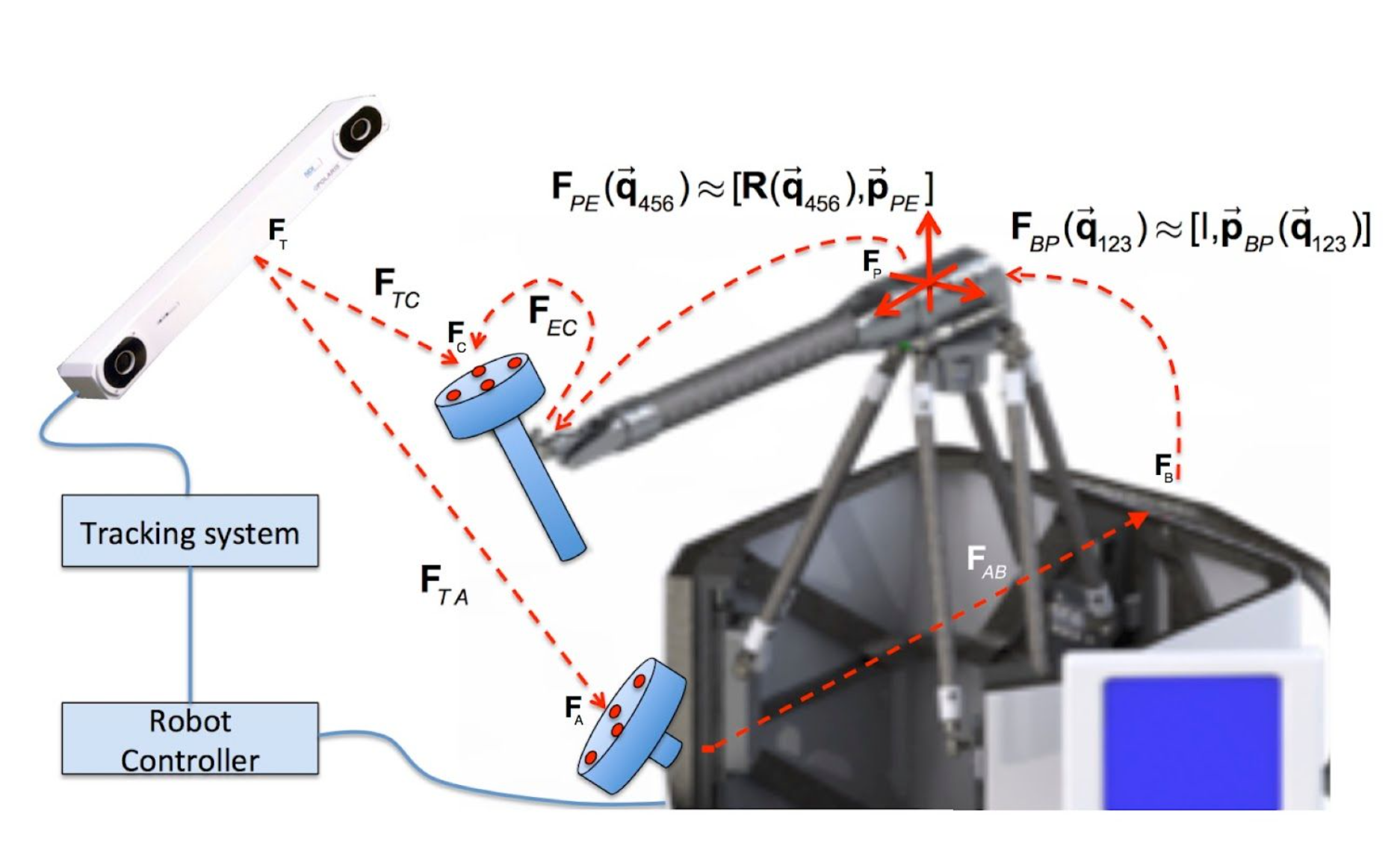

The robot, with the wrench vectors mentioned above, is shown in the accompanying image.

2) Dynamic Compensation

Since the tool is ideally rigidly attached to the force sensor, there should be no dynamic components that need to be compensated. However, because there is some flexibility in the system, this is most likely not the case. Our current plan is therefore to fit a model that maps the commanded velocities and angular velocities of the joints to the force sensor readings. This model will be selected after data collection has been completed.
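Since the model form is still open, the following is only one simple candidate: a linear map, with an offset, from commanded joint velocities to the wrench that remains after static compensation. The number of joints, the function names, and the synthetic data are all assumptions made for illustration.

```python
import numpy as np

def _design_matrix(joint_velocities):
    """Append a constant column so the fit includes an offset term."""
    n = joint_velocities.shape[0]
    return np.hstack([joint_velocities, np.ones((n, 1))])

def fit_linear_dynamic_model(joint_velocities, residual_wrenches):
    """Least squares fit of residual ~= [velocities, 1] @ coeffs."""
    X = _design_matrix(joint_velocities)
    coeffs, *_ = np.linalg.lstsq(X, residual_wrenches, rcond=None)
    return coeffs

def predict_dynamic_residual(coeffs, joint_velocities):
    """Predicted 6-component wrench for each row of joint velocities."""
    return _design_matrix(joint_velocities) @ coeffs

# Synthetic, noise-free data: 5 hypothetical joints, 6 wrench components.
rng = np.random.default_rng(1)
n_samples, n_joints = 300, 5
true_coeffs = rng.normal(scale=0.1, size=(n_joints + 1, 6))
velocities = rng.uniform(-1.0, 1.0, size=(n_samples, n_joints))
residuals = _design_matrix(velocities) @ true_coeffs

coeffs = fit_linear_dynamic_model(velocities, residuals)
predicted = predict_dynamic_residual(coeffs, velocities)
print("max abs error:", np.abs(predicted - residuals).max())
```

If the collected data shows clearly nonlinear behaviour, the same interface could wrap a different regressor without changing how the compensation is applied.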

Once these models have been developed, our gravity compensation system can be represented as a block diagram.

In order to model the deflection of the system, we will collect force sensor data, joint angles, tool position as reported by the robot, and tool position as measured by an optical tracker.

3) Deflection Characterization

Lastly, we will build a model of arm deflection in the system due to the forces applied to the tool at the robot end-effector and the position of the tool. We will first create a CAD model for a tracker tool that can be attached to the new tool adaptor used on the Galen system. This tool will be 3D printed, and tracker bodies will be attached to it.

Image Credit: CIS 1 2018 homework 4

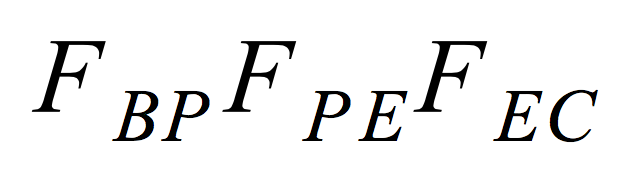

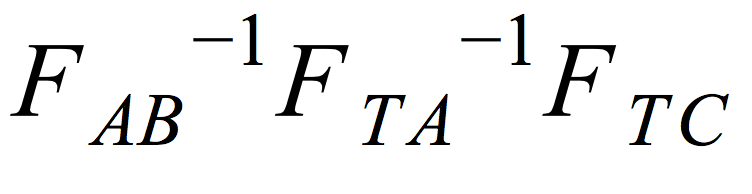

We begin by finding the frame transformations shown in the image above. F_BP and F_PE for a given position can be calculated using the Galen forward kinematics. F_EC is a static displacement that will be determined from the tracker tool CAD model and will be verified. F_TC and F_TA will be reported by the optical tracking system. F_AB can be found by assuming no deflection at the home position with no force and calculating
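One way to carry out this calibration is sketched below. It assumes the frames close a single chain, F_TC = F_TA F_AB F_BP F_PE F_EC, where T is the tracker, A a reference body, B the robot base, E the end-effector, and C the tracker tool. That chain is an interpretation of the frame names, and the helper names and numeric values are hypothetical.

```python
import numpy as np

def make_frame(R, p):
    """Build a 4x4 homogeneous transform from a rotation and a translation."""
    F = np.eye(4)
    F[:3, :3] = R
    F[:3, 3] = p
    return F

def invert_frame(F):
    """Invert a rigid transform without a general matrix inverse."""
    R, p = F[:3, :3], F[:3, 3]
    return make_frame(R.T, -R.T @ p)

def rot_z(angle_rad):
    """Rotation matrix for a rotation about the z axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def calibrate_F_AB(F_TA, F_TC, F_BP, F_PE, F_EC):
    """Solve F_TC = F_TA F_AB F_BP F_PE F_EC for F_AB at the home pose."""
    F_BC = F_BP @ F_PE @ F_EC
    return invert_frame(F_TA) @ F_TC @ invert_frame(F_BC)

# Synthetic home-pose example with a known F_AB to recover.
F_AB_true = make_frame(rot_z(1.0), [0.5, 0.1, 0.0])
F_TA = make_frame(rot_z(-0.7), [0.0, 0.0, 1.5])
F_BP = make_frame(rot_z(0.3), [0.1, 0.0, 0.4])
F_PE = make_frame(rot_z(-0.1), [0.0, 0.2, 0.0])
F_EC = make_frame(np.eye(3), [0.0, 0.0, 0.05])
F_TC = F_TA @ F_AB_true @ F_BP @ F_PE @ F_EC  # what the tracker would report

F_AB = calibrate_F_AB(F_TA, F_TC, F_BP, F_PE, F_EC)
print(np.round(F_AB, 4))
```

Because the calibration assumes zero deflection at the home pose, any deflection actually present there is absorbed into F_AB and shows up as a constant offset in later measurements.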

We then move the robot to various positions within the workspace and apply forces in different directions, recording force sensor data, joint angles, tool position as reported by the robot, and tool position as measured by an optical tracker. We calculate the expected position using

and the actual position using

.
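The comparison of expected and actual positions can be sketched as follows, assuming the expected tracker-tool pose in the base frame is F_BP F_PE F_EC and the measured one is F_AB^-1 F_TA^-1 F_TC. These two expressions follow from the frame names rather than from a stated formula, and the helper names, poses, and injected offset are hypothetical.

```python
import numpy as np

def make_frame(R, p):
    """Build a 4x4 homogeneous transform from a rotation and a translation."""
    F = np.eye(4)
    F[:3, :3] = R
    F[:3, 3] = p
    return F

def invert_frame(F):
    """Invert a rigid transform."""
    R, p = F[:3, :3], F[:3, 3]
    return make_frame(R.T, -R.T @ p)

def rot_z(angle_rad):
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def expected_F_BC(F_BP, F_PE, F_EC):
    """Tracker-tool pose in the base frame according to the robot kinematics."""
    return F_BP @ F_PE @ F_EC

def actual_F_BC(F_AB, F_TA, F_TC):
    """Tracker-tool pose in the base frame according to the optical tracker."""
    return invert_frame(F_AB) @ invert_frame(F_TA) @ F_TC

def deflection(F_expected, F_actual):
    """Translation error in the base frame (m) and rotation error angle (rad)."""
    dp = F_actual[:3, 3] - F_expected[:3, 3]
    R_err = F_expected[:3, :3].T @ F_actual[:3, :3]
    cos_angle = np.clip((np.trace(R_err) - 1.0) / 2.0, -1.0, 1.0)
    return dp, np.arccos(cos_angle)

# Synthetic example: inject a small translation as the "true" deflection.
F_AB = make_frame(rot_z(1.0), [0.5, 0.1, 0.0])
F_TA = make_frame(rot_z(-0.7), [0.0, 0.0, 1.5])
F_exp = expected_F_BC(make_frame(rot_z(0.3), [0.1, 0.0, 0.4]),
                      make_frame(rot_z(-0.1), [0.0, 0.2, 0.0]),
                      make_frame(np.eye(3), [0.0, 0.0, 0.05]))
injected = np.array([0.001, -0.002, 0.0005])
F_true = F_exp.copy()
F_true[:3, 3] += injected
F_TC = F_TA @ F_AB @ F_true  # what the tracker would report

dp, angle = deflection(F_exp, actual_F_BC(F_AB, F_TA, F_TC))
print("translation deflection (m):", dp, "rotation error (rad):", angle)
```

Each recorded sample would then pair the measured force/torque and joint angles with a deflection value computed this way.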

After collecting this data and performing the calculations above, we will develop a model that predicts the deflection at a given location using a shallow neural network. We will try different configurations and document the performance of each. The parameters we vary will include the number of layers, the activation function, and the loss function.
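As a rough illustration of such a model, the sketch below trains a one-hidden-layer network with plain gradient descent in NumPy. The input layout (nine normalized features, for example a wrench plus a position), the hidden size, the tanh activation, the mean squared error loss, and the synthetic targets are all assumptions chosen for the example, not decisions made in the project.

```python
import numpy as np

def init_params(n_in, n_hidden, n_out, rng):
    """Small random weights, zero biases."""
    return {
        "W1": rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 1.0 / np.sqrt(n_hidden), (n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }

def forward(params, X):
    """Return predictions and hidden activations."""
    H = np.tanh(X @ params["W1"] + params["b1"])
    return H @ params["W2"] + params["b2"], H

def mse(params, X, Y):
    pred, _ = forward(params, X)
    return np.mean((pred - Y) ** 2)

def train(params, X, Y, lr=0.05, epochs=500):
    """Full-batch gradient descent on the mean squared error (in place)."""
    for _ in range(epochs):
        pred, H = forward(params, X)
        d_pred = 2.0 * (pred - Y) / Y.size      # gradient of np.mean((pred-Y)**2)
        d_hidden = (d_pred @ params["W2"].T) * (1.0 - H ** 2)  # tanh derivative
        grads = {
            "W2": H.T @ d_pred,
            "b2": d_pred.sum(axis=0),
            "W1": X.T @ d_hidden,
            "b1": d_hidden.sum(axis=0),
        }
        for key in params:
            params[key] -= lr * grads[key]
    return params

# Synthetic stand-in for (features -> 3D deflection) training data.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 9))
Y = np.tanh(X @ rng.normal(scale=0.3, size=(9, 3)))

params = init_params(9, 16, 3, rng)
loss_before = mse(params, X, Y)
train(params, X, Y)
loss_after = mse(params, X, Y)
print("loss before:", loss_before, "loss after:", loss_after)
```

Changing the number of layers, the activation function, or the loss function amounts to editing `forward`, the derivative term in `train`, and `mse`, which keeps the comparison between configurations straightforward; real data would also need a held-out set to measure generalization.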
