HMD-Based Navigation for Ventriculostomy

Last updated: 05/08 10:56 AM

Summary

This project aims to introduce image guidance for ventriculostomy through augmented reality on the HoloLens.

Students: Yiwei Jiang, Mingyi Zheng

Mentors: Peter Kazanzides, Ehsan Azimi

Background, Specific Aims, and Significance

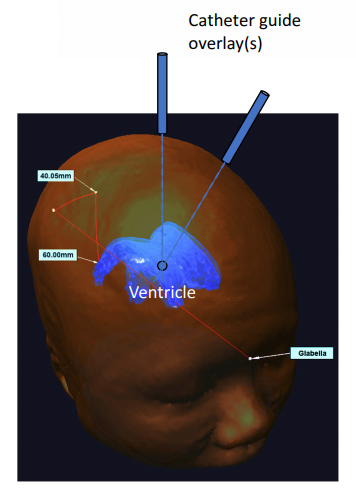

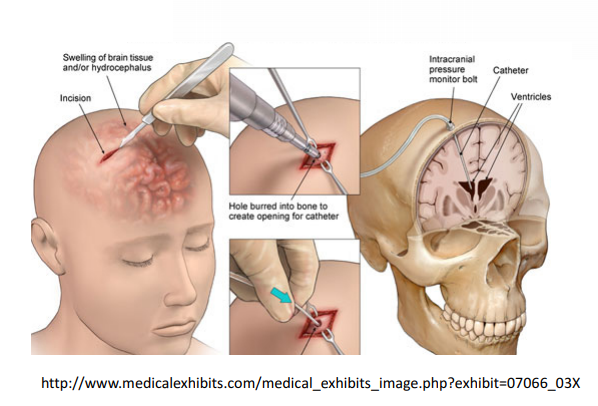

A ventriculostomy is a device that drains excess cerebrospinal fluid (CSF) from the head. It is also used to measure the pressure inside the head (intracranial pressure, ICP).

The system consists of a small tube (catheter), a drainage bag, and a monitor. The main steps of the surgical procedure are listed below (see Figure 1):

1. Incision

2. Burr a hole into the skull to create an opening for the catheter

3. Insert the catheter and drain excess fluid from the ventricle

Deliverables

Technical Approach

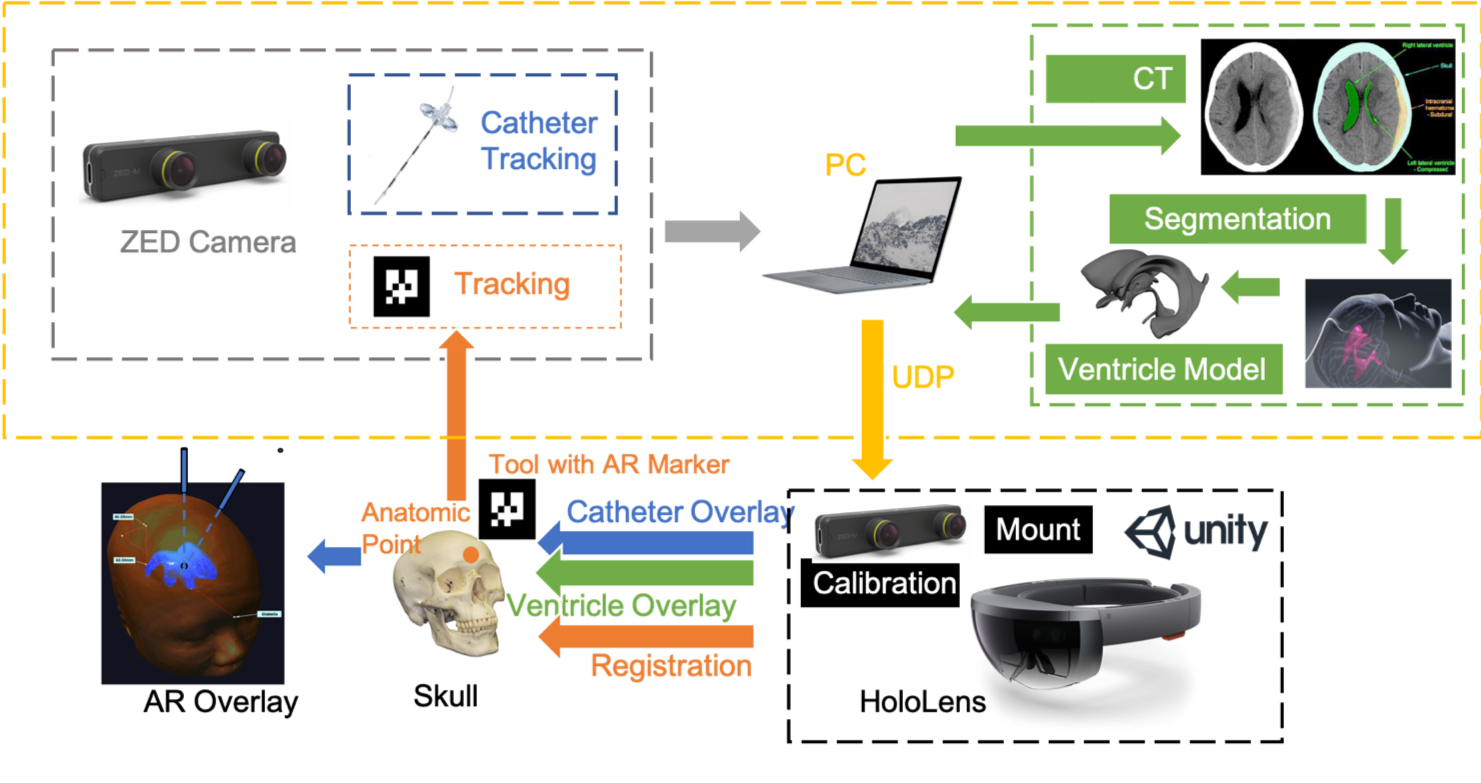

The workflow of our navigation system is shown in Fig. 3, and the following steps describe it in detail.

a. A ZED Mini camera mounted on the HoloLens tracks the skull (via an AR marker) and the catheter

b. Register the CT to the patient by touching anatomical points (glabella)

c. Create a ventricle model by segmenting the CT on a PC, and import the model into Unity

d. Unity generates an AR overlay of the ventricle and displays it on the HoloLens

i. Target accuracy: within 3 mm

e. Unity generates the entry point, selected by touching, and overlays it on the HoloLens

f. Display the catheter guide line on the HoloLens: a virtual line from the centroid of the ventricle to the entry point, with the option to adjust the entry point

g. Catheter tracking results, including insertion depth and angle, are computed on the PC and sent to Unity over UDP

h. Unity receives the catheter tracking results from the PC and overlays the information on the HoloLens
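Steps g and h pass the catheter tracking results from the PC to Unity over UDP. The page does not specify a message layout, so the sketch below assumes a hypothetical fixed-size binary packet: a frame counter followed by the tip position (x, y, z, in mm), the insertion depth (mm), and the insertion angle (degrees), all little-endian. The field order, the `CatheterResult` type, and the address/port are illustrative assumptions; whatever layout is chosen, the Unity receiver has to decode exactly the same bytes.

```python
import socket
import struct
from dataclasses import dataclass

# Hypothetical packet layout (not taken from the project):
# little-endian uint32 frame counter followed by five float32 fields.
PACKET_FORMAT = "<I5f"
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)  # 4 + 5 * 4 = 24 bytes


@dataclass
class CatheterResult:
    frame: int
    tip_x: float      # tip position in the tracking frame, mm
    tip_y: float
    tip_z: float
    depth_mm: float   # insertion depth, mm
    angle_deg: float  # insertion angle, degrees


def encode(result: CatheterResult) -> bytes:
    """Pack one tracking result into a fixed-size UDP payload."""
    return struct.pack(PACKET_FORMAT, result.frame, result.tip_x, result.tip_y,
                       result.tip_z, result.depth_mm, result.angle_deg)


def decode(payload: bytes) -> CatheterResult:
    """Inverse of encode(); the receiving side must implement the same parsing."""
    if len(payload) != PACKET_SIZE:
        raise ValueError(f"expected {PACKET_SIZE} bytes, got {len(payload)}")
    return CatheterResult(*struct.unpack(PACKET_FORMAT, payload))


def send_result(sock: socket.socket, result: CatheterResult,
                address=("127.0.0.1", 5005)) -> None:
    """Send one result as a single datagram. The address is a placeholder."""
    sock.sendto(encode(result), address)
```

A sender would open `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` once and call `send_result` every frame. UDP suits this use because a lost packet is simply superseded by the next frame, and the frame counter lets the receiver drop stale or out-of-order packets.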

CAD Design

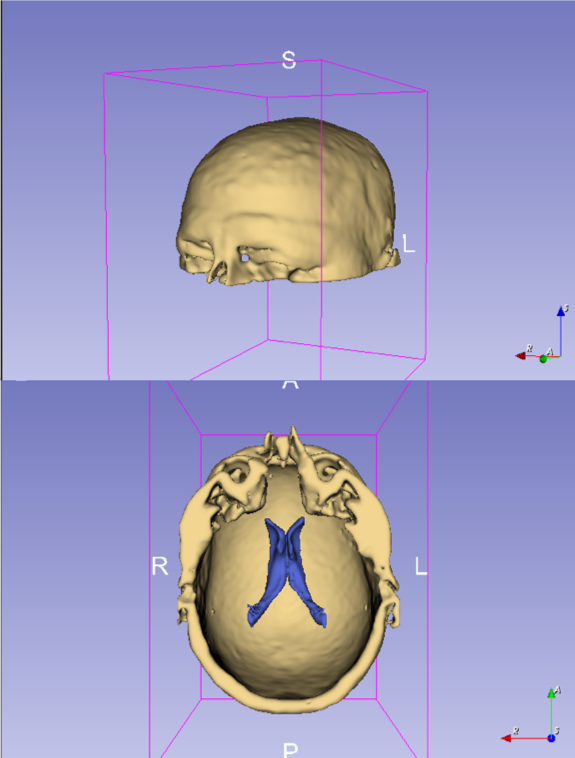

Ventricle Segmentation

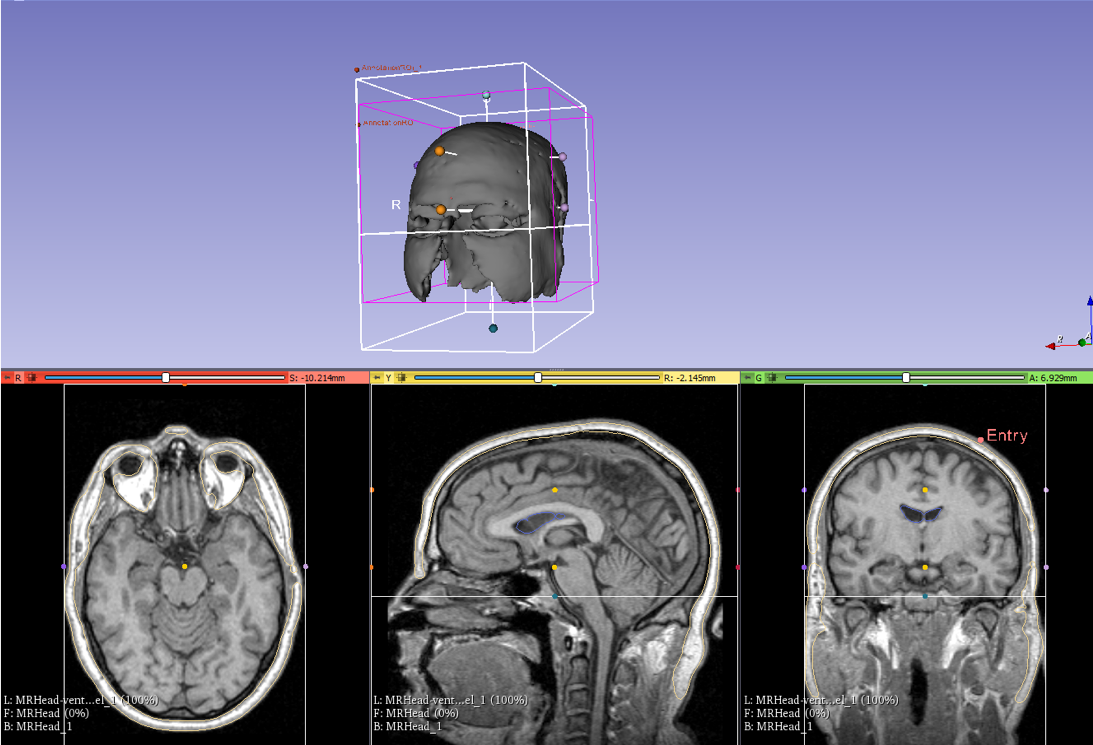

- Ventricle and skull segmentation in 3D Slicer

Thresholding

Select target object

Close holes

Smooth and mesh

- Manually select the anatomical point and the entry point to obtain their relative position
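The segmentation itself is done interactively in 3D Slicer. To illustrate what the thresholding, target-selection, and hole-closing steps do to the volume, here is a minimal NumPy sketch on a voxel grid. It assumes that "select target object" means keeping the largest 6-connected component inside the threshold window, and it omits smoothing and meshing; the threshold values in any call are placeholders. The flood fill is pure Python and only practical on small volumes; `scipy.ndimage.label` and `scipy.ndimage.binary_fill_holes` do the same jobs efficiently on real CT data.

```python
from collections import deque

import numpy as np

# 6-connectivity offsets in a 3D voxel grid.
NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def _flood(mask: np.ndarray, seed: tuple, visited: np.ndarray) -> list:
    """Breadth-first flood fill over True voxels of `mask`, starting at `seed`.

    Marks reached voxels in `visited` and returns them as a list."""
    queue, component = deque([seed]), []
    visited[seed] = True
    while queue:
        z, y, x = queue.popleft()
        component.append((z, y, x))
        for dz, dy, dx in NEIGHBORS:
            n = (z + dz, y + dy, x + dx)
            inside = all(0 <= n[i] < mask.shape[i] for i in range(3))
            if inside and mask[n] and not visited[n]:
                visited[n] = True
                queue.append(n)
    return component


def threshold(volume: np.ndarray, low: float, high: float) -> np.ndarray:
    """Thresholding: keep voxels whose intensity lies in [low, high]."""
    return (volume >= low) & (volume <= high)


def largest_component(mask: np.ndarray) -> np.ndarray:
    """Select target object (assumed): keep only the largest connected object."""
    visited = np.zeros_like(mask, dtype=bool)
    best = []
    for seed in zip(*np.nonzero(mask)):
        if not visited[seed]:
            comp = _flood(mask, seed, visited)
            if len(comp) > len(best):
                best = comp
    out = np.zeros_like(mask, dtype=bool)
    for voxel in best:
        out[voxel] = True
    return out


def fill_holes(mask: np.ndarray) -> np.ndarray:
    """Close holes: background not reachable from the volume border is a hole."""
    background = ~mask
    outside = np.zeros_like(mask, dtype=bool)
    for seed in zip(*np.nonzero(background)):
        on_border = any(seed[i] in (0, mask.shape[i] - 1) for i in range(3))
        if on_border and not outside[seed]:
            _flood(background, seed, outside)
    return mask | (background & ~outside)
```

Chaining `fill_holes(largest_component(threshold(ct, low, high)))` produces a solid binary object that a marching-cubes step could then turn into the mesh imported into Unity.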

Test

- 3D print the top half of the segmented head

- Print two parts: skull and ventricle

- Skull

- Ventricle

- Test

Workflow

Software Design


Registration
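Step b of the workflow registers the CT to the patient by touching anatomical points. The page does not say how the touched points are converted into a transform. One standard option, shown here only as an assumption, is paired-point rigid registration using the SVD method of Arun et al. It needs at least three non-collinear landmark pairs: positions picked in the CT, and the same landmarks touched with a tracked pointer. The function names are hypothetical.

```python
import numpy as np


def rigid_register(ct_pts: np.ndarray, patient_pts: np.ndarray):
    """Least-squares rigid transform (R, t) such that patient ≈ R @ ct + t.

    ct_pts, patient_pts: (N, 3) arrays of corresponding landmarks, N >= 3,
    not all collinear. SVD solution with a guard against reflections.
    """
    ct_c = ct_pts.mean(axis=0)
    pa_c = patient_pts.mean(axis=0)
    H = (ct_pts - ct_c).T @ (patient_pts - pa_c)   # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if the raw solution would be a reflection (det = -1).
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = pa_c - R @ ct_c
    return R, t


def fiducial_registration_error(R, t, ct_pts, patient_pts) -> float:
    """RMS landmark residual after registration (same units as the inputs)."""
    residuals = (ct_pts @ R.T + t) - patient_pts
    return float(np.sqrt((residuals ** 2).sum(axis=1).mean()))
```

The fiducial registration error is only a sanity check: a small residual on the touched landmarks does not by itself guarantee the 3 mm target accuracy at the ventricle.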

Catheter Tracking

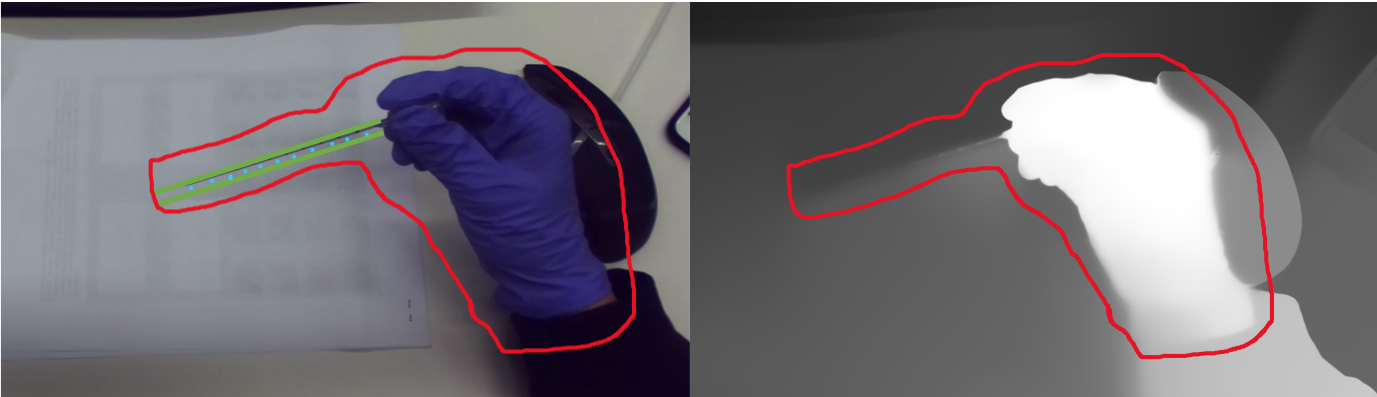

1. Use the RGB image to locate the hand position (the user wears purple gloves) as seed points

2. Mask the region around the seed points that has similar depth

3. Apply the Hough transform to find the catheter

4. Threshold to extract the tip and the scale lines

5. Calculate the tip position and angle of the catheter
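Steps 2, 3, and 5 of the tracking pipeline can be illustrated with plain NumPy. The sketch assumes a depth image aligned to the RGB image and expressed in millimetres, and a seed pixel already found from the purple glove (step 1). The depth tolerance and search window are placeholder values, not the project's settings. For brevity, the Hough step votes over whatever binary pixels it is given (for example, edge pixels inside the depth mask); choosing those pixels is left out. The angle is in the image plane only, and recovering the 3D angle would additionally require depth. The project's own implementation, possibly using OpenCV's `cv2.HoughLines`, may differ.

```python
import numpy as np


def depth_mask(depth: np.ndarray, seed: tuple, tol_mm: float = 30.0,
               radius_px: int = 80) -> np.ndarray:
    """Step 2: pixels near the seed whose depth is within tol_mm of the seed's.

    tol_mm and radius_px are illustrative placeholders."""
    rows, cols = np.indices(depth.shape)
    r0, c0 = seed
    near = (np.abs(rows - r0) <= radius_px) & (np.abs(cols - c0) <= radius_px)
    similar = np.abs(depth - depth[r0, c0]) <= tol_mm
    return near & similar & np.isfinite(depth)


def hough_strongest_line(mask: np.ndarray, n_theta: int = 180):
    """Step 3: vote in (rho, theta) space for x*cos(theta) + y*sin(theta) = rho.

    Returns (rho, theta) of the accumulator peak with theta in [0, pi),
    or None if the mask is empty."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    thetas = np.arange(n_theta) * np.pi / n_theta
    diag = int(np.ceil(np.hypot(*mask.shape)))  # upper bound on |rho|
    # rho for every (theta, pixel) pair; one row per theta.
    rhos = np.round(np.outer(np.cos(thetas), xs)
                    + np.outer(np.sin(thetas), ys)).astype(int)
    theta_idx = np.broadcast_to(np.arange(n_theta)[:, None], rhos.shape)
    acc = np.zeros((n_theta, 2 * diag + 1), dtype=int)
    np.add.at(acc, (theta_idx, rhos + diag), 1)  # shift so indices are >= 0
    t, r = np.unravel_index(np.argmax(acc), acc.shape)
    return int(r) - diag, float(thetas[t])


def line_angle_deg(theta: float) -> float:
    """Step 5 (in-plane only): line direction relative to the image x-axis.

    The line normal is (cos theta, sin theta), so its direction is
    (-sin theta, cos theta). Image y points down; result is in [0, 180)."""
    dx, dy = -np.sin(theta), np.cos(theta)
    return float(np.degrees(np.arctan2(dy, dx)) % 180.0)
```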

Overlay

Without MRI Mode

Overlay a 5 cm sphere on the origin of the constructed frame;

Cast a ray from the origin at a 45° angle to the x’o’y’ plane.
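The only geometric constraint in the Without-MRI mode is that the ray makes 45° with the x’o’y’ plane of the constructed frame. The sketch below computes such a direction. The azimuth (the rotation about z’ measured from x’) and the choice of the +z’ side are assumptions, since the page fixes only the elevation. The function names are hypothetical.

```python
import numpy as np


def ray_direction(elevation_deg: float = 45.0, azimuth_deg: float = 0.0) -> np.ndarray:
    """Unit vector in the constructed frame (x', y', z') that makes
    `elevation_deg` with the x'o'y' plane, on the +z' side.

    azimuth_deg is an assumption: the page specifies only the 45° elevation."""
    el, az = np.radians(elevation_deg), np.radians(azimuth_deg)
    return np.array([np.cos(el) * np.cos(az),
                     np.cos(el) * np.sin(az),
                     np.sin(el)])


def point_on_ray(origin: np.ndarray, direction: np.ndarray, distance: float) -> np.ndarray:
    """Point `distance` along the ray, in the same units as `origin`."""
    return origin + distance * direction


def elevation_deg(direction: np.ndarray) -> float:
    """Angle between a vector and the x'o'y' plane: arcsin of its z' component."""
    d = direction / np.linalg.norm(direction)
    return float(np.degrees(np.arcsin(np.clip(d[2], -1.0, 1.0))))
```

`elevation_deg` provides a quick check that a direction read back from the rendered overlay still satisfies the 45° constraint.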

With MRI Mode

Overlay a 3D ventricle model at the pose T_ventricleCenter_anatomical(x,y,z,R,P,Y) obtained from MRI;

Also overlay a 3 cm sphere on P_entry_anatomical(x,y,z);

Cast a line from the center of the ventricle to the entry point.
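In the With-MRI mode, the ventricle pose T_ventricleCenter_anatomical is given as (x, y, z, R, P, Y), and the guide line runs from its translation part to P_entry_anatomical. The sketch below converts the pose to a 4x4 matrix and derives the guide line. It also computes the angular deviation between the planned line and a tracked catheter direction, which could feed the overlay in step h. The rotation convention (R = Rz(Y)·Ry(P)·Rx(R), angles in degrees) is an assumption. Unity uses a left-handed frame and applies Euler angles in a different order (z, x, y), so a conversion would be needed before these values are used there.

```python
import numpy as np


def pose_to_matrix(x, y, z, roll_deg, pitch_deg, yaw_deg) -> np.ndarray:
    """4x4 homogeneous transform from (x, y, z, R, P, Y).

    Assumed convention: rotation = Rz(yaw) @ Ry(pitch) @ Rx(roll), in degrees."""
    r, p, yw = np.radians([roll_deg, pitch_deg, yaw_deg])
    Rx = np.array([[1, 0, 0], [0, np.cos(r), -np.sin(r)], [0, np.sin(r), np.cos(r)]])
    Ry = np.array([[np.cos(p), 0, np.sin(p)], [0, 1, 0], [-np.sin(p), 0, np.cos(p)]])
    Rz = np.array([[np.cos(yw), -np.sin(yw), 0], [np.sin(yw), np.cos(yw), 0], [0, 0, 1]])
    T = np.eye(4)
    T[:3, :3] = Rz @ Ry @ Rx
    T[:3, 3] = [x, y, z]
    return T


def guide_line(T_ventricle_anat: np.ndarray, p_entry_anat):
    """Guide line between the entry point and the ventricle center (anatomical frame).

    Returns (entry, center, unit insertion direction entry -> center, length)."""
    center = T_ventricle_anat[:3, 3]
    entry = np.asarray(p_entry_anat, dtype=float)
    vec = center - entry
    length = float(np.linalg.norm(vec))
    return entry, center, vec / length, length


def angular_deviation_deg(planned_dir, catheter_dir) -> float:
    """Angle between the planned guide line and a tracked catheter direction."""
    a = np.asarray(planned_dir, dtype=float)
    b = np.asarray(catheter_dir, dtype=float)
    cos_angle = (a @ b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))
```

The guide line's length is the planned distance from the entry point to the ventricle center. It can therefore be compared directly with the insertion depth reported by catheter tracking.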

Dependencies

Schedule

Milestones and Status

Segmentation and 3D reconstruction of Skull and Ventricle

Planned Date: 3/5

Expected Date: 3/5

Status: Completed

Navigation System Without-MRI Mode

Planned Date: 4/2

Expected Date: 4/5

Status: Completed

Navigation System With-MRI Mode

Planned Date: 4/9

Expected Date: 4/10

Status: Completed

ZED Camera Calibration

Planned Date: 4/10

Expected Date: 4/13

Status: Completed

Catheter Tracking

Planned Date: 4/30

Expected Date: 5/3

Status: In progress

Evaluation of Performance with Skull Phantom

Planned Date: 5/6

Expected Date: 5/6

Status: In progress

Final Report and Poster

Planned Date: 5/8

Expected Date: 5/8

Status: In progress

Reports and presentations

Project Plan

Project Background Reading

Project Checkpoint

Paper Seminar Presentations

Project Teaser

Project Final Presentation

Project Final Report

Project Bibliography

Azimi, E., Doswell, J., Kazanzides, P.: Augmented reality goggles with an integrated tracking system for navigation in neurosurgery. In: Virtual Reality Short Papers and Posters (VRW), pp. 123–124. IEEE (2012)

Azimi, E., et al.: Can mixed-reality improve the training of medical procedures? In: IEEE Engineering in Medicine and Biology Conference (EMBC), pp. 112–116 (July 2018)

Sadda, P., Azimi, E., Jallo, G., Doswell, J., Kazanzides, P.: Surgical navigation with a head-mounted tracking system and display. Stud. Health Technol. Inform. 184, 363–369 (2012)

Chen, L., Day, T., Tang, W., John, N.W.: Recent developments and future challenges in medical mixed reality. In: IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 123–135 (2017)

Qian, L., Azimi, E., Kazanzides, P., Navab, N.: Comprehensive tracker based display calibration for holographic optical see-through head-mounted display. arXiv preprint arXiv:1703.05834 (2017)

Sauer, F., Khamene, A., Bascle, B., Rubino, G.J.: A head-mounted display system for augmented reality image guidance: towards clinical evaluation for iMRI-guided neurosurgery. In: Niessen, W.J., Viergever, M.A. (eds.) MICCAI 2001. LNCS, vol. 2208, pp. 707–716. Springer, Heidelberg (2001)

Azimi, E., et al.: Interactive training and operation ecosystem for surgical tasks in mixed reality. In: OR 2.0 Context-Aware Operating Theaters, Computer Assisted Robotic Endoscopy, Clinical Image-Based Procedures, and Skin Image Analysis, pp. 20–29. Springer, Cham (2018)

External Link

Other Resources and Project Files